Hi there,

I used my old LGA 1150 hardware to build an XPEnology box.

Info:

Bootloader: Jun's 1.04

Model name: DS918+

CPU: Intel Xeon 1265L

Total physical memory: 16 GB

Old DSM version: DSM 6.2.2-? (exact build unknown)

Current DSM version: DSM 6.2.3-25426 Update 3

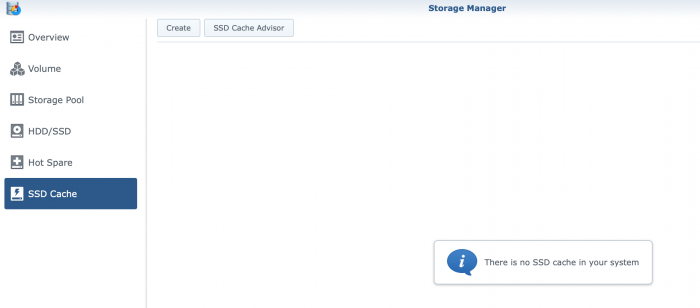

2 x NVMe drives as SSD cache (attached to the old volume 2)

2 x 14 TB WD White (the problematic old volume 2, md4)

2 x other HDDs (volume 1, md2)

I have configuration backup files (.dss).

One day the web interface stopped working. More precisely, the machine booted from the USB stick but then showed no further signs of life: I could not connect to the host, although the network adapter did work in, for example, reinstall mode.

So I created a new USB stick with loader 1.04, as before, but this time I uploaded various DSM versions and tried migrations and reinstalls.

Eventually I found a version that works (6.2.3-25426). Unfortunately I don't know which version I had before (probably 6.2.2-x).

To get there, I did a clean XPEnology install on a blank SATA SSD, but unfortunately I had earlier deleted the Synology partitions from both main WD drives that hold my data.

The situation is further complicated by the fact that the SSD cache on the NVMe drives was attached to this storage pool (volume 2).

So I'm at a point where I can't restore the volume on my two 14 TB WD drives, or the SSD cache that served it.

Below are the commands I ran on the Synology box itself (before that I had tried from an Ubuntu live CD) to save the day, but it's overwhelming me.


```
# fdisk -l
...
Disk /dev/nvme0n1: 238.5 GiB, 256060514304 bytes, 500118192 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0xf84c4eb1

Device Boot Start End Sectors Size Id Type
/dev/nvme0n1p1 2048 500103449 500101402 238.5G fd Linux raid autodetect

Disk /dev/nvme1n1: 238.5 GiB, 256060514304 bytes, 500118192 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x08001b6a

Device Boot Start End Sectors Size Id Type
/dev/nvme1n1p1 2048 500103449 500101402 238.5G fd Linux raid autodetect

Disk /dev/sdc: 12.8 TiB, 14000519643136 bytes, 27344764928 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 9D38F749-BCA5-45A5-AB33-5173B5D6A0BF

Device Start End Sectors Size Type
/dev/sdc2 4982528 9176831 4194304 2G Linux RAID
/dev/sdc3 9437184 27344560127 27335122944 12.7T Linux RAID

Disk /dev/sdd: 12.8 TiB, 14000519643136 bytes, 27344764928 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 2B1A4AEB-7EE1-406A-A267-ABC366CC321D

Device Start End Sectors Size Type
/dev/sdd2 4982528 9176831 4194304 2G Linux RAID
/dev/sdd3 9437184 27344560127 27335122944 12.7T Linux RAID

Disk /dev/sde: 931.5 GiB, 1000204886016 bytes, 1953525168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: 3DB76358-0537-4C2E-BE55-05361BC77A7F

Device Start End Sectors Size Type
/dev/sde1 2048 1085439 1083392 529M Windows recovery environment
/dev/sde2 1085440 1290239 204800 100M EFI System
/dev/sde3 1290240 1323007 32768 16M Microsoft reserved
/dev/sde4 1323008 1953523711 1952200704 930.9G Microsoft basic data

Disk /dev/sdf: 477 GiB, 512110190592 bytes, 1000215216 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0xa0ae0333

Device Boot Start End Sectors Size Id Type
/dev/sdf1 2048 4982527 4980480 2.4G fd Linux raid autodetect
/dev/sdf2 4982528 9176831 4194304 2G fd Linux raid autodetect
/dev/sdf3 9437184 1000010399 990573216 472.4G fd Linux raid autodetect

Disk /dev/md0: 2.4 GiB, 2549940224 bytes, 4980352 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/md126: 2 GiB, 2147418112 bytes, 4194176 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes

GPT PMBR size mismatch (102399 != 61439999) will be corrected by w(rite).
Disk /dev/synoboot: 29.3 GiB, 31457280000 bytes, 61440000 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: 52173969-41B8-4EDB-9190-B1CE75FCFA11

Device Start End Sectors Size Type
/dev/synoboot1 2048 32767 30720 15M EFI System
/dev/synoboot2 32768 94207 61440 30M Linux filesystem
/dev/synoboot3 94208 102366 8159 4M BIOS boot

Disk /dev/md1: 2 GiB, 2147418112 bytes, 4194176 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/zram0: 2.3 GiB, 2506096640 bytes, 611840 sectors
Units: sectors of 1 * 4096 = 4096 bytes
Sector size (logical/physical): 4096 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/zram1: 2.3 GiB, 2506096640 bytes, 611840 sectors
Units: sectors of 1 * 4096 = 4096 bytes
Sector size (logical/physical): 4096 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/zram2: 2.3 GiB, 2506096640 bytes, 611840 sectors
Units: sectors of 1 * 4096 = 4096 bytes
Sector size (logical/physical): 4096 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/zram3: 2.3 GiB, 2506096640 bytes, 611840 sectors
Units: sectors of 1 * 4096 = 4096 bytes
Sector size (logical/physical): 4096 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk /dev/md2: 472.3 GiB, 507172421632 bytes, 990571136 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
```
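In this listing, sdc and sdd (the two 14 TB WD drives) still have partitions 2 and 3 at the same start sectors as on sdf, but partition 1 is missing. That fits the deleted Synology system partition. The minimal bash sketch below uses only sector numbers copied from the fdisk output. It checks that the empty slot before sdc2 is exactly the size of sdf1, and that the data partition starts at the same sector in both layouts. It assumes that sdf, freshly set up by DSM, shows the layout the WD drives originally had, with partition 1 starting at sector 2048.

```shell
#!/usr/bin/env bash
# Sector numbers copied from the fdisk -l output above (512-byte sectors).
sdf1_start=2048       # assumption: the deleted partition 1 also started here
sdf1_end=4982527      # sdf1: the DSM system partition that still exists on sdf
sdc2_start=4982528    # first partition left on sdc (sdd is identical)
sdf3_start=9437184    # data partition on sdf
sdc3_start=9437184    # data partition on sdc/sdd (the md4 members)

gap_sectors=$(( sdc2_start - sdf1_start ))       # empty slot before sdc2
sdf1_sectors=$(( sdf1_end - sdf1_start + 1 ))    # same as fdisk's "Sectors" column

echo "empty slot before sdc2: $gap_sectors sectors"
echo "size of sdf1:           $sdf1_sectors sectors"
if [ "$gap_sectors" -eq "$sdf1_sectors" ]; then
  echo "the missing partition 1 would fit exactly where sdf1 sits"
fi
if [ "$sdc3_start" -eq "$sdf3_start" ]; then
  echo "data partition starts at the same sector as on sdf"
fi
```

If both checks pass, deleting partition 1 should not have shifted the data partition that md4 is built on.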

```
# cat /etc/fstab
none /proc proc defaults 0 0
/dev/root / ext4 defaults 1 1
/dev/md126 /volumeX26 btrfs auto_reclaim_space,synoacl,ssd,relatime 0 0
/dev/md2 /volume1 btrfs auto_reclaim_space,synoacl,ssd,relatime 0 0
```

```
# mdadm -Asf && vgchange -ay
# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
md127 : active raid1 nvme0n1p1[0] nvme1n1p1[1]
      250049664 blocks super 1.2 [2/2] [UU]

md4 : active raid1 sdc3[0] sdd3[1]
      13667560448 blocks super 1.2 [2/2] [UU]

md2 : active raid1 sdf3[0]
      495285568 blocks super 1.2 [1/1] [U]

md1 : active raid1 sdf2[0]
      2097088 blocks [16/1] [U_______________]

md126 : active raid1 sdc2[1] sdd2[0]
      2097088 blocks [16/2] [UU______________]

md0 : active raid1 sdf1[0]
      2490176 blocks [16/1] [U_______________]

unused devices: <none>
```

```
# mdadm --examine /dev/sdd2
/dev/sdd2:
Magic : a92b4efc
Version : 0.90.00
UUID : a626abe1:a5f8fa38:3017a5a8:c86610be
Creation Time : Tue Mar 16 15:42:21 2021
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 16
Total Devices : 2
Preferred Minor : 126

Update Time : Fri Mar 19 12:21:09 2021
State : clean
Active Devices : 2
Working Devices : 2
Failed Devices : 14
Spare Devices : 0
Checksum : ae8dfb7c - correct
Events : 24

Number Major Minor RaidDevice State
this 0 8 50 0 active sync /dev/sdd2

0 0 8 50 0 active sync /dev/sdd2
1 1 8 34 1 active sync /dev/sdc2
2 2 0 0 2 faulty removed
3 3 0 0 3 faulty removed
4 4 0 0 4 faulty removed
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed
12 12 0 0 12 faulty removed
13 13 0 0 13 faulty removed
14 14 0 0 14 faulty removed
15 15 0 0 15 faulty removed
```

```
# mdadm --examine /dev/sdd3
/dev/sdd3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 2caf45ac:9d06f4a8:e3fccf52:7f4baff3
Name : synology:4 (local to host synology)
Creation Time : Wed May 27 23:03:08 2020
Raid Level : raid1
Raid Devices : 2

Avail Dev Size : 27335120896 (13034.40 GiB 13995.58 GB)
Array Size : 13667560448 (13034.40 GiB 13995.58 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 1cf91208:bda2eb9f:128854a8:d6d00822

Update Time : Mon Mar 22 13:19:47 2021
Checksum : 199978ee - correct
Events : 157

Device Role : Active device 1
Array State : AA ('A' == active, '.' == missing, 'R' == replacing)
```
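The partition tables on sdc and sdd were edited, so it is worth confirming that the RAID layer itself is intact. The figures above agree with each other. sdd3 is 27335122944 sectors long and mdadm reports a 2048-sector data offset. 27335122944 - 2048 = 27335120896 sectors, which is the "Avail Dev Size" and comes to exactly the 13995581898752-byte capacity that dmesg reports for md4. A small bash sketch of that arithmetic, using only numbers copied from this post:

```shell
#!/usr/bin/env bash
# Cross-check md4's geometry using only numbers copied from the output above.
part_sectors=27335122944   # sdd3 size in 512-byte sectors (fdisk -l)
data_offset=2048           # "Data Offset" in sectors (mdadm --examine /dev/sdd3)
array_kib=13667560448      # "Array Size" in 1 KiB blocks (mdadm --examine, /proc/mdstat)
md4_bytes=13995581898752   # capacity reported for md4 in dmesg

avail=$(( part_sectors - data_offset ))
echo "usable sectors: $avail"                 # compare with "Avail Dev Size"
echo "usable bytes:   $(( avail * 512 ))"
echo "array bytes:    $(( array_kib * 1024 ))"
if (( avail * 512 == md4_bytes && array_kib * 1024 == md4_bytes )); then
  echo "md4 geometry is consistent: offsets and sizes line up"
fi
```

If this holds, the problem most likely sits above the md layer (LVM or btrfs). That is in line with md4 assembling cleanly as [2/2] [UU].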

```
# pvs /dev/md4
PV VG Fmt Attr PSize PFree
/dev/md4 vg2 lvm2 a-- 12.73t 0
```

```
# lvdisplay vg2
--- Logical volume ---
LV Path /dev/vg2/syno_vg_reserved_area
LV Name syno_vg_reserved_area
VG Name vg2
LV UUID rWqwDz-OK0Q-PWvV-ltzA-GiW7-munV-krxqGp
LV Write Access read/write
LV Creation host, time ,
LV Status NOT available
LV Size 12.00 MiB
Current LE 3
Segments 1
Allocation inherit
Read ahead sectors auto

--- Logical volume ---
LV Path /dev/vg2/volume_2
LV Name volume_2
VG Name vg2
LV UUID a4oUXf-f8dX-qpGO-3H7q-VT12-2x2n-KK2hah
LV Write Access read/write
LV Creation host, time ,
LV Status NOT available
LV Size 12.73 TiB
Current LE 3336803
Segments 1
Allocation inherit
Read ahead sectors auto
```
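lvdisplay does not print the extent size, but it can be inferred from the reserved area: 3 extents = 12 MiB, so 4 MiB per extent. This is an inference, not a value read from LVM; `vgdisplay vg2` would show the "PE Size" directly. With that, volume_2's 3336803 extents come to 12.73 TiB, and the sketch below also computes how much of md4 lies outside the two LVs:

```shell
#!/usr/bin/env bash
# Figures from lvdisplay vg2 above; the extent size is inferred, not read from LVM.
reserved_le=3              # syno_vg_reserved_area "Current LE"
reserved_mib=12            # syno_vg_reserved_area "LV Size" (12.00 MiB)
volume_le=3336803          # volume_2 "Current LE"
md4_bytes=13995581898752   # md4 capacity from dmesg

pe_mib=$(( reserved_mib / reserved_le ))                 # inferred extent size in MiB
volume_mib=$(( volume_le * pe_mib ))
lv_total_bytes=$(( (reserved_le + volume_le) * pe_mib * 1024 * 1024 ))

echo "extent size: $pe_mib MiB"
awk -v m="$volume_mib" 'BEGIN { printf "volume_2: %d MiB = %.2f TiB\n", m, m / 1048576 }'
echo "bytes of md4 outside both LVs: $(( md4_bytes - lv_total_bytes ))"
```

On these numbers only 3 MiB of md4 lies outside the two LVs, which is in line with LVM's own metadata and alignment. The LVM layer therefore appears to span the whole array as expected.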

```
# vgchange vg2 -ay
2 logical volume(s) in volume group "vg2" now active
```

```
# btrfs check /dev/md4
No valid Btrfs found on /dev/md4
Couldn't open file system
```

```
# btrfs check --repair /dev/md4
enabling repair mode
No valid Btrfs found on /dev/md4
Couldn't open file system
```
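Both `btrfs check` runs targeted /dev/md4. The pvs output shows that md4 is an LVM physical volume in vg2, and dmesg shows the btrfs label on /dev/vg2/volume_2. So "No valid Btrfs found on /dev/md4" is expected, and `--repair` never got as far as opening a filesystem. A more cautious next step would aim read-only tools at the logical volume instead.

The bash sketch below is only an outline under assumptions:

- It defaults to a dry run that prints the commands instead of running them.
- The mount point and restore target are hypothetical placeholders.
- `--readonly` may be missing from older btrfs-progs builds, though checking is read-only by default anyway.
- Kernels before 4.6 spell the `usebackuproot` mount option as `recovery`.
- None of these steps is guaranteed to get past the "failed to read chunk root" error.

```shell
#!/usr/bin/env bash
# Read-only recovery outline for the btrfs filesystem inside LVM on md4.
# DRY_RUN=1 (default) only prints each command; DRY_RUN=0 actually runs them.
DEV=/dev/vg2/volume_2                  # logical volume from lvdisplay/dmesg above
MNT=${MNT:-/mnt/rescue}                # hypothetical empty mount point
TARGET=${TARGET:-/path/to/spare/disk}  # hypothetical destination with enough free space

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

run vgchange -ay vg2                 # activate the LVs on md4
run btrfs check --readonly "$DEV"    # diagnose only; no --repair at this stage
run mkdir -p "$MNT"
# Option 1: read-only mount using backup tree roots (kernels < 4.6: -o ro,recovery)
run mount -o ro,usebackuproot "$DEV" "$MNT"
# Option 2, if the mount failed: list what btrfs restore could copy out.
# btrfs restore refuses to run on a mounted filesystem and never writes to $DEV.
run btrfs restore -D -v "$DEV" "$TARGET"
```

With `DRY_RUN=0` the commands are executed. `btrfs restore -D` only lists what it would recover; dropping `-D` copies files to `$TARGET` without writing to the damaged volume.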

```
# mount /dev/vg2/volume_2 /volumeUSB1/usbshare/
mount: wrong fs type, bad option, bad superblock on /dev/vg2/volume_2,
missing codepage or helper program, or other error

In some cases useful info is found in syslog - try
dmesg | tail or so.
```

```
# dmesg
[262720.451048] md: md4 stopped.
[262720.452870] md: bind<sdd3>
[262720.453215] md: bind<sdc3>
[262720.453769] md/raid1:md4: active with 2 out of 2 mirrors
[262720.559160] md4: detected capacity change from 0 to 13995581898752
[262725.183325] md: md127 stopped.
[262725.183821] This is not a kind of scsi disk 259
[262725.183914] md: bind<nvme1n1p1>
[262725.183946] This is not a kind of scsi disk 259
[262725.184001] md: bind<nvme0n1p1>
[262725.184543] md/raid1:md127: active with 2 out of 2 mirrors
[262725.186441] md127: detected capacity change from 0 to 256050855936
[262899.858355] Bluetooth: Failed to add device to auto conn whitelist: status 0x0c
[262899.897356] Bluetooth: Failed to add device to auto conn whitelist: status 0x0c
[262940.055094] Bluetooth: Failed to add device to auto conn whitelist: status 0x0c
[265508.974939] BTRFS: device label 2020.05.28-22:09:26 v15284 devid 1 transid 1878908 /dev/vg2/volume_2
[265508.975622] BTRFS info (device dm-1): has skinny extents
[265508.989035] parent transid verify failed on 1731001909248 wanted 1761048 found 2052670
[265508.989398] parent transid verify failed on 1731001909248 wanted 1761048 found 2052670
[265509.014854] md/raid1:md4: syno_raid1_self_heal_set_and_submit_read_bio(1327): No suitable device for self healing retry read at round 4 at sector 174294296
[265509.029051] md/raid1:md4: syno_raid1_self_heal_set_and_submit_read_bio(1327): No suitable device for self healing retry read at round 4 at sector 174294288
[265509.043346] md/raid1:md4: syno_raid1_self_heal_set_and_submit_read_bio(1327): No suitable device for self healing retry read at round 4 at sector 174294280
[265509.057592] md/raid1:md4: syno_raid1_self_heal_set_and_submit_read_bio(1327): No suitable device for self healing retry read at round 4 at sector 174294272
[265509.071810] parent transid verify failed on 1731001909248 wanted 1761048 found 2052670
[265509.071832] BTRFS error (device dm-1): BTRFS: dm-1 failed to repair parent transid verify failure on 1731001909248, mirror = 1
[265509.093857] parent transid verify failed on 1731001909248 wanted 1761048 found 2052670
[265509.094154] parent transid verify failed on 1731001909248 wanted 1761048 found 2052670
[265509.097656] md/raid1:md4: syno_raid1_self_heal_set_and_submit_read_bio(1327): No suitable device for self healing retry read at round 4 at sector 174359832
[265509.111851] md/raid1:md4: syno_raid1_self_heal_set_and_submit_read_bio(1327): No suitable device for self healing retry read at round 4 at sector 174359824
[265509.126080] md/raid1:md4: syno_raid1_self_heal_set_and_submit_read_bio(1327): No suitable device for self healing retry read at round 4 at sector 174359816
[265509.140290] md/raid1:md4: syno_raid1_self_heal_set_and_submit_read_bio(1327): No suitable device for self healing retry read at round 4 at sector 174359808
[265509.154587] parent transid verify failed on 1731001909248 wanted 1761048 found 2052670
[265509.154590] BTRFS error (device dm-1): BTRFS: dm-1 failed to repair parent transid verify failure on 1731001909248, mirror = 2
[265509.168224] BTRFS error (device dm-1): failed to read chunk root
[265509.183152] BTRFS: open_ctree failed
```
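The key lines are the btrfs ones at the end. The minimal bash sketch below pulls the transaction IDs (generations) out of an excerpt of that log. The log text is pasted in for self-containment; on the NAS itself you could pipe real `dmesg` output through the same `sed` expressions instead.

```shell
#!/usr/bin/env bash
# Summarise the btrfs generation (transid) mismatch from the dmesg excerpt above.
log=$(cat <<'EOF'
[265508.974939] BTRFS: device label 2020.05.28-22:09:26 v15284 devid 1 transid 1878908 /dev/vg2/volume_2
[265508.989035] parent transid verify failed on 1731001909248 wanted 1761048 found 2052670
[265509.154587] parent transid verify failed on 1731001909248 wanted 1761048 found 2052670
[265509.168224] BTRFS error (device dm-1): failed to read chunk root
EOF
)

# Generation stored in the superblock: the "transid" on the device label line.
super=$(printf '%s\n' "$log" | sed -n 's/.*devid [0-9]* transid \([0-9]*\) .*/\1/p')

# Each distinct "verify failed on BYTENR wanted X found Y" triple.
printf '%s\n' "$log" |
  sed -n 's/.*verify failed on \([0-9]*\) wanted \([0-9]*\) found \([0-9]*\).*/\1 \2 \3/p' |
  sort -u |
  while read -r bytenr wanted found; do
    echo "block $bytenr: parent expects $wanted, block has $found, superblock has $super"
    if [ "$found" -gt "$super" ]; then
      echo "  -> block is newer than the superblock"
    fi
  done
```

Here the tree block at logical address 1731001909248 carries generation 2052670. That is newer than what its parent expects (1761048) and also newer than the superblock's own generation (1878908).

One possible reading, and it is only an assumption, is that the HDDs hold a mix of older and newer metadata. That could happen if recent writes, including newer superblocks, were still sitting in the NVMe cache (md127) when the system failed. It would only apply if the cache was configured read-write.

The repeated md4 "No suitable device for self healing" lines suggest that both mirrors return the same content, so this does not look like a single failing disk.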

How can I restore or repair volume 2?

Edited by ZenedithPL
