Search the Community

Showing results for tags 'ssd'.

-

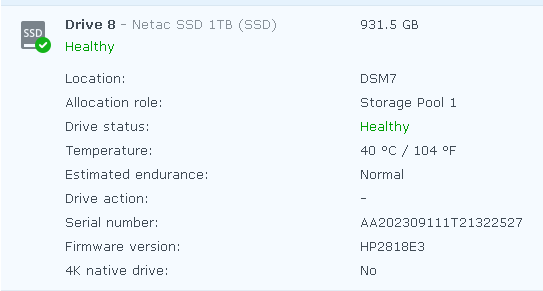

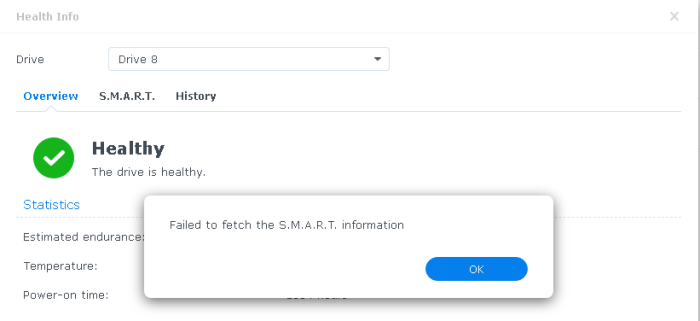

Hello guys. There is a problem with a new SSD on my system. Everything is fine until I press 'Health Info'; after that, a SMART quick test keeps running until a reload. Smartmon gives normal statistics. I even updated drivedb.h, but nothing changed. Any advice, suggestions, or tips? Smartmon output attached: smartmon.txt

-

How is a bootable USB stick used? Does it only specify the sector from which Xpenology should boot? If I have 5 disks (SATA ports 1, 2, 3, 4, 5), will the system always boot from SATA 1? Is the operating system stored on the flash drive? Does the entire Xpenology system load into RAM, or is it constantly being loaded? I want to achieve maximum performance for my Xpenology system and Docker installation. Is it worth using a SATA SSD instead of a USB stick? Or do I need a USB stick plus a SATA SSD in the SATA 1 port?

-

I'm interested in hardware compatibility. Specifically, does Xpenology see M.2 drives? I'm interested both in those that work over the SATA bus and in those on PCI-E. Is there NVMe compatibility? Also, how well does Xpenology get along with 1151v2 motherboards? I'm planning to build a new storage box on an Asus H310T...

- 68 replies
- Tagged with: hardware, compatibility (and 2 more)

-

I had set up a storage pool on a DS920+ with 7.2-64570. After updating to 7.2-64570 Update 3, the storage pool and volume have gone. I've tried the scripts to add NVMe as a storage pool, but nothing has recovered it. Could anyone give any guidance? It was a single NVMe drive.

    Diskstation:/$ ls /dev/nvme*
    /dev/nvme0  /dev/nvme0n1  /dev/nvme0n1p1  /dev/nvme0n1p2  /dev/nvme0n1p3  /dev/nvme1
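As a reading aid for the `ls /dev/nvme*` listing above, here is a minimal Python sketch that groups the device nodes by controller, namespace, and partition, following the usual Linux naming scheme (`nvmeX` controller, `nvmeXnY` namespace, `nvmeXnYpZ` partition). The function name `group_nvme_nodes` is hypothetical, and the sketch only sorts names; it says nothing about why the pool disappeared.

```python
import re
from collections import defaultdict

# /dev/nvme<ctrl>[n<namespace>[p<partition>]]
NODE = re.compile(r"^/dev/nvme(\d+)(?:n(\d+)(?:p(\d+))?)?$")

def group_nvme_nodes(paths):
    """Group NVMe device nodes as {controller: {namespace: [partitions]}}."""
    tree = defaultdict(lambda: defaultdict(list))
    for path in paths:
        match = NODE.match(path)
        if not match:
            continue  # not an NVMe node, ignore it
        ctrl, ns, part = match.groups()
        controller = tree[f"nvme{ctrl}"]          # registers the controller
        if ns is not None:
            namespace = controller[f"nvme{ctrl}n{ns}"]  # registers the namespace
            if part is not None:
                namespace.append(f"nvme{ctrl}n{ns}p{part}")
    return {ctrl: dict(namespaces) for ctrl, namespaces in tree.items()}

# Device nodes copied from the post.
nodes = ["/dev/nvme0", "/dev/nvme0n1", "/dev/nvme0n1p1",
         "/dev/nvme0n1p2", "/dev/nvme0n1p3", "/dev/nvme1"]
layout = group_nvme_nodes(nodes)
print(layout)
```

In this listing, `nvme0` carries one namespace with three partitions, while `nvme1` appears as a controller node with no namespace node listed next to it.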

-

SHR-1 and SSD cache with no data protection - how reliable?

SergeS posted a question in General Questions

I have a four-drive SHR-1 volume (with data protection, of course) and a one-drive SSD cache (read-only, with NO data protection, of course). I know that if any single drive of the SHR-1 volume crashes, I will not lose any data; that is what data protection is for. But what will happen if my read-only SSD cache drive crashes? Will I lose data on the volume? I believe not, but I would like to get some confirmation :-).

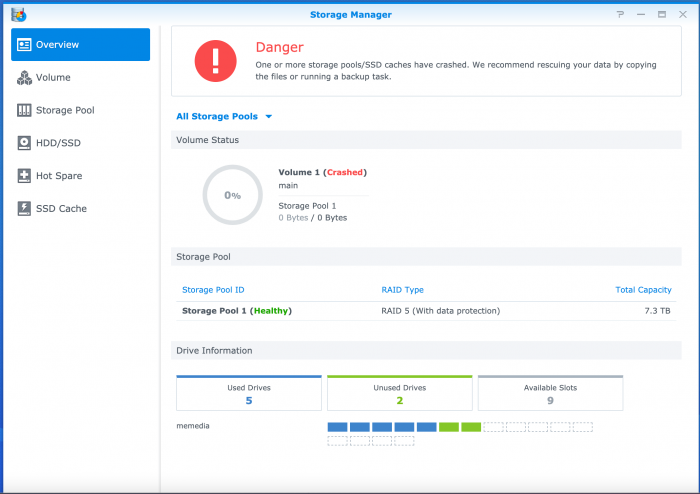

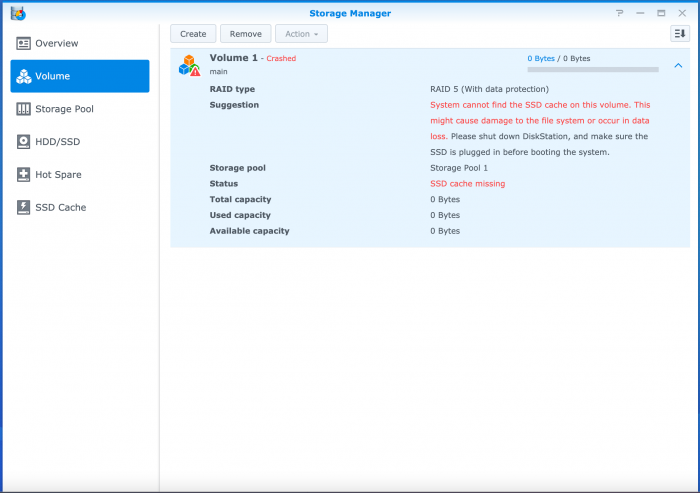

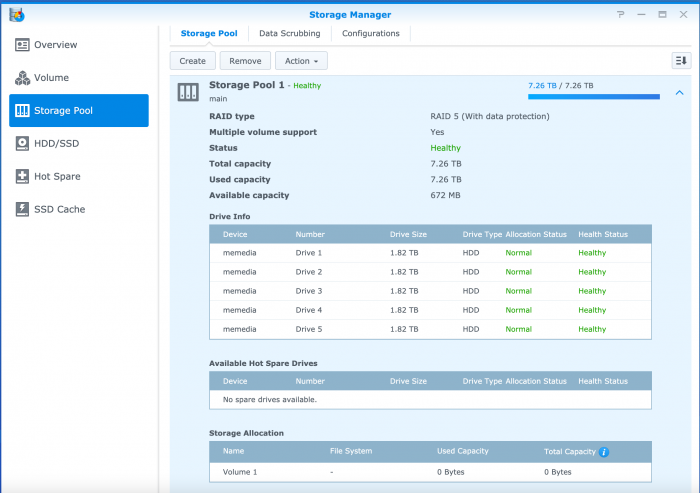

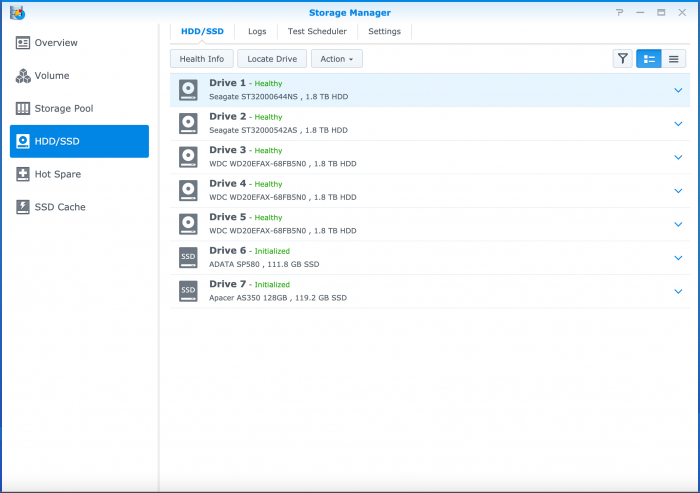

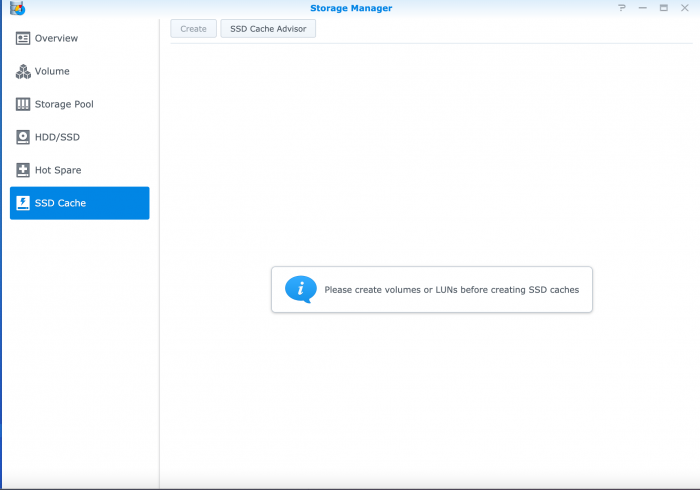

Hello. After the system was restored, the cache disappeared. I tried to switch to a new version, but it did not work... What can be done about it? The cache disks are present, but the system does not attach them...

    ash-4.3# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md2 : active raid5 sda3[5] sdd3[4] sde3[3] sdc3[2] sdb3[1]
          7794770176 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]

    md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3] sde2[4] sdf2[5] sdg2[6]
          2097088 blocks [16/7] [UUUUUUU_________]

    md0 : active raid1 sda1[0] sdb1[1] sdc1[2] sdd1[3] sde1[4] sdf1[5] sdg1[6]
          2490176 blocks [16/7] [UUUUUUU_________]

    ash-4.3# mdadm --detail /dev/md0
    /dev/md0:
            Version : 0.90
      Creation Time : Tue Jun 22 19:18:07 2021
         Raid Level : raid1
         Array Size : 2490176 (2.37 GiB 2.55 GB)
      Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
       Raid Devices : 16
      Total Devices : 7
    Preferred Minor : 0
        Persistence : Superblock is persistent

        Update Time : Fri Jun 25 18:59:43 2021
              State : clean, degraded
     Active Devices : 7
    Working Devices : 7
     Failed Devices : 0
      Spare Devices : 0

               UUID : bc535ace:18245e6d:3017a5a8:c86610be
             Events : 0.11337

        Number   Major   Minor   RaidDevice State
           0       8        1        0      active sync   /dev/sda1
           1       8       17        1      active sync   /dev/sdb1
           2       8       33        2      active sync   /dev/sdc1
           3       8       49        3      active sync   /dev/sdd1
           4       8       65        4      active sync   /dev/sde1
           5       8       81        5      active sync   /dev/sdf1
           6       8       97        6      active sync   /dev/sdg1
           -       0        0        7      removed
           -       0        0        8      removed
           -       0        0        9      removed
           -       0        0       10      removed
           -       0        0       11      removed
           -       0        0       12      removed
           -       0        0       13      removed
           -       0        0       14      removed
           -       0        0       15      removed

    ash-4.3# mdadm --detail /dev/md1
    /dev/md1:
            Version : 0.90
      Creation Time : Tue Jun 22 19:18:10 2021
         Raid Level : raid1
         Array Size : 2097088 (2047.94 MiB 2147.42 MB)
      Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
       Raid Devices : 16
      Total Devices : 7
    Preferred Minor : 1
        Persistence : Superblock is persistent

        Update Time : Fri Jun 25 17:50:04 2021
              State : clean, degraded
     Active Devices : 7
    Working Devices : 7
     Failed Devices : 0
      Spare Devices : 0

               UUID : 6b7352a0:2dd09c09:3017a5a8:c86610be
             Events : 0.17

        Number   Major   Minor   RaidDevice State
           0       8        2        0      active sync   /dev/sda2
           1       8       18        1      active sync   /dev/sdb2
           2       8       34        2      active sync   /dev/sdc2
           3       8       50        3      active sync   /dev/sdd2
           4       8       66        4      active sync   /dev/sde2
           5       8       82        5      active sync   /dev/sdf2
           6       8       98        6      active sync   /dev/sdg2
           -       0        0        7      removed
           -       0        0        8      removed
           -       0        0        9      removed
           -       0        0       10      removed
           -       0        0       11      removed
           -       0        0       12      removed
           -       0        0       13      removed
           -       0        0       14      removed
           -       0        0       15      removed

    ash-4.3# mdadm --detail /dev/md2
    /dev/md2:
            Version : 1.2
      Creation Time : Wed Dec 9 02:15:41 2020
         Raid Level : raid5
         Array Size : 7794770176 (7433.67 GiB 7981.84 GB)
      Used Dev Size : 1948692544 (1858.42 GiB 1995.46 GB)
       Raid Devices : 5
      Total Devices : 5
        Persistence : Superblock is persistent

        Update Time : Fri Jun 25 17:50:15 2021
              State : clean
     Active Devices : 5
    Working Devices : 5
     Failed Devices : 0
      Spare Devices : 0

             Layout : left-symmetric
         Chunk Size : 64K

               Name : memedia:2 (local to host memedia)
               UUID : ae85cc53:ecc1226b:0b6f21b5:b81b58c5
             Events : 34755

        Number   Major   Minor   RaidDevice State
           5       8        3        0      active sync   /dev/sda3
           1       8       19        1      active sync   /dev/sdb3
           2       8       35        2      active sync   /dev/sdc3
           3       8       67        3      active sync   /dev/sde3
           4       8       51        4      active sync   /dev/sdd3

- 4 replies
- Tagged with: ssd cache missing, cache (and 1 more)
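The `/proc/mdstat` output in the post above can be summarised programmatically. The following is a minimal Python sketch, not a recovery tool: the function names `parse_mdstat` and `disks_only_in_system_arrays` are hypothetical, and treating `md0` and `md1` as the system arrays is an assumption based on how they appear in this particular output.

```python
import re

def parse_mdstat(text):
    """Parse /proc/mdstat text into {array: {level, members, expected, active}}."""
    arrays = {}
    current = None
    for line in text.splitlines():
        head = re.match(r"^(md\d+)\s*:\s*active\s+(\S+)\s+(.*)$", line.strip())
        if head:
            name, level, rest = head.groups()
            current = {"level": level,
                       "members": re.findall(r"(\w+)\[\d+\]", rest),
                       "expected": None, "active": None}
            arrays[name] = current
            continue
        slots = re.search(r"\[(\d+)/(\d+)\]", line)  # e.g. [16/7]
        if slots and current is not None:
            current["expected"] = int(slots.group(1))
            current["active"] = int(slots.group(2))
    return arrays

def disks_only_in_system_arrays(arrays, system_arrays=("md0", "md1")):
    """Disks that are members of the system arrays but of no other array."""
    def disk(partition):
        return re.sub(r"\d+$", "", partition)  # sda3 -> sda (sdX names only)
    system = {disk(m) for n, a in arrays.items() if n in system_arrays
              for m in a["members"]}
    data = {disk(m) for n, a in arrays.items() if n not in system_arrays
            for m in a["members"]}
    return sorted(system - data)

# Excerpt copied from the post.
SAMPLE = """\
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
md2 : active raid5 sda3[5] sdd3[4] sde3[3] sdc3[2] sdb3[1]
      7794770176 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3] sde2[4] sdf2[5] sdg2[6]
      2097088 blocks [16/7] [UUUUUUU_________]
md0 : active raid1 sda1[0] sdb1[1] sdc1[2] sdd1[3] sde1[4] sdf1[5] sdg1[6]
      2490176 blocks [16/7] [UUUUUUU_________]
"""

arrays = parse_mdstat(SAMPLE)
print(disks_only_in_system_arrays(arrays))  # ['sdf', 'sdg']
```

For this output the sketch reports `sdf` and `sdg`: both carry the small system partitions but belong to no other md array. Whether those two disks are the missing cache SSDs is only a guess from the listing, not something the output confirms.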

-

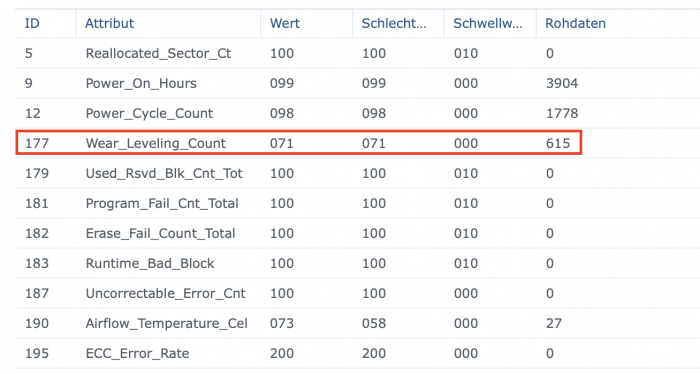

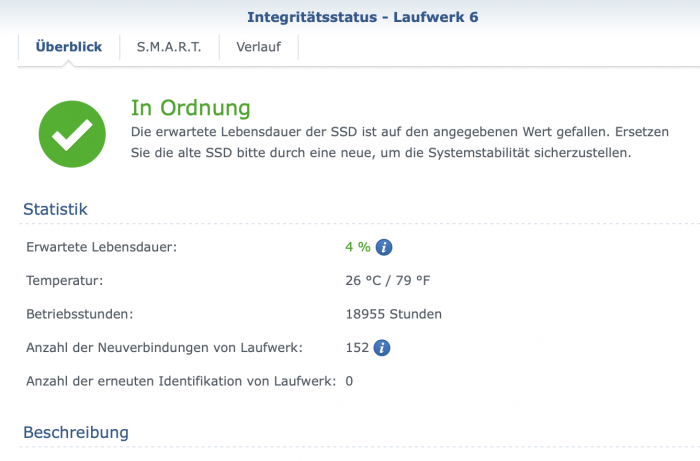

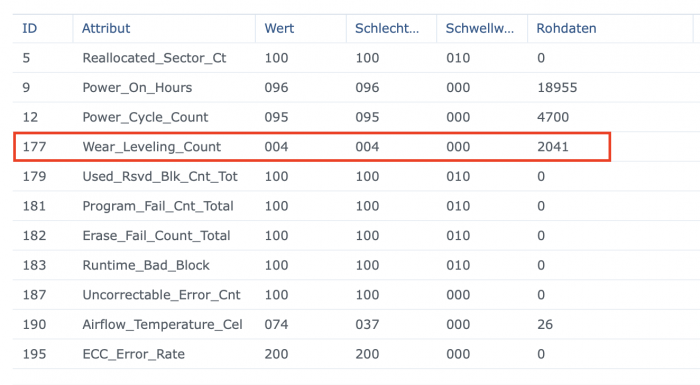

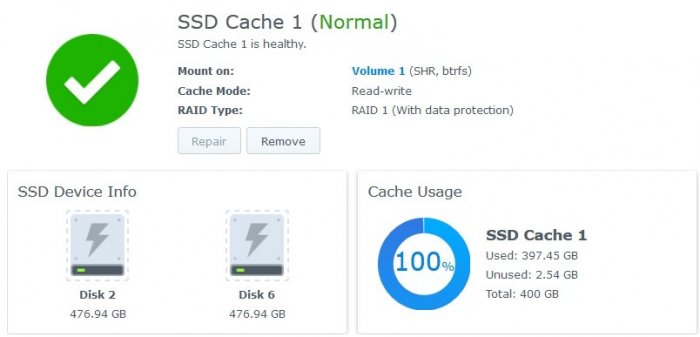

I used two second-hand Samsung EVO 256 GB SSDs as SSD cache for about a year and a half. Now DSM has notified me that the SSDs' estimated lifetime is reaching its end. Looking at the values of my SMART tests, I am unsure what to believe. As far as I know, DSM predicts the lifetime based on Wear_Leveling_Count. However, comparing the SMART values of those two SSDs makes it even less clear to me. DSM reports that this SSD is OK, although the "raw value" is way higher than the threshold, whereas this SSD should be replaced. So is it possible that DSM or the SMART test isn't reading the values properly, or am I misinformed?
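One point that may help when reading such tables: in the attribute table printed by smartctl, the threshold is compared against the normalized VALUE column, not against RAW_VALUE, and an attribute counts as failed when the normalized value is at or below a non-zero threshold. The Python sketch below illustrates that convention only. The class and function names are hypothetical, the numbers are made up rather than taken from the screenshots, and how DSM derives its own lifetime estimate is not shown in the post.

```python
from dataclasses import dataclass

@dataclass
class SmartAttribute:
    name: str
    value: int      # normalized value, higher is better
    worst: int      # lowest normalized value seen so far
    threshold: int  # failure threshold for the normalized value
    raw: int        # vendor-specific raw counter, not compared to threshold

def has_failed(attr):
    """smartctl convention: failed when normalized value <= threshold (> 0)."""
    return attr.threshold > 0 and attr.value <= attr.threshold

# Made-up example values, for illustration only.
healthy = SmartAttribute("Wear_Leveling_Count", value=95, worst=95,
                         threshold=5, raw=4200)
worn = SmartAttribute("Wear_Leveling_Count", value=3, worst=3,
                      threshold=5, raw=9800)

print(has_failed(healthy))  # False, although raw (4200) is far above 5
print(has_failed(worn))     # True, normalized value 3 is below threshold 5
```

A raw value far above the threshold is therefore not, on its own, a sign that the attribute has been misread.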

-

I noticed that after the SSD write cache is full, I can no longer get 500 MB/s speeds (I'm running 10GbE). I am also starting to get errors when transferring files. Is there a way to purge the cache?

-

For users who run HP servers or custom builds with HP SAS SSD drives: a critical firmware bug kills the drive once a specific power-on time (32,768 hours) has been reached. The drive becomes inaccessible and is completely dead. Link to the advisory and patches: https://support.hpe.com/hpsc/doc/public/display?docId=emr_na-a00092491en_us

- 8 replies
- Tagged with: firmware bug, hp (and 1 more)
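To put the 32,768-hour figure from the post above into calendar terms, here is a small worked calculation in Python. The helper names are hypothetical, and the power-on hours value in the usage line is an example, not a reading from any real drive; the actual value would come from the drive's SMART data.

```python
FAILURE_HOURS = 32_768  # figure quoted in the post; equals 2**15

def hours_to_years_days_hours(hours):
    """Split an hour count into (years, days, hours) using 365-day years."""
    years, rest = divmod(hours, 365 * 24)
    days, remaining_hours = divmod(rest, 24)
    return years, days, remaining_hours

def hours_remaining(power_on_hours):
    """Hours left before the quoted limit, never negative."""
    return max(FAILURE_HOURS - power_on_hours, 0)

print(hours_to_years_days_hours(FAILURE_HOURS))  # (3, 270, 8)
print(hours_remaining(30_000))                   # 2768
```

So a drive that runs continuously reaches the limit after roughly 3 years and 9 months of power-on time.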

-

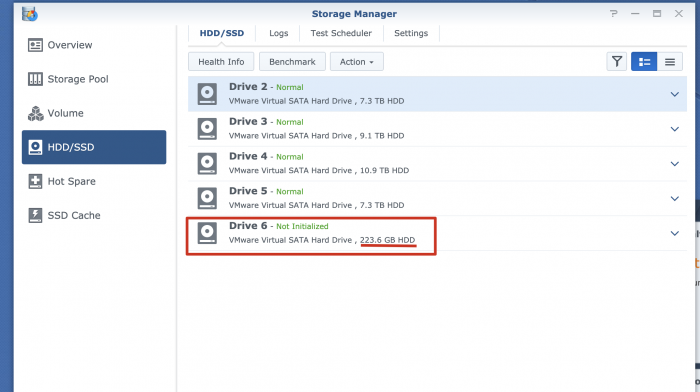

Hello, I am new here. My setup: Hardware: HP Gen8 with 16 GB RAM, Intel(R) Xeon(R) CPU E3-1265L V2 @ 2.50GHz, LSI-9211 HBA. ESXi 6.7 is installed on an SSD connected to the motherboard. The 4 bays are connected through the LSI-9211 and hold 2x 8 TB, 1x 10 TB, and 1x 12 TB. I use a miniSAS-to-SATA cable from the motherboard for the SSD. I added two new SSDs (2x 240 GB), also connected with miniSAS-to-SATA from the motherboard. The system is running 6.2.2 DS3615xs. My question: when I mount the two newly added SSDs to the VM, they show up as HDD instead of SSD. Please help. I did a lot of searching, but I couldn't find any answer to that. Thanks!!

-

Hello, community! Thank you in advance for your interest. I'm new to this environment and know only part of the basics of Xpenology. What I have in mind: build a powerful NAS, more than 2gb/s, with 10Gbps and RAID 10 for safety. So I have thought about 12 IronWolf 4 TB drives, plus some SSDs for cache (I really don't want a slow NAS). My first questions: Is it possible to use my future Xpenology NAS as a DAS too? What motherboard will I need? (With how many SATA ports? I don't really know how PCI controllers work.) It would be awesome if you have some hardware in mind. Thank you for your help.

-

I have DSM 6.1.5 running on an LSI 9207-8i controller with 8 Samsung EVO 250 GB SATA drives. The RAID group is a RAID10. I have also tested with a single-disk basic volume. I cannot see the tab that allows SSD TRIM to be enabled. I was running RAID6 before, but rebuilt as RAID10. I know the option was not allowed in RAID6, but I know it *used* to be configurable and could be enabled in DSM 5.2. I upgraded from 5.2 to 6.1, so I do not know when it might have broken. Does anyone else have a different experience? What might I be missing? *edit* Forgot to mention I am using the ds3615xs firmware, if that matters.

- 1 reply
- Tagged with: 6.1, samsung evo (and 2 more)

-

Hi guys,

I've been setting up my XPEnology for the past few weeks and noticed that my write speeds dropped badly after enabling SSD cache. A transfer starts out at about 1 GByte/s (not bit) but falls all the way to 30 MB/s or even lower. Read speeds are fine, though. After trying some different settings and also building RAID 0, 1, 5 and Basic setups, the speed still stayed really poor. I blamed the network settings at first, but noticed that my RAID 5 setup with 3 HDDs keeps a steady 500-600 MB/s (bytes, not bits) while transferring from my PC to the XPEnology. Copying a large file from one SSD to another gave me the same poor performance.

In the Storage Manager benchmark, the SSD read speed caps out at about 550 MB/s, but the write speed caps out at about 30 MB/s. I've tried a different SSD, port, cable and controller so far, but nothing seems to help. Booting Windows and benchmarking the SSDs with CrystalDiskMark gave me about 550/500 MB/s read/write on both SSDs.

I'm using DSM6.1.4-5 on 1.02b with the extra.lzma v4.3 driver package on a SuperMicro a2sdi-h-tf motherboard. I have come across a couple of seemingly similar cases, but these were unsolved or had different causes (like SMB and network settings). Does anyone have a tip for me to try out, or could give me some advice?

Kind regards, Mike
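Because the post above stresses bytes versus bits, a short conversion sketch in Python may help put the quoted figures on one scale. The function names and the 10 GB file size are assumptions for illustration, decimal units are used throughout, and protocol overhead is ignored.

```python
def gbit_per_s_to_mbyte_per_s(gbit_per_s):
    """Convert a link speed in Gbit/s to MB/s (decimal units, no overhead)."""
    return gbit_per_s * 1000 / 8

def copy_seconds(size_gb, speed_mbyte_per_s):
    """Seconds needed to copy size_gb gigabytes at a steady speed in MB/s."""
    return size_gb * 1000 / speed_mbyte_per_s

print(gbit_per_s_to_mbyte_per_s(10))  # 1250.0 MB/s ceiling on a 10 Gbit/s link
print(round(copy_seconds(10, 550)))   # about 18 s at the read speed from the post
print(round(copy_seconds(10, 30)))    # about 333 s at the write speed from the post
```

At the speeds quoted in the post, the same hypothetical 10 GB file takes roughly eighteen times longer to write than to read.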

-

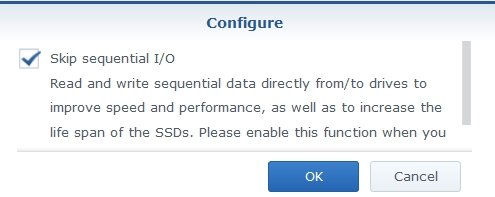

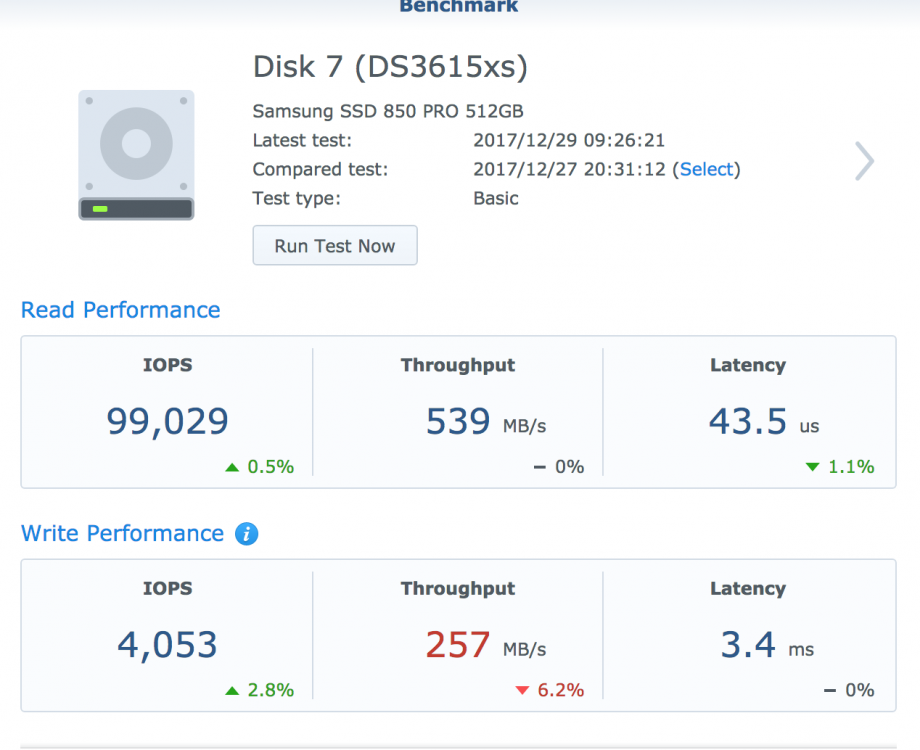

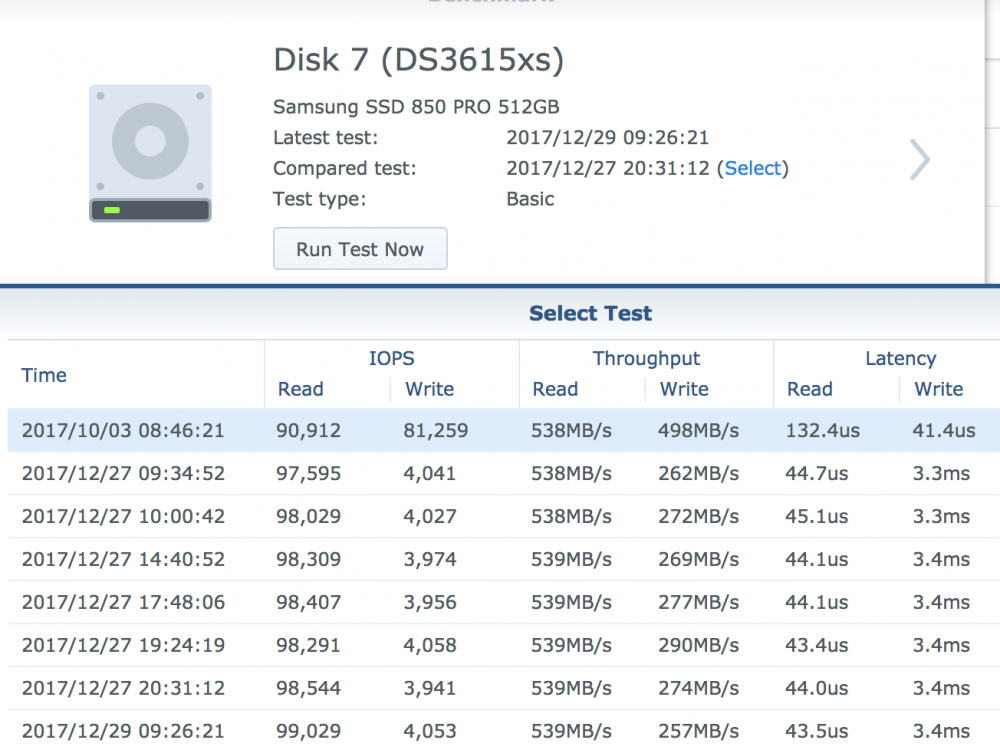

I have Proxmox 5.1, the Xpenology 1.02b loader, and DSM6.1.4 Update 5, with the Intel onboard (AHCI) SATA controller passed through. I have 3 SSDs in RAID0 mode. What I notice is that the write performance on the SSDs is terrible. I've tried to tweak Proxmox and the Xpeno VM, but nothing seems to help. My VM config:

    args: -device 'piix3-usb-uhci,addr=0x18' -drive 'id=synoboot,file=/var/lib/vz/images/100/synoboot_jun2b_virtIO.img,if=none,format=raw' -device 'usb-storage,id=synoboot,drive=synoboot'
    bios: ovmf
    boot: 4
    cores: 4
    hostpci0: 00:17.0,pcie=1
    machine: q35
    memory: 6144
    name: DSM6.x
    net0: virtio=00:11:32:xxxxxxx,bridge=vmbr0
    net1: virtio=00:11:32:xxxxxxx,bridge=vmbr1
    numa: 1
    ostype: l26
    scsihw: virtio-scsi-pci
    serial0: socket
    smbios1: uuid=7fda6843-8411-473f-91a5-xxxxxxxxx
    sockets: 1

I've tried the passthrough without the q35 machine type and without pcie=1. Write performance is the same. What's strange is that the write performance was really good back in October, when I was still on Proxmox 5.0. So I want to ask everyone: how is your SATA passthrough performance?

- 3 replies
- Tagged with: ssd, passthrough (and 2 more)

-

Hello,

In the past I followed the tutorial (http://www.fredomotique.com/informatique/nas-synology-sur-n54l/) to set up an HP Proliant 54L. I would like to make the following improvements to my HP Proliant 54L:

1) add 2 Red 3 TB HDDs, bought back in July!
2) change the order of the disks so that the 120 GB SSD holding the DISKSTATION and the VMs sits in the CD-ROM bay
3) add a TP-LINK TG-3468 Ethernet card to increase throughput. I am on ESXi 5.5
4) update Xpenology to version 6.2 or later (if possible)
5) migrate to ESXi 6
6) set up a copy between my 54L and my DS213air, which has two 3 TB Red disks, in order to back up key data.

For now I am at step 1. I added the 2 3 TB HDDs, one of them in the CD-ROM bay. That one is connected to the motherboard with a SATA cable (it is version 3, but I don't know whether that matters?). I went through steps 5 and 6 of the tutorial mentioned above, and when starting the DISKSTATION I get the following error: "Cannot open the disk '/vmfs/volumes/540548fa-3fda98c2-c6a1-9cb654046298/DISKSTATION/WDC_WD30EFRX2D68EUZN0.vmdk' or one of the snapshot disks it depends on." The error comes from the disk placed in the CD-ROM bay.

Questions:

- How do I solve this problem?
- Is it related to the fact that the disk is connected to the motherboard with a SATA cable?
- Is it possible to delete the .vmd files in /vmfs/volumes/120Go-DATABASE/DISKSTATION in order to redo step 5?
- Is it possible to swap the disk positions so that the SSD, which does not run hot, goes into the CD-ROM bay? In that case, what do I need to do? Do I have to redo the configuration over SSH?
- Is it possible to rename the disks' vmdk files to get more meaningful names: SerialNumber_rack_n (n = position of the disk in the server)? Without redoing everything and ABOVE ALL without losing the data!

The disks are currently located as follows:

- IDE (0:0) Hard disk 1 - [120Go-DATABASE] DISKSTATION/XPEnoboot_DS3615xs_5.1-5022.3.vmdk - in Rack 1
- SCSI (0:1) Hard disk 2 - [120Go-DATABASE] DISKSTATION/WDC_WD2DWMC4N1163018_3T.vmdk - in Rack 2
- SCSI (0:2) Hard disk 3 - [120Go-DATABASE] DISKSTATION/WDC_WD2DWMC4N2305136_3T.vmdk - in Rack 3
- SCSI (0:3) Hard disk 4 - [120Go-DATABASE] DISKSTATION/WDC_WD30EFRX2D68EUZN0_3To.vmdk - in Rack 4
- SCSI (0:4) Hard disk 5 - [120Go-DATABASE] DISKSTATION/WDC_WD30EFRX2D68EUZN0.vmdk --> this last one is in the CD-ROM bay --> it is the one that generates the error mentioned above, "Cannot open the disk '/vmfs/volumes/540548fa-3fda98c2-c6a1-9cb654046298/DISKSTATION/WDC_WD30EFRX2D68EUZN0.vmdk' or one of the snapshot disks it depends on.", when I try to start DISKSTATION

I posted questions on the fibaro forum, but I am not sure there are as many people there as in the past. So I am posting on this forum hoping to get some help to restart my server.