Search the Community

Showing results for tags 'lvm'.

-

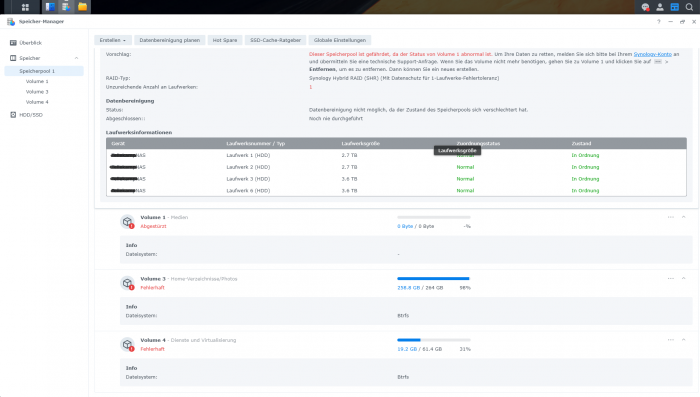

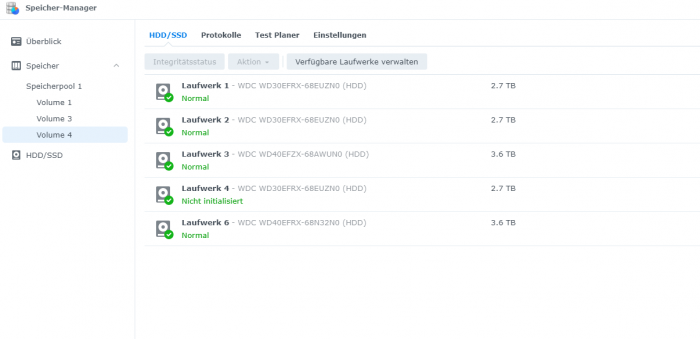

Hello everyone,

Unfortunately I have a problem and am hoping for your help here.

A quick overview of the setup and current status: SHR-1 with 5 (4 before the trouble started) WD Red + HDDs: 3x 3TB and 2x 4TB (one of the 3TB disks is currently not part of the pool, see below)

---> Volume 1, EXT4, 7 TB (no current backup..... shame on me)
---> Volume 3, BTRFS, 260 GB (backup 2 days old)
---> Volume 4, BTRFS, 62 GB (backup 2 days old)

After the update to DSM 7.0, which ran without problems for several days, I installed another 4TB disk yesterday and added it to my SHR-1. At about 75 %, one of the 3TB disks dropped out with the status "Crashed". All volumes were still running, and the RAID kept expanding despite its status (Degraded). Shortly before the end, the box froze completely and eventually stopped responding even to pings.

After a reboot the storage expansion simply continued, but now, in addition to the disk, my largest volume (1) was also "Crashed", and the two other volumes on the same SHR-1 storage pool had gone read-only. I then let the RAID expansion run to completion. I have replaced the faulty disk (even though it does not actually seem that faulty).

However, my largest volume is still gone (and because it holds media, there is unfortunately no current backup of it). I am reluctant to repair the RAID with the new disk, because that might overwrite the data still present on the volume and destroy it for good. So before I repair the RAID, I would like to get the volume mounted again, to make sure I do not damage anything further.
Here is some information from the console:

cat /etc/fstab:

    none /proc proc defaults 0 0
    /dev/root / ext4 defaults 1 1
    /dev/mapper/cachedev_0 /volume1 ext4 usrjquota=aquota.user,grpjquota=aquota.group,jqfmt=vfsv0,synoacl,relatime,ro,nodev 0 0
    /dev/mapper/cachedev_1 /volume3 btrfs auto_reclaim_space,ssd,synoacl,relatime,nodev 0 0
    /dev/mapper/cachedev_2 /volume4 btrfs auto_reclaim_space,ssd,synoacl,relatime,nodev 0 0

lvdisplay:

    Using logical volume(s) on command line.
    --- Logical volume ---
    LV Path                /dev/vg1/syno_vg_reserved_area
    LV Name                syno_vg_reserved_area
    VG Name                vg1
    LV UUID                HOyuTp-ozYw-WG4X-M1vA-WqQW-tRIm-CUoU8h
    LV Write Access        read/write
    LV Creation host, time ,
    LV Status              available
    # open                 0
    LV Size                12.00 MiB
    Current LE             3
    Segments               1
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     1024
    Block device           249:0

    --- Logical volume ---
    LV Path                /dev/vg1/volume_1
    LV Name                volume_1
    VG Name                vg1
    LV UUID                o1PTXF-fKVl-9mKe-TFpF-lAd7-0TE4-8qgHGM
    LV Write Access        read/write
    LV Creation host, time ,
    LV Status              available
    # open                 1
    LV Size                7.84 TiB
    Current LE             2055680
    Segments               16
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     1024
    Block device           249:1

    --- Logical volume ---
    LV Path                /dev/vg1/volume_3
    LV Name                volume_3
    VG Name                vg1
    LV UUID                4xFm7L-rVxD-w2Fc-GLQb-Hde5-9mLi-RX5vOa
    LV Write Access        read/write
    LV Creation host, time ,
    LV Status              available
    # open                 1
    LV Size                275.00 GiB
    Current LE             70400
    Segments               1
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     768
    Block device           249:2

    --- Logical volume ---
    LV Path                /dev/vg1/volume_4
    LV Name                volume_4
    VG Name                vg1
    LV UUID                W6q8V3-wRjS-7pG6-Ww94-6oLm-vl3H-PCq2eR
    LV Write Access        read/write
    LV Creation host, time ,
    LV Status              available
    # open                 1
    LV Size                64.00 GiB
    Current LE             16384
    Segments               1
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     768
    Block device           249:3

vgdisplay:

    Using volume group(s) on command line.
    --- Volume group ---
    VG Name               vg1
    System ID
    Format                lvm2
    Metadata Areas        4
    Metadata Sequence No  55
    VG Access             read/write
    VG Status             resizable
    MAX LV                0
    Cur LV                4
    Open LV               3
    Max PV                0
    Cur PV                4
    Act PV                4
    VG Size               8.17 TiB
    PE Size               4.00 MiB
    Total PE              2142630
    Alloc PE / Size       2142467 / 8.17 TiB
    Free PE / Size        163 / 652.00 MiB
    VG UUID               NyGatF-b85O-Ceg3-MuPY-sOTW-S2bo-P3ODO5

    --- Logical volume ---
    LV Path                /dev/vg1/syno_vg_reserved_area
    LV Name                syno_vg_reserved_area
    VG Name                vg1
    LV UUID                HOyuTp-ozYw-WG4X-M1vA-WqQW-tRIm-CUoU8h
    LV Write Access        read/write
    LV Creation host, time ,
    LV Status              available
    # open                 0
    LV Size                12.00 MiB
    Current LE             3
    Segments               1
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     1024
    Block device           249:0

    --- Logical volume ---
    LV Path                /dev/vg1/volume_1
    LV Name                volume_1
    VG Name                vg1
    LV UUID                o1PTXF-fKVl-9mKe-TFpF-lAd7-0TE4-8qgHGM
    LV Write Access        read/write
    LV Creation host, time ,
    LV Status              available
    # open                 1
    LV Size                7.84 TiB
    Current LE             2055680
    Segments               16
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     1024
    Block device           249:1

    --- Logical volume ---
    LV Path                /dev/vg1/volume_3
    LV Name                volume_3
    VG Name                vg1
    LV UUID                4xFm7L-rVxD-w2Fc-GLQb-Hde5-9mLi-RX5vOa
    LV Write Access        read/write
    LV Creation host, time ,
    LV Status              available
    # open                 1
    LV Size                275.00 GiB
    Current LE             70400
    Segments               1
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     768
    Block device           249:2

    --- Logical volume ---
    LV Path                /dev/vg1/volume_4
    LV Name                volume_4
    VG Name                vg1
    LV UUID                W6q8V3-wRjS-7pG6-Ww94-6oLm-vl3H-PCq2eR
    LV Write Access        read/write
    LV Creation host, time ,
    LV Status              available
    # open                 1
    LV Size                64.00 GiB
    Current LE             16384
    Segments               1
    Allocation             inherit
    Read ahead sectors     auto
    - currently set to     768
    Block device           249:3

    --- Physical volumes ---
    PV Name               /dev/md2
    PV UUID               aWjDFY-eyNx-uzPD-oqBO-5Gym-54Qr-zzkxUZ
    PV Status             allocatable
    Total PE / Free PE    175270 / 0

    PV Name               /dev/md3
    PV UUID               J2CWXO-UmYK-duLr-3Rii-QcCe-nIoZ-aphBcL
    PV Status             allocatable
    Total PE / Free PE    178888 / 0

    PV Name               /dev/md4
    PV UUID               9xHJQ8-dK65-2lvJ-ekUz-0ezd-lFGD-pDsgZx
    PV Status             allocatable
    Total PE / Free PE    1073085 / 0

    PV Name               /dev/md5
    PV UUID               GQwpPp-1c3c-4MSR-WvaB-2vEc-tMS6-n9GZvL
    PV Status             allocatable
    Total PE / Free PE    715387 / 163

LVM backup from before the storage expansion:

    :~$ ls -al /etc/lvm/backup/
    total 16
    drwxr-xr-x 2 root root 4096 May 23 17:55 .
    drwxr-xr-x 5 root root 4096 May 23 17:44 ..
    -rw-r--r-- 1 root root 5723 May 23 17:53 vg1

I am grateful for any help.

Have a nice evening,
Steven

PS: Yes, I know, the one volume going down the drain is exactly the one I have no backup of. What irony.
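
As a quick sanity check on the figures posted above, the extent counts can be cross-checked with a few lines of Python. This is only a sketch: the numbers are copied by hand from the lvdisplay and vgdisplay output, and the constant and function names (`extents_to_gib`, `metadata_is_consistent`) are made up for illustration. It only tests whether the LVM bookkeeping adds up; it says nothing about the state of the file system inside volume_1.

```python
# Extent counts copied by hand from the lvdisplay / vgdisplay output above.
PE_SIZE_MIB = 4  # "PE Size 4.00 MiB"

PV_TOTAL_PE = {
    "/dev/md2": 175270,
    "/dev/md3": 178888,
    "/dev/md4": 1073085,
    "/dev/md5": 715387,
}
LV_EXTENTS = {
    "syno_vg_reserved_area": 3,
    "volume_1": 2055680,
    "volume_3": 70400,
    "volume_4": 16384,
}
VG_TOTAL_PE = 2142630  # "Total PE"
VG_ALLOC_PE = 2142467  # "Alloc PE"
VG_FREE_PE = 163       # "Free PE"


def extents_to_gib(extents: int) -> float:
    """Convert a number of 4 MiB extents to GiB."""
    return extents * PE_SIZE_MIB / 1024


def metadata_is_consistent() -> bool:
    """Hypothetical helper: do the per-PV and per-LV counts match the VG totals?"""
    return (
        sum(PV_TOTAL_PE.values()) == VG_TOTAL_PE
        and sum(LV_EXTENTS.values()) == VG_ALLOC_PE
        and VG_TOTAL_PE - VG_ALLOC_PE == VG_FREE_PE
    )


if __name__ == "__main__":
    print("consistent:", metadata_is_consistent())
    for name, extents in LV_EXTENTS.items():
        print(f"{name}: {extents_to_gib(extents):.2f} GiB")
```

If the sums did not match, that would point toward damaged LVM metadata. With the posted numbers they do match, which suggests (but does not prove) that the LVM layer itself came through the expansion intact and that the problem sits at the file-system level.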

-

couldn't open RDWR because of unsupported option features (3).

ADMiNZ posted a question in General Questions

Hello. The read-write cache on my storage is broken. The disks are present, but the system reports that there is no cache and that the disk is not available. I tried it in Ubuntu:

    root@ubuntu:/home/root# mdadm -D /dev/md2
    /dev/md2:
               Version : 1.2
         Creation Time : Tue Dec 8 23:15:41 2020
            Raid Level : raid5
            Array Size : 7794770176 (7433.67 GiB 7981.84 GB)
         Used Dev Size : 1948692544 (1858.42 GiB 1995.46 GB)
          Raid Devices : 5
         Total Devices : 5
           Persistence : Superblock is persistent
           Update Time : Tue Jun 22 22:16:38 2021
                 State : clean
        Active Devices : 5
       Working Devices : 5
        Failed Devices : 0
         Spare Devices : 0
                Layout : left-symmetric
            Chunk Size : 64K
    Consistency Policy : resync
                  Name : memedia:2
                  UUID : ae85cc53:ecc1226b:0b6f21b5:b81b58c5
                Events : 34754

    root@ubuntu:/home/root# btrfs-find-root /dev/vg1/volume_1
    parent transid verify failed on 20971520 wanted 48188 found 201397
    parent transid verify failed on 20971520 wanted 48188 found 201397
    parent transid verify failed on 20971520 wanted 48188 found 201397
    parent transid verify failed on 20971520 wanted 48188 found 201397
    Ignoring transid failure
    parent transid verify failed on 2230861594624 wanted 54011 found 196832
    parent transid verify failed on 2230861594624 wanted 54011 found 196832
    parent transid verify failed on 2230861594624 wanted 54011 found 196832
    parent transid verify failed on 2230861594624 wanted 54011 found 196832
    Ignoring transid failure
    Couldn't setup extent tree
    Couldn't setup device tree
    Superblock thinks the generation is 54011
    Superblock thinks the level is 1
    Well block 2095916859392(gen: 218669 level: 1) seems good, but generation/level doesn't match, want gen: 54011 level: 1
    Well block 2095916580864(gen: 218668 level: 1) seems good, but generation/level doesn't match, want gen: 54011 level: 1
    Well block 2095916056576(gen: 218667 level: 1) seems good, but generation/level doesn't match, want gen: 54011 level: 1
    Well block 2095915335680(gen: 218666 level: 1) seems good, but generation/level doesn't match, want gen: 54011 level: 1

    root@ubuntu:/home/root# btrfs check --repair -r 2095916859392 -s 1 /dev/vg1/volume_1
    enabling repair mode
    using SB copy 1, bytenr 67108864
    couldn't open RDWR because of unsupported option features (3).
    ERROR: cannot open file system

    root@ubuntu:/home/root# btrfs check --clear-space-cache v1 /dev/mapper/vg1-volume_1
    couldn't open RDWR because of unsupported option features (3).
    ERROR: cannot open file system

    root@ubuntu:/home/root# btrfs check --clear-space-cache v2 /dev/mapper/vg1-volume_1
    couldn't open RDWR because of unsupported option features (3).
    ERROR: cannot open file system

    root@ubuntu:/home/root# mount -o recovery /dev/vg1/volume_1 /mnt/volume1/
    mount: /mnt/volume1: wrong fs type, bad option, bad superblock on /dev/mapper/vg1-volume_1, missing codepage or helper program, or other error.

Every command I try gets the same answer: couldn't open RDWR because of unsupported option features (3).

Can anything be recovered from such an array? Thank you very much in advance!

- 1 reply

Tagged with: crashed volume, lvm (and 4 more)