Search the Community

Showing results for tags 'hyper backup'.

-

DSM 7.1 on an Unraid VM: Hyper Backup to another Synology NAS

alphaboy22 posted a question in General Questions

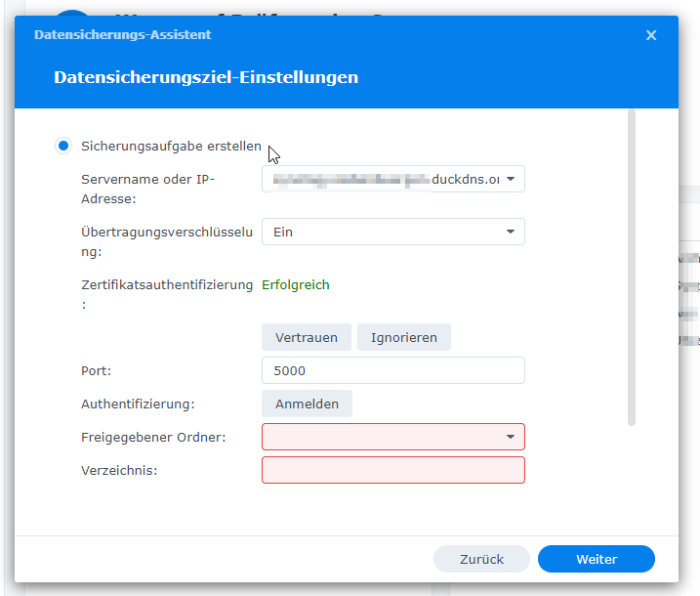

Hi there, I only started exploring Unraid recently and wondered whether I could run DSM on it. I used THIS tutorial and yes, it worked! Now my brother has a Synology NAS running DSM (a regular one, not on Unraid), and we want to store his Hyper Backup in Hyper Backup Vault on my DSM 7.1 Unraid VM. Has anyone got experience with this? On the Unraid side I'm using Nginx, with dynamic DNS through DuckDNS behind it. I also opened port 5000 on my router. We cannot get any connection between the two. Any tips from anyone who has done this? Thank you!
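A first step with a "no connection at all" problem is to check, from your brother's network, whether the relevant TCP ports on your DuckDNS hostname are reachable. A minimal Python sketch follows. The hostname is a placeholder, and the assumption that Hyper Backup to a remote Synology NAS needs TCP 6281 (the port Synology lists for Hyper Backup Vault) in addition to the DSM web port 5000 should be checked against Synology's own port list.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS lookup failures.
        return False


def check_ports(host: str, ports: list[int]) -> dict[int, bool]:
    """Probe each port once and report which ones accepted a connection."""
    return {port: port_open(host, port) for port in ports}
```

For example, `check_ports("yourname.duckdns.org", [5000, 6281])` (hypothetical hostname) run from outside your own LAN. A test from inside your LAN may give misleading results, depending on whether the router supports NAT loopback. Note also that an Nginx reverse proxy only helps for traffic it is configured to forward.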

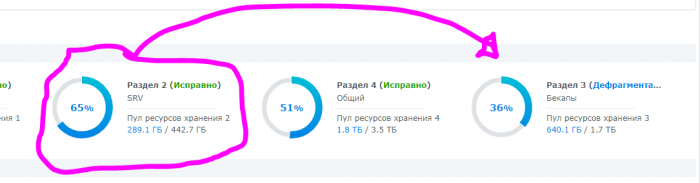

Here's the situation: I have a working folder that needs to be backed up every half hour (a very active project). It currently takes up about 250 GB and sits on a separate 500 GB disk, so I've set up two hourly backup tasks offset from each other by half an hour. The backups go to a separate 2 TB disk. Now Hyper Backup complains that there is no space on the destination disk, even though 1.1 TB is free on it and the backups are 385 GB and 310 GB respectively (the version rotation is configured slightly differently for each). Any suggestions on what I can do? Judging by what the disk manager shows, there shouldn't be any problem... I've started a defragmentation for now; maybe it will help...
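The figures in this post can be cross-checked with a little arithmetic. The Python sketch below assumes that the tool showing "1.1 TB free" reports binary units (GiB/TiB, as Windows does) while the "2 TB" on the drive is a decimal vendor figure. Under that assumption, a 2 TB disk holding 385 + 310 GB of backups should show about 1.14 TiB free, which matches the post, so raw capacity alone does not seem to explain the error. The "each task needs room for one more full copy of the source" figure is a deliberately pessimistic assumption, not documented Hyper Backup behavior.

```python
GIB = 2**30  # bytes per GiB (binary unit, as many OS tools report)


def free_space_gib(disk_bytes: int, used_gib: list[float]) -> float:
    """Disk capacity in GiB minus the space already taken by backups."""
    return disk_bytes / GIB - sum(used_gib)


disk_bytes = 2 * 10**12    # "2 TB" target disk, decimal as sold
backups_gib = [385, 310]   # the two backup sets from the post
source_gib = 250           # the working folder being backed up

free = free_space_gib(disk_bytes, backups_gib)
print(f"expected free: {free:.0f} GiB = {free / 1024:.2f} TiB")

# Pessimistic assumption: each of the two tasks might need space for one
# extra full copy of the source while it runs.
needed = 2 * source_gib
print(f"worst-case need: {needed} GiB, fits: {needed < free}")
```

If even this worst case fits, the next suspects are things other than free space, such as quotas or file-system limits on the destination.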

-

I've got an HP 8300 running bare-metal DSM 6.2.3-25426 Update 3 with Jun's 1.03b loader and three disks in RAID 5. Constant uptime matters less to me than total storage space, which is why I'd like to switch to RAID 0. I've decided I don't need the redundancy, since I make a weekly backup to a single USB drive using Hyper Backup and keep it in a separate building. In the Hyper Backup task I have selected every shared folder, application and service that can be backed up. I've also backed up the configuration file via the Control Panel. Is the best approach to use Erase and Restore in the Control Panel? I assume the discovery wizard would then prompt me to go through the Synology setup wizard, where I would choose RAID 0, import my .dss config file and restore my backup via Hyper Backup. Before I wipe the existing server, I want a solid plan in place. Thanks!

- 5 replies

Tagged with:

- hyper backup
- baremetal

(and 2 more)
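To put numbers on the RAID 5 to RAID 0 trade-off in the post above, here is a small Python sketch of usable capacity. It assumes equal-sized disks (with mixed sizes, classic RAID is limited by the smallest disk), and the 2 TB disk size is hypothetical because the post does not give one.

```python
def usable_disks(level: str, n: int) -> int:
    """Disks' worth of usable space for n equal-sized disks."""
    if level == "raid0":
        return n          # striping only: no redundancy
    if level == "raid5":
        if n < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return n - 1      # one disk's worth of parity
    if level == "raid6":
        if n < 4:
            raise ValueError("RAID 6 needs at least 4 disks")
        return n - 2      # two disks' worth of parity
    raise ValueError(f"unsupported level: {level}")


def usable_tb(level: str, n: int, disk_tb: float) -> float:
    """Usable capacity in the same units as disk_tb."""
    return usable_disks(level, n) * disk_tb


# Hypothetical: three equal 2 TB disks.
for level in ("raid5", "raid0"):
    print(level, usable_tb(level, 3, 2.0), "TB usable")
```

RAID 0 gains one disk's worth of space, but a failure of any single disk loses the whole volume, which is why the off-site Hyper Backup becomes the only copy. As a cross-check, `usable_tb("raid6", 9, 8.0)` gives 56 TB, about 50.9 TiB, in line with the 50.91 TB in the signature further down.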

-

I have an issue where HyperBackup never runs a backup at its scheduled time. If I click Backup Now, the task completes with no error; it just won't start on its own. For example, I scheduled one for 12:15 in HyperBackup: the system log shows no error, the task still has the green tick, and it simply displays the next scheduled backup time (i.e. it skips the backup). I have done a lot of searching and found /var/log/synoscheduler.log in the root of the system; below is the entry from my failed task. I have tried deleting all backup tasks and backing up to another location. I also tried backing up just a single folder with a .txt file in it. Nothing seems to work. Please help.

/var/log/synoscheduler.log:

`2017-10-07T12:15:02+01:00 NAS synoschedtask: sched_task_db.c:111 Exec sql:[CREATE TABLE if not exists task_status(status_id INTEGER PRIMARY KEY ASC, pid INTEGER, timestamp INTEGER, app STRING, task_id INTEGER, status_code INTEGER, comment STRING, stop_time INTEGER);] error: file is encrypted or is not a database`

`2017-10-07T12:15:02+01:00 NAS synoschedtask: sched_task_run.c:386 Init sqlite failed. [0x0000 (null):0]`

I also found this error in /var/log/messages.log:

`synoscgi_SYNO.Entry.Request_1_request[25632]: APIRunner.cpp:309 WebAPI SYNO.Backup.Task is not valid`

Tagged with:

- synoscheduler
- schedule time

(and 1 more)
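"file is encrypted or is not a database" is SQLite's wording for a file whose header it does not recognize, which suggests the scheduler's own SQLite database is damaged rather than the backup tasks themselves. The Python sketch below checks whether a given file is a usable SQLite database. The log does not show where synoschedtask keeps that database, so no path is assumed here; locate the file yourself and run this on a copy (DSM may not ship a suitable Python 3, so copying the file to another machine is the safer route).

```python
import sqlite3
import sys


def check_sqlite(path: str) -> str:
    """Return 'ok' for a consistent SQLite file, otherwise an error description."""
    try:
        # Open read-only so the check never modifies a damaged file.
        conn = sqlite3.connect(f"file:{path}?mode=ro", uri=True)
    except sqlite3.Error as exc:
        return f"cannot open: {exc}"
    try:
        rows = conn.execute("PRAGMA integrity_check").fetchall()
        return "ok" if rows == [("ok",)] else "; ".join(str(r[0]) for r in rows)
    except sqlite3.DatabaseError as exc:
        # A corrupt or non-SQLite file fails here with "file is not a database"
        # (older SQLite: "file is encrypted or is not a database").
        return f"not a usable database: {exc}"
    finally:
        conn.close()


if __name__ == "__main__":
    for p in sys.argv[1:]:
        print(p, "->", check_sqlite(p))
```

If the check fails, that points at the scheduler database rather than at Hyper Backup's settings, which would fit the observation that deleting and recreating backup tasks changed nothing.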

-

My XPEnology box runs a single volume (/volume1) on Btrfs, which, as I understand it, supports COW (copy-on-write), so Hyper Backup can store multiple versions of files without duplication. I have also verified via PuTTY that the 'cp --reflink' command is available on my system (DSM 6.1.3-15152 Update 5), an essential element of the COW facility used by Hyper Backup (which I am also using).

Elsewhere, I have seen multiple users complain about file duplication created by Photo Station, which copies files into the /photo folder from other locations on the system where those photos are already stored. I know that Synology provides, through Storage Analyzer, a very poor (IMO) facility for identifying duplicate files via MD5 checking. But it only identifies 200 instances of duplicates per run, and the duplicates must be deleted manually by the operator (there is no batch deletion). Moreover, there are good reasons to keep both the original files on the Synology/XPEnology boxes and the copies uploaded to the /photo folder in their respective locations. So a better option is needed: either hard-linking the duplicate files, or reflinking the copied data to minimize redundancy and maximize storage efficiency.

My question is: has anyone managed to implement file deduplication or reflinking of data on Synology's Btrfs in this way? If so, what have you achieved? Any advice on how to proceed would be welcome. Is anyone working on this issue? Apologies if this is expressed inadequately or too simply.

Supermicro X10SL7-F-0 motherboard, Xeon E3-1241-V3, 2 x 8 GB DDR3 DIMMs giving 16 GB ECC DDR3 RAM, 9 x 8 TB WD Red HDDs in RAID 6 (50.91 TB) with a 48.87 TB Btrfs volume, DSM 6.1.3-15152 Update 5

Tagged with:

- hyper backup
- storage analyzer

(and 1 more)
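For the hard-link option raised above, a minimal Python sketch: group files by size, hash only the same-size candidates (SHA-256 here instead of Storage Analyzer's MD5), then optionally replace each duplicate with a hard link to one kept copy. The helper names are hypothetical. Hard links are a different technique from Btrfs reflinks: a hard-linked file is one file with two names, so editing either "copy" changes both, whereas a reflinked copy (as made by 'cp --reflink') shares data blocks only until one side is modified. Hard links also only work within one volume, and the replaced file takes on the kept file's permissions. Run with `dry_run=True` first.

```python
import hashlib
import os
from collections import defaultdict


def sha256_of(path: str, chunk: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large files don't fill RAM."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk), b""):
            h.update(block)
    return h.hexdigest()


def find_duplicates(root: str) -> list[list[str]]:
    """Group regular files under root that have identical content."""
    by_size = defaultdict(list)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)
    groups = []
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a file with a unique size cannot have a duplicate
        by_hash = defaultdict(list)
        for path in paths:
            by_hash[sha256_of(path)].append(path)
        groups.extend(sorted(g) for g in by_hash.values() if len(g) > 1)
    return groups


def hardlink_group(group: list[str], dry_run: bool = True) -> None:
    """Replace every file after the first with a hard link to the first."""
    keep = group[0]
    for dup in group[1:]:
        if os.path.samefile(keep, dup):
            continue  # already the same inode
        print(f"link {dup} -> {keep}")
        if not dry_run:
            tmp = dup + ".dedup-tmp"
            os.link(keep, tmp)     # new name for the kept file's inode
            os.replace(tmp, dup)   # atomically swap it in place of the duplicate
```

Usage would be along the lines of `for g in find_duplicates("/volume1/photo"): hardlink_group(g)`, reviewing the printed plan before rerunning with `dry_run=False`.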