Showing results for tags 'dsm6.2.3'.

-

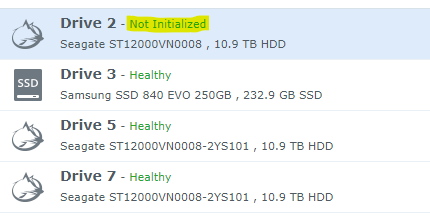

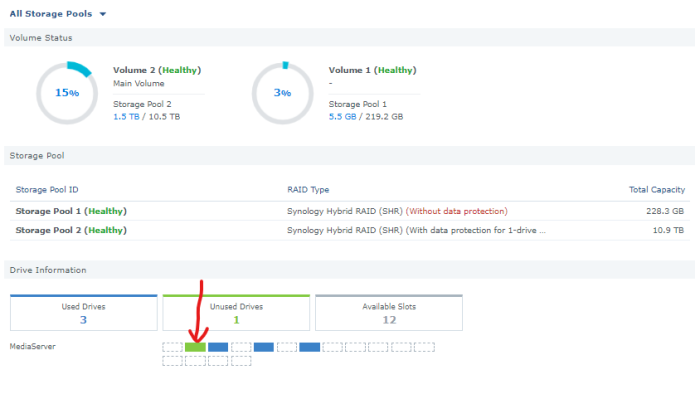

I posted this on Reddit's r/Xpenology but got no replies 🥲, so I hope somebody here can help me.

First, I am pretty new to Xpenology, but I have good experience with general Windows machines and can do pretty much anything by following instructions. I set up a small NAS using DSM 6.2.3 (DS918+, Jun's mod). The original plan was to use DSM 7 with TCRP, but it did not work after many tries. I only want it to run as a media server, so nothing fancy is needed anyway, and 6.2 seemed more stable as well. I have no interest in anything more; I just want a setup that will stay up and run without me constantly maintaining it. I am perfectly happy with my current setup except for one thing.

(Current Setup)

- CPU: Intel G4600, undervolted slightly to reduce power and run cool
- RAM: 16 GB DDR3
- MB: Gigabyte GA-H110N (ITX)
- Case: 4 + 1 bay NAS case (hot-swap SATA backplane installed)
- PCIe: JMB585, SATA 3.0, non-RAID, 5 ports
- SSD: 1 x 250 GB Samsung 840 EVO that I had lying around
- HDD: 3 x 12 TB Seagate IronWolf ST12000VN008

My MB, the GA-H110N, has 3 SATA ports. My case has 4 HDD hot-swap bays and one 2.5-inch bay. My original plan was to use the MB SATA ports only for the SSD and to use 4 ports on the PCIe card for the HDDs, because that would be much cleaner in terms of cable management. However, that did not work for whatever reason (probably the same reason I am posting today), so I moved 2 drives to the MB and left 1 drive hooked to the PCIe card.

My SSD is installed in the 2.5-inch bay and set up as Volume 1, which is used to install and run all packages and Docker.

I wanted the 3 HDDs set up as a RAID with 1 parity drive, but after completing the installation, one of the three drives stays "Not Initialized". I thought it was nothing at first and tried to set up a pool with the three drives, but it failed multiple times. There is no issue with my SATA backplane: I have moved cables and drives around and the result is always the same.
So far, what I have figured out is:

- Connecting more than 1 drive to the PCIe-to-SATA card results in none of the drives connected to the card being recognized by the system.
- When only 1 drive is connected to the card, it shows up in the system, but I cannot do anything with it.

Is my card faulty? Or is the JMB585 card not fully supported by DSM 6.2.3?

I purchased a different card and installed it to see what would happen, but then all the drives except the SSD (including the 2 connected directly to the MB) disappeared from DSM.

I do not know how to change the satamap after installation. I did read some posts, but nothing was exactly the same as my situation. If the card is faulty I would like to just replace it and get on with life, but at this point I am not so sure. What should I do? Please help!

TL;DR: PCIe SATA card is not working ahhhhhh please help
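For anyone reading along, the "satamap" question can be made a bit more concrete. The sketch below is only a model, not a fix for the problem above. It assumes the interpretation commonly described on Xpenology forums: `SataPortMap` carries one digit per SATA controller (the number of ports to use), and `DiskIdxMap` carries two hex digits per controller (the zero-based slot where that controller's first disk lands). The values `"35"` and `"0003"` are hypothetical, chosen for a 3-port motherboard plus a 5-port card; the real controller order depends on how the system enumerates PCI devices.

```python
def slot_ranges(sata_port_map, disk_idx_map):
    """Model which DSM slots (1-based) each controller would occupy.

    Assumed semantics (not verified against the loader source):
      sata_port_map -> one decimal digit per controller, ports in use
      disk_idx_map  -> two hex digits per controller, zero-based first slot
    """
    ranges = []
    for i, ports in enumerate(sata_port_map):
        first = int(disk_idx_map[2 * i : 2 * i + 2], 16)  # zero-based index
        ranges.append(range(first + 1, first + 1 + int(ports)))
    return ranges


def has_overlap(ranges):
    """Return True if two controllers would claim the same slot."""
    seen = set()
    for r in ranges:
        slots = set(r)
        if seen & slots:
            return True
        seen |= slots
    return False


# Hypothetical layout: 3 motherboard ports, then 5 ports on the add-in card.
layout = slot_ranges("35", "0003")
for name, r in zip(["motherboard SATA", "PCIe SATA card"], layout):
    print(name, "-> slots", list(r))
print("overlap:", has_overlap(layout))
```

Under those assumptions the motherboard ports would map to slots 1 to 3 and the card to slots 4 to 8, with no overlap. A map whose ranges collide, or whose port counts do not match the hardware, is one plausible way for drives to go missing, but that is a guess and not a diagnosis.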

-

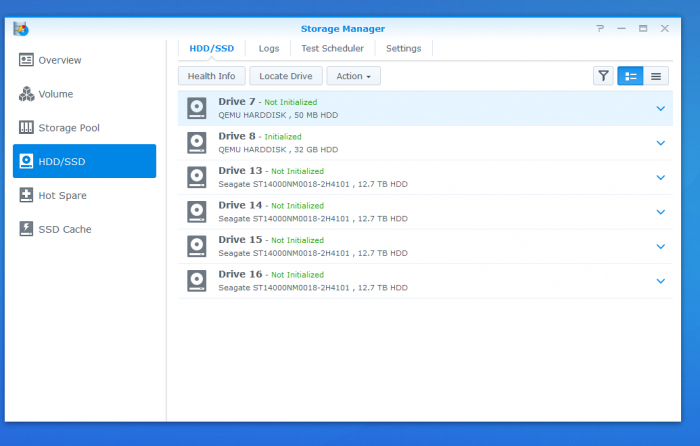

After wracking my brain over the New Year's weekend and scouring both the Xpe and Proxmox forums, I finally got IOMMU pass-through working for my LSI card. (Pay attention to the little details, guys!!! The code goes on one line, one line!!!! It isn't delineated line by line. 😩 /rant)

Prior to the pass-through fix, Proxmox was showing all 7 of the drives installed on the LSI PCI card. After pass-through it obviously no longer does, as the Xpenology VM is now accessing them. However, upon logging into DSM I'm seeing some weird behavior, and I don't even know where to begin, so maybe someone has seen this before and can point me in the right direction. [Just as a side note: yes, only 7 drives, even though the hard drive caddy and PCI card can support 8.]

As you can see in the picture, drives are listed from 7-16. I am running two SSDs as a ZFS mirror for the Proxmox boot and as the image store for VMs. I have 7 of 8 drives installed on the LSI 9211-8i PCI card. I see 4 of those drives as Drives 13-16. Drives 7 and 8 are the VM SATA drives for the boot and loader information. I am missing 3 drives [I assume all three are on the second SAS/SATA plug, port #2 on the card, as that makes sense].

My guess is that there is a maximum capacity of 16 drives in the DSM software. The mobo has a 6-port SATA chipset (+2 NVMe PCI/SATA, unused), and the two boot SATA devices [Drives 7 and 8] are technically virtual. 6 from the physical SATA ports on the chipset, +2 virtual SATA for boot, +4 [of 8] from the LSI = the 16 spots listed.

Is my train of thought on the right track? If so, my next thought is: how do we block the empty [unused] SATA ports on the chipset from taking up wasted space on the Xpe VM?

Like I said, I'm stuck. I need a helpful push in the right direction.

Space below left for future editing of OP for any requested information.
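The slot accounting in this post can be tallied in a few lines. This is only a sketch using the numbers quoted above, and it assumes that "listed from 7-16" means DSM shows slot numbers 7 through 16; the variable names are made up for illustration.

```python
# Numbers taken from the post; the interpretation of "7-16" is an assumption.
listed_slots = set(range(7, 17))      # slots DSM shows: 7..16
virtual_boot = {7, 8}                 # VM SATA drives for boot and loader
lsi_seen = {13, 14, 15, 16}           # LSI drives that do show up
lsi_installed = 7                     # drives physically on the LSI card

# Slots that are listed but not explained by either group.
unexplained = sorted(listed_slots - virtual_boot - lsi_seen)

# LSI drives that never appear in DSM.
missing_lsi = lsi_installed - len(lsi_seen)

# The tally proposed in the post: chipset ports + virtual boot + visible LSI.
chipset_ports = 6
tally = chipset_ports + len(virtual_boot) + len(lsi_seen)

print("unexplained listed slots:", unexplained)
print("missing LSI drives:", missing_lsi)
print("chipset + virtual + LSI:", tally)
```

Read this way, the three groups named in the post add up to 12 rather than 16, and slots 9 to 12 are listed without being attributed to anything, so the 16-slot ceiling may be real while the breakdown behind it probably needs another look.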