Search the Community

Showing results for tags 'volume'.

-

Hi guys,

For those of you who wish to expand a btrfs Syno volume after the disk space has been increased.

Before:

    df -Th
    btrfs fi show
    mdadm --detail /dev/md2

SSH commands:

    syno_poweroff_task -d
    mdadm --stop /dev/md2
    parted /dev/sda resizepart 3 100%
    mdadm --assemble --update=devicesize /dev/md2 /dev/sda3
    mdadm --grow /dev/md2 --size=max
    reboot
    btrfs filesystem resize max /dev/md2

After:

    df -Th
    btrfs fi show
    mdadm --detail /dev/md2

Voila

Kall
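For anyone whose disk, partition number or array differs from the ones in the post, here is a minimal sketch that only prints the same command sequence with other values filled in. It executes nothing. The function name `expansion_plan` and its parameters are made up for illustration, and the commands themselves are copied from the post as-is, not verified.

```python
def expansion_plan(disk="/dev/sda", partition=3, array="/dev/md2"):
    """Return the command sequence from the post as strings (dry run only).

    Hypothetical helper: the defaults mirror the values used in the post.
    Nothing here is executed; review each command before running it by hand.
    """
    member = f"{disk}{partition}"  # e.g. /dev/sda3
    return [
        "syno_poweroff_task -d",
        f"mdadm --stop {array}",
        f"parted {disk} resizepart {partition} 100%",
        f"mdadm --assemble --update=devicesize {array} {member}",
        f"mdadm --grow {array} --size=max",
        "reboot",
        f"btrfs filesystem resize max {array}",
    ]


if __name__ == "__main__":
    for step, command in enumerate(expansion_plan(), start=1):
        print(f"{step}. {command}")
```

Printing the plan first makes it easier to spot a wrong device name before anything is stopped or resized.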

-

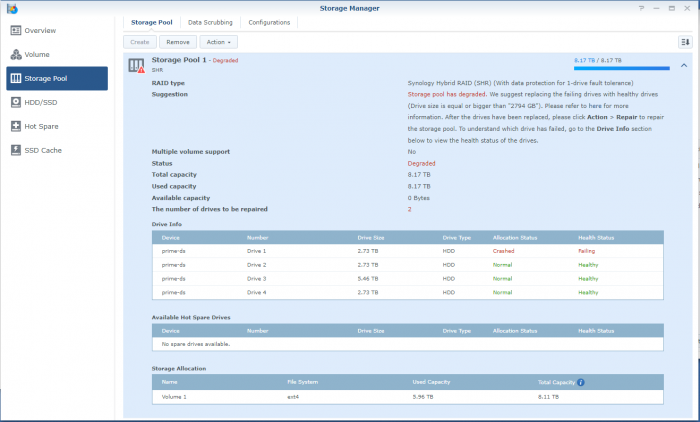

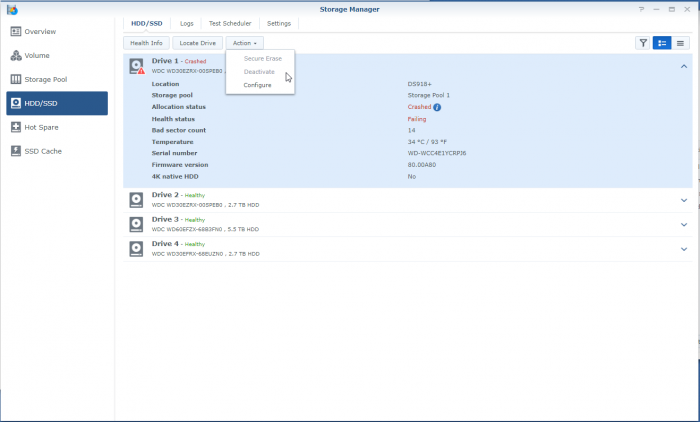

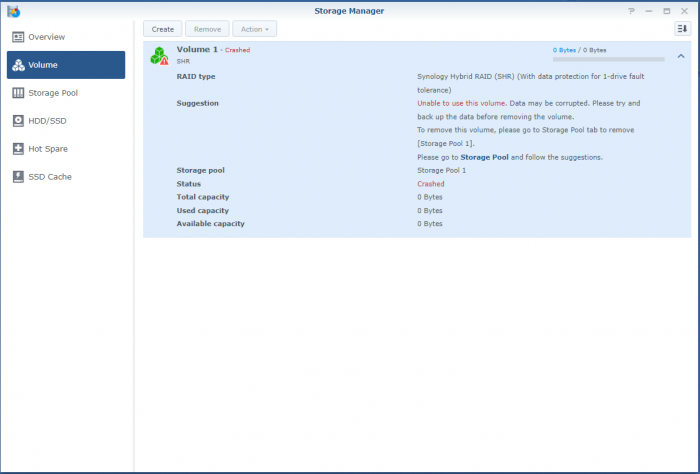

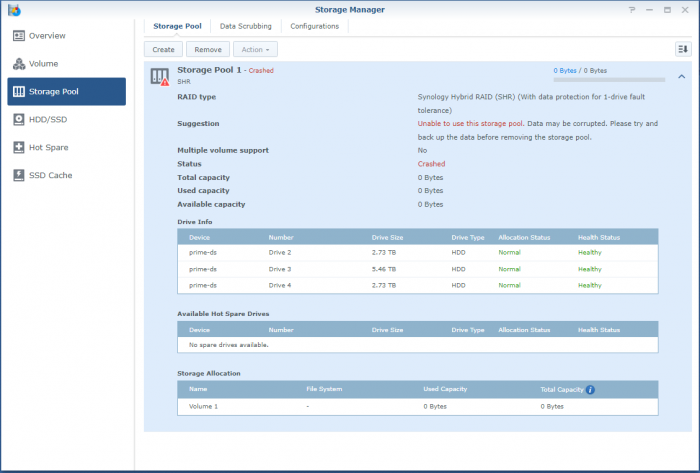

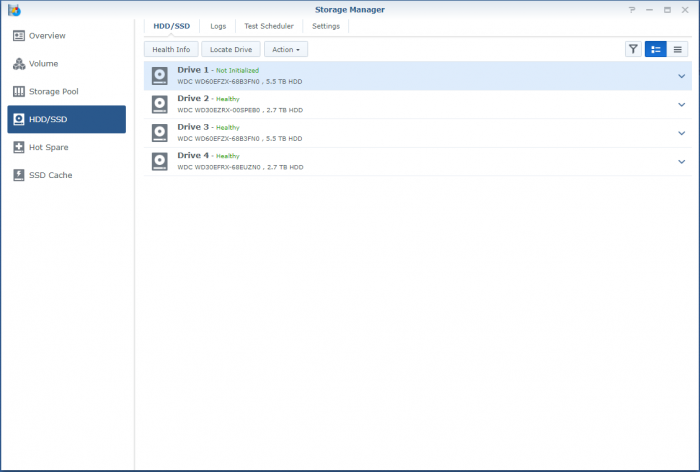

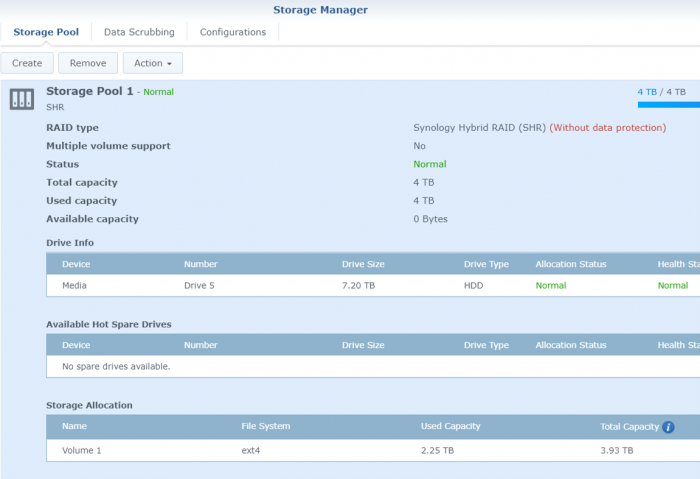

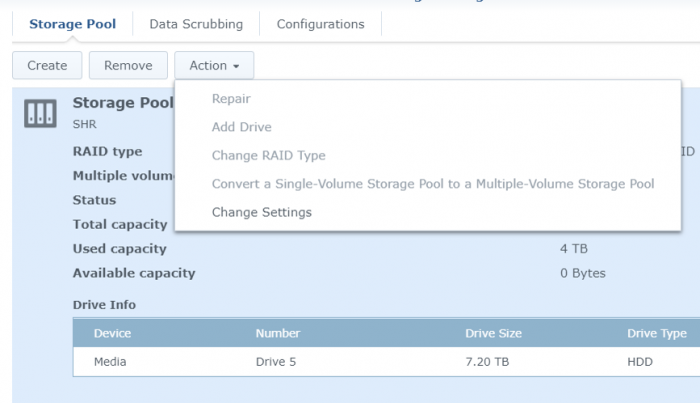

So, I'm a bit stuck. Over the last few years, whenever a disk crashed, I'd pop the crashed disk out, put in a fresh one and repair the volume. No sweat. Over time I've migrated from a mix of 1TB and 2TB disks to all 3TB ones, and earlier this year I received a 6TB one from WD as an RMA replacement for a 3TB one. So I was running:

- Disk 1: 3TB WD - Crashed
- Disk 2: 3TB WD
- Disk 3: 6TB WD
- Disk 4: 3TB WD

So I bought a shiny new 6TB WD to replace disk 1, but that did not work out well. With the above setup, I have a degraded volume, but it's accessible. When I put in the new disk, the volume is crashed and the data is not accessible. I'm not able to select the repair option; only 'Create'. When I put back the crashed disk, the volume is accessible again (degraded) after a reboot. I ran a (quick) SMART test on the new drive and it seems OK.

The only thing that's changed since the previous disk replacement is that I upgraded to 6.2.3-25426. Could that be a problem?

However, before we proceed (and I waste your time): I've got backups. Of everything. So deleting and recreating the volume (or the entire system) would not be that big of a deal; it would only cost me a lot of time. But that seems the easy way to go. I've got a Hyper Backup of the system, all the apps and a few of the shares on an external disk. The bigger shares are backed up to several other external disks. If I delete the volume, create a new one, restore the last Hyper Backup and copy back the other disks, am I golden? Will the Hyper Backup contain the original shares?

In case that is not the best/simplest solution, I've attached some screenshots and some outputs so we know what we are talking about. Thanks!

-

After 4-5 years of happy usage of Debian 9 + Proxmox 5.2 + Xpenology DSM 5.2, I upgraded six months ago to DSM 6.2.3, Proxmox 6.3-3 and Debian 10. All went well, until a few weeks ago... One night at 3am my volume 2 crashed! Since then I can read my shares on volume 2, but not write to them. It is read-only. I suspect that activating the write-back cache in Proxmox is the reason. The SMART status of all my hard drives and the RAID controller is just fine, so I don't suspect a hardware issue.

After using Google, this forum and YouTube I still have no solution. The crashed volume has all my music, videos, photos and documents. I have a spare 3TB WD RED HD connected directly to the motherboard and have copied the most important files to it. Although I have a backup of the most important files, I would prefer to restore the crashed volume, but I'm starting to become a bit desperate.

I think the solution is to stop /dev/md3 and re-create /dev/md3 with the same values, but something keeps /dev/md3 busy and prevents me from doing so. Could someone please help me?

Setup:

- Hardware: Asrock µATX Bxxx (I forgot), Adaptec 8158Z RAID controller with 4 x 3TB WD RED (2 new, 2 second hand and already replaced because they broke within warranty)
- Software: XPenology DSM 6.2.3, Proxmox 6.3-3, Debian 10
- Xpenology: Volume1 is 12GB and has the OS (I guess?), Volume2 is a single unprotected 8TB ext4 volume and has all my shares. 4GB RAM and 2 cores.

Output: see attachment output.txt

-

So when installing DSM on my Gen10 MicroServer in ESXi, I initially gave the volume 4TB of an 8TB (7.27TB formatted) drive, thinking I could expand it later. However, I can't seem to expand the volume. DSM sees it as a 4TB volume on a 7.27TB drive, but when I click Create, Storage Manager doesn't see Drive 5, where the 4TB volume is, only Drive 1, where the Synology OS is. How do I expand the storage so that DSM 6.2 sees the whole drive?
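The gap between 8TB and roughly 7.27TB is most likely just decimal versus binary units, not lost space. A small sketch of the arithmetic, assuming the drive is sold as 8 × 10^12 bytes and the "formatted" figure is reported in units of 2^40 bytes (the function name is invented for illustration):

```python
def tb_to_tib(terabytes):
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return terabytes * 10**12 / 2**40


if __name__ == "__main__":
    # An "8TB" drive expressed in binary units.
    print(f"8 TB is about {tb_to_tib(8):.3f} TiB")
```

The result is a little under 7.28, which fits the 7.27TB figure in the post if the displayed value is truncated.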

-

Hello, I am encountering a weird issue with my server (HP N54L, baremetal). It has been working for years with no issues at all on DSM 5.2. Two days ago I decided to back up my boot USB drive in case it fails one day, and in case I finally want to update to 6.x. So I turned off my server, removed the USB drive and tried to back it up with my laptop. I say tried because each time the software I use gave me an error saying it could not do it. Then I gave up and put the USB drive back into my server, which booted with no problem... until I wanted to access one volume (I had 2), but it had disappeared!! Nothing! DSM does not give me any error other than the fact that it cannot mount some folders (the ones on this volume). This volume is a RAID 1 of two drives.

Here is what I did and checked, in order:

- BIOS: all drives are detected, including the 2 of the missing volume
- I unplugged the drives and tried others with no luck (still detected by the BIOS but not by DSM)
- I plugged them into another machine: they are detected.
- fdisk -l: does not list the missing drives
- So I decided, what the hell, let's update to DSM 6.1, maybe something is wrong with the USB drive I tried to clone. I used a brand new USB drive, loader 1.02b, etc. Migration done with no issue, but still the missing volume!!
- OK then, let's do a clean install... still, the two drives are missing...
- I put the drives in an old 710+, and they are detected perfectly

I am out of ideas. Could it be hardware, even though the drives are detected by the BIOS?

-

Hi, I have a DS3617xs setup. I created an SHR volume with four 2TB disks. One of the disks died and I decided that I needed more space, so I bought two new 4TB Red drives. I started by replacing the failed 2TB drive with a 4TB one, repaired my volume, and finally swapped another 2TB for a 4TB. All went well, except that the total volume size doesn't seem to have increased. I was expecting to have 8TB available but I only have 5.6TB. Can any of you give me a clue about what's going on?
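As a rough sanity check on the 8TB expectation, here is a sketch of how SHR with one-disk redundancy is commonly described as handling mixed-size disks: every slice of capacity that exists on at least two disks contributes (number of disks sharing it minus one) slices of usable space. This is an assumption about SHR's behaviour, not something taken from Synology's code, and the function name is invented for illustration.

```python
def shr1_usable_tb(disk_sizes_tb):
    """Estimate usable capacity for a one-disk-redundancy layout of mixed disks.

    Assumed model: sort the disks, cut them into tiers at each distinct size,
    and give each tier (disks_in_tier - 1) * tier_width of usable space.
    A tier that exists on only one disk cannot be protected and is skipped.
    """
    sizes = sorted(disk_sizes_tb)
    usable = 0
    previous = 0
    for index, size in enumerate(sizes):
        width = size - previous          # extra capacity this tier adds
        disks = len(sizes) - index       # disks large enough to hold this tier
        if width > 0 and disks >= 2:
            usable += width * (disks - 1)
        previous = size
    return usable


if __name__ == "__main__":
    print("four 2TB disks:", shr1_usable_tb([2, 2, 2, 2]), "TB")
    print("after first swap (4/2/2/2):", shr1_usable_tb([4, 2, 2, 2]), "TB")
    print("after second swap (4/4/2/2):", shr1_usable_tb([4, 4, 2, 2]), "TB")
```

Under this model the final 4/4/2/2 layout gives 8TB, while both the original layout and the intermediate 4/2/2/2 layout give 6TB (about 5.46 in binary units). A figure near 5.6TB may therefore mean the volume was not yet expanded after the second swap, though that is only a guess.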

-

Hi Everyone, I made a really stupid mistake and deleted volume 1 of my NAS. The volume consists of two 2TB WD drives and one 1TB WD drive. One of the 2TB drives failed and I was supposed to repair the volume. I've done this before, but this time it totally slipped my mind that I needed to remove the failed drive first before running the repair function. I made the moronic mistake of thinking the remove volume function was the repair function, and now I have lost volume 1. The failed drive is now removed; the other 2 drives show as healthy, but the status is "system partition failed". Is there a way I can rebuild volume 1, or just remount it from the data on the 2 remaining drives? Thanks so much for your help.

Details:

- RAID: SHR
- Machine: DS3615xs
- DSM Version: 5.2-5644

-

Good evening everyone. So, this Saturday, March 10, I had an unexpected power outage, and since then my volume has been reported as degraded. There is no way to repair it! And no disk is reported as failing! Here are a few screenshots of my interface, in case one of the experts has an idea. Thanks in advance.

-

Hi, I updated to DSM 6 using Jun's loader. Shortly after, I tried to add another HDD to my NAS. DSM wasn't recognizing the HDD, so I powered it off and used the SATA cables from one of the working drives to ensure it wasn't the cables. This is where everything went wrong. When DSM booted up, I saw the drive I needed, but DSM gave an error of course. After powering the unit off and swapping the cables back, it still said it needed repair, so I pressed repair in Storage Manager. Everything seemed fine. After another reboot, it said it had crashed. I let the parity check run overnight and now the RAID is running healthy as well as each individual disk, but the Volume has crashed. It's running SHR-1.

I believe the mdstat results below show that it can be recovered without data loss, but I'm not sure where to go from here? Another thread added the removed '/dev/sdf2' back into active sync, but I'm not sure which letters are assigned where.

/proc/mdstat/

    admin@DiskStationNAS:/usr$ cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md3 : active raid1 sdc6[0] sdd6[1]
          976742784 blocks super 1.2 [2/2] [UU]
    md2 : active raid5 sde5[0] sdd5[3] sdf5[2] sdc5[1]
          8776305792 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/4] [UUUU]
    md1 : active raid1 sdc2[1] sdd2[2] sde2[0] sdf2[3]
          2097088 blocks [12/4] [UUUU________]
    md0 : active raid1 sdc1[0] sdd1[1] sde1[2] sdf1[3]
          2490176 blocks [12/4] [UUUU________]
    unused devices: <none>

    admin@DiskStationNAS:/usr$ mdadm --detail /dev/md2
    mdadm: must be super-user to perform this action
    admin@DiskStationNAS:/usr$ sudo mdadm --detail /dev/md2
    Password:
    /dev/md2:
    Version : 1.2
    Creation Time : Sat Aug 29 05:40:53 2015
    Raid Level : raid5
    Array Size : 8776305792 (8369.74 GiB 8986.94 GB)
    Used Dev Size : 2925435264 (2789.91 GiB 2995.65 GB)
    Raid Devices : 4
    Total Devices : 4
    Persistence : Superblock is persistent
    Update Time : Sun Aug 27 09:38:44 2017
    State : clean
    Active Devices : 4
    Working Devices : 4
    Failed Devices : 0
    Spare Devices : 0
    Layout : left-symmetric
    Chunk Size : 64K
    Name : DiskStationNAS:2 (local to host DiskStationNAS)
    UUID : d97694ec:e0cb31e2:f22b36f2:86cfd4eb
    Events : 17027
    Number  Major  Minor  RaidDevice  State
    0       8      69     0           active sync  /dev/sde5
    1       8      37     1           active sync  /dev/sdc5
    2       8      85     2           active sync  /dev/sdf5
    3       8      53     3           active sync  /dev/sdd5

    admin@DiskStationNAS:/usr$ sudo mdadm --detail /dev/md3
    /dev/md3:
    Version : 1.2
    Creation Time : Thu Jun 8 22:33:42 2017
    Raid Level : raid1
    Array Size : 976742784 (931.49 GiB 1000.18 GB)
    Used Dev Size : 976742784 (931.49 GiB 1000.18 GB)
    Raid Devices : 2
    Total Devices : 2
    Persistence : Superblock is persistent
    Update Time : Sun Aug 27 00:07:01 2017
    State : clean
    Active Devices : 2
    Working Devices : 2
    Failed Devices : 0
    Spare Devices : 0
    Name : DiskStationNAS:3 (local to host DiskStationNAS)
    UUID : 4976db98:081bd234:e07be759:a005082b
    Events : 2
    Number  Major  Minor  RaidDevice  State
    0       8      38     0           active sync  /dev/sdc6
    1       8      54     1           active sync  /dev/sdd6

    admin@DiskStationNAS:/usr$ sudo mdadm --detail /dev/md1
    /dev/md1:
    Version : 0.90
    Creation Time : Sun Aug 27 00:10:09 2017
    Raid Level : raid1
    Array Size : 2097088 (2048.28 MiB 2147.42 MB)
    Used Dev Size : 2097088 (2048.28 MiB 2147.42 MB)
    Raid Devices : 12
    Total Devices : 4
    Preferred Minor : 1
    Persistence : Superblock is persistent
    Update Time : Sun Aug 27 09:38:38 2017
    State : clean, degraded
    Active Devices : 4
    Working Devices : 4
    Failed Devices : 0
    Spare Devices : 0
    UUID : a56a9bcf:e721db67:060f5afc:c3279ded (local to host DiskStationNAS)
    Events : 0.44
    Number  Major  Minor  RaidDevice  State
    0       8      66     0           active sync  /dev/sde2
    1       8      34     1           active sync  /dev/sdc2
    2       8      50     2           active sync  /dev/sdd2
    3       8      82     3           active sync  /dev/sdf2
    4       0      0      4           removed
    5       0      0      5           removed
    6       0      0      6           removed
    7       0      0      7           removed
    8       0      0      8           removed
    9       0      0      9           removed
    10      0      0      10          removed
    11      0      0      11          removed

    admin@DiskStationNAS:/usr$ sudo mdadm --detail /dev/md0
    /dev/md0:
    Version : 0.90
    Creation Time : Fri Dec 31 17:00:25 1999
    Raid Level : raid1
    Array Size : 2490176 (2.37 GiB 2.55 GB)
    Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
    Raid Devices : 12
    Total Devices : 4
    Preferred Minor : 0
    Persistence : Superblock is persistent
    Update Time : Sun Aug 27 10:28:58 2017
    State : clean, degraded
    Active Devices : 4
    Working Devices : 4
    Failed Devices : 0
    Spare Devices : 0
    UUID : 147ea3ff:bddf1774:3017a5a8:c86610be
    Events : 0.9139
    Number  Major  Minor  RaidDevice  State
    0       8      33     0           active sync  /dev/sdc1
    1       8      49     1           active sync  /dev/sdd1
    2       8      65     2           active sync  /dev/sde1
    3       8      81     3           active sync  /dev/sdf1
    4       0      0      4           removed
    5       0      0      5           removed
    6       0      0      6           removed
    7       0      0      7           removed
    8       0      0      8           removed
    9       0      0      9           removed
    10      0      0      10          removed
    11      0      0      11          removed
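To make the mdstat output above easier to read at a glance, here is a minimal sketch of a parser that pulls out each array's members and how many slots are empty. It assumes the usual two-line layout of `/proc/mdstat` for active arrays; the function name `parse_mdstat` and the embedded sample (trimmed from the post) are for illustration only, and inactive arrays are not handled.

```python
import re

# Sample trimmed from the output posted above.
MDSTAT_SAMPLE = """\
md2 : active raid5 sde5[0] sdd5[3] sdf5[2] sdc5[1]
      8776305792 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/4] [UUUU]
md1 : active raid1 sdc2[1] sdd2[2] sde2[0] sdf2[3]
      2097088 blocks [12/4] [UUUU________]
"""


def parse_mdstat(text):
    """Return {array: {"level", "members", "slots", "active", "missing"}}."""
    arrays = {}
    current = None
    for line in text.splitlines():
        # Header line, e.g. "md2 : active raid5 sde5[0] sdd5[3] ..."
        header = re.match(r"^(md\d+)\s*:\s*active\s+(\w+)\s+(.*)$", line)
        if header:
            current = header.group(1)
            arrays[current] = {
                "level": header.group(2),
                "members": re.findall(r"(\w+)\[\d+\]", header.group(3)),
            }
            continue
        # Status line, e.g. "... [12/4] [UUUU________]"
        status = re.search(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]", line)
        if status and current:
            slots, active = int(status.group(1)), int(status.group(2))
            arrays[current].update(
                slots=slots, active=active, missing=slots - active
            )
    return arrays


if __name__ == "__main__":
    for name, info in parse_mdstat(MDSTAT_SAMPLE).items():
        print(name, info["level"], info["members"], "missing:", info["missing"])
```

On the sample, md2 has all four slots filled, while md1 is defined with 12 slots and only 4 members, which matches the "clean, degraded" state shown for md1 and md0 in the `mdadm --detail` output.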