pigr8

Everything posted by pigr8

-

Intel i225&i226 igc driver development thread

pigr8 replied to jimmmmm's topic in Developer Discussion Room

i was looking at the same goal, using the spk on a genuine syno box. gonna do some research on that usb spk, should be simple in theory. or the synocommunity synokernel drivers for the cdrom and serial adapters could be a good starting point.

33 replies

-

yes, easily, although i wouldn't use USB disks, i'd use sata disks. with an m.2 adapter with the asm1166 chip you get 5 sata ports, and then it would really make sense as a nas.

-

anyone tried 42962 update 1 with arpl? @fbelavenuto does arpl need an update every time?

-

Hi, does anyone know how to create an SHR volume from the command line? I found some tutorials on how to create basic volumes, but none of them mention how to create an SHR with, say, 2 disks (SHR1). synopartition should take care of creating the partitions, and if i'm not mistaken mdadm should create the SHR. Any hints?

-

I know it works, i used to have dsm7 on esxi too.. but openvmtools doesn't work on dsm7 because you can't get root access to shut down or reboot. the workaround is to run openvmtools in a docker container that uses local ssh to make it work.

-

on dsm 7 only docker works

-

log spam, prod has less spam.

-

@fbelavenuto asked to open GitHub issues for problems and not to write here, he cannot track every single post. open an issue on GitHub, post config and logs and try to explain what is happening so he can maybe fix what is wrong. there are problems with some add-ons, maybe eudev (dunno) not loading the drivers, so many users have problems at boot. for those who have problems with the i915 and build 42962, it is probably a kernel panic while loading the backported modules; a log is mandatory to see what happens. a message like "oh I'm stuck at booting kernel no ip address" is meaningless, i hope that is clear.

-

you cannot, use ds3622xs+ if you want a hba card

-

he already explained that you don't need to do anything, it's loaded automatically.. and you cannot load it that way.

-

you can still download the vmdk/img, it's just not published yet in the release tab.

-

here you go, this is what i get when i try in_modules.sh:

[13034.313725] mtrr: type mismatch for d0000000,10000000 old: write-back new: write-combining
[13034.315500] Failed to add WC MTRR for [00000000d0000000-00000000dfffffff]; performance may suffer.
[13034.320774] [drm] Supports vblank timestamp caching Rev 2 (21.10.2013).
[13034.322263] [drm] Driver supports precise vblank timestamp query.
[13034.323579] i915 0000:03:00.0: BAR 6: can't assign [??? 0x00000000 flags 0x20000000] (bogus alignment)
[13034.325575] [drm] Failed to find VBIOS tables (VBT)
[13034.326916] vgaarb: device changed decodes: PCI:0000:03:00.0,olddecodes=io+mem,decodes=none:owns=io+mem
[13034.329680] [drm] Finished loading DMC firmware i915/kbl_dmc_ver1_04.bin (v1.4)
[13034.331556] BUG: unable to handle kernel NULL pointer dereference at (null)
[13034.333274] IP: [< (null)>] (null)
[13034.334378] PGD 0
[13034.334859] Oops: 0010 [#1] SMP
[13034.335622] Modules linked in: i915(E+) video(E) backlight(E) iosf_mbi(E) drm_kms_helper(E) drm(E) i2c_algo_bit(E) fb(E) nfnetlink xfrm_user xfrm_algo xt_ipvs ip_vs_rr ip_vs xt_mark iptable_mangle br_netfilter bridge stp aufs macvlan veth xt_conntrack xt_addrtype nf_conntrack_ipv6 nf_defrag_ipv6 ip6table_filter ip6_tables ipt_MASQUERADE xt_REDIRECT nf_nat_masquerade_ipv4 xt_nat iptable_nat nf_nat_ipv4 nf_nat_redirect nf_nat xt_recent xt_iprange xt_limit xt_state xt_tcpudp xt_multiport xt_LOG nf_conntrack_ipv4 nf_defrag_ipv4 nf_conntrack iptable_filter ip_tables x_tables fuse vfat fat 8021q vhost_scsi(O) vhost(O) tcm_loop(O) iscsi_target_mod(O) target_core_user(O) target_core_ep(O) target_core_multi_file(O) target_core_file(O) target_core_iblock(O) target_core_mod(O) syno_extent_pool(PO) rodsp_ep(O)
[13034.352407] udf isofs synoacl_vfs(PO) raid456 async_raid6_recov async_memcpy async_pq async_xor async_tx nfsd btrfs ecryptfs zstd_decompress zstd_compress xxhash xor raid6_pq lockd grace rpcsec_gss_krb5 auth_rpcgss sunrpc leds_lp3943 aesni_intel glue_helper lrw gf128mul ablk_helper geminilake_synobios(PO) usblp syscopyarea sysfillrect sysimgblt fb_sys_fops cfbfillrect cfbcopyarea cfbimgblt drm_panel_orientation_quirks fbdev uhci_hcd zram usb_storage r8168(O) sg dm_snapshot dm_bufio crc_itu_t crc_ccitt psnap p8022 llc hfsplus md4 hmac sit tunnel4 ipv6 flashcache_syno(O) flashcache(O) syno_flashcache_control(O) dm_mod arc4 crc32c_intel cryptd ecb aes_x86_64 authenc des_generic ansi_cprng cts md5 cbc cpufreq_powersave cpufreq_performance processor cpufreq_stats vxlan ip6_udp_tunnel udp_tunnel ip_tunnel
[13034.366501] loop hid_generic usbhid hid sha256_generic synorbd(PO) synofsbd(PO) etxhci_hcd vmxnet3(OE) vmw_vmci(E) button xhci_pci xhci_hcd ehci_pci ehci_hcd usbcore usb_common [last unloaded: fb]
[13034.369128] CPU: 2 PID: 4641 Comm: insmod Tainted: P OE 4.4.180+ #42962
[13034.370181] Hardware name: VMware, Inc. VMware7,1/440BX Desktop Reference Platform, BIOS VMW71.00V.18227214.B64.2106252220 06/25/2021
[13034.371877] task: ffff88020d651980 ti: ffff88025d930000 task.ti: ffff88025d930000
[13034.372909] RIP: 0010:[<0000000000000000>] [< (null)>] (null)
[13034.373965] RSP: 0018:ffff88025d9338a0 EFLAGS: 00010246
[13034.374714] RAX: ffff88001bd88040 RBX: ffff88025d86beb8 RCX: 0000000000000000
[13034.375697] RDX: 0000000000000000 RSI: ffff88001bd88300 RDI: ffff88001bd88040
[13034.376680] RBP: ffff88025d9338c8 R08: ffffffffa0f0a470 R09: ffff88001bd88040
[13034.377664] R10: 0000000000001000 R11: 00000000fffff000 R12: ffff88001bd88300
[13034.378646] R13: 0000000000000000 R14: ffff88025d86ba18 R15: ffff88025d86be78
[13034.379652] FS: 00007f2f47411740(0000) GS:ffff880272d00000(0000) knlGS:0000000000000000
[13034.380755] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
[13034.381583] CR2: 0000000000000000 CR3: 00000001005b4000 CR4: 00000000003606f0
[13034.382606] Stack:
[13034.382884] ffffffff812e12b1 ffff88001bd882c0 0000000100000000 0000000000001000
[13034.383972] 0000000000000000 ffff88025d9338d8 ffffffffa0f0a878 ffff88025d933968
[13034.385076] ffffffffa0f0ae3a ffff88025d86b968 ffff88001bd882c0 0000000000000000
[13034.386238] Call Trace:
[13034.386571] [<ffffffff812e12b1>] ? __rb_insert_augmented+0xe1/0x1d0
[13034.387459] [<ffffffffa0f0a878>] drm_mm_interval_tree_add_node+0xc8/0xe0 [drm]
[13034.388468] [<ffffffffa0f0ae3a>] drm_mm_insert_node_in_range+0x23a/0x4a0 [drm]
[13034.389495] [<ffffffffa0fe5d7f>] i915_gem_gtt_insert+0xaf/0x1b0 [i915]
[13034.390421] [<ffffffffa0ffb771>] __i915_vma_do_pin+0x381/0x490 [i915]
[13034.391348] [<ffffffffa10039b2>] logical_render_ring_init+0x2f2/0x3b0 [i915]
[13034.392351] [<ffffffffa1001e40>] ? gen8_init_rcs_context+0x50/0x50 [i915]
[13034.393305] [<ffffffffa0ffdbcc>] intel_engines_init+0x4c/0xc0 [i915]
[13034.394205] [<ffffffffa0fed5b3>] i915_gem_init+0x183/0x520 [i915]
[13034.395049] [<ffffffffa106ab80>] ? intel_setup_gmbus+0x210/0x290 [i915]
[13034.395966] [<ffffffffa0f073ae>] ? drm_irq_install+0xbe/0x140 [drm]
[13034.396867] [<ffffffffa0fa895d>] i915_driver_load+0xb6d/0x1660 [i915]
[13034.397775] [<ffffffff814a1330>] ? of_clk_set_defaults.cold.1+0x87/0x87
[13034.398759] [<ffffffffa0fb35b2>] i915_pci_probe+0x22/0x40 [i915]
[13034.399630] [<ffffffff813207b0>] pci_device_probe+0x90/0x100
[13034.400441] [<ffffffff813b5113>] driver_probe_device+0x1c3/0x280
[13034.401293] [<ffffffff813b5249>] __driver_attach+0x79/0x80
[13034.402024] [<ffffffff813b51d0>] ? driver_probe_device+0x280/0x280
[13034.402901] [<ffffffff813b32e9>] bus_for_each_dev+0x69/0xa0
[13034.403735] [<ffffffff813b4a59>] driver_attach+0x19/0x20
[13034.404492] [<ffffffff813b46ac>] bus_add_driver+0x19c/0x1e0
[13034.405304] [<ffffffffa10e2000>] ? 0xffffffffa10e2000
[13034.405984] [<ffffffff813b59eb>] driver_register+0x6b/0xc0
[13034.406772] [<ffffffff8131f251>] __pci_register_driver+0x41/0x50
[13034.407644] [<ffffffffa10e2029>] i915_init+0x29/0x4a [i915]
[13034.408447] [<ffffffff81000340>] do_one_initcall+0x80/0x130
[13034.409263] [<ffffffff811616e9>] ? __vunmap+0x99/0xf0
[13034.409958] [<ffffffff8116f9cd>] ? kfree+0x13d/0x160
[13034.410679] [<ffffffff810bdb3b>] do_init_module+0x5b/0x1d0
[13034.411469] [<ffffffff810bf914>] load_module+0x1be4/0x2080
[13034.412257] [<ffffffff810bc7c0>] ? __symbol_put+0x40/0x40
[13034.412993] [<ffffffff811899bc>] ? kernel_read+0x3c/0x50
[13034.413760] [<ffffffff810bff6d>] SYSC_finit_module+0x7d/0xa0
[13034.414597] [<ffffffff810bffa9>] SyS_finit_module+0x9/0x10
[13034.415422] [<ffffffff8156c84a>] entry_SYSCALL_64_fastpath+0x1e/0x8e
[13034.416327] Code: Bad RIP value.
[13034.416828] RIP [< (null)>] (null)
[13034.417581] RSP <ffff88025d9338a0>
[13034.418058] CR2: 0000000000000000
[13034.418553] ---[ end trace 954c416bf4bfb73b ]---
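A trace like the one above is easier to discuss in a GitHub issue once it is reduced to "which functions, in which modules". The following is only a rough sketch in Python, assuming the 4.4-style frame format seen in this log (`[<address>] symbol+0xoff/0xsize [module]`, with `?` marking frames the kernel considers unreliable). The names `Frame`, `parse_call_trace` and `modules_in_trace` are made up for illustration and are not part of arpl or any kernel tooling.

```python
import re
from typing import List, NamedTuple


class Frame(NamedTuple):
    address: str    # 16 hex digits as printed by the kernel
    function: str   # symbol name without the +0xoff/0xsize suffix
    module: str     # "" when the symbol lives in the kernel image itself
    reliable: bool  # False when the kernel printed "?" before the symbol


# Matches e.g. "[<ffffffffa0fe5d7f>] i915_gem_gtt_insert+0xaf/0x1b0 [i915]".
# Frames without a symbol (a bare address) are skipped on purpose.
FRAME_RE = re.compile(
    r"\[<(?P<addr>[0-9a-f]{16})>\]\s+(?P<q>\?\s+)?"
    r"(?P<func>[A-Za-z_][\w.]*)\+0x[0-9a-f]+/0x[0-9a-f]+"
    r"(?:\s+\[(?P<mod>[A-Za-z_]\w*)\])?"
)


def parse_call_trace(log: str) -> List[Frame]:
    """Extract every symbolised stack frame from a dmesg excerpt."""
    return [
        Frame(m.group("addr"), m.group("func"), m.group("mod") or "",
              m.group("q") is None)
        for m in FRAME_RE.finditer(log)
    ]


def modules_in_trace(frames: List[Frame]) -> List[str]:
    """Loadable modules on the reliable frames, in order of first appearance."""
    seen: List[str] = []
    for frame in frames:
        if frame.reliable and frame.module and frame.module not in seen:
            seen.append(frame.module)
    return seen
```

Fed the log above, `modules_in_trace(parse_call_trace(text))` should list only `drm` and `i915`, which fits the reading that the oops happens inside the backported graphics modules while `insmod` loads them. It does not say why the instruction pointer ended up NULL.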

-

Linux DiskStation 4.4.180+ #42962 SMP Sat Sep 3 22:22:17 CST 2022 x86_64 GNU/Linux synology_geminilake_920+

should be the same as before. it kernel panics and stops the vm for me too, i could not figure out what it was until i rebuilt arpl without the igpu drivers, and it boots fine now.

-

@fbelavenuto i can confirm that 0.4a5 shows no display output on esxi, stuck on booting kernel.. alpha4 works.

-

Develop and refine the DVA1622 loader

pigr8 replied to pocopico's topic in Developer Discussion Room

nope, not working, it's physically not wired/disabled even if the igpu is present on that cpu.. it was an hp choice, you cannot even see it in esxi. the same cpu on a B75 mainboard has the igpu exposed and passthrough works.

-

Develop and refine the DVA1622 loader

pigr8 replied to pocopico's topic in Developer Discussion Room

i have an E3-1265L on my Gen8 (Ivy Bridge) and it has an iGPU (HD2500) with quicksync, but on the Gen8 you cannot pass through the iGPU anyway since it's disabled/not used (because of the Matrox).

-

RedPill - the new loader for 6.2.4 - Discussion

pigr8 replied to ThorGroup's topic in Developer Discussion Room

I'm perfectly aware that baremetal and passthrough/rdm drives work, what i was talking about is a completely different scenario and issue.

-

RedPill - the new loader for 6.2.4 - Discussion

pigr8 replied to ThorGroup's topic in Developer Discussion Room

i was talking about virtual nvme drives, are your drives passed through or are they virtual?

-

RedPill - the new loader for 6.2.4 - Discussion

pigr8 replied to ThorGroup's topic in Developer Discussion Room

does anyone know if the fake smart data shim for hdd/ssd in redpill can somehow be implemented for virtual nvme disks too? dsm won't allow a virtual nvme vmdk to be used as cache because it reports a critical error on smart lifespan. from what i understand that shim was never implemented in redpill.. am i correct?

-

oh yeah i'm not gonna bother with that thing either.. i'm gonna try to see if tdarr is using quicksync too. edit: also, to make the iGPU passthrough work correctly in ESXi i had to issue "esxcli system settings kernel set -s vga -v FALSE" from the terminal and reboot the hypervisor.. it disables the ESXi video terminal function (no hdmi/dp output once booted) but it lets the igpu be passed to a vm without issues. multi-vm passthrough is not supported tho, but that's probably related to an Intel limitation.

-

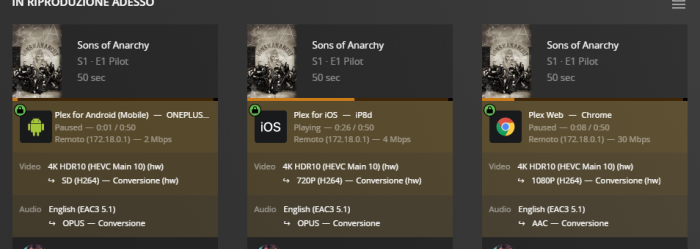

the gpu passthrough was working fine on Windows (detection, driver install and so on) so it could not be a hypervisor problem (VMware ESXi 7.0f3), vt-d and vt-x enabled. i could not figure out why it says "Killed" in the Syno terminal, and it still does. i changed the ARPL boot from bios to efi (the hypervisor was already efi boot) and that's actually the only thing i did (besides fixing the wrong *.bin header files problem in arpl-addons), recreated the docker container with /dev/dri mapped (permissions correctly fixed), enabled hw transcoding and encoding, and now it shows the damn (hw) while streaming. i have to spin up a DVA test vm to see if face recognition also works in Surveillance Station, it seems the ds920+ i'm using does not have it, bummer. i did not test Video Station since i don't actually use it, but i guess it "should" also work.
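For anyone repeating the /dev/dri mapping step, a quick check from inside the container can tell whether the render node is visible and accessible before touching the transcoding settings. This is only a minimal sketch in Python, assuming the usual Linux DRM naming (`card*` and `renderD*` entries under `/dev/dri`). The function names are hypothetical, and being able to open the node does not by itself prove that Quick Sync transcoding will work.

```python
import os
from pathlib import Path
from typing import Dict, List


def list_dri_nodes(dri_dir: str = "/dev/dri") -> Dict[str, List[str]]:
    """Group the entries of a DRI directory into 'card' and 'render' nodes."""
    nodes: Dict[str, List[str]] = {"card": [], "render": []}
    root = Path(dri_dir)
    if not root.is_dir():
        # Nothing mapped: the i915 driver is not loaded, or the container
        # was created without the device mapping.
        return nodes
    for entry in sorted(root.iterdir()):
        if entry.name.startswith("renderD"):
            nodes["render"].append(str(entry))
        elif entry.name.startswith("card"):
            nodes["card"].append(str(entry))
    return nodes


def usable_render_nodes(dri_dir: str = "/dev/dri") -> List[str]:
    """Render nodes the current user can open for reading and writing."""
    return [
        path
        for path in list_dri_nodes(dri_dir)["render"]
        if os.access(path, os.R_OK | os.W_OK)
    ]
```

An empty result from `usable_render_nodes()` while `list_dri_nodes()` still shows a `renderD*` entry would point at the permission problem mentioned above rather than at a missing driver.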

-

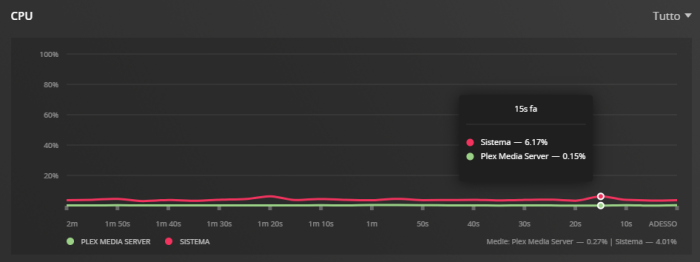

i finally got it working.. multiple streams from different devices at once, and cpu utilization is really low on an i3-10100T at stock frequency.

-

sure, put the sataportmap in cmdline
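To check what actually ended up on the kernel command line after booting, something like the sketch below could be run against the contents of `/proc/cmdline`. It is a minimal Python example under two assumptions that are not stated in the post: that the parameter is spelled `SataPortMap=` and that each decimal digit gives the number of ports for one SATA controller, in order. The helper names are invented for illustration.

```python
import re
from typing import List, Optional


def find_sataportmap(cmdline: str) -> Optional[str]:
    """Return the raw SataPortMap value from a kernel command line, if any."""
    match = re.search(r"(?:^|\s)SataPortMap=(\S+)", cmdline)
    return match.group(1) if match else None


def ports_per_controller(value: str) -> List[int]:
    """Split a SataPortMap value into one port count per controller.

    Assumes one decimal digit per controller; anything else is rejected
    instead of being guessed at.
    """
    if not re.fullmatch(r"[0-9]+", value):
        raise ValueError(f"unexpected SataPortMap value: {value!r}")
    return [int(digit) for digit in value]


# Typical use on the booted box:
#   with open("/proc/cmdline") as fh:
#       value = find_sataportmap(fh.read())
#   if value is not None:
#       print(ports_per_controller(value))
```

With a command line containing `SataPortMap=58`, the second helper returns `[5, 8]`, i.e. five ports on the first controller and eight on the second under the assumption above.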