cwiggs

Member

Posts: 36

-

Nice, thank you for doing this; I'm sure it will help a lot of people. The download link isn't working for me. Do you have to actually share the file/folder?

-

If it's in the ZSTD format, then it is already compressed with the zstd algorithm: https://pve.proxmox.com/pve-docs/chapter-vzdump.html I don't know the details here, beyond a vague understanding that the DSM software contains Synology IP (intellectual property) once it has been set up. I'm not sure whether that IP gets in when you include the .pat file, just after TCRP builds the img, or at some other time.
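One way to confirm that a backup is already zstd-compressed is to look at its first four bytes: zstd frames start with the magic number 0xFD2FB528, stored little-endian as `28 b5 2f fd`. Below is a minimal POSIX sh sketch. The function name `is_zstd` is hypothetical, and the file name in the comment is only an example. If the zstd tool is installed, `zstd -t FILE` will also test the file.

```shell
#!/bin/sh
# Hypothetical helper: succeed if FILE starts with the zstd frame magic number.
# zstd frames begin with 0xFD2FB528, stored little-endian as the bytes 28 b5 2f fd.
is_zstd() (
    # Dump the first 4 bytes as hex, drop spaces/newlines, compare to the magic.
    magic=$(od -An -tx1 -N4 "$1" | tr -d ' \n')
    [ "$magic" = "28b52ffd" ]
)

# Example usage (file name is illustrative only):
#   is_zstd vzdump-qemu-100-2023_01_01-00_00_00.vma.zst && echo "already zstd-compressed"
```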

-

Did you compress the backup when you created it? 3.3GB isn't too big IMO; there isn't an inherent downside to it being "too big". Do keep in mind that the backup might contain IP-protected data that Synology Inc. wouldn't like.
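For a Proxmox backup, the file extension usually answers the compression question, because vzdump appends `.zst`, `.gz` or `.lzo` to compressed archives. The sketch below assumes the standard vzdump naming. `backup_compression` is a hypothetical helper, and the VM ID and storage name in the comment are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: guess a vzdump archive's compression from its file name.
backup_compression() {
    case "$1" in
        *.zst) echo zstd ;;
        *.gz)  echo gzip ;;
        *.lzo) echo lzo ;;
        *)     echo none ;;
    esac
}

# To create a compressed backup in the first place (VM ID 100 and storage
# "local" are placeholders for your own setup):
#   vzdump 100 --compress zstd --mode snapshot --storage local
```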

-

Question about the XPEnology 918+ NAS

cwiggs replied to estrichleger's question in General Questions

You should be able to upgrade. There are pros and cons to upgrading; only you will know if it's worth it, since only you know how you use the server. If you search around on this forum, there are guides on how to upgrade.

More findings: I rebuilt the storage pool as a "basic" array with ext4 as the filesystem. Here is what I then did to grow the vdisk: `sudo parted /dev/sdb resizepart 3 100%`, then `sudo mdadm --grow /dev/md2 --size=max`, then `sudo reboot`. After the reboot, log in to DSM > Storage Manager > Storage Pool > Expand. So it looks like you don't need to take any DSM service offline (`synostgvolume --unmount -p volume1`) in order to grow a virtual disk. It would be nice to be able to do all of this in the CLI or all of it in the GUI, but for now that doesn't look possible.
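You can wrap the grow steps for a "basic" pool in a small dry-run script, which lets you review the commands before anything touches the disk. This is a sketch under a few assumptions: partition 3 and `/dev/md2` are used as in the post, and the vdisk was already enlarged on the host (for example with `qm resize`; the VM ID and disk name shown are placeholders). `run` and `grow_basic_vdisk` are hypothetical names.

```shell
#!/bin/sh
# Sketch of the "basic" pool grow steps, with a dry-run switch.
# Assumptions: data partition is number 3 and the md array is /dev/md2, as in
# the post; run inside DSM as root. Grow the vdisk on the host first, e.g.:
#   qm resize 100 sata1 +16G    (VM ID and disk name are placeholders)

DRY_RUN=${DRY_RUN:-1}

run() {
    # Always print the command; only execute it when DRY_RUN=0.
    echo "+ $*"
    if [ "$DRY_RUN" = 0 ]; then
        "$@"
    fi
}

grow_basic_vdisk() (
    disk=$1   # e.g. /dev/sdb
    md=$2     # e.g. /dev/md2
    run parted "$disk" resizepart 3 100%
    run mdadm --grow "$md" --size=max
    # The post reboots here, then finishes in
    # DSM > Storage Manager > Storage Pool > Expand.
)
```

With the default `DRY_RUN=1`, the script only prints the commands. Set `DRY_RUN=0` to actually run them, then reboot and finish the expansion in Storage Manager as described above.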

-

More findings: when you SSH in and check, the JBOD type in DSM turns out to be just a "linear" mdadm array. It seems you can't grow a linear mdadm array: `sudo mdadm --grow /dev/md2 --size=max` fails with `mdadm: Cannot set device size in this type of array.` So with JBOD, the only way to add storage space is to attach another vdisk and add it to the JBOD array. That is currently how I do it with DSM 6, but IMO it isn't ideal.
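Before trying `mdadm --grow`, you can check whether the array is linear. The sketch below reads `mdadm --detail` output on stdin. `md_raid_level` and `can_grow_size` are hypothetical helpers. `can_grow_size` only rules out `linear`, which is the case hit above; it does not claim that every other level can be grown.

```shell
#!/bin/sh
# Hypothetical helper: print the "Raid Level" field from `mdadm --detail` on stdin.
md_raid_level() {
    awk -F' : ' '/Raid Level/ { gsub(/ /, "", $2); print $2 }'
}

# Fails for linear (JBOD) arrays, where `mdadm --grow --size=max` is rejected.
# Other RAID levels are not checked here.
can_grow_size() {
    [ "$(md_raid_level)" != "linear" ]
}

# Usage on DSM:
#   sudo mdadm --detail /dev/md2 | can_grow_size || echo "linear/JBOD: attach another vdisk instead"
```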

-

After failing to resize a virtual disk that used the "basic" RAID level and ext4, I tried the "basic" RAID level with btrfs. Interestingly, the `btrfs` command seems to be missing from the CLI on 7.1.1; however, I was able to expand the filesystem using the GUI. Here is how: `sudo synostgvolume --unmount -p volume1` (not 100% sure we need this command), then `parted /dev/sda resizepart 3 100%`, then `mdadm --grow /dev/md2 --size=max`, then `reboot`. After it reboots, go into DSM > Storage Manager > Storage Pool > Expand. I assume we could do the same thing with ext4, but I haven't tried it.
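The last step differs between ext4 and btrfs. The sketch below prints the matching online-grow command for a given filesystem type. The helper name is hypothetical. `resize2fs DEVICE` and `btrfs filesystem resize max MOUNTPOINT` are the standard tools, but as noted above, the `btrfs` binary may not be present on DSM 7.1.1, so the GUI may still be needed.

```shell
#!/bin/sh
# Hypothetical helper: print the command that grows a mounted filesystem
# to fill its (already enlarged) device.
fs_resize_cmd() (
    fstype=$1 dev=$2 mnt=$3
    case "$fstype" in
        ext4)  echo "resize2fs $dev" ;;
        btrfs) echo "btrfs filesystem resize max $mnt" ;;
        *)     echo "unsupported filesystem: $fstype" >&2; exit 1 ;;
    esac
)

# The filesystem type of /volume1 can be read from `mount` output on DSM.
# Example: fs_resize_cmd ext4 /dev/vg1/volume_1 /volume1
```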

-

I ran into the same issue and just skipped the `mdadm --stop` command. Also, after you reboot you will have to expand the filesystem via the GUI, since in 7.1.1 the `btrfs` command seems to be missing from the CLI.

-

How to expand volume size in DSM 7 installed on esxi?

cwiggs replied to W25805's topic in Developer Discussion Room

"Basic" and JBOD are two different types of "RAID" levels in DSM. AFAIK "basic" is actually RAID1 with only one drive (the second member simply isn't attached). If you add a second drive later, you can attach it, and DSM then considers it RAID1.
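To tell the two apart from the CLI, the `mdadm --detail` fields seem to be enough, based on what this thread observed: JBOD shows as `linear`, and basic shows as `raid1` with one device. This heuristic is an assumption drawn from these posts, not from Synology documentation. `dsm_pool_type` is a hypothetical name.

```shell
#!/bin/sh
# Heuristic (assumption based on this thread, not Synology documentation):
#   Raid Level linear            -> jbod
#   Raid Level raid1, 1 device   -> basic
# Reads `mdadm --detail /dev/mdN` output on stdin.
dsm_pool_type() {
    awk -F' : ' '
        /Raid Level/   { gsub(/ /, "", $2); level = $2 }
        /Raid Devices/ { gsub(/ /, "", $2); n = $2 }
        END {
            if (level == "linear") print "jbod"
            else if (level == "raid1" && n + 0 == 1) print "basic"
            else print level " (" n " devices)"
        }'
}

# Usage on DSM: sudo mdadm --detail /dev/md2 | dsm_pool_type
```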

Looking through the Synology docs, it doesn't seem like there is a good way to grow a virtual disk via the DSM GUI. That makes sense, since official DSM systems don't run the main DSM on vdisks. There is some talk on the forum about how to grow a vdisk (usually with ESXi), but those posts look older. These tests are on DVA3221 7.1.1-42962 with 1 vdisk in a "basic" storage pool. Here is some info on growing a virtual disk:

Check which volume we are dealing with (in this example it's a basic storage pool): `df | fgrep volume1` shows `/dev/mapper/cachedev_0 21543440 107312 21317344 1% /volume1`.

It looks like we are using LVM, so let's check the physical volume with `sudo pvdisplay`: PV Name `/dev/md2`, VG Name `vg1`, PV Size `21.77 GiB / not usable 3.00 MiB`, Allocatable `yes`, PE Size `4.00 MiB`, Total PE `5573`, Free PE `194`, Allocated PE `5379`, PV UUID `TmLYpo-38Ky-3Ycm-hUqn-n2M7-u19B-ooOJpo`.

Now we can check the "RAID" array with `sudo mdadm --detail /dev/md2`: Version `1.2`, Creation Time `Wed Dec 28 14:25:33 2022`, Raid Level `raid1`, Array Size `22830080 (21.77 GiB 23.38 GB)`, Used Dev Size `22830080 (21.77 GiB 23.38 GB)`, Raid Devices `1`, Total Devices `1`, Persistence `Superblock is persistent`, Update Time `Thu Dec 29 09:35:04 2022`, State `clean`, Active/Working/Failed/Spare Devices `1`/`1`/`0`/`0`, Name `nas04:2 (local to host nas04)`, UUID `d0a76bee:7701a3b8:9b3649e9:9f0dafc2`, Events `13`. The single member is `/dev/sdb3` (Number 0, Major 8, Minor 19, RaidDevice 0, State `active sync`).

Stop the DSM services with `sudo synostgvolume --unmount -p /volume1` (`syno_poweroff_task` used to be used for this). Resize the partition with `parted /dev/sdb resizepart 3 100%`, then resize the RAID array with `mdadm --grow /dev/md2 --size=max`. I'm not sure we need to `reboot`, but I don't know how to bring the services and volume back online otherwise. Once DSM is back up, you can log in, go to Storage Manager > Storage Pool and expand it there; however, we will stick with the CLI.
Expand the physical volume first with `sudo pvresize /dev/md2`, then extend the logical volume with `sudo lvextend -l +100%FREE /dev/vg1/volume_1`. Next, resize the actual filesystem (ext4) with `sudo resize2fs -f /dev/vg1/volume_1`. I got an error saying the device was busy. I tried using `synostgvolume` again but still wasn't able to resize it. Trying to resize via the GUI also gave an error and asked me to submit a support ticket.
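The `pvdisplay` numbers above also tell you how much room `lvextend` will have. Unallocated space is Free PE × PE Size, so before `pvresize` it was 194 × 4 MiB = 776 MiB. The sketch below does that arithmetic from `pvdisplay` output on stdin. `pv_free_mib` is a hypothetical helper, and it assumes PE Size is reported in MiB.

```shell
#!/bin/sh
# Hypothetical helper: print unallocated space in a PV, in MiB,
# from `pvdisplay` output on stdin. Assumes "PE Size" is in MiB.
pv_free_mib() {
    awk '
        /PE Size/ { pe = $3 }     # "PE Size   4.00 MiB" -> 4.00
        /Free PE/ { free = $3 }   # "Free PE   194"      -> 194
        END { printf "%d\n", free * pe }'
}

# Order used above: pvresize /dev/md2, check free space, then lvextend,
# then grow the filesystem.
#   sudo pvresize /dev/md2 && sudo pvdisplay /dev/md2 | pv_free_mib
```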

-

cwiggs started following Virtual disk Info

-

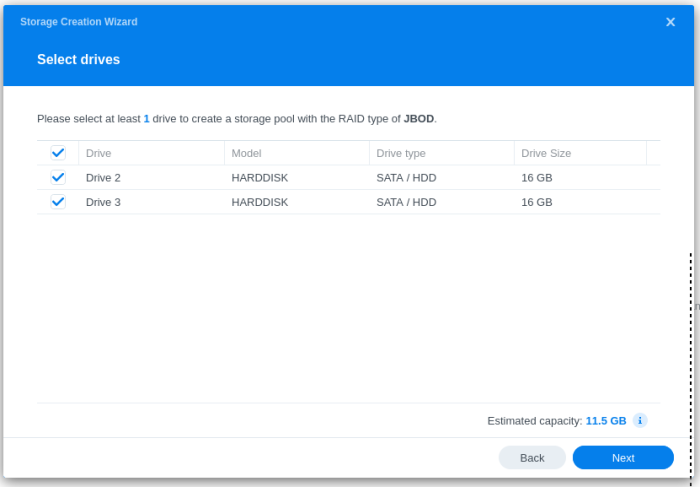

Hello! I'm looking at creating a new XPEnology VM in Proxmox 7 and am trying to work out the best virtual disk setup to use. Currently my DSM 6 install uses JBOD. When I need to expand, I just add a new virtual disk to the VM and add that vdisk to the JBOD "array". However, I would rather just increase the vdisk size in Proxmox and then expand it in DSM. I've searched this forum and haven't found much info, so maybe if I ask the questions here someone can answer. Here are my questions:

Is there a benefit to using SCSI vs SATA for your storage disks?

If you are already using ZFS in Proxmox, there isn't a need to use RAID or btrfs in DSM, so which RAID type/filesystem should I use?

How can I expand a virtual disk in DSM after things are installed on it, without data loss?

The vdisks live on an SSD; should I check the SSD emulation option in Proxmox?

Why is there so much storage loss with two 16G vdisks in JBOD mode? I would expect slightly less than 32G, but only ~10G seems to be usable. Why?

-

Yes, you can boot via SATA, although I'm not 100% sure all models are supported.

-

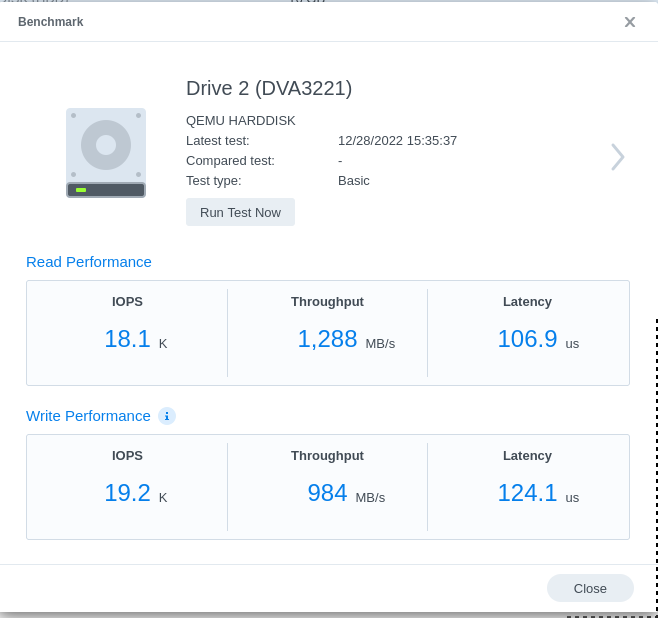

A lot to unpack here. I went through a similar path when I set up DSM years ago. Here are my thoughts:

Have you benchmarked the Gigabit Ethernet under Proxmox yet? I'd be curious to see whether it's a bottleneck.

I have a cluster of 3 HP EliteDesk 800 G3 Minis that I run Proxmox on. Two of the systems have 16G of RAM and one has 32G, for a total of 64G. All 3 systems use a single M.2 NVMe SSD with ZFS. The main issue with ZFS is that it uses more RAM than ext4, but it also adds a lot of useful features. I mention this because you only have 16G of RAM. If you only run a DSM VM with 4G of RAM, you might be alright using ZFS, but keep it in mind. You can limit the RAM ZFS uses, but you have more storage than I do, so it might not help: https://pve.proxmox.com/wiki/ZFS_on_Linux#sysadmin_zfs_limit_memory_usage TL;DR: if you have the RAM, use ZFS.

You are correct that you will lose the snapshot feature if you pass the disks through. I don't think there is a way to get both benefits, but I'd love to see actual benchmarks of passthrough vs virtual disks. I think you can do that, although I'm not sure what the pros/cons of passthrough + cache are. Keep in mind you can create a cache SSD with ZFS as well; more info here: https://pve.proxmox.com/wiki/ZFS_on_Linux

These are all great questions that I don't have the answer to. Side-by-side benchmarks on the same hardware are what I would want before making decisions. In general I've heard that SCSI is better than SATA in Proxmox, but I think that is just because you can attach more virtual disks with SCSI. I also know that you cannot boot TCRP off SCSI; you have to boot off either USB or SATA. But you could probably boot TCRP off SATA and attach additional virtual disks as SCSI. I've heard VirtIO is what you want to use, but again, I haven't seen data to support this.

From a security standpoint, I would think you should have encryption at the host level. I'm not sure about it from a performance standpoint.
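For the ZFS RAM limit linked above: as I understand the Proxmox docs, the rule of thumb for the ARC is at least 2 GiB base plus 1 GiB per TiB of storage, set via `zfs_arc_max` in bytes. The sketch below builds that modprobe line. `arc_max_line` is a hypothetical helper, and whole-TiB input is an assumption.

```shell
#!/bin/sh
# Hypothetical helper: build a zfs_arc_max line using the rule of thumb
# "2 GiB base + 1 GiB per TiB of storage". Input: pool size in whole TiB.
arc_max_line() (
    tib=$1
    gib=$((2 + tib))
    bytes=$((gib * 1024 * 1024 * 1024))
    echo "options zfs zfs_arc_max=$bytes"
)

# On the Proxmox host (as root), e.g. for a 4 TiB pool, then reboot:
#   arc_max_line 4 >> /etc/modprobe.d/zfs.conf
#   update-initramfs -u -k all    # needed when the root filesystem is ZFS
```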
DS920+ will not work with the default Proxmox CPU type (KVM64) because KVM64 doesn't support FMA3. It seems like a lot of people either change the CPU type to host (assuming the host CPU supports FMA3) or go with DS3622xs+. I'm very curious what you find out, as I have a lot of the same questions. Another question I had, which led me to this post: if you go with virtual disks, what is the best setup if you want to start with a small disk and grow it later? Currently my DSM setup has a 32G virtual disk attached, and when I run out of space I add another 32G virtual disk and add it to the storage pool in DSM. My preference would be to just increase the virtual disk and grow the disk/storage pool in DSM, but I haven't had very good luck with that. Btw, here is a benchmark of one of my virtual disks on an NVMe SSD. I'm not 100% sure why the write benchmark isn't there, but my read benchmark is much better than yours:

-

Can we get the OP to update the first post to mention that DVA3221 has 8 camera licenses, which gives DVA3221 an advantage over the other options if you want to use it for cameras? It would also be nice to include that the default CPU type in Proxmox is KVM64, which doesn't support FMA3.
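To check the FMA3 requirement before picking DS920+, look for the `fma` flag (Linux's name for FMA3). Run the check on the host, and again inside the VM after changing its CPU type. This is a sketch. `has_fma3` is a hypothetical helper, and the VM ID in the comment is a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the "flags" lines of a cpuinfo file
# (default /proc/cpuinfo) contain the whole word "fma", i.e. FMA3.
# `grep -w` keeps AMD's separate "fma4" flag from matching.
has_fma3() {
    grep '^flags' "${1:-/proc/cpuinfo}" | grep -qw fma
}

# On the Proxmox host:  has_fma3 && echo "host CPU supports FMA3"
# Then switch the VM to the host CPU type (VM ID 100 is a placeholder):
#   qm set 100 --cpu host
```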