jbesclapez

-

Posts: 188


Posts posted by jbesclapez

-

-

1 hour ago, nicoueron said:

Your setup is really weird... it now seems to see only one disk... I don't really know what to tell you.

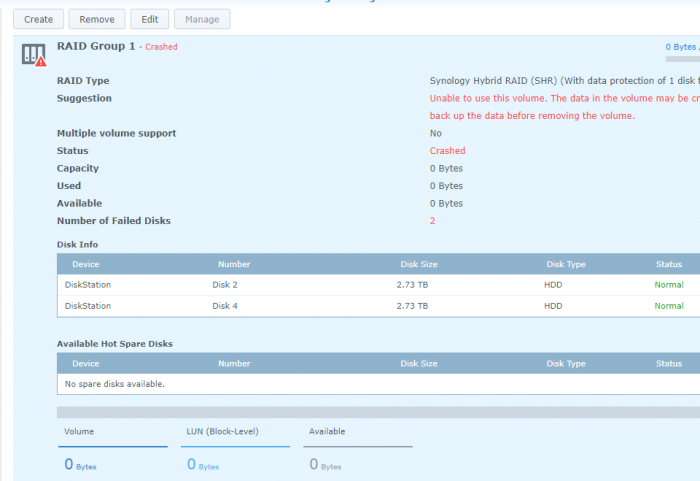

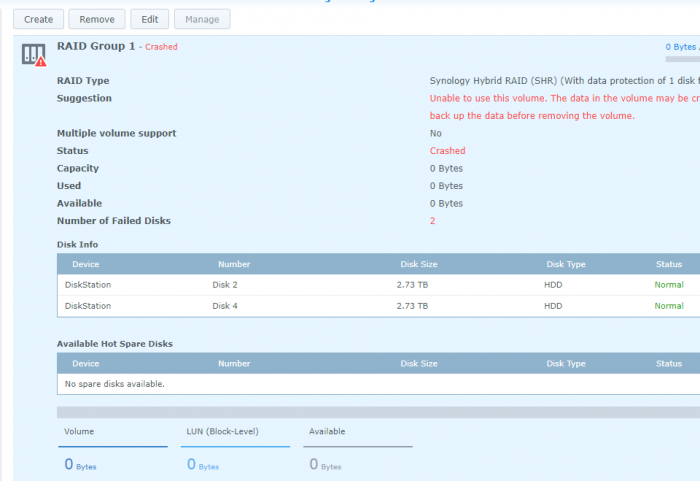

To sum up your problem: you have 4 disks that should, in theory, all belong to a single SHR RAID group, which tolerates the loss of only one disk. But here it looks like 2 of your disks are in a bad state. In your second-to-last screenshot the array is made up of only two disks... Frankly, I'm worried about your data 😕

If you switch the loader back to normal mode so that it boots into DSM, you could try running a file system repair from the command line, but the last time I did that it cost me quite a lot of data.

If you have a backup, I would favour a clean reinstall from scratch. But honestly, when DSM starts having errors on the OS partition, it means the disk(s) are starting to show serious signs of weakness (especially the one labelled Green)...

And thanks for helping me, by the way.

-

And how do I repair the file system?

-

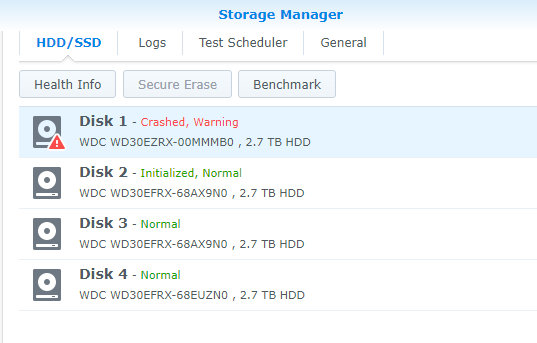

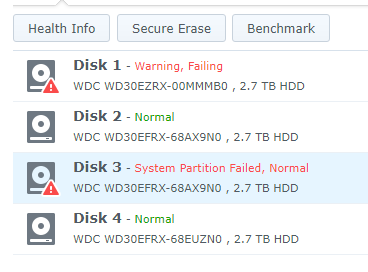

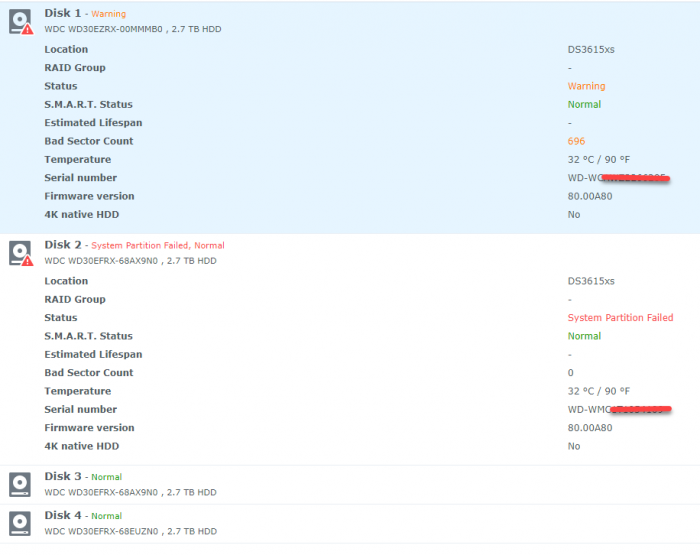

This is what I have before doing the reinstall. As you can see, disk 3 is in "system partition failed".

But earlier you summed up my problem well. I'll repeat, though, that I have not touched the data on the disks. As you can see, the status of the disks has changed over time. Does the disk number mean anything?

-

I'm going to reboot with my original USB key, but using the default boot entry, and I'll send you a new screenshot.

-

On 3/29/2020 at 1:15 PM, nicoueron said:

No, that's not necessary. When the loader starts, choose "reinstall". Make sure you download only the matching .pat file.

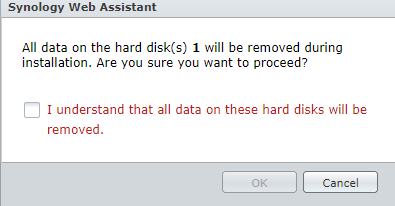

Whoa, this is what I get now after choosing reinstall.

Will I lose the data on disk 1? Or on volume 1? Or nothing?

-

-

Forgot to add this (and now in English, hehe):

- DSM Version : 6.1.6.15266 update 1

- Loader JunMod v1.02b - Installed on a motherboard whose BIOS I reset; I have since put the disks back in AHCI mode > Asus PQ5 SE2 with 4 HDDs

- extra.lzma: NO

-

10 hours ago, nicoueron said:

At first I thought disk 2 = disk 3, but maybe that's normal.

In any case, I think your DSM has gone completely haywire! A reinstall is mandatory to repair the OS partition on each of your disks. I don't see any other solution.

Nico, I need more details, please.

When you say a reinstall, you do mean from the boot USB key? With the same settings? Same serial number?

-

Forgot to add this :

- DSM Version : 6.1 (the latest, I think)

- Loader JunMod v1.02b - Installed on a motherboard whose BIOS I have since reset, but I put the disks back in AHCI mode > Asus PQ5 SE2. I have 4 HDDs.

- extra.lzma: NO

-

Hello there!

I really need your help to save my data on my volume.

I have an xpenology on 6.1 using Junboot 1.02b with disks in AHCI in bios.

There is one volume with 4 disks on a Raid SHR (with data protection of 1 disk).

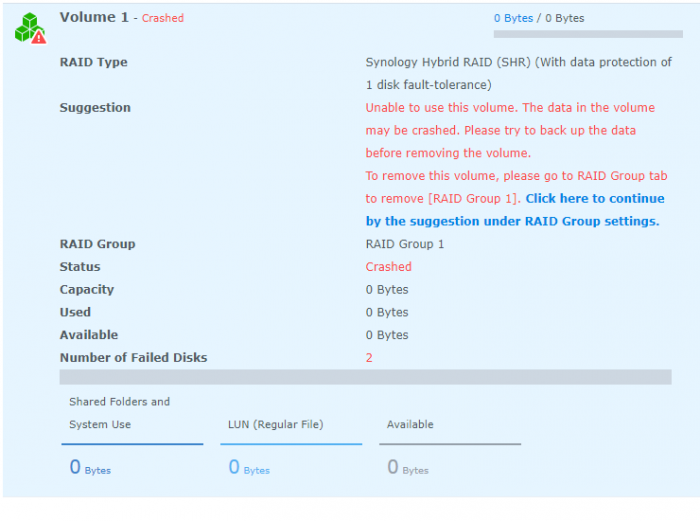

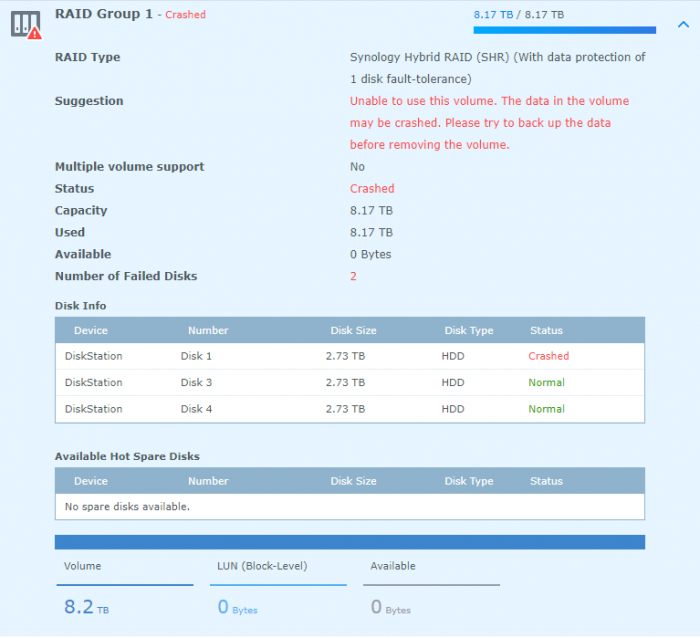

The Overview says DANGER, the status is Crashed with 2 failed disks

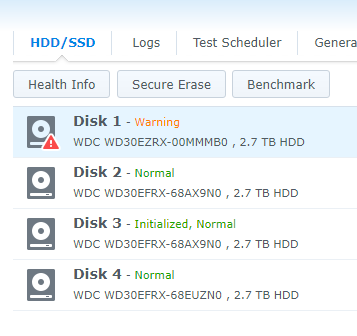

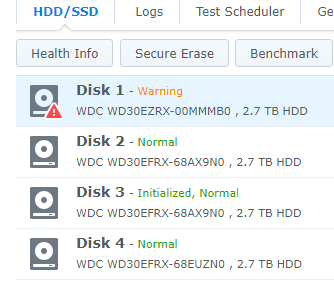

The HDD view says that Disk 1 is in Warning, Disk 2 is Normal, Disk 3 is Initialized, Normal and Disk 4 is Normal.

I did not delete any data on the drives. I think there is a way to recover it, which is why I am contacting you.

It is stupid, but all my life and my kids' videos are on those drives. I thought I was covered with a 1-disk fault tolerance. Lesson learned.

How can I recover that? Those 2 drives are now back but not in the RAID...

I appreciate your help, thanks.

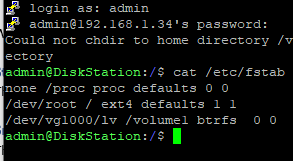

    admin@DiskStation:/$ cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md2 : active raid5 sdb5[2] sdd5[4]
          8776594944 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/2] [__UU]
    md1 : active raid1 sdb2[1] sdc2[0] sdd2[2]
          2097088 blocks [12/3] [UUU_________]
    md0 : active raid1 sdb1[2] sdc1[0] sdd1[3]
          2490176 blocks [12/3] [U_UU________]
    unused devices: <none>
    admin@DiskStation:/$
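
For anyone reading this output: in `/proc/mdstat` the bracketed pair such as `[4/2]` gives the number of members the array is defined with, followed by the number currently active. The helper below is only a minimal sketch for spotting arrays where the second number is lower than the first. The function name `degraded_arrays` is made up for illustration, and it assumes a shell with `awk` available on the NAS.

```shell
#!/bin/sh
# Sketch: list md arrays whose active member count is below the defined count.
# Reads /proc/mdstat-style text on stdin; only the "[total/active]" field is used.
# "degraded_arrays" is a hypothetical helper name, not a DSM or mdadm tool.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }              # remember which array the next line describes
        match($0, /\[[0-9]+\/[0-9]+\]/) {        # a field such as "[4/2]"
            split(substr($0, RSTART + 1, RLENGTH - 2), n, "/")
            if (n[2] + 0 < n[1] + 0)
                printf "%s: %d of %d members active\n", name, n[2], n[1]
        }
    '
}

# Usage on the NAS itself:
#   degraded_arrays < /proc/mdstat
```

Run against the `/proc/mdstat` that produced the output above, this would list md2 with 2 of 4 members active. It would also list md1 and md0 with 3 of 12, because those two system arrays are defined with 12 slots; md2 is the one that holds the data.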

-

On 3/22/2020 at 4:55 PM, nicoueron said:

Ouch... Disk 2 is in the Initialized state, as if it were not part of the SHR array, and disk 1 seems to be dead.

Try a reinstall with a new loader.

And now I have this:

I can't make sense of it any more.

-

Hi nicoueron,

Well, while trying to remove a disk I broke the connector pin... it's the first time that has ever happened to me...

The RAID GROUP no longer shows anything...

But I have not written to the disks... how do I recover the data? Do I have to recreate a volume, then?

Or should I rather boot into Ubuntu or something like that?

-

Hi,

OK, it boots now. It is really slow.

However, once it has booted and I log in to DSM 6.1.6, I see that my volume has crashed.

I find it really strange that all of a sudden my volume crashes and 2 disks fail at the same time.

I was using SHR RAID with 4 disks before the problem.

So why do we only see 3 disks in RAID GROUP 1 in the last screenshot?

Should I delete this volume and recreate it? Would I lose the data then?

Thanks

-

Hi everyone,

- DSM Version : 6.1 (the latest, I think)

- Loader JunMod v1.02b - Installed on a motherboard whose BIOS I have since reset > Asus PQ5 SE2. I have 4 HDDs.

- extra.lzma: NO

So here is the situation: I have just moved house. I unplugged my xpenology and plugged it back in at my new place.

Since then it boots, but I have a problem.

On the first boot I thought everything was fine, but I could no longer see the Syno on its static IP.

I unplugged all the disks, and then I could see it in Synology Assistant, but with the status "Not installed".

So why is there a problem when I connect the disks?

I have not changed anything else.

How do I avoid losing any non-system data?

If I do a reinstall, will I lose the data on the disks?

Thanks

-

Hello there,

I have difficulties with setting up the wireless access point. I see the wifi and I can connect to it but there is no internet.

My DS is using a bonded network card with the IP 192.168.1.10 and is connected by cable to a router at 192.168.1.1.

Can any of you please send me the settings I have to use?

Thanks

-

Can you try installing the "LAN Speed Test" software and running a few tests with it: https://totusoft.com/lanspeed

Did you do a fresh install? Can you do a fresh install and try?

-

Hi bel,

I had the same problem last week (and wrote a post about it).

I reset only my network settings because I did not want to reset my whole config.

My LAN was super slow (especially reading, not writing, which is a paradox).

I bought a dual-port Intel PRO LAN card and installed it in my NAS. The problem seemed solved.

I bonded (link aggregation) the 2 ports of my card to get good speed over my LAN.

This morning (and yesterday) I realised that instead of reading/writing at 700M I was at around 350M.

To be sure, I restarted my computer and redid the test: same result.

Then I restarted my NAS and... boom... back to 700M.

I think, but I have no proof, that JunLoader and DSM6 have a network issue.

Thanks

-

OK guys, I did a network reset using : sudo /usr/syno/sbin/synodsdefault --reset-config

However, it did not change a thing.

Then I decided to buy a new dual-port network card, an "IBM Intel PRO/1000 PT", and tested with it. It works great.

I bonded the 2 ports of this card and I reach 680 Mbps and 750 Mbps in the read/write tests. Great.

My conclusion is that JunLoader has a problem with the Realtek 8111C PCIe Gb LAN controller on the Asus P5A SE2.

Cheers

-

Anyone had this problem?

-

Flyride, perfect answer, you're the winner

-

Hello,

I would like to reset the server without losing the data. Everywhere it says to use a paper clip on the back of the server, but as I have an Xpenology, how would I do that?

In the control panel there is a factory reset button, "Erase All Data"... but it worries me to use it, as it says "All data", so I presume that includes all my settings.

Thanks for your help

-

Hello,

I am using the latest DSM v6.1.6-15266 with the latest Jun loader.

I recently upgraded from DSM5 with XpenologyBoot. Things were fine before...

I did a read/write test over the LAN and noticed that writing to the server ran at 420 Mbps while reading ran at 18 Mbps!!!

I do not know how to find the problem. Is it the loader? Is it a driver? Is it a DSM config?

Thanks for helping me.

-

Hello,

Has any of you done an update from 6.1.4 to 6.1.6 using the latest JunLoader 1.02b?

Did it work OK? Is it as straightforward as the other updates? Do you simply install it from inside the GUI of the Synology software?

Thanks

-

Hi Flyride and thanks for your reply.

It is not the reply I was expecting as I was looking for a package to install to run a benchmark test.

I totally understand your answer, but I suspect my hardware settings are not running my CPU the way they should, which is why I wanted to run my own test. You know you can slow down (or overclock) a CPU, and that is another reason why I wanted to do a benchmark.
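
Short of a real benchmark package, one quick check is the clock speed the kernel currently reports for each CPU. The helper below is only a sketch: `cpu_mhz` is a made-up name, and it assumes that DSM's kernel exposes the usual x86 Linux `cpu MHz` field in `/proc/cpuinfo`, which has not been verified on this box.

```shell
#!/bin/sh
# Sketch: print the clock speed reported for each logical CPU.
# Reads /proc/cpuinfo-style text on stdin and looks for "cpu MHz" lines.
# "cpu_mhz" is a hypothetical helper name used for illustration only.
cpu_mhz() {
    awk -F: '
        /^cpu MHz/ {
            gsub(/[ \t]/, "", $2)                 # strip the padding around the value
            printf "cpu %d: %s MHz\n", n++, $2    # n counts the entries in file order
        }
    '
}

# Usage on the NAS itself:
#   cpu_mhz < /proc/cpuinfo
```

A value well below the CPU's rated clock while the box is under load would hint at a BIOS or power-saving setting. It does not measure performance the way a benchmark would.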

DANGER : Raid crashed. Help me restore my data!

in General Post-Installation Questions/Discussions (non-hardware specific)

Posted

Hi IG-88,

I will try to follow the commands given by Flyride (and I hope he joins this thread too), which will give you an understanding of my situation. I am not that worried because I did not mess with the data; it is a combination of bad luck.

First, a bit of background.

I moved the server to another place. Then I restarted it and completely forgot that I had to plug 2 RJ45 cables into its Intel network card to see it on the network, as the connections were bonded for better speed. So I kept rebooting it and could not see it. My mistake was forgetting to plug both network cables back in. So I took out the network card, thinking it was ******* up, and went back to the default one that I had used previously. It now works like this.

While moving the network card, I broke the SATA connector of a drive, the one that is now in the "partition failed" state.

I also have a drive that is getting physically damaged, with SMART errors... I tried to repair that but it got stuck at 90%.

Then I recreated a USB key with the same JunMod and booted from it, but did not install the Synology PAT. I took out this new USB key and reverted to the previous one...

So now the NAS boots but I do not see my data.

So there are 4 drives, and here are the results of the commands:

    root@DiskStation:~# mdadm --detail /dev/md2
    /dev/md2:
    Version : 1.2
    Creation Time : Mon May 13 08:39:01 2013
    Raid Level : raid5
    Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
    Used Dev Size : 2925531648 (2790.00 GiB 2995.74 GB)
    Raid Devices : 4
    Total Devices : 2
    Persistence : Superblock is persistent
    Update Time : Sat Apr 4 16:00:56 2020
    State : clean, FAILED
    Active Devices : 2
    Working Devices : 2
    Failed Devices : 0
    Spare Devices : 0
    Layout : left-symmetric
    Chunk Size : 64K
    Name : VirDiskStation:2
    UUID : 75762e2e:4629b4db:259f216e:a39c266d
    Events : 15401

    Number   Major   Minor   RaidDevice   State
    -        0       0       0            removed
    -        0       0       1            removed
    2        8       21      2            active sync   /dev/sdb5
    4        8       53      3            active sync   /dev/sdd5

    root@DiskStation:~# mdadm --detail /dev/md1
    /dev/md1:
    Version : 0.90
    Creation Time : Sun Mar 29 11:48:30 2020
    Raid Level : raid1
    Array Size : 2097088 (2047.94 MiB 2147.42 MB)
    Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
    Raid Devices : 12
    Total Devices : 3
    Preferred Minor : 1
    Persistence : Superblock is persistent
    Update Time : Sat Apr 4 15:59:32 2020
    State : clean, degraded
    Active Devices : 3
    Working Devices : 3
    Failed Devices : 0
    Spare Devices : 0
    UUID : 147c15b1:a7d68154:3017a5a8:c86610be (local to host DiskStation)
    Events : 0.26

    Number   Major   Minor   RaidDevice   State
    0        8       18      0            active sync   /dev/sdb2
    1        8       34      1            active sync   /dev/sdc2
    2        8       50      2            active sync   /dev/sdd2
    -        0       0       3            removed
    -        0       0       4            removed
    -        0       0       5            removed
    -        0       0       6            removed
    -        0       0       7            removed
    -        0       0       8            removed
    -        0       0       9            removed
    -        0       0       10           removed
    -        0       0       11           removed

    root@DiskStation:~# mdadm --detail /dev/md0
    /dev/md0:
    Version : 0.90
    Creation Time : Sat Jun 4 18:58:23 2016
    Raid Level : raid1
    Array Size : 2490176 (2.37 GiB 2.55 GB)
    Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
    Raid Devices : 12
    Total Devices : 2
    Preferred Minor : 0
    Persistence : Superblock is persistent
    Update Time : Sat Apr 4 16:17:00 2020
    State : clean, degraded
    Active Devices : 2
    Working Devices : 2
    Failed Devices : 0
    Spare Devices : 0
    UUID : b46ca73c:a07c1c08:3017a5a8:c86610be (local to host DiskStation)
    Events : 0.2615542

    Number   Major   Minor   RaidDevice   State
    -        0       0       0            removed
    -        0       0       1            removed
    2        8       17      2            active sync   /dev/sdb1
    3        8       49      3            active sync   /dev/sdd1
    -        0       0       4            removed
    -        0       0       5            removed
    -        0       0       6            removed
    -        0       0       7            removed
    -        0       0       8            removed
    -        0       0       9            removed
    -        0       0       10           removed
    -        0       0       11           removed

    root@DiskStation:~# ls /dev/sd*
    /dev/sda  /dev/sdb1  /dev/sdb5  /dev/sdc1  /dev/sdc5  /dev/sdd1  /dev/sdd5
    /dev/sdb  /dev/sdb2  /dev/sdc   /dev/sdc2  /dev/sdd   /dev/sdd2
    root@DiskStation:~# ls /dev/md*
    /dev/md0  /dev/md1  /dev/md2
    root@DiskStation:~# ls /dev/vg*
    /dev/vga_arbiter

    mdadm --examine /dev/sd[bcdefklmnopqr]5 >>/tmp/raid.status

    Magic : a92b4efc
    Version : 1.2
    Feature Map : 0x0
    Array UUID : 75762e2e:4629b4db:259f216e:a39c266d
    Name : VirDiskStation:2
    Creation Time : Mon May 13 08:39:01 2013
    Raid Level : raid5
    Raid Devices : 4
    Avail Dev Size : 5851063680 (2790.00 GiB 2995.74 GB)
    Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
    Used Dev Size : 5851063296 (2790.00 GiB 2995.74 GB)
    Data Offset : 2048 sectors
    Super Offset : 8 sectors
    Unused Space : before=1968 sectors, after=384 sectors
    State : clean
    Device UUID : 7eee55dc:dbbf5609:e737801d:87903b6c
    Update Time : Sat Apr 4 16:00:56 2020
    Checksum : b039a921 - correct
    Events : 15401
    Layout : left-symmetric
    Chunk Size : 64K
    Device Role : Active device 2
    Array State : ..AA ('A' == active, '.' == missing, 'R' == replacing)

    /dev/sdc5:
    Magic : a92b4efc
    Version : 1.2
    Feature Map : 0x2
    Array UUID : 75762e2e:4629b4db:259f216e:a39c266d
    Name : VirDiskStation:2
    Creation Time : Mon May 13 08:39:01 2013
    Raid Level : raid5
    Raid Devices : 4
    Avail Dev Size : 5851063680 (2790.00 GiB 2995.74 GB)
    Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
    Used Dev Size : 5851063296 (2790.00 GiB 2995.74 GB)
    Data Offset : 2048 sectors
    Super Offset : 8 sectors
    Recovery Offset : 0 sectors
    Unused Space : before=1968 sectors, after=384 sectors
    State : clean
    Device UUID : 6ba575e4:53121f53:a8fe4876:173d11a9
    Update Time : Sun Mar 22 14:01:34 2020
    Checksum : 17b3f446 - correct
    Events : 15357
    Layout : left-symmetric
    Chunk Size : 64K
    Device Role : Active device 1
    Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

    /dev/sdd5:
    Magic : a92b4efc
    Version : 1.2
    Feature Map : 0x0
    Array UUID : 75762e2e:4629b4db:259f216e:a39c266d
    Name : VirDiskStation:2
    Creation Time : Mon May 13 08:39:01 2013
    Raid Level : raid5
    Raid Devices : 4
    Avail Dev Size : 5851063680 (2790.00 GiB 2995.74 GB)
    Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
    Used Dev Size : 5851063296 (2790.00 GiB 2995.74 GB)
    Data Offset : 2048 sectors
    Super Offset : 8 sectors
    Unused Space : before=1968 sectors, after=384 sectors
    State : clean
    Device UUID : fb417ce4:fcdd58fb:72d35e06:9d7098b5
    Update Time : Sat Apr 4 16:00:56 2020
    Checksum : dc9d9663 - correct
    Events : 15401
    Layout : left-symmetric
    Chunk Size : 64K
    Device Role : Active device 3
    Array State : ..AA ('A' == active, '.' == missing, 'R' == replacing)

And the command below does not do anything, or I don't know how to use it:

# mdadm --examine /dev/sd[bcdefklmnopqr]5 | egrep 'Event|/dev/sd'
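
The filter above is meant to pair each member partition with its event counter. If the line was pasted together with the leading `#`, the shell would treat it as a comment and print nothing, but that is only a guess. As a minimal sketch, the same pairing can be pulled from the output already saved in `/tmp/raid.status`. The function name `events_by_device` is made up for illustration, and the sketch assumes the usual `mdadm --examine` layout: a `/dev/sdX5:` header line followed by an `Events : N` line.

```shell
#!/bin/sh
# Sketch: print "device events" pairs from `mdadm --examine` output on stdin.
# "events_by_device" is a hypothetical helper name, not part of mdadm.
events_by_device() {
    awk '
        /^\/dev\/sd/ { dev = $1; sub(/:$/, "", dev) }    # header line such as "/dev/sdc5:"
        $1 == "Events" && $2 == ":" { print dev, $3 }     # line such as "Events : 15357"
    '
}

# Usage with the file written earlier:
#   events_by_device < /tmp/raid.status
```

In the output posted above, the first block (slot 2, which `mdadm --detail` lists as /dev/sdb5) and /dev/sdd5 both show 15401 events, while /dev/sdc5 shows 15357 and was last updated on Mar 22.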

What do you think the next step is?

Also, you are absolutely right: I will back up outside this NAS from now on, probably with the service you pointed me to.

Please continue helping/guiding me.

Thanks