jbesclapez

Posts posted by jbesclapez

-

    root@DiskStation:~# mdadm --examine /dev/sd[abcd]5 | egrep 'Event|/dev/sd'
    /dev/sdb5:
             Events : 15417
    /dev/sdc5:
             Events : 15357
    /dev/sdd5:
             Events : 15417
    root@DiskStation:~# mdadm --detail /dev/md2
    /dev/md2:
            Version : 1.2
      Creation Time : Mon May 13 08:39:01 2013
         Raid Level : raid5
         Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
      Used Dev Size : 2925531648 (2790.00 GiB 2995.74 GB)
       Raid Devices : 4
      Total Devices : 2
        Persistence : Superblock is persistent
        Update Time : Fri Apr 10 08:00:50 2020
              State : clean, FAILED
     Active Devices : 2
    Working Devices : 2
     Failed Devices : 0
      Spare Devices : 0
             Layout : left-symmetric
         Chunk Size : 64K
               Name : VirDiskStation:2
               UUID : 75762e2e:4629b4db:259f216e:a39c266d
             Events : 15417
        Number   Major   Minor   RaidDevice State
           -       0        0        0      removed
           -       0        0        1      removed
           2       8       21        2      active sync   /dev/sdb5
           4       8       53        3      active sync   /dev/sdd5

I have done a bit of research, and your "--re-add" should have worked, because the event counts are not far from each other. Maybe not close enough... no idea.
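The event-count comparison above can also be done mechanically. Here is a minimal sketch, assuming a POSIX shell with awk on the NAS. The helper name event_gap is hypothetical. It only parses text and writes nothing to disk. It does not say how large a gap mdadm will accept for --re-add or --force; that decision stays with mdadm.

```shell
# event_gap (hypothetical helper): read `mdadm --examine` text on stdin and
# print each member's event counter, then the spread between the newest and
# the oldest member. Read-only: it only parses text.
# Usage on the NAS: mdadm --examine /dev/sd[bcd]5 | event_gap
event_gap() {
  awk '
    /^\/dev\/sd[a-z]+[0-9]*:/ { dev = $1; sub(/:$/, "", dev) }
    /Events :/ { ev[dev] = $NF; order[++k] = dev }
    END {
      for (i = 1; i <= k; i++) {
        d = order[i]; e = ev[d] + 0
        print d, ev[d]
        if (i == 1 || e > max) max = e
        if (i == 1 || e < min) min = e
      }
      print "gap", max - min
    }'
}
```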

-

    root@DiskStation:~# mdadm /dev/md2 --re-add /dev/sdc5
    mdadm: --re-add for /dev/sdc5 to /dev/md2 is not possible

🤔

-

Here is the result

    root@DiskStation:~# mdadm --stop /dev/md2
    mdadm: stopped /dev/md2
    root@DiskStation:~# mdadm -v --assemble --force /dev/md2 --uuid 75762e2e:4629b4db:259f216e:a39c266d
    mdadm: looking for devices for /dev/md2
    mdadm: no recogniseable superblock on /dev/synoboot3
    mdadm: Cannot assemble mbr metadata on /dev/synoboot2
    mdadm: Cannot assemble mbr metadata on /dev/synoboot1
    mdadm: Cannot assemble mbr metadata on /dev/synoboot
    mdadm: no recogniseable superblock on /dev/md1
    mdadm: no recogniseable superblock on /dev/md0
    mdadm: No super block found on /dev/sdd2 (Expected magic a92b4efc, got 31333231)
    mdadm: no RAID superblock on /dev/sdd2
    mdadm: No super block found on /dev/sdd1 (Expected magic a92b4efc, got 00000131)
    mdadm: no RAID superblock on /dev/sdd1
    mdadm: No super block found on /dev/sdd (Expected magic a92b4efc, got 00000000)
    mdadm: no RAID superblock on /dev/sdd
    mdadm: No super block found on /dev/sdc2 (Expected magic a92b4efc, got 31333231)
    mdadm: no RAID superblock on /dev/sdc2
    mdadm: No super block found on /dev/sdc1 (Expected magic a92b4efc, got 00000131)
    mdadm: no RAID superblock on /dev/sdc1
    mdadm: No super block found on /dev/sdc (Expected magic a92b4efc, got 00000000)
    mdadm: no RAID superblock on /dev/sdc
    mdadm: No super block found on /dev/sdb2 (Expected magic a92b4efc, got 31333231)
    mdadm: no RAID superblock on /dev/sdb2
    mdadm: No super block found on /dev/sdb1 (Expected magic a92b4efc, got 00000131)
    mdadm: no RAID superblock on /dev/sdb1
    mdadm: No super block found on /dev/sdb (Expected magic a92b4efc, got 00000000)
    mdadm: no RAID superblock on /dev/sdb
    mdadm: Cannot read superblock on /dev/sda
    mdadm: no RAID superblock on /dev/sda
    mdadm: /dev/sdd5 is identified as a member of /dev/md2, slot 3.
    mdadm: /dev/sdc5 is identified as a member of /dev/md2, slot 1.
    mdadm: /dev/sdb5 is identified as a member of /dev/md2, slot 2.
    mdadm: no uptodate device for slot 0 of /dev/md2
    mdadm: added /dev/sdc5 to /dev/md2 as 1 (possibly out of date)
    mdadm: added /dev/sdd5 to /dev/md2 as 3
    mdadm: added /dev/sdb5 to /dev/md2 as 2
    mdadm: /dev/md2 assembled from 2 drives - not enough to start the array.

-

6 hours ago, flyride said:

That was going to be my next suggestion. But, are you sure there was not more output from the last command? For verbose mode, it sure didn't say very much. Can you post a mdstat please?

After that, if it still only has assembled with two instead of three drives, let's try:

    # mdadm --stop /dev/md2
    # mdadm -v --assemble --force /dev/md2 --uuid 75762e2e:4629b4db:259f216e:a39c266d

You asked me to post the mdstat. I restarted my server because md2 was stopped, and then I ran this:

    root@DiskStation:~# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md2 : active raid5 sdb5[2] sdd5[4]
          8776594944 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/2] [__UU]
    md1 : active raid1 sdb2[0] sdc2[1] sdd2[2]
          2097088 blocks [12/3] [UUU_________]
    md0 : active raid1 sdb1[2] sdd1[3]
          2490176 blocks [12/2] [__UU________]

So, should I retry the commands from post 39, and then the ones from post 41, after a reboot?
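In an mdstat line such as "[4/2] [__UU]", the first pair is the number of raid devices and the number currently working. Each "U" or "_" in the second field is one slot, either in sync or missing. Below is a sketch that pulls those two fields for one array, assuming awk is available. The helper name md_health is hypothetical, and it only reads text.

```shell
# md_health (hypothetical helper): from /proc/mdstat text on stdin, print
# "<raid devices>/<working> <slot map>" for the array named in $1.
# In the slot map, U = member in sync, _ = missing or failed slot.
# Usage on the NAS: md_health md2 < /proc/mdstat
md_health() {
  awk -v md="$1" '
    $1 == md && $2 == ":" { found = 1; next }
    found {
      # The status line following "mdN : ..." holds [n/m] and the slot map.
      for (i = 1; i <= NF; i++)
        if ($i ~ /^\[[0-9]+\/[0-9]+\]$/) { counts = $i; map = $(i + 1) }
      gsub(/\[|\]/, "", counts); gsub(/\[|\]/, "", map)
      print counts, map
      exit
    }'
}
```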

-

Should we use the array UUID, like this?

    # mdadm --assemble /dev/md2 --uuid 75762e2e:4629b4db:259f216e:a39c266d

-

3 hours ago, flyride said:

I'm also a bit perplexed about /dev/sdc not coming online with the commands we've used. But I think I know why it isn't joining the array - it has a "feature map" bit set which flags the drive as being in the middle of an array recovery operation. So it is reluctant to include the drive in the array assembly.
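The "feature map" mentioned here is the Feature Map field in mdadm --examine. /dev/sdc5 shows 0x2 there, together with "Recovery Offset : 0 sectors". Below is a sketch that decodes the low bits. The bit names are taken from the MD_FEATURE_* constants in the Linux kernel header md_p.h, and only the first four bits are covered. The helper name decode_feature_map is hypothetical.

```shell
# decode_feature_map (hypothetical helper): print the v1.x superblock feature
# bits set in a "Feature Map" value such as 0x2. Names follow the kernel's
# MD_FEATURE_* constants (md_p.h); only bits 1, 2, 4 and 8 are decoded here.
decode_feature_map() {
  fm=$(( $1 ))   # shell arithmetic accepts 0x-prefixed hex
  [ "$fm" -eq 0 ] && { echo "none"; return 0; }
  [ $(( fm & 1 )) -ne 0 ] && echo "bitmap-offset (write-intent bitmap present)"
  [ $(( fm & 2 )) -ne 0 ] && echo "recovery-offset (device was mid-recovery)"
  [ $(( fm & 4 )) -ne 0 ] && echo "reshape-active"
  [ $(( fm & 8 )) -ne 0 ] && echo "bad-blocks (bad block list not empty)"
  return 0
}
# Example: decode_feature_map 0x2
```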

In my opinion, zapping the superblocks is a last resort, to be used only when nothing else will work. There is a lot of consistency information embedded in the superblock (as evidenced by the --detail output) and the positional information of the disk within the stripe, and all of that is lost when we zero a superblock.
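Since the superblock holds that positional and consistency information, a cautious habit before anything destructive is to keep a copy of every member's mdadm --examine output. Here is a minimal sketch. The name save_md_metadata and the output path are hypothetical. It only reads superblocks. The file should go somewhere that survives a reboot (a file under /tmp may not) and ideally be copied off the NAS.

```shell
# save_md_metadata (hypothetical helper): write a timestamp plus the
# `mdadm --examine` output of every given member to OUTFILE, so device
# roles, chunk size, offsets and event counts can be looked up later.
# --examine only reads the superblock; nothing on the disks is changed.
# Usage on the NAS: save_md_metadata /root/md2-examine.txt /dev/sd[bcd]5
save_md_metadata() {
  out=$1; shift
  {
    date
    for dev in "$@"; do
      mdadm --examine "$dev"
    done
  } > "$out" 2>&1
}
```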

Before we go doing that, let's repeat the last command with verbose mode on and change the syntax a bit:

    mdadm --stop /dev/md2
    mdadm -v --assemble --scan --force --run /dev/md2 /dev/sdb5 /dev/sdc5 /dev/sdd5

If that doesn't work, we'll come up with something to clear the feature map bit.

Oh god... nothing gets the job done.

    root@DiskStation:~# mdadm --stop /dev/md2
    mdadm: stopped /dev/md2
    root@DiskStation:~# mdadm -v --assemble --scan --force --run /dev/md2 /dev/sdb5 /dev/sdc5 /dev/sdd5
    mdadm: /dev/md2 not identified in config file.
    mdadm: /dev/sdb5 not identified in config file.
    mdadm: /dev/sdc5 not identified in config file.
    mdadm: /dev/sdd5 not identified in config file.

-

-

1 hour ago, IG-88 said:

    mdadm --stop /dev/md2
    mdadm --assemble --force --verbose /dev/md2 /dev/sdc5 /dev/sdb5 /dev/sdd5
    mdadm --detail /dev/md2

Hi IG-88!

As you said, I think the bad drive failed and I never noticed. I have no idea why my other drive got out of sync.

Here are the commands I just did

    root@DiskStation:~# mdadm --stop /dev/md2
    mdadm: stopped /dev/md2
    root@DiskStation:~# mdadm --assemble --force --verbose /dev/md2 /dev/sdc5 /dev/sdb5 /dev/sdd5
    mdadm: looking for devices for /dev/md2
    mdadm: /dev/sdc5 is identified as a member of /dev/md2, slot 1.
    mdadm: /dev/sdb5 is identified as a member of /dev/md2, slot 2.
    mdadm: /dev/sdd5 is identified as a member of /dev/md2, slot 3.
    mdadm: no uptodate device for slot 0 of /dev/md2
    mdadm: added /dev/sdc5 to /dev/md2 as 1 (possibly out of date)
    mdadm: added /dev/sdd5 to /dev/md2 as 3
    mdadm: added /dev/sdb5 to /dev/md2 as 2
    mdadm: /dev/md2 assembled from 2 drives - not enough to start the array.
    root@DiskStation:~# mdadm --detail /dev/md2
    mdadm: cannot open /dev/md2: No such file or directory

Unfortunately, as you can see, it is not working. I will follow your other recommendation and wait for Flyride too!

I will also leave the NAS on.

-

7 hours ago, IG-88 said:

I haven't done this often and have not seen anything like this. /dev/sdc5 looked like it would be easy to force back into the array, as you already tried: stop /dev/md2, then "force" the drive back into the RAID.

That would have been my approach; it's the same as what you already tried:

    mdadm --stop /dev/md2
    mdadm --assemble --force /dev/md2 /dev/sd[bcd]5
    mdadm --detail /dev/md2

Doing more advanced steps would be experimental for me, and I don't like suggesting things I haven't tried myself before.

Here is the procedure for recreating the whole /dev/md2 instead of assembling it:

https://raid.wiki.kernel.org/index.php/RAID_Recovery

Drive 0 is missing (sda5), sdc5 is device 1 (odd, but that is what the examine status says), sdb5 is device 2 and sdd5 is device 3.

Used Dev Size : 5851063296 (2790.00 GiB 2995.74 GB) from the status; divided by two, that's 2925531648.
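The halving is a unit conversion. mdadm --examine reports sizes in 512-byte sectors, while the --size option of mdadm --create takes KiB:

$$
5851063296\ \text{sectors} \times \frac{512\ \text{B/sector}}{1024\ \text{B/KiB}} = \frac{5851063296}{2}\ \text{KiB} = 2925531648\ \text{KiB}
$$

This matches the "Used Dev Size : 2925531648" reported by mdadm --detail for /dev/md2.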

I came up with this to do it:

    mdadm --create --assume-clean --level=5 --raid-devices=4 --size=2925531648 /dev/md2 missing /dev/sdc5 /dev/sdb5 /dev/sdd5

It's a suggestion, nothing more (or is it less than a suggestion? What would you call that?)
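A caution on any --create line: every option not passed explicitly falls back to the installed mdadm's defaults. As far as I know, current mdadm defaults to a 512K chunk, while these superblocks record a 64K chunk, left-symmetric layout and metadata 1.2.

Below is a sketch that only prints (never runs) a --create line with that geometry copied from the mdadm --examine output posted in this thread (raid.status). The helper name print_create_cmd is hypothetical. It does not handle the data offset. The members report "Data Offset : 2048 sectors", and whether the installed mdadm would pick the same offset, or needs --data-offset, should be checked in man mdadm before anything is run. A --create rewrites the superblocks and cannot be undone.

```shell
# print_create_cmd (hypothetical helper): build, but do NOT run, an
# `mdadm --create` line whose geometry is copied from `mdadm --examine`
# text on stdin. Slots with no member in the input are written as "missing".
# The data offset is NOT handled; verify it separately before any create.
# Usage: print_create_cmd /dev/md2 < raid.status
print_create_cmd() {
  awk -v md="$1" '
    /^\/dev\/sd[a-z]+[0-9]*:/ { dev = $1; sub(/:$/, "", dev) }
    /Version :/       { meta = $NF }
    /Raid Level :/    { lvl = $NF; sub(/^raid/, "", lvl) }
    /Raid Devices :/  { n = $NF }
    /Chunk Size :/    { chunk = $NF; sub(/K$/, "", chunk) }
    /Layout :/        { layout = $NF }
    /Used Dev Size :/ { size = $5 / 2 }   # 512-byte sectors -> KiB
    /Device Role :/   { slot[$NF] = dev }
    END {
      cmd = sprintf("mdadm --create %s --assume-clean --metadata=%s --level=%s --raid-devices=%s --chunk=%s --layout=%s --size=%.0f", md, meta, lvl, n, chunk, layout, size)
      for (i = 0; i < n; i++)
        cmd = cmd " " ((i in slot) ? slot[i] : "missing")
      print cmd
    }'
}
```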

Edit: maybe try this before trying a create:

    mdadm --assemble --force --verbose /dev/md2 /dev/sdc5 /dev/sdb5 /dev/sdd5

Hello IG-88, and thanks for spending time looking into my problem.

Even though I can't wait to solve this problem, I am ready to give it the time needed to find the correct solution. Following your first recommendation, I will try not to do anything irreversible, since, as you said, it might damage the array further.

So, if I understand your post correctly, it is OK for me to try the first three commands below without creating more issues for the array? In other words, they are not irreversible and cannot cause more damage?

Would you recommend that I also get Flyride's opinion on the steps below?

Another question for you: do you recommend that I turn off my NAS when I am not running these commands? If so, will it affect the array?

    mdadm --stop /dev/md2
    mdadm --assemble --force /dev/md2 /dev/sd[bcd]5
    mdadm --detail /dev/md2

-

On 4/4/2020 at 7:12 PM, IG-88 said:

If flyride has some time to help you here, that's good for you; he's definitely better at this than I am.

Hi IG-88, would you mind stepping back into this thread, as Flyride is super busy these days? So far it is not too complicated, even for me.

I just want to be sure that I am not making any mistakes... Thanks!

-

1 hour ago, flyride said:

Hello, sorry I had to work (I work in health care so very busy lately).

These commands we are trying have not started the array yet, but you are no worse off. I don't quite understand why the drive hasn't unflagged, but let's try one more combination before telling it to create a new array metadata.

    # mdadm --stop /dev/md2
    # mdadm --assemble --run --force /dev/md2 /dev/sd[bcd]5

Hi there! I totally understand, since you work in health care. I am from Europe and we are in the same ******* here, even if it is slowing down in some countries. Stay safe!

Here are the results:

    root@DiskStation:~# mdadm --stop /dev/md2
    mdadm: stopped /dev/md2
    root@DiskStation:~# mdadm --assemble --run --force /dev/md2 /dev/sd[bcd]5
    mdadm: /dev/md2 has been started with 2 drives (out of 4).

-

5 or 10 minutes after I did that. Hope it helps:

    root@DiskStation:~# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md2 : active raid5 sdb5[2] sdd5[4]
          8776594944 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/2] [__UU]
    md1 : active raid1 sdb2[0] sdc2[1] sdd2[2]
          2097088 blocks [12/3] [UUU_________]
    md0 : active raid1 sdb1[2] sdd1[3]
          2490176 blocks [12/2] [__UU________]
    unused devices: <none>

-

17 minutes ago, flyride said:

Ok, the goal here is to flag the out of sequence drive for use. Try this:

    # mdadm --stop /dev/md2
    # mdadm --assemble --run /dev/md2 /dev/sd[bcd]5

Post results as before.

Here is the result

    root@DiskStation:~# mdadm --assemble --run /dev/md2 /dev/sd[bcd]5
    mdadm: /dev/md2 has been started with 2 drives (out of 4).

-

8 minutes ago, flyride said:

Yes, it's hard to do all this remotely and from memory.

    # mdadm --stop /dev/md2

then

    # mdadm --assemble --force /dev/md2 /dev/sd[bcd]5

Sorry for the false start.

Good to hear that. At least it proves I have done good homework LOL.

After running mdadm --stop, I get this:

    root@DiskStation:~# mdadm --assemble --force /dev/md2 /dev/sd[bcd]5
    mdadm: /dev/md2 assembled from 2 drives - not enough to start the array.

Is it bad? Now I will go and do some research about it... scary.

-

Should I have done a

    # mdadm --stop /dev/md2

before doing the assemble? (I did some research, and last time you did it like this... but I prefer asking you.)

-

2 hours ago, flyride said:

The pound sign I typed was to represent the operating system prompt and so you knew the command was to be run with elevated privilege. When you entered the command with a preceding pound sign, you made it into a comment and exactly nothing was done.
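The DSM root prompt itself ends in "#" (root@DiskStation:~#), which is why the forum convention works. Typed into the shell, though, a word that starts with an unquoted "#" begins a comment, and the rest of the line is ignored. Below is a small sketch of that rule. The function name comment_demo is hypothetical, and it runs two one-line scripts in a child sh.

```shell
# comment_demo (hypothetical helper): shows that a line starting with "#"
# is a comment and does nothing, while the same text without "#" runs.
comment_demo() {
  sh -c '# echo "typed with the prompt character: never runs"'
  sh -c 'echo "typed without it: runs"'
}
```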

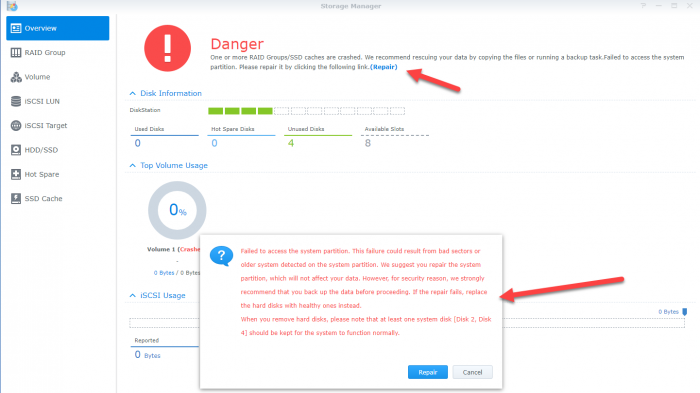

Please do not click that repair button right now. It won’t be helpful.

OK, I did the assemble before, and it seemed to be OK:

    root@DiskStation:~# mdadm --assemble --force /dev/md2 /dev/sd[bcd]5
    mdadm: /dev/sdb5 is busy - skipping
    mdadm: /dev/sdd5 is busy - skipping
    mdadm: Found some drive for an array that is already active: /dev/md2
    mdadm: giving up.

And then:

    root@DiskStation:~# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md2 : active raid5 sdb5[2] sdd5[4]
          8776594944 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/2] [__UU]
    md1 : active raid1 sdb2[0] sdc2[1] sdd2[2]
          2097088 blocks [12/3] [UUU_________]
    md0 : active raid1 sdb1[2] sdd1[3]
          2490176 blocks [12/2] [__UU________]
    unused devices: <none>
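The "busy - skipping" lines appear because md2 was still active from the earlier --run, so sdb5 and sdd5 were already claimed ("Found some drive for an array that is already active"). The stop therefore has to come before the assemble. Below is a sketch of a guard that checks /proc/mdstat first. The helper name md_is_active is hypothetical. The mdadm sequence is left commented out on purpose.

```shell
# md_is_active (hypothetical helper): succeed if /proc/mdstat text on stdin
# lists the array named in $1 as active.
md_is_active() {
  awk -v md="$1" '
    $1 == md && $2 == ":" && $3 == "active" { found = 1 }
    END { exit (found ? 0 : 1) }'
}
# Guarded order, as a sketch (uncomment only when you mean to run it):
# if md_is_active md2 < /proc/mdstat; then mdadm --stop /dev/md2; fi
# mdadm --assemble --force /dev/md2 /dev/sd[bcd]5
```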

-

3 minutes ago, flyride said:

The pound sign I typed was to represent the operating system prompt and so you knew the command was to be run with elevated privilege. When you entered the command with a preceding pound sign, you made it into a comment and exactly nothing was done.

Please do not click that repair button right now. It won’t be helpful.

Should it be assemble first?

mdadm --assemble --force /dev/md2 /dev/sd[bcd]5

-

1 minute ago, flyride said:

The pound sign I typed was to represent the operating system prompt and so you knew the command was to be run with elevated privilege. When you entered the command with a preceding pound sign, you made it into a comment and exactly nothing was done.

Please do not click that repair button right now. It won’t be helpful.

Shame on me. I should have noticed that. Sorry.

Update

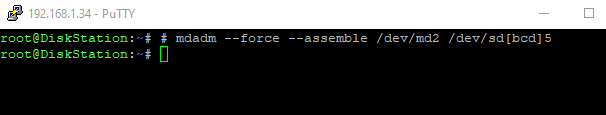

    root@DiskStation:~# mdadm --force --assemble /dev/md2 /dev/sd[bcd]5
    mdadm: --force does not set the mode, and so cannot be the first option.

-

-

5 minutes ago, flyride said:

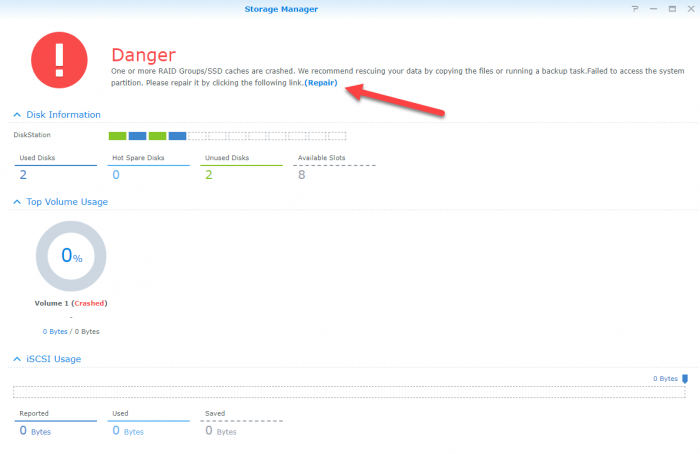

Well it looks like that drive 1 is not worth trying to use. Lots of bad sectors and no partitions with obvious data on them.

Let's try restarting your array in degraded mode using the remaining drives.

    # mdadm --force --assemble /dev/md2 /dev/sd[bcd]5

Post the output exactly.

I do not get anything from that command. What am I doing wrong?

-

Hi Flyride an

1 hour ago, flyride said:

When you say something like this, be very specific as to which disk it is. Is it disk #1 or disk #3? (please answer)

This is not too bad, there might be some modest data corruption (IG-88 quantified), but most files would be ok.

Do you know if your filesystem is btrfs or ext4? (please answer)

And please answer the two questions I asked in the first place.

Sorry for not being precise enough. I am still learning, and to be honest, it is not that simple.

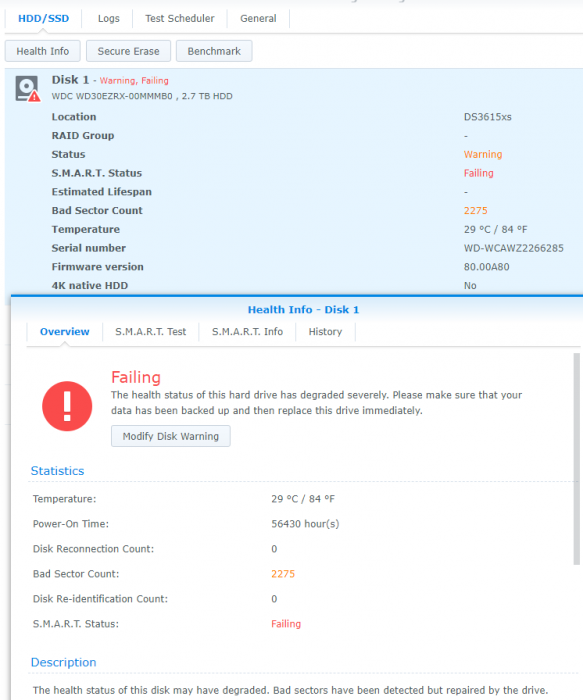

So, you asked me to be specific about the disk I ran the SMART test on. The problem is that DSM only gives me the disk model and serial: the model is WD30EZRX-00MMMB0 and the serial is WD-WCAWZ2266285, but that is all I can get. I tried the commands hwinfo --disk, sudo blkid and lsblk -f, but they do not work. I do not know how to get the UUID, which I think is what you need, right? What I am sure of is that DSM lists this disk as Disk 1.
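One way to tie a /dev/sdX name to the model and serial that DSM shows is smartctl -i, which prints "Device Model:" and "Serial Number:" lines for ATA drives. Below is a sketch, assuming smartctl is available on the NAS. The helper name disk_identity is hypothetical.

```shell
# disk_identity (hypothetical helper): print "model serial" from
# `smartctl -i` text on stdin (ATA drives report "Device Model:" and
# "Serial Number:" lines).
disk_identity() {
  awk -F': *' '
    /^Device Model:/  { model = $2 }
    /^Serial Number:/ { serial = $2 }
    END { print model, serial }'
}
# Usage on the NAS (assumes smartctl is installed):
# for d in /dev/sd?; do printf "%s " "$d"; smartctl -i "$d" | disk_identity; done
```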

Regarding the filesystem, I had to do some research. The RAID type was SHR, but I cannot find the filesystem, sorry. Do you know a command to find it?
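For the filesystem question, blkid prints a TYPE="..." field for a block device. Below is a sketch, assuming blkid is present on the NAS and md2 is running with enough members to read its first blocks. The helper name fs_type is hypothetical.

```shell
# fs_type (hypothetical helper): print the value of TYPE="..." from
# `blkid` output on stdin. Requiring whitespace before TYPE avoids
# matching fields such as SEC_TYPE or PTTYPE.
fs_type() {
  sed -n 's/.*[[:space:]]TYPE="\([^"]*\)".*/\1/p'
}
# Usage on the NAS, once md2 is readable: blkid /dev/md2 | fs_type
```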

Regarding the warning on Disk 1: it is about the SMART test, and it says "Warning, Failing" (see below).

Regarding what caused the failure: probably, when I moved the server, I broke the SATA cable and the NAS lost the drive. I tried to repair the cable and plugged it back in. The drive was then detected, DSM reported a failed system partition, and I ran a repair, but I think the repair stopped in the middle, and I restarted it.

Thanks, Flyride, for spending time helping a stranger! Really appreciated. Can I buy you a drink? ;-)

-

13 hours ago, flyride said:

Before you do anything else, heed IG-88's advice to understand what happened and hopefully determine that it won't happen again. From what you have posted, DSM cannot see any data on disk #1 (/dev/sda). There is an incomplete Warning message that might tell us more about /dev/sda. Also disk #3 (/dev/sdc) MIGHT have data on it but we aren't sure yet. In order to effect a recovery, one of those two drives has to be functional and contain data.
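The reasoning behind "one of those two drives has to be functional" is that RAID5 stores one member's worth of parity. A 4-member set can therefore serve data with 3 members in sync but not with 2, which is also why "started with 2 drives (out of 4)" does not make the data reachable. Below is a tiny sketch of that rule. The helper name raid5_readable is hypothetical.

```shell
# raid5_readable (hypothetical helper): succeed if a RAID5 set with TOTAL
# members and ACTIVE in-sync members can still serve data. RAID5 tolerates
# exactly one missing member.
raid5_readable() {
  total=$1 active=$2
  [ "$active" -ge $(( total - 1 )) ]
}
# Example: raid5_readable 4 2 || echo "2 of 4 in sync: not enough for RAID5"
```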

So first, please investigate and report on the circumstances that caused the failure. Also, consider power-cycling the NAS and/or reset the drive connector on disk #1. Once /dev/sda is up and running (or you are absolutely certain that it won't come up), complete the last investigation step IG-88 proposed.

For good news, you have a simple RAID (not SHR). The array you are concerned with is /dev/md2. Anything relating to /dev/vg* or managing LVM does not apply to you.

You only have four drives. So adapt the last command as follows:

    # mdadm --examine /dev/sd[abcd]5 | egrep 'Event|/dev/sd'

What is the next step, guys?

-

1 hour ago, IG-88 said:

If flyride has some time to help you here, that's good for you; he's definitely better at this than I am.

I don't know if it is important, but as a reminder, I ran an extended SMART test on the "broken" disk; it is now failing... slowly...

-

Thanks to both of you for stepping up and helping me like this. Really appreciated. You can tell from what I wrote that I do not always understand what I am doing, as I am not experienced in this, so I will try my best! I also had difficulty copying the message from raid.status with vi, which might explain why the message got cut.

Here are the commands IG-88 originally wanted me to run:

    root@DiskStation:~# mdadm --detail /dev/md2
    /dev/md2:
            Version : 1.2
      Creation Time : Mon May 13 08:39:01 2013
         Raid Level : raid5
         Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
      Used Dev Size : 2925531648 (2790.00 GiB 2995.74 GB)
       Raid Devices : 4
      Total Devices : 2
        Persistence : Superblock is persistent
        Update Time : Sat Apr 4 19:33:15 2020
              State : clean, FAILED
     Active Devices : 2
    Working Devices : 2
     Failed Devices : 0
      Spare Devices : 0
             Layout : left-symmetric
         Chunk Size : 64K
               Name : VirDiskStation:2
               UUID : 75762e2e:4629b4db:259f216e:a39c266d
             Events : 15405
        Number   Major   Minor   RaidDevice State
           -       0        0        0      removed
           -       0        0        1      removed
           2       8       21        2      active sync   /dev/sdb5
           4       8       53        3      active sync   /dev/sdd5
    root@DiskStation:~# mdadm --examine /dev/sd[abcd]5 | egrep 'Event|/dev/sd'
    /dev/sdb5:
             Events : 15405
    /dev/sdc5:
             Events : 15357
    /dev/sdd5:
             Events : 15405

Here is the second one:

    root@DiskStation:~# vi /tmp/raid.status
    /dev/sdb5:
              Magic : a92b4efc
            Version : 1.2
        Feature Map : 0x0
         Array UUID : 75762e2e:4629b4db:259f216e:a39c266d
               Name : VirDiskStation:2
      Creation Time : Mon May 13 08:39:01 2013
         Raid Level : raid5
       Raid Devices : 4
     Avail Dev Size : 5851063680 (2790.00 GiB 2995.74 GB)
         Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
      Used Dev Size : 5851063296 (2790.00 GiB 2995.74 GB)
        Data Offset : 2048 sectors
       Super Offset : 8 sectors
       Unused Space : before=1968 sectors, after=384 sectors
              State : clean
        Device UUID : 7eee55dc:dbbf5609:e737801d:87903b6c
        Update Time : Sat Apr 4 19:33:15 2020
           Checksum : b039dae8 - correct
             Events : 15405
             Layout : left-symmetric
         Chunk Size : 64K
        Device Role : Active device 2
        Array State : ..AA ('A' == active, '.' == missing, 'R' == replacing)
    /dev/sdc5:
              Magic : a92b4efc
            Version : 1.2
        Feature Map : 0x2
         Array UUID : 75762e2e:4629b4db:259f216e:a39c266d
               Name : VirDiskStation:2
      Creation Time : Mon May 13 08:39:01 2013
         Raid Level : raid5
       Raid Devices : 4
     Avail Dev Size : 5851063680 (2790.00 GiB 2995.74 GB)
         Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
      Used Dev Size : 5851063296 (2790.00 GiB 2995.74 GB)
        Data Offset : 2048 sectors
       Super Offset : 8 sectors
    Recovery Offset : 0 sectors
       Unused Space : before=1968 sectors, after=384 sectors
              State : clean
        Device UUID : 6ba575e4:53121f53:a8fe4876:173d11a9
        Update Time : Sun Mar 22 14:01:34 2020
           Checksum : 17b3f446 - correct
             Events : 15357
             Layout : left-symmetric
         Chunk Size : 64K
        Device Role : Active device 1
        Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)
    /dev/sdd5:
              Magic : a92b4efc
            Version : 1.2
        Feature Map : 0x0
         Array UUID : 75762e2e:4629b4db:259f216e:a39c266d
               Name : VirDiskStation:2
      Creation Time : Mon May 13 08:39:01 2013
         Raid Level : raid5
       Raid Devices : 4
     Avail Dev Size : 5851063680 (2790.00 GiB 2995.74 GB)
         Array Size : 8776594944 (8370.01 GiB 8987.23 GB)
      Used Dev Size : 5851063296 (2790.00 GiB 2995.74 GB)
        Data Offset : 2048 sectors
       Super Offset : 8 sectors
       Unused Space : before=1968 sectors, after=384 sectors
              State : clean
        Device UUID : fb417ce4:fcdd58fb:72d35e06:9d7098b5
        Update Time : Sat Apr 4 19:33:15 2020
           Checksum : dc9dc82a - correct
             Events : 15405
             Layout : left-symmetric
         Chunk Size : 64K
        Device Role : Active device 3
        Array State : ..AA ('A' == active, '.' == missing, 'R' == replacing)
    "/tmp/raid.status" 85L, 2674C

And the latest command, to be sure:

    root@DiskStation:~# ls /dev/sd*
    /dev/sda /dev/sdb /dev/sdb1 /dev/sdb2 /dev/sdb5 /dev/sdc /dev/sdc1 /dev/sdc2 /dev/sdc5 /dev/sdd /dev/sdd1 /dev/sdd2 /dev/sdd5
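The three superblocks in raid.status can be condensed into one line per member: device, role, events and feature map. The roles give the slot order IG-88 used for the create line (slot 0 missing, then sdc5, sdb5, sdd5). Below is a sketch with a hypothetical helper name. It reads text only.

```shell
# summarize_examine (hypothetical helper): one line per member from
# `mdadm --examine` text on stdin: device, role, events, feature map.
# Usage: summarize_examine < /tmp/raid.status
summarize_examine() {
  awk '
    /^\/dev\/sd[a-z]+[0-9]*:/ { dev = $1; sub(/:$/, "", dev); devs[++n] = dev }
    /Feature Map :/ { fm[dev] = $NF }
    /Events :/      { ev[dev] = $NF }
    /Device Role :/ { role[dev] = $NF }
    END {
      print "device role events feature_map"
      for (i = 1; i <= n; i++) { d = devs[i]; print d, role[d], ev[d], fm[d] }
    }'
}
```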

DANGER : Raid crashed. Help me restore my data!

in General Post-Installation Questions/Discussions (non-hardware specific)


I have also tried

    mdadm --manage /dev/md2 --re-add /dev/sdc5

and I get the same error:

mdadm: --re-add for /dev/sdc5 to /dev/md2 is not possible