Everything posted by pdavey

-

Thank you again.

-

Can I use a USB drive and copy files to that? If so, how can I copy ALL files in a directory to the USB drive?
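One possible way to copy everything in a directory, sketched in Python on the assumption that Python 3.8 or later is available on the machine doing the copy. The function name `copy_all` and the paths in the usage note are hypothetical placeholders, not paths confirmed anywhere in this thread.

```python
import shutil
from pathlib import Path


def copy_all(src: str, dst: str) -> int:
    """Recursively copy everything under src into dst.

    Returns the number of regular files present under dst afterwards.
    """
    src_path, dst_path = Path(src), Path(dst)
    # copy2 tries to keep timestamps; dirs_exist_ok (Python 3.8+) allows
    # re-running the copy into a folder that already exists.
    shutil.copytree(src_path, dst_path, copy_function=shutil.copy2, dirs_exist_ok=True)
    return sum(1 for p in dst_path.rglob("*") if p.is_file())
```

Usage would look like `copy_all("/volume1/some_share", "/path/to/usb/backup")`, with both paths replaced by the real source folder and the real USB mount point. Since the volume is mounted read-only, only the destination is written to.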

-

I don't know how to thank you; to say you are a Guru Master is an understatement. If I could, I would give you a PhD ... Dr Flyride. Now the million-dollar question ... WHY? What should I do next? Can I power off or use the web interface? Should I scrap the HDDs and replace them with 3 x 8TB (non-SMR) in RAID 5?

-

You Beauty ... they're there

-

    root@DS415:~# mount -v -o ro /dev/vg1000/lv /volume1
    mount: /dev/vg1000/lv mounted on /volume1.

-

Sorry, got carried away with the excitement. No, volume1 didn't mount.

    root@DS415:~# pvs
      PV         VG     Fmt  Attr PSize PFree
      /dev/md2   vg1000 lvm2 a--  3.63t    0
      /dev/md3   vg1000 lvm2 a--  1.82t    0
      /dev/md4   vg1000 lvm2 a--  1.82t    0
      /dev/md5   vg1001 lvm2 a--  1.81t    0
    root@DS415:~# vgs
      VG     #PV #LV #SN Attr   VSize VFree
      vg1000   3   1   0 wz--n- 7.27t    0
      vg1001   1   1   0 wz--n- 1.81t    0
    root@DS415:~# lvs
      LV   VG     Attr       LSize Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
      lv   vg1000 -wi-a----- 7.27t
      lv   vg1001 -wi-ao---- 1.81t
    root@DS415:~# pvdisplay
      --- Physical volume ---
      PV Name               /dev/md5
      VG Name               vg1001
      PV Size               1.81 TiB / not usable 3.19 MiB
      Allocatable           yes (but full)
      PE Size               4.00 MiB
      Total PE              475752
      Free PE               0
      Allocated PE          475752
      PV UUID               p7cJsO-la6l-vXp7-ga51-4ugu-he7H-SeoHgZ
      --- Physical volume ---
      PV Name               /dev/md2
      VG Name               vg1000
      PV Size               3.63 TiB / not usable 1.44 MiB
      Allocatable           yes (but full)
      PE Size               4.00 MiB
      Total PE              951505
      Free PE               0
      Allocated PE          951505
      PV UUID               2NkG9U-9Rh6-5xFW-M1iM-GA0f-nbOd-aJHEUS
      --- Physical volume ---
      PV Name               /dev/md4
      VG Name               vg1000
      PV Size               1.82 TiB / not usable 128.00 KiB
      Allocatable           yes (but full)
      PE Size               4.00 MiB
      Total PE              476925
      Free PE               0
      Allocated PE          476925
      PV UUID               nolEeP-0392-QvMt-ZOkW-1JDr-COn4-QybVK7
      --- Physical volume ---
      PV Name               /dev/md3
      VG Name               vg1000
      PV Size               1.82 TiB / not usable 1.31 MiB
      Allocatable           yes (but full)
      PE Size               4.00 MiB
      Total PE              476927
      Free PE               0
      Allocated PE          476927
      PV UUID               bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9
    root@DS415:~# vgdisplay
      --- Volume group ---
      VG Name               vg1001
      System ID
      Format                lvm2
      Metadata Areas        1
      Metadata Sequence No  2
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                1
      Open LV               1
      Max PV                0
      Cur PV                1
      Act PV                1
      VG Size               1.81 TiB
      PE Size               4.00 MiB
      Total PE              475752
      Alloc PE / Size       475752 / 1.81 TiB
      Free  PE / Size       0 / 0
      VG UUID               pHhunz-cg0H-Fkcg-na1y-AAcT-D9fU-gdDTet
      --- Volume group ---
      VG Name               vg1000
      System ID
      Format                lvm2
      Metadata Areas        3
      Metadata Sequence No  13
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                1
      Open LV               0
      Max PV                0
      Cur PV                3
      Act PV                3
      VG Size               7.27 TiB
      PE Size               4.00 MiB
      Total PE              1905357
      Alloc PE / Size       1905357 / 7.27 TiB
      Free  PE / Size       0 / 0
      VG UUID               kPgiVt-X4fO-Eoxr-f0GL-rsKm-s4fE-Zl6u4Z
    root@DS415:~# lvdisplay
      --- Logical volume ---
      LV Path                /dev/vg1001/lv
      LV Name                lv
      VG Name                vg1001
      LV UUID                Pl33so-ldeW-HS2w-QGeE-3Zwh-QLuG-pqC1TE
      LV Write Access        read/write
      LV Creation host, time ,
      LV Status              available
      # open                 1
      LV Size                1.81 TiB
      Current LE             475752
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     4096
      Block device           253:0
      --- Logical volume ---
      LV Path                /dev/vg1000/lv
      LV Name                lv
      VG Name                vg1000
      LV UUID                Zqg7q0-2u5X-oQcl-ejyL-zh1Y-iUA5-531Ls9
      LV Write Access        read/write
      LV Creation host, time ,
      LV Status              available
      # open                 0
      LV Size                7.27 TiB
      Current LE             1905357
      Segments               4
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     512
      Block device           253:1
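As a sanity check on the numbers in that output, here is a minimal sketch of the extent arithmetic. LVM counts space in physical extents (PE), 4 MiB each here, so the three PVs in vg1000 should add up to the VG's "Total PE" and to roughly 7.27 TiB. The helper name `extents_to_tib` is my own; the figures are copied from the pvdisplay and vgdisplay output in this post.

```python
PE_SIZE_MIB = 4  # "PE Size 4.00 MiB" as reported by pvdisplay

# "Total PE" for each physical volume in vg1000, copied from pvdisplay.
pv_extents = {"/dev/md2": 951505, "/dev/md3": 476927, "/dev/md4": 476925}


def extents_to_tib(extents: int, pe_size_mib: int = PE_SIZE_MIB) -> float:
    """Convert a physical-extent count to TiB (1 TiB = 1024 * 1024 MiB)."""
    return extents * pe_size_mib / (1024 * 1024)


total_extents = sum(pv_extents.values())
print(total_extents)                             # matches vgdisplay "Total PE"
print(round(extents_to_tib(total_extents), 2))   # matches "VG Size" in TiB
```

The sum comes to 1905357 extents, the same figure vgdisplay reports for vg1000, which suggests the restored metadata describes the whole volume group again.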

-

    root@DS415:~# vgchange -ay
      1 logical volume(s) in volume group "vg1001" now active
      1 logical volume(s) in volume group "vg1000" now active
    root@DS415:~# mount
    /dev/md0 on / type ext4 (rw,relatime,journal_checksum,barrier,data=ordered)
    none on /dev type devtmpfs (rw,nosuid,noexec,relatime,size=1013044k,nr_inodes=253261,mode=755)
    none on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)
    none on /proc type proc (rw,nosuid,nodev,noexec,relatime)
    none on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)
    /tmp on /tmp type tmpfs (rw,relatime)
    /run on /run type tmpfs (rw,nosuid,nodev,relatime,mode=755)
    /dev/shm on /dev/shm type tmpfs (rw,nosuid,nodev,relatime)
    none on /sys/fs/cgroup type tmpfs (rw,relatime,size=4k,mode=755)
    cgmfs on /run/cgmanager/fs type tmpfs (rw,relatime,size=100k,mode=755)
    cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,relatime,cpuset,release_agent=/run/cgmanager/agents/cgm-release-agent.cpuset,clone_children)
    cgroup on /sys/fs/cgroup/cpu type cgroup (rw,relatime,cpu,release_agent=/run/cgmanager/agents/cgm-release-agent.cpu)
    cgroup on /sys/fs/cgroup/cpuacct type cgroup (rw,relatime,cpuacct,release_agent=/run/cgmanager/agents/cgm-release-agent.cpuacct)
    cgroup on /sys/fs/cgroup/memory type cgroup (rw,relatime,memory,release_agent=/run/cgmanager/agents/cgm-release-agent.memory)
    cgroup on /sys/fs/cgroup/devices type cgroup (rw,relatime,devices,release_agent=/run/cgmanager/agents/cgm-release-agent.devices)
    cgroup on /sys/fs/cgroup/freezer type cgroup (rw,relatime,freezer,release_agent=/run/cgmanager/agents/cgm-release-agent.freezer)
    cgroup on /sys/fs/cgroup/blkio type cgroup (rw,relatime,blkio,release_agent=/run/cgmanager/agents/cgm-release-agent.blkio)
    none on /proc/bus/usb type devtmpfs (rw,nosuid,noexec,relatime,size=1013044k,nr_inodes=253261,mode=755)
    none on /sys/kernel/debug type debugfs (rw,relatime)
    securityfs on /sys/kernel/security type securityfs (rw,relatime)
    /dev/mapper/vg1001-lv on /volume2 type btrfs (rw,relatime,synoacl,space_cache=v2,auto_reclaim_space,metadata_ratio=50)
    none on /config type configfs (rw,relatime)
    none on /proc/fs/nfsd type nfsd (rw,relatime)

-

    root@DS415:~# pvcreate --uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9 --restorefile /etc/lvm/backup/vg1000 /dev/md3
      Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9.
      Physical volume "/dev/md3" successfully created
    root@DS415:~# vgcfgrestore vg1000
      Restored volume group vg1000
    root@DS415:~# pvs
      PV         VG     Fmt  Attr PSize PFree
      /dev/md2   vg1000 lvm2 a--  3.63t    0
      /dev/md3   vg1000 lvm2 a--  1.82t    0
      /dev/md4   vg1000 lvm2 a--  1.82t    0
      /dev/md5   vg1001 lvm2 a--  1.81t    0

I assume that's good?

-

Thanks for the explanation. I'm not sure SHR was a wise choice; my ignorance has come back to bite me. I was just reading the following, and it confirms your wise advice.

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/configuring_and_managing_logical_volumes/troubleshooting-lvm_configuring-and-managing-logical-volumes

Here goes.

-

    root@DS415:/# blkid | grep "/dev/md."
    /dev/md0: LABEL="1.42.6-5004" UUID="1dd621d5-e876-4e53-81e7-b9855ac902f0" TYPE="ext4"
    /dev/md1: UUID="bf6d195b-d017-42af-ac08-5c33cf88fb75" TYPE="swap"
    /dev/md5: UUID="p7cJsO-la6l-vXp7-ga51-4ugu-he7H-SeoHgZ" TYPE="LVM2_member"
    /dev/md4: UUID="nolEeP-0392-QvMt-ZOkW-1JDr-COn4-QybVK7" TYPE="LVM2_member"
    /dev/md2: UUID="2NkG9U-9Rh6-5xFW-M1iM-GA0f-nbOd-aJHEUS" TYPE="LVM2_member"

Forgive my ignorance, but surely the whole point of a RAID is that it can cope with the loss of one drive. Why, then, when I remove sda does it not give me the option to repair and rebuild the array on a new drive? Is it because sdc is missing as well?

Also, if we use pvcreate, does this create a physical partition on the disk or a logical volume inside a partition? If it's the former, I am not sure how the HDD will do it without destroying the rest of the data on the disk. Would it be better to manually remove the partition and remove the LV from the array?
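To make the gap in that blkid listing explicit, here is a rough sketch that compares the LVM member UUIDs blkid reports with the PV UUIDs recorded for vg1000 in /etc/lvm/backup/vg1000 (as posted elsewhere in this thread). The function name `lvm_member_uuids` and the variable names are my own; the data is pasted from the outputs, not read from the NAS.

```python
import re

# PV UUIDs that the vg1000 backup file lists, keyed by the device hint.
EXPECTED_VG1000 = {
    "/dev/md2": "2NkG9U-9Rh6-5xFW-M1iM-GA0f-nbOd-aJHEUS",
    "/dev/md4": "nolEeP-0392-QvMt-ZOkW-1JDr-COn4-QybVK7",
    "/dev/md3": "bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9",
}

BLKID_OUTPUT = """\
/dev/md0: LABEL="1.42.6-5004" UUID="1dd621d5-e876-4e53-81e7-b9855ac902f0" TYPE="ext4"
/dev/md1: UUID="bf6d195b-d017-42af-ac08-5c33cf88fb75" TYPE="swap"
/dev/md5: UUID="p7cJsO-la6l-vXp7-ga51-4ugu-he7H-SeoHgZ" TYPE="LVM2_member"
/dev/md4: UUID="nolEeP-0392-QvMt-ZOkW-1JDr-COn4-QybVK7" TYPE="LVM2_member"
/dev/md2: UUID="2NkG9U-9Rh6-5xFW-M1iM-GA0f-nbOd-aJHEUS" TYPE="LVM2_member"
"""


def lvm_member_uuids(blkid_output: str) -> dict:
    """Map device -> UUID for each line blkid reports as TYPE="LVM2_member"."""
    pattern = re.compile(
        r'^(/dev/\S+): .*?UUID="([^"]+)".*TYPE="LVM2_member"', re.MULTILINE
    )
    return dict(pattern.findall(blkid_output))


found = lvm_member_uuids(BLKID_OUTPUT)
missing = {dev: uuid for dev, uuid in EXPECTED_VG1000.items()
           if uuid not in found.values()}
print(missing)
```

Only the /dev/md3 entry comes back as missing: the md3 array itself is still running (degraded), but it no longer carries an LVM label that blkid can see.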

-

OK No Problem ... many thanks BTW. I could take the drives out and mount on a Linux box if that would be better?

-

    root@DS415:/# pvcreate --uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9 /dev/md3
      --restorefile is required with --uuid
      Run `pvcreate --help' for more information.
    root@DS415:/# pvcreate --help
      pvcreate: Initialize physical volume(s) for use by LVM
      pvcreate
            [--norestorefile]
            [--restorefile file]
            [--commandprofile ProfileName]
            [-d|--debug]
            [-f[f]|--force [--force]]
            [-h|-?|--help]
            [--labelsector sector]
            [-M|--metadatatype 1|2]
            [--pvmetadatacopies #copies]
            [--bootloaderareasize BootLoaderAreaSize[bBsSkKmMgGtTpPeE]]
            [--metadatasize MetadataSize[bBsSkKmMgGtTpPeE]]
            [--dataalignment Alignment[bBsSkKmMgGtTpPeE]]
            [--dataalignmentoffset AlignmentOffset[bBsSkKmMgGtTpPeE]]
            [--setphysicalvolumesize PhysicalVolumeSize[bBsSkKmMgGtTpPeE]
            [-t|--test]
            [-u|--uuid uuid]
            [-v|--verbose]
            [-y|--yes]
            [-Z|--zero {y|n}]
            [--version]
            PhysicalVolume [PhysicalVolume...]

-

Yahoo ... it is. (I tried Synology, but they refused to do anything using the CLI on the grounds that I might do irreversible damage. As if!) BTW, they also told me my HDDs weren't compatible with my NAS; that's when I went down the SMR route. Fingers crossed.

-

Yes I have rebooted the NAS. I took out sda and rebooted. Then put it back and rebooted to see if it would offer me a repair. root@DS415:/# lvm pvscan Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9. PV /dev/md5 VG vg1001 lvm2 [1.81 TiB / 0 free] PV /dev/md2 VG vg1000 lvm2 [3.63 TiB / 0 free] PV /dev/md4 VG vg1000 lvm2 [1.82 TiB / 0 free] PV unknown device VG vg1000 lvm2 [1.82 TiB / 0 free] Total: 4 [9.08 TiB] / in use: 4 [9.08 TiB] / in no VG: 0 [0 ] root@DS415:/etc/lvm/backup# dir total 20 drwxr-xr-x 2 root root 4096 Dec 10 2019 . drwxr-xr-x 5 root root 4096 May 27 2020 .. -rw-r--r-- 1 root root 2261 Dec 10 2019 vg1000 -rw-r--r-- 1 root root 1215 Dec 10 2019 vg1001 -rw-r--r-- 1 root root 1422 Apr 10 2015 vg3 root@DS415:/etc/lvm/backup# cat vg1000 # Generated by LVM2 version 2.02.132(2)-git (2015-09-22): Tue Dec 10 02:52:40 2019 contents = "Text Format Volume Group" version = 1 description = "Created *after* executing '/sbin/lvextend --alloc inherit /dev/vg1000/lv -l100%VG'" creation_host = "DS415" # Linux DS415 3.10.105 #24922 SMP Wed Jul 3 16:37:24 CST 2019 x86_64 creation_time = 1575946360 # Tue Dec 10 02:52:40 2019 vg1000 { id = "kPgiVt-X4fO-Eoxr-f0GL-rsKm-s4fE-Zl6u4Z" seqno = 12 format = "lvm2" # informational status = ["RESIZEABLE", "READ", "WRITE"] flags = [] extent_size = 8192 # 4 Megabytes max_lv = 0 max_pv = 0 metadata_copies = 0 physical_volumes { pv0 { id = "2NkG9U-9Rh6-5xFW-M1iM-GA0f-nbOd-aJHEUS" device = "/dev/md2" # Hint only status = ["ALLOCATABLE"] flags = [] dev_size = 7794731904 # 3.6297 Terabytes pe_start = 1152 pe_count = 951505 # 3.6297 Terabytes } pv1 { id = "nolEeP-0392-QvMt-ZOkW-1JDr-COn4-QybVK7" device = "/dev/md4" # Hint only status = ["ALLOCATABLE"] flags = [] dev_size = 3906970496 # 1.81932 Terabytes pe_start = 1152 pe_count = 476925 # 1.81932 Terabytes } pv2 { id = "bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9" device = "/dev/md3" # Hint only status = ["ALLOCATABLE"] flags = [] dev_size = 3906988672 # 1.81933 Terabytes 
pe_start = 1152 pe_count = 476927 # 1.81933 Terabytes } } logical_volumes { lv { id = "Zqg7q0-2u5X-oQcl-ejyL-zh1Y-iUA5-531Ls9" status = ["READ", "WRITE", "VISIBLE"] flags = [] segment_count = 4 segment1 { start_extent = 0 extent_count = 951505 # 3.6297 Terabytes type = "striped" stripe_count = 1 # linear stripes = [ "pv0", 0 ] } segment2 { start_extent = 951505 extent_count = 238462 # 931.492 Gigabytes type = "striped" stripe_count = 1 # linear stripes = [ "pv1", 0 ] } segment3 { start_extent = 1189967 extent_count = 476927 # 1.81933 Terabytes type = "striped" stripe_count = 1 # linear stripes = [ "pv2", 0 ] } segment4 { start_extent = 1666894 extent_count = 238463 # 931.496 Gigabytes type = "striped" stripe_count = 1 # linear stripes = [ "pv1", 238462 ] } } } } root@DS415:/etc/lvm/backup# cat vg1001 # Generated by LVM2 version 2.02.132(2)-git (2015-09-22): Tue Dec 10 10:09:16 2019 contents = "Text Format Volume Group" version = 1 description = "Created *after* executing '/sbin/lvcreate /dev/vg1001 -n lv -l100%FREE'" creation_host = "DS415" # Linux DS415 3.10.105 #24922 SMP Wed Jul 3 16:37:24 CST 2019 x86_64 creation_time = 1575972556 # Tue Dec 10 10:09:16 2019 vg1001 { id = "pHhunz-cg0H-Fkcg-na1y-AAcT-D9fU-gdDTet" seqno = 2 format = "lvm2" # informational status = ["RESIZEABLE", "READ", "WRITE"] flags = [] extent_size = 8192 # 4 Megabytes max_lv = 0 max_pv = 0 metadata_copies = 0 physical_volumes { pv0 { id = "p7cJsO-la6l-vXp7-ga51-4ugu-he7H-SeoHgZ" device = "/dev/md5" # Hint only status = ["ALLOCATABLE"] flags = [] dev_size = 3897366912 # 1.81485 Terabytes pe_start = 1152 pe_count = 475752 # 1.81485 Terabytes } } logical_volumes { lv { id = "Pl33so-ldeW-HS2w-QGeE-3Zwh-QLuG-pqC1TE" status = ["READ", "WRITE", "VISIBLE"] flags = [] segment_count = 1 segment1 { start_extent = 0 extent_count = 475752 # 1.81485 Terabytes type = "striped" stripe_count = 1 # linear stripes = [ "pv0", 0 ] } } } } root@DS415:/etc/lvm/backup# cat vg3 # Generated by LVM2 version 2.02.38 
(2008-06-11): Fri Apr 10 03:35:38 2015 contents = "Text Format Volume Group" version = 1 description = "Created *after* executing '/sbin/lvcreate /dev/vg3 -n volume_3 -l100%FREE'" creation_host = "DS415" # Linux DS415 3.2.40 #5022 SMP Wed Jan 7 14:19:49 CST 2015 x86_64 creation_time = 1428629738 # Fri Apr 10 03:35:38 2015 vg3 { id = "B3OU2S-jllW-8UN6-M9s6-hVwO-q8aK-Lqu8rE" seqno = 3 status = ["RESIZEABLE", "READ", "WRITE"] extent_size = 8192 # 4 Megabytes max_lv = 0 max_pv = 0 physical_volumes { pv0 { id = "3eYQCk-V8MJ-3LVt-m7Th-1oAM-CKWY-vJ8Txd" device = "/dev/md4" # Hint only status = ["ALLOCATABLE"] dev_size = 5850870528 # 2.72452 Terabytes pe_start = 1152 pe_count = 714217 # 2.72452 Terabytes } } logical_volumes { syno_vg_reserved_area { id = "Epdama-h0ex-swu2-jsyB-nsVq-43rJ-hW4m4I" status = ["READ", "WRITE", "VISIBLE"] segment_count = 1 segment1 { start_extent = 0 extent_count = 3 # 12 Megabytes type = "striped" stripe_count = 1 # linear stripes = [ "pv0", 0 ] } } volume_3 { id = "889ZKw-87Vv-nVY9-PvBk-ytwK-ZvJX-AaHcue" status = ["READ", "WRITE", "VISIBLE"] segment_count = 1 segment1 { start_extent = 0 extent_count = 714214 # 2.72451 Terabytes type = "striped" stripe_count = 1 # linear stripes = [ "pv0", 3 ] } } } }
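For anyone reading the vg1000 backup file, here is a small sketch of how its four linear segments lay the logical volume out across the three PVs. The `Segment` type and the helper names are my own; the numbers are copied from the `segment1` to `segment4` entries in the file.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    start_extent: int
    extent_count: int
    pv: str


# Copied from the logical_volumes section of /etc/lvm/backup/vg1000.
SEGMENTS = [
    Segment(0, 951505, "pv0"),
    Segment(951505, 238462, "pv1"),
    Segment(1189967, 476927, "pv2"),
    Segment(1666894, 238463, "pv1"),
]
# "device" hints from the physical_volumes section of the same file.
PV_DEVICE = {"pv0": "/dev/md2", "pv1": "/dev/md4", "pv2": "/dev/md3"}


def is_contiguous(segments) -> bool:
    """True if each segment starts exactly where the previous one ended."""
    expected_start = 0
    for seg in segments:
        if seg.start_extent != expected_start:
            return False
        expected_start += seg.extent_count
    return True


def extents_on(segments, pv: str) -> int:
    """Total number of LV extents stored on the given PV."""
    return sum(seg.extent_count for seg in segments if seg.pv == pv)


total = sum(seg.extent_count for seg in SEGMENTS)
for name, device in PV_DEVICE.items():
    share = 100 * extents_on(SEGMENTS, name) / total
    print(f"{name} ({device}): {extents_on(SEGMENTS, name)} extents, {share:.1f}%")
```

By this layout the missing pv2 (/dev/md3) holds the third segment, about a quarter of the volume, sitting between the two halves of pv1. That fits LVM refusing to activate the LV without it.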

-

What does the m flag (the "a-m" in the Attr column) signify on the PV?

    root@DS415:/# pvs
      Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9.
      PV             VG     Fmt  Attr PSize PFree
      /dev/md2       vg1000 lvm2 a--  3.63t    0
      /dev/md4       vg1000 lvm2 a--  1.82t    0
      /dev/md5       vg1001 lvm2 a--  1.81t    0
      unknown device vg1000 lvm2 a-m  1.82t    0
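For reference, the Attr column in pvs is positional. As far as I know from the pvs man page, the first character can be (a)llocatable, the second e(x)ported and the third (m)issing. A minimal decoder sketch along those lines follows; the function name `decode_pv_attr` is my own, and newer LVM versions document a few extra first-position letters that this sketch deliberately ignores.

```python
def decode_pv_attr(attr: str) -> list:
    """Decode the three-character pvs Attr field into readable flag names.

    Only the flags named in the lead-in are handled: position 1 'a',
    position 2 'x', position 3 'm'. Anything else is ignored.
    """
    meanings = [("a", "allocatable"), ("x", "exported"), ("m", "missing")]
    padded = attr.ljust(3, "-")
    return [name for (flag, name), ch in zip(meanings, padded) if ch == flag]


print(decode_pv_attr("a--"))
print(decode_pv_attr("a-m"))
```

So "a-m" on the unknown device reads as allocatable and missing: LVM knows the PV from its metadata but cannot find a device carrying that UUID.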

-

I am guessing the PV Name should be /dev/md3.

      PV Name               unknown device
      VG Name               vg1000
      PV Size               1.82 TiB / not usable 1.31 MiB
      Allocatable           yes (but full)
      PE Size               4.00 MiB
      Total PE              476927
      Free PE               0
      Allocated PE          476927
      PV UUID               bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9

-

root@DS415:/# pvs Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9. PV VG Fmt Attr PSize PFree /dev/md2 vg1000 lvm2 a-- 3.63t 0 /dev/md4 vg1000 lvm2 a-- 1.82t 0 /dev/md5 vg1001 lvm2 a-- 1.81t 0 unknown device vg1000 lvm2 a-m 1.82t 0 root@DS415:/# vgs Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9. VG #PV #LV #SN Attr VSize VFree vg1000 3 1 0 wz-pn- 7.27t 0 vg1001 1 1 0 wz--n- 1.81t 0 root@DS415:/# lvs Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9. LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert lv vg1000 -wi-----p- 7.27t lv vg1001 -wi-ao---- 1.81t root@DS415:/# pvdisplay --- Physical volume --- PV Name /dev/md5 VG Name vg1001 PV Size 1.81 TiB / not usable 3.19 MiB Allocatable yes (but full) PE Size 4.00 MiB Total PE 475752 Free PE 0 Allocated PE 475752 PV UUID p7cJsO-la6l-vXp7-ga51-4ugu-he7H-SeoHgZ Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9. --- Physical volume --- PV Name /dev/md2 VG Name vg1000 PV Size 3.63 TiB / not usable 1.44 MiB Allocatable yes (but full) PE Size 4.00 MiB Total PE 951505 Free PE 0 Allocated PE 951505 PV UUID 2NkG9U-9Rh6-5xFW-M1iM-GA0f-nbOd-aJHEUS --- Physical volume --- PV Name /dev/md4 VG Name vg1000 PV Size 1.82 TiB / not usable 128.00 KiB Allocatable yes (but full) PE Size 4.00 MiB Total PE 476925 Free PE 0 Allocated PE 476925 PV UUID nolEeP-0392-QvMt-ZOkW-1JDr-COn4-QybVK7 --- Physical volume --- PV Name unknown device VG Name vg1000 PV Size 1.82 TiB / not usable 1.31 MiB Allocatable yes (but full) PE Size 4.00 MiB Total PE 476927 Free PE 0 Allocated PE 476927 PV UUID bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9 root@DS415:/# vgdisplay --- Volume group --- VG Name vg1001 System ID Format lvm2 Metadata Areas 1 Metadata Sequence No 2 VG Access read/write VG Status resizable MAX LV 0 Cur LV 1 Open LV 1 Max PV 0 Cur PV 1 Act PV 1 VG Size 1.81 TiB PE Size 4.00 MiB Total PE 475752 Alloc PE / Size 475752 / 1.81 TiB Free PE / Size 0 / 0 VG 
UUID pHhunz-cg0H-Fkcg-na1y-AAcT-D9fU-gdDTet Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9. --- Volume group --- VG Name vg1000 System ID Format lvm2 Metadata Areas 2 Metadata Sequence No 12 VG Access read/write VG Status resizable MAX LV 0 Cur LV 1 Open LV 0 Max PV 0 Cur PV 3 Act PV 2 VG Size 7.27 TiB PE Size 4.00 MiB Total PE 1905357 Alloc PE / Size 1905357 / 7.27 TiB Free PE / Size 0 / 0 VG UUID kPgiVt-X4fO-Eoxr-f0GL-rsKm-s4fE-Zl6u4Z root@DS415:/# lvdisplay --- Logical volume --- LV Path /dev/vg1001/lv LV Name lv VG Name vg1001 LV UUID Pl33so-ldeW-HS2w-QGeE-3Zwh-QLuG-pqC1TE LV Write Access read/write LV Creation host, time , LV Status available # open 1 LV Size 1.81 TiB Current LE 475752 Segments 1 Allocation inherit Read ahead sectors auto - currently set to 4096 Block device 253:0 Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9. --- Logical volume --- LV Path /dev/vg1000/lv LV Name lv VG Name vg1000 LV UUID Zqg7q0-2u5X-oQcl-ejyL-zh1Y-iUA5-531Ls9 LV Write Access read/write LV Creation host, time , LV Status NOT available LV Size 7.27 TiB Current LE 1905357 Segments 4 Allocation inherit Read ahead sectors auto root@DS415:/# cat /etc/fstab none /proc proc defaults 0 0 /dev/root / ext4 defaults 1 1 /dev/vg1000/lv /volume1 btrfs auto_reclaim_space,synoacl,relatime 0 0 /dev/vg1001/lv /volume2 btrfs auto_reclaim_space,synoacl,relatime 0 0 root@DS415:/#

-

    root@DS415:/# vgchange -ay
      1 logical volume(s) in volume group "vg1001" now active
      Couldn't find device with uuid bocvSr-hmj0-LUH0-BM8g-BXBS-TicT-LbjYQ9.
      Refusing activation of partial LV vg1000/lv.  Use '--activationmode partial' to override.
      0 logical volume(s) in volume group "vg1000" now active

-

I think I understand the RAID1 thing now: the most efficient way to put RAID5 over two drives is just mirroring. So that explains the removed drive from /dev/md3; it's /dev/sda7. Why didn't the NAS recognise the healthy drive sda when I put it back in? Is there a flag to tell the system it's dirty?

-

Sorry, I'd already done them but forgot to include them. I got sidetracked reading the documentation so I could understand what's going on.

I figured out it was /dev/sda, and I can see it's missing from both /dev/md2 and /dev/md4, but I don't understand how it's linked to /dev/md3, which is RAID 1. Is one array using partitions {sda5, sdb5, sdc5} (1.8T), another {sda6, sdb6, sdc6} (931G), another {sda7, sdb7} (1.8T), and another ...? I am a bit lost now: does each one have a separate volume, and is the total combined the pool?

    root@DS415:~# mdadm --detail /dev/md4
    /dev/md4:
            Version : 1.2
      Creation Time : Mon Dec 2 08:31:27 2019
         Raid Level : raid5
         Array Size : 1953485824 (1862.99 GiB 2000.37 GB)
      Used Dev Size : 976742912 (931.49 GiB 1000.18 GB)
       Raid Devices : 3
      Total Devices : 2
        Persistence : Superblock is persistent
        Update Time : Sun Feb 21 18:23:01 2021
              State : clean, degraded
     Active Devices : 2
    Working Devices : 2
     Failed Devices : 0
      Spare Devices : 0
             Layout : left-symmetric
         Chunk Size : 64K
               Name : DS415:4 (local to host DS415)
               UUID : 0f8073d8:3666a524:faf4218d:785d611c
             Events : 12147
        Number   Major   Minor   RaidDevice State
           0       8       38        0      active sync   /dev/sdc6
           1       8       22        1      active sync   /dev/sdb6
           -       0        0        2      removed
    root@DS415:~#
    root@DS415:~# mdadm --detail /dev/md3
    /dev/md3:
            Version : 1.2
      Creation Time : Tue Dec 10 02:52:19 2019
         Raid Level : raid1
         Array Size : 1953494912 (1863.00 GiB 2000.38 GB)
      Used Dev Size : 1953494912 (1863.00 GiB 2000.38 GB)
       Raid Devices : 2
      Total Devices : 1
        Persistence : Superblock is persistent
        Update Time : Sun Feb 21 18:22:58 2021
              State : clean, degraded
     Active Devices : 1
    Working Devices : 1
     Failed Devices : 0
      Spare Devices : 0
               Name : DS415:3 (local to host DS415)
               UUID : a508b67e:6933bca7:1bf77190:96030000
             Events : 32
        Number   Major   Minor   RaidDevice State
           0       8       23        0      active sync   /dev/sdb7
           -       0        0        1      removed
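On the question of how the arrays relate to the pool, here is a rough sketch of the capacity arithmetic, using the standard rules that RAID 5 gives (members - 1) x member size and RAID 1 gives one member's size. The function name `usable_kib` is my own; the figures are the "Raid Devices" and "Used Dev Size" values from the mdadm --detail outputs posted in this thread (md2's come from a separate post).

```python
def usable_kib(level: str, raid_devices: int, used_dev_size_kib: int) -> int:
    """Usable capacity of an md array in KiB for the two levels seen here."""
    if level == "raid1":
        return used_dev_size_kib                       # every member is a full copy
    if level == "raid5":
        return (raid_devices - 1) * used_dev_size_kib  # one member's worth of parity
    raise ValueError(f"level not handled in this sketch: {level}")


# (level, Raid Devices, Used Dev Size in KiB) from mdadm --detail.
ARRAYS = {
    "/dev/md2": ("raid5", 3, 1948683264),
    "/dev/md4": ("raid5", 3, 976742912),
    "/dev/md3": ("raid1", 2, 1953494912),
}

sizes = {dev: usable_kib(*spec) for dev, spec in ARRAYS.items()}
pool_tib = sum(sizes.values()) / 2**30  # KiB -> TiB
print(sizes)
print(round(pool_tib, 2))
```

Each result equals the "Array Size" mdadm reports for that array, and the three together come to about 7.27 TiB, the size pvs and vgs show for vg1000. So each array is one LVM physical volume, and the volume group built from them is the pool.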

-

    root@DS415:/# fdisk -l /dev/sda
    Disk /dev/sda: 4.6 TiB, 5000981078016 bytes, 9767541168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 3AB98EE9-A4F3-4746-B2BC-AA0BEA61A05B
    Device          Start        End    Sectors   Size Type
    /dev/sda1        2048    4982527    4980480   2.4G Linux RAID
    /dev/sda2     4982528    9176831    4194304     2G Linux RAID
    /dev/sda5     9453280 3906822239 3897368960   1.8T Linux RAID
    /dev/sda6  3906838336 5860326239 1953487904 931.5G Linux RAID
    /dev/sda7  5860342336 9767334239 3906991904   1.8T Linux RAID
    root@DS415:/# fdisk -l /dev/sdb
    Disk /dev/sdb: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: F27E8497-B367-4862-864C-39FDB67E8EB2
    Device          Start        End    Sectors   Size Type
    /dev/sdb1        2048    4982527    4980480   2.4G Linux RAID
    /dev/sdb2     4982528    9176831    4194304     2G Linux RAID
    /dev/sdb5     9453280 3906822239 3897368960   1.8T Linux RAID
    /dev/sdb6  3906838336 5860326239 1953487904 931.5G Linux RAID
    /dev/sdb7  5860342336 9767334239 3906991904   1.8T Linux RAID
    root@DS415:/# fdisk -l /dev/sdc
    Disk /dev/sdc: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 604C99D8-6C7A-4465-91A4-591796CEBE56
    Device          Start        End    Sectors   Size Type
    /dev/sdc1        2048    4982527    4980480   2.4G Linux RAID
    /dev/sdc2     4982528    9176831    4194304     2G Linux RAID
    /dev/sdc5     9453280 3906822239 3897368960   1.8T Linux RAID
    /dev/sdc6  3906838336 5860326239 1953487904 931.5G Linux RAID
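A quick sketch of how these partition tables line up across the three disks: grouping the data partitions by partition number shows which disks can contribute to which array, and the sector counts convert to the sizes fdisk prints. The helper names are my own; the sector counts are copied from the fdisk output, and the 512-byte sector size is the logical size it reports.

```python
from collections import defaultdict

SECTOR_BYTES = 512  # logical sector size reported by fdisk

# Sector counts of the data partitions (5 and up) from fdisk -l.
PARTITIONS = {
    "sda5": 3897368960, "sdb5": 3897368960, "sdc5": 3897368960,
    "sda6": 1953487904, "sdb6": 1953487904, "sdc6": 1953487904,
    "sda7": 3906991904, "sdb7": 3906991904,
}


def sectors_to_gib(sectors: int) -> float:
    """Convert a sector count to GiB."""
    return sectors * SECTOR_BYTES / 2**30


# Group partitions by their trailing partition number.
groups = defaultdict(list)
for name in sorted(PARTITIONS):
    groups[name[-1]].append(name)

for number, members in sorted(groups.items()):
    size = sectors_to_gib(PARTITIONS[members[0]])
    print(f"partition {number}: {members} at {size:.1f} GiB each")
```

Partitions 5 and 6 exist on all three disks, while partition 7 exists only on sda and sdb because sdc is too small to hold one. That matches md3 being a two-member array.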

-

    root@DS415:~# mdadm --detail /dev/md2
    /dev/md2:
            Version : 1.2
      Creation Time : Mon Apr 20 12:20:53 2015
         Raid Level : raid5
         Array Size : 3897366528 (3716.82 GiB 3990.90 GB)
      Used Dev Size : 1948683264 (1858.41 GiB 1995.45 GB)
       Raid Devices : 3
      Total Devices : 2
        Persistence : Superblock is persistent
        Update Time : Sun Feb 21 18:23:01 2021
              State : clean, degraded
     Active Devices : 2
    Working Devices : 2
     Failed Devices : 0
      Spare Devices : 0
             Layout : left-symmetric
         Chunk Size : 64K
               Name : DS415:2 (local to host DS415)
               UUID : 20106822:98678da8:508d800e:b196f334
             Events : 610085
        Number   Major   Minor   RaidDevice State
           3       8       37        0      active sync   /dev/sdc5
           -       0        0        1      removed
           5       8       21        2      active sync   /dev/sdb5

-

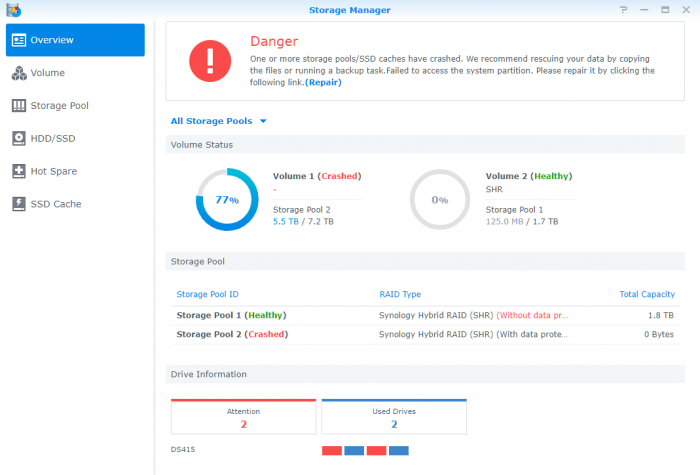

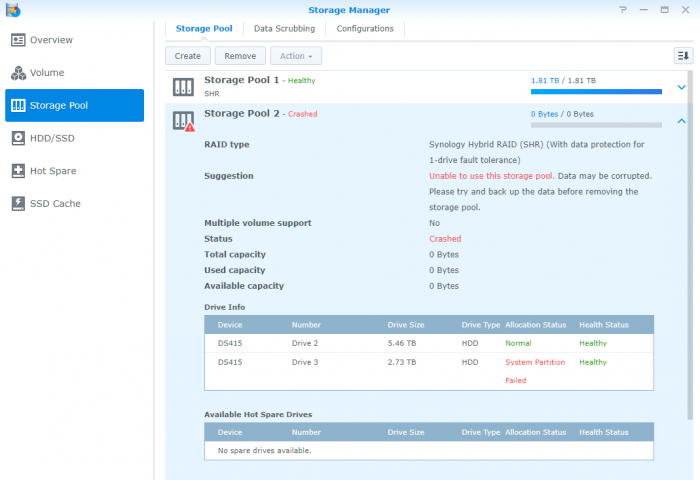

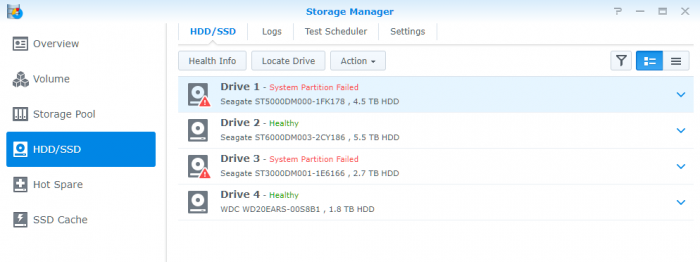

I have a Synology DS415+ and the storage pool crashed without warning. All drives were showing Healthy, and I need help identifying what the problem is and how to recover the data (if possible).

I was going to try what Synology suggests: load Ubuntu on my PC and try to restore the volume using the following.

    root@ubuntu:~$ mdadm -Asf && vgchange -ay
    $ mount ${device_path} ${mount_point} -o ro

Before attempting this, I came across this forum and read some of the advice given to others about putting the drives back into the NAS and repairing the volume locally. Any advice or guidance on my journey to recover the data and identify the problem would be gratefully received.

My NAS is configured as follows:

Storage Pool 1: single 1.81 TB drive (Drive 4), Healthy

Storage Pool 2: three drives, Crashed

Drive 1: 4.5TB, System Partition Failed (40 bad sectors), passed Extended SMART

Drive 2: 5.5 TB, Healthy

Drive 3: 2.7TB, System Partition Failed

    root@DS415:~# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md2 : active raid5 sdc5[3] sdb5[5]
          3897366528 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/2] [U_U]
    md4 : active raid5 sdc6[0] sdb6[1]
          1953485824 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/2] [UU_]
    md3 : active raid1 sdb7[0]
          1953494912 blocks super 1.2 [2/1] [U_]
    md5 : active raid1 sdd5[0]
          1948683456 blocks super 1.2 [1/1] [U]
    md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3]
          2097088 blocks [4/4] [UUUU]
    md0 : active raid1 sdb1[3] sdd1[2]
          2490176 blocks [4/2] [__UU]
    unused devices: <none>
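To read that /proc/mdstat dump more easily, here is a minimal sketch that picks out the degraded arrays. In mdstat the `[n/m]` field is the number of devices the array expects and the number currently active, so any array with m below n is running short. The function name `degraded_arrays` is my own, and the sample text is pasted from the output in this post rather than read live from the NAS.

```python
import re

MDSTAT = """\
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
md2 : active raid5 sdc5[3] sdb5[5]
      3897366528 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/2] [U_U]
md4 : active raid5 sdc6[0] sdb6[1]
      1953485824 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/2] [UU_]
md3 : active raid1 sdb7[0]
      1953494912 blocks super 1.2 [2/1] [U_]
md5 : active raid1 sdd5[0]
      1948683456 blocks super 1.2 [1/1] [U]
md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3]
      2097088 blocks [4/4] [UUUU]
md0 : active raid1 sdb1[3] sdd1[2]
      2490176 blocks [4/2] [__UU]
unused devices: <none>
"""


def degraded_arrays(mdstat: str) -> dict:
    """Return {array: (expected, active)} for arrays with active < expected."""
    result = {}
    current = None
    for line in mdstat.splitlines():
        header = re.match(r"(md\d+)\s*:", line)
        if header:
            current = header.group(1)
        counts = re.search(r"\[(\d+)/(\d+)\]", line)
        if counts and current:
            expected, active = int(counts.group(1)), int(counts.group(2))
            if active < expected:
                result[current] = (expected, active)
    return result


print(degraded_arrays(MDSTAT))
```

On this sample it flags md2, md4, md3 and md0, and leaves md5 and md1 alone. None of the flagged arrays lists an sda partition among its members.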

-

Hi all, I am a lecturer at Plymouth University. I understand HDD technology but haven't explored the intricacies of NAS, yet... I have a crashed volume on my Synology NAS and I am trying to understand why it happened and how to fix it. I am trying to find out whether it is due to a timeout mismatch caused by the drives using SMR. If I am able to recover the data, do I need to bin the drives, or can I change a setting to fix it?