Rihc0

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

Right now I'm using ReclaiMe Pro recovery software (from a friend of mine). I can see the data, and I'm trying to get it off the RAID. Hopefully it works.

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

Okay, I'll wait for flyride.

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

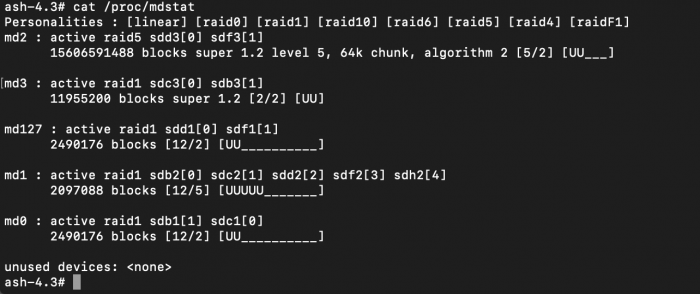

I shut down the server and removed the GPU that was causing problems. Then I started the server and the virtual machine, and this is the output of the commands you sent me. This is with the hard drives the XPEnology VM had in the first place. I won't touch it unless you say so ^^. Sorry for doing so many things wrong.

Output of `cat /proc/mdstat`:

```
ash-4.3# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md3 : active raid5 sdg3[0] sde3[1]
      15606591488 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/2] [UU___]

md2 : active raid1 sdb3[0] sdc3[1]
      11955200 blocks super 1.2 [2/2] [UU]

md127 : active raid1 sde1[1] sdg1[0]
      2490176 blocks [12/2] [UU__________]

md1 : active raid1 sdb2[0] sdc2[1] sde2[2] sdg2[3] sdh2[4]
      2097088 blocks [12/5] [UUUUU_______]

md0 : active raid1 sdb1[0] sdc1[1]
      2490176 blocks [12/2] [UU__________]

unused devices: <none>
```

Output of `mdadm --detail /dev/md3`:

```
ash-4.3# mdadm --detail /dev/md3
/dev/md3:
        Version : 1.2
  Creation Time : Sat Jun 20 00:46:08 2020
     Raid Level : raid5
     Array Size : 15606591488 (14883.61 GiB 15981.15 GB)
  Used Dev Size : 3901647872 (3720.90 GiB 3995.29 GB)
   Raid Devices : 5
  Total Devices : 2
    Persistence : Superblock is persistent
    Update Time : Sun Nov 22 18:24:28 2020
          State : clean, FAILED
 Active Devices : 2
Working Devices : 2
 Failed Devices : 0
  Spare Devices : 0
         Layout : left-symmetric
     Chunk Size : 64K
           Name : Dabadoo:2
           UUID : ff64862b:9edfe233:c498ea84:9d4b9ffd
         Events : 39112

    Number   Major   Minor   RaidDevice State
       0       8       99        0      active sync   /dev/sdg3
       1       8       67        1      active sync   /dev/sde3
       -       0        0        2      removed
       -       0        0        3      removed
       -       0        0
```

Output of `mdadm --examine /dev/sd[efgh]3`:

```
ash-4.3# mdadm --examine /dev/sd[efgh]3
/dev/sde3:
          Magic : a92b4efc
        Version : 1.2
    Feature Map : 0x0
     Array UUID : ff64862b:9edfe233:c498ea84:9d4b9ffd
           Name : Dabadoo:2
  Creation Time : Sat Jun 20 00:46:08 2020
     Raid Level : raid5
   Raid Devices : 5
 Avail Dev Size : 7803295744 (3720.90 GiB 3995.29 GB)
     Array Size : 15606591488 (14883.61 GiB 15981.15 GB)
    Data Offset : 2048 sectors
   Super Offset : 8 sectors
   Unused Space : before=1968 sectors, after=0 sectors
          State : clean
    Device UUID : a5de7554:193b06b9:b1f1a8df:3c917e8b
    Update Time : Sun Nov 22 18:24:28 2020
       Checksum : 381624 - correct
         Events : 39112
         Layout : left-symmetric
     Chunk Size : 64K
    Device Role : Active device 1
    Array State : AA... ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdg3:
          Magic : a92b4efc
        Version : 1.2
    Feature Map : 0x0
     Array UUID : ff64862b:9edfe233:c498ea84:9d4b9ffd
           Name : Dabadoo:2
  Creation Time : Sat Jun 20 00:46:08 2020
     Raid Level : raid5
   Raid Devices : 5
 Avail Dev Size : 7803295744 (3720.90 GiB 3995.29 GB)
     Array Size : 15606591488 (14883.61 GiB 15981.15 GB)
    Data Offset : 2048 sectors
   Super Offset : 8 sectors
   Unused Space : before=1968 sectors, after=0 sectors
          State : clean
    Device UUID : e0c37824:42d56226:4bb0cdcc:d29cca2f
    Update Time : Sun Nov 22 18:24:28 2020
       Checksum : cf085fb5 - correct
         Events : 39112
         Layout : left-symmetric
     Chunk Size : 64K
    Device Role : Active device 0
    Array State : AA... ('A' == active, '.' == missing, 'R' == replacing)
mdadm: No md superblock detected on /dev/sdh3.
ash-4.3#
```
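The mdstat output above can be read mechanically: `[5/2] [UU___]` on md3 means 5 member slots with only 2 active. Below is a minimal Python 3 sketch, assuming you paste the output into a string (or read `/proc/mdstat` directly, if Python happens to be available on the box). The helper names are hypothetical, and the per-level tolerance table follows standard md behavior (RAID 5 survives one missing member, RAID 6 two, RAID 1 all but one). It also checks the RAID 5 size arithmetic from `mdadm --detail`: with 5 members, 4 × 3901647872 KiB (Used Dev Size) = 15606591488 KiB, which matches the reported Array Size.

```python
import re

# One md array entry: name, personality, then the "[total/active] [UU__]"
# status. re.S lets ".*?" cross the line break that real /proc/mdstat puts
# before the status, and it also copes with a flattened one-line paste.
ARRAY_RE = re.compile(
    r"(md\d+) : active (raid\d+)\b.*?\[(\d+)/(\d+)\] \[([U_]+)\]", re.S
)


def tolerated_failures(level, total):
    """Members an array of this level can lose and still serve data."""
    if level == "raid1":
        return total - 1  # every RAID 1 member is a full copy
    # Levels not listed (e.g. raid10) default to 0, which is conservative.
    return {"raid0": 0, "raid4": 1, "raid5": 1, "raid6": 2}.get(level, 0)


def summarize(mdstat_text):
    """Return (name, level, total, active, enough_members) per active array."""
    rows = []
    for name, level, total, active, _slots in ARRAY_RE.findall(mdstat_text):
        total, active = int(total), int(active)
        enough = total - active <= tolerated_failures(level, total)
        rows.append((name, level, total, active, enough))
    return rows


def raid5_capacity_kib(members, used_dev_size_kib):
    """RAID 5 usable size: one member's worth of space holds parity."""
    return (members - 1) * used_dev_size_kib


# Copied from the md3 entry above.
sample = """md3 : active raid5 sdg3[0] sde3[1]
      15606591488 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/2] [UU___]"""
for row in summarize(sample):
    print(row)
print(raid5_capacity_kib(5, 3901647872))
```

For md3 this reports 2 active out of 5 with `enough_members` false: a 5-member RAID 5 needs at least 4 members to serve data, so it stays FAILED until more members are found.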

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

Sorry for not mentioning that the purple screen happened; I totally forgot about it due to some personal issues. I have removed the GPU that caused the purple screen and will upload the output of the commands. I won't touch the server until you say so. Sorry for the trouble, and I appreciate you helping me.

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

The whole server rebooted because I got a purple screen a few days ago. I also removed a few hard drives, but those did not belong to the original RAID; maybe I accidentally added them to the virtual machine and then removed them when I pulled them out of the server. Do you still think this can work?

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

```
ash-4.3# fdisk -l /dev/sd*
Disk /dev/sdb: 16 GiB, 17179869184 bytes, 33554432 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x22d5f435

Device     Boot   Start      End  Sectors  Size Id Type
/dev/sdb1          2048  4982527  4980480  2.4G fd Linux raid autodetect
/dev/sdb2       4982528  9176831  4194304    2G fd Linux raid autodetect
/dev/sdb3       9437184 33349631 23912448 11.4G fd Linux raid autodetect

Disk /dev/sdb1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb3: 11.4 GiB, 12243173376 bytes, 23912448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 16 GiB, 17179869184 bytes, 33554432 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x8504927a

Device     Boot   Start      End  Sectors  Size Id Type
/dev/sdc1          2048  4982527  4980480  2.4G fd Linux raid autodetect
/dev/sdc2       4982528  9176831  4194304    2G fd Linux raid autodetect
/dev/sdc3       9437184 33349631 23912448 11.4G fd Linux raid autodetect

Disk /dev/sdc1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc3: 11.4 GiB, 12243173376 bytes, 23912448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: EB6537B4-CC88-4A1B-99A4-C235A327CFB6

Device       Start        End    Sectors  Size Type
/dev/sde1     2048    4982527    4980480  2.4G Linux RAID
/dev/sde2  4982528    9176831    4194304    2G Linux RAID
/dev/sde3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sde1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

The primary GPT table is corrupt, but the backup appears OK, so that will be used.
Disk /dev/sdf: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: E3D1C69D-D406-4A97-BF00-168262F1025C

Device       Start        End    Sectors  Size Type
/dev/sdf1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdf2  4982528    9176831    4194304    2G Linux RAID
/dev/sdf3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdg: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: AB3D10CC-2A35-4075-AF8B-135C47B30870

Device       Start        End    Sectors  Size Type
/dev/sdg1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdg2  4982528    9176831    4194304    2G Linux RAID
/dev/sdg3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdg1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdg2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdg3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: F681C773-F2AE-452F-8E71-55511FA5AEE0

Device       Start        End    Sectors  Size Type
/dev/sdh1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdh2  4982528    9176831    4194304    2G Linux RAID
/dev/sdh3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdh1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdm3: 4 MiB, 4177408 bytes, 8159 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
```

I don't know what happened, but according to the log it rebooted, yeah.

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

So I ran the first 3 commands, and the third one gave me this:

```
ash-4.3# mdadm -v --create --assume-clean -e1.2 -n5 -l5 /dev/md3 /dev/sdg3 /dev/sde3 /dev/sdf3 /dev/sdh3 missing -uff64862b:9edfe233:c498ea84:9d4b9ffd
mdadm: layout defaults to left-symmetric
mdadm: chunk size defaults to 64K
mdadm: /dev/sdg3 appears to be part of a raid array: level=raid5 devices=5 ctime=Sat Jun 20 00:46:08 2020
mdadm: /dev/sde3 appears to be part of a raid array: level=raid5 devices=5 ctime=Sat Jun 20 00:46:08 2020
mdadm: cannot open /dev/sdf3: No such file or directory
ash-4.3#
```
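The `--create` attempt above stopped at `cannot open /dev/sdf3: No such file or directory`. Because `mdadm --create` writes new superblocks onto its members, a cautious habit is to confirm that every listed member exists as a block device before running it. A minimal Python 3 sketch; `not_block_devices` is a hypothetical helper, and the member list is copied from the command above:

```python
import os
import stat


def not_block_devices(members):
    """Return every listed member that is not an existing block device.

    "missing" is mdadm's placeholder for an empty slot, so it is skipped.
    """
    bad = []
    for path in members:
        if path == "missing":
            continue
        try:
            mode = os.stat(path).st_mode
        except OSError:  # e.g. "No such file or directory"
            bad.append(path)
            continue
        if not stat.S_ISBLK(mode):
            bad.append(path)
    return bad


# Member list copied from the --create command above (order as typed there).
members = ["/dev/sdg3", "/dev/sde3", "/dev/sdf3", "/dev/sdh3", "missing"]
problems = not_block_devices(members)
if problems:
    print("Not safe to run mdadm --create; not block devices:", problems)
else:
    print("All listed members exist as block devices.")
```

This only checks that the paths exist. It does not verify that the member order matches the original array, which is what `--create --assume-clean` relies on.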

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

I see. I thought I used RAID 5, but I'm not sure; the filesystem is ext4 for sure. Let's try. By the way, how did you configure your NAS? I want to do it the best way, but at the moment I'm learning by making mistakes, and I don't know the best way to set it up.

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

I don't. I guess that is something that shows how the RAID is configured?

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

Okay, sorry for the late reply; the lifecycle controller on my server was not doing great and I had to troubleshoot it. I'll redo all the commands because there might have been some changes.

Output of `sudo fdisk -l /dev/sd*`:

```
ash-4.3# sudo fdisk -l /dev/sd*
Disk /dev/sdb: 16 GiB, 17179869184 bytes, 33554432 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x22d5f435

Device     Boot   Start      End  Sectors  Size Id Type
/dev/sdb1          2048  4982527  4980480  2.4G fd Linux raid autodetect
/dev/sdb2       4982528  9176831  4194304    2G fd Linux raid autodetect
/dev/sdb3       9437184 33349631 23912448 11.4G fd Linux raid autodetect

Disk /dev/sdb1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb3: 11.4 GiB, 12243173376 bytes, 23912448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 16 GiB, 17179869184 bytes, 33554432 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x8504927a

Device     Boot   Start      End  Sectors  Size Id Type
/dev/sdc1          2048  4982527  4980480  2.4G fd Linux raid autodetect
/dev/sdc2       4982528  9176831  4194304    2G fd Linux raid autodetect
/dev/sdc3       9437184 33349631 23912448 11.4G fd Linux raid autodetect

Disk /dev/sdc1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc3: 11.4 GiB, 12243173376 bytes, 23912448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: EB6537B4-CC88-4A1B-99A4-C235A327CFB6

Device       Start        End    Sectors  Size Type
/dev/sde1     2048    4982527    4980480  2.4G Linux RAID
/dev/sde2  4982528    9176831    4194304    2G Linux RAID
/dev/sde3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sde1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

The primary GPT table is corrupt, but the backup appears OK, so that will be used.
Disk /dev/sdf: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: E3D1C69D-D406-4A97-BF00-168262F1025C

Device       Start        End    Sectors  Size Type
/dev/sdf1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdf2  4982528    9176831    4194304    2G Linux RAID
/dev/sdf3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdg: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: AB3D10CC-2A35-4075-AF8B-135C47B30870

Device       Start        End    Sectors  Size Type
/dev/sdg1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdg2  4982528    9176831    4194304    2G Linux RAID
/dev/sdg3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdg1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdg2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdg3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: F681C773-F2AE-452F-8E71-55511FA5AEE0

Device       Start        End    Sectors  Size Type
/dev/sdh1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdh2  4982528    9176831    4194304    2G Linux RAID
/dev/sdh3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdh1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdm3: 4 MiB, 4177408 bytes, 8159 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
```

Output of `cat /proc/mdstat`:

```
ash-4.3# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md3 : active raid5 sdg3[0] sde3[1]
      15606591488 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/2] [UU___]

md2 : active raid1 sdb3[0] sdc3[1]
      11955200 blocks super 1.2 [2/2] [UU]

md127 : active raid1 sde1[1] sdg1[0]
      2490176 blocks [12/2] [UU__________]

md1 : active raid1 sdb2[0] sdc2[1] sde2[2] sdg2[3] sdh2[4]
      2097088 blocks [12/5] [UUUUU_______]

md0 : active raid1 sdb1[0] sdc1[1]
      2490176 blocks [12/2] [UU__________]

unused devices: <none>
```

Output of `mdadm --detail /dev/md3`:

```
ash-4.3# mdadm --detail /dev/md3
/dev/md3:
        Version : 1.2
  Creation Time : Sat Jun 20 00:46:08 2020
     Raid Level : raid5
     Array Size : 15606591488 (14883.61 GiB 15981.15 GB)
  Used Dev Size : 3901647872 (3720.90 GiB 3995.29 GB)
   Raid Devices : 5
  Total Devices : 2
    Persistence : Superblock is persistent
    Update Time : Tue Nov 17 03:41:41 2020
          State : clean, FAILED
 Active Devices : 2
Working Devices : 2
 Failed Devices : 0
  Spare Devices : 0
         Layout : left-symmetric
     Chunk Size : 64K
           Name : Dabadoo:2
           UUID : ff64862b:9edfe233:c498ea84:9d4b9ffd
         Events : 39101

    Number   Major   Minor   RaidDevice State
       0       8       99        0      active sync   /dev/sdg3
       1       8       67        1      active sync   /dev/sde3
       -       0        0        2      removed
       -       0        0        3      removed
       -       0        0        4      removed
```

Output of `mdadm --examine /dev/sd[defgh]3 | egrep 'Event|/dev/sd'`:

```
ash-4.3# mdadm --examine /dev/sd[defgh]3 | egrep 'Event|/dev/sd'
mdadm: No md superblock detected on /dev/sdh3.
/dev/sde3:
         Events : 39101
/dev/sdg3:
         Events : 39101
```

Output of `mdadm --examine /dev/sdg3`:

```
ash-4.3# mdadm --examine /dev/sdg3
/dev/sdg3:
          Magic : a92b4efc
        Version : 1.2
    Feature Map : 0x0
     Array UUID : ff64862b:9edfe233:c498ea84:9d4b9ffd
           Name : Dabadoo:2
  Creation Time : Sat Jun 20 00:46:08 2020
     Raid Level : raid5
   Raid Devices : 5
 Avail Dev Size : 7803295744 (3720.90 GiB 3995.29 GB)
     Array Size : 15606591488 (14883.61 GiB 15981.15 GB)
    Data Offset : 2048 sectors
   Super Offset : 8 sectors
   Unused Space : before=1968 sectors, after=0 sectors
          State : clean
    Device UUID : e0c37824:42d56226:4bb0cdcc:d29cca2f
    Update Time : Tue Nov 17 03:41:41 2020
       Checksum : cf00f943 - correct
         Events : 39101
         Layout : left-symmetric
     Chunk Size : 64K
    Device Role : Active device 0
    Array State : AA... ('A' == active, '.' == missing, 'R' == replacing)
```

Output of `mdadm --examine /dev/sdh3`:

```
ash-4.3# mdadm --examine /dev/sdh3
mdadm: No md superblock detected on /dev/sdh3.
```
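The `egrep 'Event|/dev/sd'` output above is the usual quick check before an assembly: mdadm compares the `Events` counter in each member's superblock, and members with a lower count are treated as out of date. A small Python 3 sketch that parses that filtered output; the function names are hypothetical, and the sample string is copied from above:

```python
import re


def event_counts(examine_text):
    """Map each member partition to the Events value in its md superblock.

    Expects the egrep-filtered output, where each "/dev/sdX3:" line is
    directly followed by its "Events : N" line.
    """
    pairs = re.findall(r"(/dev/sd\w+):\s+Events : (\d+)", examine_text)
    return {dev: int(events) for dev, events in pairs}


def no_superblock(examine_text):
    """List partitions for which mdadm reported no md superblock."""
    return re.findall(r"No md superblock detected on (/dev/\w+)\.", examine_text)


def in_sync(counts):
    """True when every member that has a superblock reports the same count."""
    return len(set(counts.values())) <= 1


# Copied from the egrep output above.
sample = """mdadm: No md superblock detected on /dev/sdh3.
/dev/sde3:
         Events : 39101
/dev/sdg3:
         Events : 39101"""
counts = event_counts(sample)
print(counts, in_sync(counts), no_superblock(sample))
```

Here the two members with superblocks agree (39101), but the other slots have either no superblock or no device node at all, so matching counts alone do not give this RAID 5 the four members it needs.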

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

Okay, I had to do this on another virtual machine, because I deleted the other one when I thought it was hopeless :P.

Output of `sudo fdisk -l /dev/sd*`:

```
ash-4.3# sudo fdisk -l /dev/sd*
Disk /dev/sdb: 16 GiB, 17179869184 bytes, 33554432 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x22d5f435

Device     Boot   Start      End  Sectors  Size Id Type
/dev/sdb1          2048  4982527  4980480  2.4G fd Linux raid autodetect
/dev/sdb2       4982528  9176831  4194304    2G fd Linux raid autodetect
/dev/sdb3       9437184 33349631 23912448 11.4G fd Linux raid autodetect

Disk /dev/sdb1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb3: 11.4 GiB, 12243173376 bytes, 23912448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 16 GiB, 17179869184 bytes, 33554432 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x8504927a

Device     Boot   Start      End  Sectors  Size Id Type
/dev/sdc1          2048  4982527  4980480  2.4G fd Linux raid autodetect
/dev/sdc2       4982528  9176831  4194304    2G fd Linux raid autodetect
/dev/sdc3       9437184 33349631 23912448 11.4G fd Linux raid autodetect

Disk /dev/sdc1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc3: 11.4 GiB, 12243173376 bytes, 23912448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 3.7 TiB, 3999688294400 bytes, 7811891200 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: 88AB940C-A74C-425C-B303-3DD15285C607

Device     Start        End    Sectors  Size Type
/dev/sdd1   2048 7811889152 7811887105  3.7T unknown

Disk /dev/sdd1: 3.7 TiB, 3999686197760 bytes, 7811887105 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: EB6537B4-CC88-4A1B-99A4-C235A327CFB6

Device       Start        End    Sectors  Size Type
/dev/sde1     2048    4982527    4980480  2.4G Linux RAID
/dev/sde2  4982528    9176831    4194304    2G Linux RAID
/dev/sde3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sde1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdf: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: AB3D10CC-2A35-4075-AF8B-135C47B30870

Device       Start        End    Sectors  Size Type
/dev/sdf1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdf2  4982528    9176831    4194304    2G Linux RAID
/dev/sdf3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdf1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdf2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdf3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdg: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: F681C773-F2AE-452F-8E71-55511FA5AEE0

Device       Start        End    Sectors  Size Type
/dev/sdg1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdg2  4982528    9176831    4194304    2G Linux RAID
/dev/sdg3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdg1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdg2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdg3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

The primary GPT table is corrupt, but the backup appears OK, so that will be used.
Disk /dev/sdh: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: E3D1C69D-D406-4A97-BF00-168262F1025C

Device       Start        End    Sectors  Size Type
/dev/sdh1     2048    4982527    4980480  2.4G Linux RAID
/dev/sdh2  4982528    9176831    4194304    2G Linux RAID
/dev/sdh3  9437184 7812734975 7803297792  3.6T Linux RAID

Disk /dev/sdi: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdm3: 4 MiB, 4177408 bytes, 8159 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
ash-4.3#
```

Output of `cat /proc/mdstat`:

```
ash-4.3# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md3 : active raid5 sdf3[0] sde3[1]
      15606591488 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/2] [UU___]

md2 : active raid1 sdb3[0] sdc3[1]
      11955200 blocks super 1.2 [2/2] [UU]

md127 : active raid1 sde1[1] sdf1[0]
      2490176 blocks [12/2] [UU__________]

md1 : active raid1 sdb2[0] sdc2[1] sde2[2] sdf2[3] sdg2[4]
      2097088 blocks [12/5] [UUUUU_______]

md0 : active raid1 sdb1[0] sdc1[1]
      2490176 blocks [12/2] [UU__________]

unused devices: <none>
ash-4.3#
```

Output of `mdadm --detail /dev/md3`:

```
ash-4.3# mdadm --detail /dev/md3
/dev/md3:
        Version : 1.2
  Creation Time : Sat Jun 20 00:46:08 2020
     Raid Level : raid5
     Array Size : 15606591488 (14883.61 GiB 15981.15 GB)
  Used Dev Size : 3901647872 (3720.90 GiB 3995.29 GB)
   Raid Devices : 5
  Total Devices : 2
    Persistence : Superblock is persistent
    Update Time : Fri Nov 13 02:22:44 2020
          State : clean, FAILED
 Active Devices : 2
Working Devices : 2
 Failed Devices : 0
  Spare Devices : 0
         Layout : left-symmetric
     Chunk Size : 64K
           Name : Dabadoo:2
           UUID : ff64862b:9edfe233:c498ea84:9d4b9ffd
         Events : 39091

    Number   Major   Minor   RaidDevice State
       0       8       83        0      active sync   /dev/sdf3
       1       8       67        1      active sync   /dev/sde3
       -       0        0        2      removed
       -       0        0        3      removed
       -       0        0        4      removed
ash-4.3#
```

Output of `mdadm --examine /dev/sd[defgh]3 | egrep 'Event|/dev/sd'`:

```
ash-4.3# mdadm --examine /dev/sd[defgh]3 | egrep 'Event|/dev/sd'
mdadm: No md superblock detected on /dev/sdg3.
/dev/sde3:
         Events : 39091
/dev/sdf3:
         Events : 39091
ash-4.3#
```
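Comparing this output with the fdisk dumps in the other replies shows that device letters differ between VMs: the GPT disk identifier `AB3D10CC-2A35-4075-AF8B-135C47B30870` is `/dev/sdf` here but `/dev/sdg` in the other dumps, and md3 lists `sdf3[0]` here versus `sdg3[0]` there. Commands written against one VM therefore need re-checking on another. A minimal Python 3 sketch that pairs disks across two `fdisk -l` dumps by disk identifier (disks without a partition label, such as `/dev/sdd` in some dumps, are skipped); the helper names are hypothetical and the samples are abridged from the outputs in this thread:

```python
import re

# From a whole-disk header ("Disk /dev/sdX:") up to its "Disk identifier:",
# never crossing the next "Disk /dev/" header, so disks without a partition
# label (no identifier line) are skipped instead of borrowing a neighbour's.
DISK_ID_RE = re.compile(
    r"Disk (/dev/sd[a-z]+):(?:(?!Disk /dev/).)*?Disk identifier: (\S+)", re.S
)


def disk_ids(fdisk_text):
    """Map each whole disk in `fdisk -l` output to its disk identifier."""
    return dict(DISK_ID_RE.findall(fdisk_text))


def renamed(run_a, run_b):
    """(identifier, name_in_a, name_in_b) for disks whose device name differs."""
    a = {ident: dev for dev, ident in disk_ids(run_a).items()}
    b = {ident: dev for dev, ident in disk_ids(run_b).items()}
    return sorted(
        (ident, a[ident], b[ident])
        for ident in a.keys() & b.keys()
        if a[ident] != b[ident]
    )


# Abridged from two of the fdisk dumps in this thread.
run_a = (
    "Disk /dev/sde: 3.7 TiB Disklabel type: gpt "
    "Disk identifier: EB6537B4-CC88-4A1B-99A4-C235A327CFB6 "
    "Disk /dev/sdg: 3.7 TiB Disklabel type: gpt "
    "Disk identifier: AB3D10CC-2A35-4075-AF8B-135C47B30870"
)
run_b = (
    "Disk /dev/sde: 3.7 TiB Disklabel type: gpt "
    "Disk identifier: EB6537B4-CC88-4A1B-99A4-C235A327CFB6 "
    "Disk /dev/sdf: 3.7 TiB Disklabel type: gpt "
    "Disk identifier: AB3D10CC-2A35-4075-AF8B-135C47B30870"
)
for ident, old, new in renamed(run_a, run_b):
    print(f"{ident}: {old} -> {new}")
```

Pasting the full dumps in place of the abridged strings should list every disk whose letter changed, since each labelled disk keeps its identifier across VMs.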

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

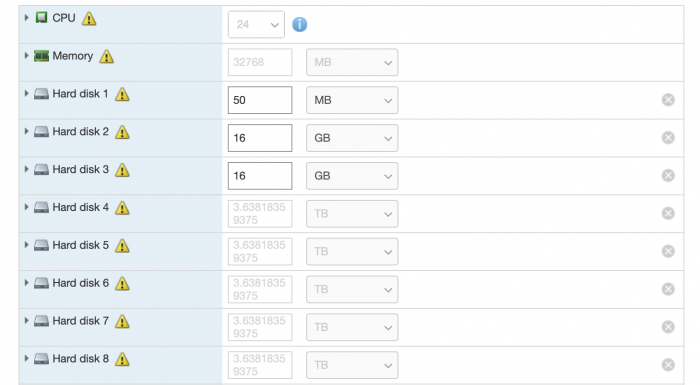

I created the 2x 16 GB disks so I could boot the Synology NAS. I had a DS918+, but the hardware was slow :(. I asked whether you think I won't be able to repair this, because if not, I know it is pointless to keep working on this problem. I should learn more about RAID and how it works; I am too unfamiliar with it.
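On the question of how RAID 5 works: each stripe holds one parity chunk, the byte-wise XOR of the data chunks, so any single missing chunk can be recomputed from the rest, but two or more cannot. A toy Python 3 sketch with made-up 4-byte chunks; real md RAID 5 rotates the parity chunk across members (`left-symmetric` in the outputs above) and uses 64K chunks, which this ignores:

```python
from functools import reduce


def xor_chunks(chunks):
    """Byte-wise XOR of equally sized chunks: the RAID 5 parity operation."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


# Made-up 4-byte chunks standing in for one stripe across 4 data members;
# the 5th member of a 5-disk RAID 5 would hold their parity.
data = [b"ABCD", b"EFGH", b"IJKL", b"MNOP"]
parity = xor_chunks(data)

# Lose any ONE chunk (here data[1]): XOR of the survivors and parity rebuilds it.
survivors = [data[0], data[2], data[3], parity]
print(xor_chunks(survivors) == data[1])

# Lose TWO or more chunks and there is one equation with several unknowns,
# so the missing bytes cannot be determined from what is left.
```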

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

Do you think I won't be able to repair this?

DSM 6.2 on ESXi 6,7, storage pool crashed (Raid5, ext4)

Rihc0 replied to Rihc0's topic in The Noob Lounge

Disk /dev/sdb: 16 GiB, 17179869184 bytes, 33554432 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0xa43eb840

Device Boot Start End Sectors Size Id Type
/dev/sdb1 2048 4982527 4980480 2.4G fd Linux raid autodetect
/dev/sdb2 4982528 9176831 4194304 2G fd Linux raid autodetect
/dev/sdb3 9437184 33349631 23912448 11.4G fd Linux raid autodetect

Disk /dev/sdb1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb3: 11.4 GiB, 12243173376 bytes, 23912448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 16 GiB, 17179869184 bytes, 33554432 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x7a551fe7

Device Boot Start End Sectors Size Id Type
/dev/sdc1 2048 4982527 4980480 2.4G fd Linux raid autodetect
/dev/sdc2 4982528 9176831 4194304 2G fd Linux raid autodetect
/dev/sdc3 9437184 33349631 23912448 11.4G fd Linux raid autodetect

Disk /dev/sdc1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc3: 11.4 GiB, 12243173376 bytes, 23912448 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: AB3D10CC-2A35-4075-AF8B-135C47B30870

Device Start End Sectors Size Type
/dev/sdd1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdd2 4982528 9176831 4194304 2G Linux RAID
/dev/sdd3 9437184 7812734975 7803297792 3.6T Linux RAID

Disk /dev/sdd1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

The primary GPT table is corrupt, but the backup appears OK, so that will be used.
Disk /dev/sde: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: E3D1C69D-D406-4A97-BF00-168262F1025C

Device Start End Sectors Size Type
/dev/sde1 2048 4982527 4980480 2.4G Linux RAID
/dev/sde2 4982528 9176831 4194304 2G Linux RAID
/dev/sde3 9437184 7812734975 7803297792 3.6T Linux RAID

Disk /dev/sdf: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: EB6537B4-CC88-4A1B-99A4-C235A327CFB6

Device Start End Sectors Size Type
/dev/sdf1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdf2 4982528 9176831 4194304 2G Linux RAID
/dev/sdf3 9437184 7812734975 7803297792 3.6T Linux RAID

Disk /dev/sdf1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdf2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdf3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdg: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh: 3.7 TiB, 4000225165312 bytes, 7812939776 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: F681C773-F2AE-452F-8E71-55511FA5AEE0

Device Start End Sectors Size Type
/dev/sdh1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdh2 4982528 9176831 4194304 2G Linux RAID
/dev/sdh3 9437184 7812734975 7803297792 3.6T Linux RAID

Disk /dev/sdh1: 2.4 GiB, 2550005760 bytes, 4980480 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh2: 2 GiB, 2147483648 bytes, 4194304 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdh3: 3.6 TiB, 3995288469504 bytes, 7803297792 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdm3: 4 MiB, 4177408 bytes, 8159 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes

The 5 drives in my Synology right now are the drives I had in the first place.
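The partition rows in the fdisk output can be checked mechanically. fdisk's End column is inclusive, so End - Start + 1 should equal Sectors, and Sectors * 512 should equal the byte count fdisk prints for each partition. The sketch below is a hypothetical helper (not part of DSM, mdadm, or fdisk): it parses the rows pasted above for the four 4 TB disks that printed a GPT, checks that arithmetic, and reports any disk whose layout differs from /dev/sdd. /dev/sdg is left out because no partition table was printed for it in this output. The function names `parse_layouts` and `differing_disks` are made up for illustration.

```python
import re
from collections import defaultdict

SECTOR_SIZE = 512  # bytes, per "Sector size (logical/physical): 512 bytes / 512 bytes"

# Partition rows copied from the fdisk output above (4 TB disks only).
# /dev/sdg is absent because fdisk printed no partition table for it.
FDISK_ROWS = """\
/dev/sdd1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdd2 4982528 9176831 4194304 2G Linux RAID
/dev/sdd3 9437184 7812734975 7803297792 3.6T Linux RAID
/dev/sde1 2048 4982527 4980480 2.4G Linux RAID
/dev/sde2 4982528 9176831 4194304 2G Linux RAID
/dev/sde3 9437184 7812734975 7803297792 3.6T Linux RAID
/dev/sdf1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdf2 4982528 9176831 4194304 2G Linux RAID
/dev/sdf3 9437184 7812734975 7803297792 3.6T Linux RAID
/dev/sdh1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdh2 4982528 9176831 4194304 2G Linux RAID
/dev/sdh3 9437184 7812734975 7803297792 3.6T Linux RAID
"""

# Captures: disk name, partition number, start sector, end sector, sector count.
ROW_RE = re.compile(r"^/dev/(sd[a-z]+)(\d+)\s+(\d+)\s+(\d+)\s+(\d+)\s")


def parse_layouts(text):
    """Map each disk to {partition number: (start, end, sectors)}."""
    layouts = defaultdict(dict)
    for line in text.splitlines():
        m = ROW_RE.match(line)
        if not m:
            continue  # skip headers and anything that is not a partition row
        disk, part = m.group(1), int(m.group(2))
        start, end, sectors = (int(g) for g in m.group(3, 4, 5))
        # The End column is inclusive, so the length is end - start + 1.
        if end - start + 1 != sectors:
            raise ValueError(f"{disk}{part}: start/end do not match sector count")
        layouts[disk][part] = (start, end, sectors)
    return dict(layouts)


def differing_disks(layouts, reference):
    """Return the disks whose partition layout differs from the reference disk."""
    ref = layouts[reference]
    return sorted(d for d, parts in layouts.items() if parts != ref)


if __name__ == "__main__":
    layouts = parse_layouts(FDISK_ROWS)
    print("disks parsed:", sorted(layouts))
    print("differ from sdd:", differing_disks(layouts, "sdd"))
    # Partition 3 holds the RAID5 member; compare with the "Disk /dev/sdd3" line.
    print("sdd3 bytes:", layouts["sdd"][3][2] * SECTOR_SIZE)
```

With the rows above, no disk differs from /dev/sdd, and the data partition works out to 7803297792 * 512 = 3995288469504 bytes, the same figure fdisk reports for /dev/sdd3, /dev/sdf3 and /dev/sdh3. This only confirms the partition tables agree; it says nothing about the state of the md superblocks inside them.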