StanG

Transition Member-

Posts

14 -

Joined

-

Last visited

StanG's Achievements

Newbie (1/7)

2

Reputation

-

It depends on which DSM model you choose. The newer ones will not run on your CPU.

-

The serial number shown in DSM under Control Panel -> Info Center is different from the one in grub.cfg on the synoboot.img boot disk. I believe it's the serial number I made up when I did a clean install to test the new version. I have since changed synoboot.img, and grub.cfg now contains a different serial number, but DSM still shows the old one.

How can I change the serial in DSM to match the one that is now in synoboot.img?

Thanks. I'm using XPEnology on ESXi 6.7, with DS3617XS and Jun's loader 1.03b, running DSM 6.2.3.
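Before changing anything, it may help to confirm which serial the boot image actually carries. The snippet below is a minimal sketch, assuming grub.cfg stores its values as `set key=value` lines and that the serial uses the key `sn`; both are assumptions about the loader's file layout, and the function names are hypothetical. It only reads and compares values, it does not change the serial in DSM.

```python
import re

# Assumption: the loader's grub.cfg holds values as lines like `set sn=XXXX`.
SET_LINE = re.compile(r"^\s*set\s+(\w+)=(\S*)\s*$")


def read_grub_settings(text):
    """Return a dict of the `set key=value` assignments found in grub.cfg text."""
    settings = {}
    for line in text.splitlines():
        match = SET_LINE.match(line)
        if match:
            settings[match.group(1)] = match.group(2)
    return settings


def serial_matches(grub_text, dsm_serial, key="sn"):
    """True if the serial in grub.cfg equals the one DSM reports.

    `key` is a hypothetical default; adjust it to whatever your grub.cfg uses.
    """
    return read_grub_settings(grub_text).get(key) == dsm_serial


if __name__ == "__main__":
    # Placeholder values, not real serials or MAC addresses.
    sample = "set vid=0x0000\nset sn=EXAMPLE0001\nset mac1=001122334455\n"
    print(read_grub_settings(sample))
    print(serial_matches(sample, "OLDSERIAL99"))
```

Pasting the contents of grub.cfg and the serial from Info Center into `serial_matches` shows quickly whether DSM is still reporting a value that is no longer in the boot image.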

-

Okay, thanks for the answer. Made things clearer for me. Will try it that way!

-

I'm really struggling with the update of my production XPEnology system to 6.2.3.

Model: DS3617XS with loader 1.03b
Virtual machine (VM) on ESXi 6.7
DSM currently on 6.2.2, updating to 6.2.3 via automatic update in Control Panel.

I had previously replaced the extra.lzma when updating to 6.2.2 because of problems with VMXNET3 after that update. From reading the topics, I need to revert to the original extra.lzma from loader 1.03b.

So here is what I've done:
1) Started the update in Control Panel.
2) When the VM rebooted, I shut it down.
3) Edited synoboot.img with OSFMount: replaced rd.gz and extra.lzma, and deleted the synochecksum files.
4) Put the changed synoboot.img back on the VM.
5) Booted the VM.
6) Over the serial connection I see some upgrade actions, and then errors after "==== start udevd ====":

==================== start udevd ====================
[ 57.088976] BUG: unable to handle kernel paging request at 0000000700000005
[ 57.090778] IP: [<ffffffff81279455>] strnlen+0x5/0x40
[ 57.092057] PGD 1b2c6e067 PUD 0
[ 57.092925] Oops: 0000 [#1] SMP
[ 57.093791] Modules linked in: e1000e(OF+) dca(F) vxlan fuse vfat fat crc32c_intel aesni_intel glue_helper lrw gf128mul ablk_helper arc4 cryptd ecryptfs sha256_generic sha1_generic ecb aes_x86_64 authenc des_generic ansi_cprng cts md5 cbc cpufreq_conservative cpufreq_powersave cpufreq_performance cpufreq_ondemand mperf processor thermal_sys cpufreq_stats freq_table dm_snapshot crc_itu_t(F) crc_ccitt(F) quota_v2 quota_tree psnap p8022 llc sit tunnel4 ip_tunnel ipv6 zram(C) sg etxhci_hcd nvme(F) hpsa(F) isci(F) arcmsr(F) mvsas(F) mvumi(F) 3w_xxxx(F) 3w_sas(F) 3w_9xxx(F) aic94xx(F) aacraid(F) sx8(F) mpt3sas(OF) mpt2sas(OF) megaraid_sas(F) megaraid(F) megaraid_mbox(F) megaraid_mm(F) BusLogic(F) usb_storage xhci_hcd uhci_hcd ohci_hcd(F) ehci_pci(F) ehci_hcd(F) usbcore usb_common mv14xx(O) nszj(OF) [last unloaded: broadwell_synobios]
[ 57.114013] CPU: 1 PID: 8526 Comm: insmod Tainted: PF C O 3.10.105 #25426
[ 57.115782] Hardware name: VMware, Inc. VMware Virtual Platform/440BX Desktop Reference Platform, BIOS 6.00 12/12/2018
[ 57.118322] task: ffff8801b2c4e800 ti: ffff8801a6884000 task.ti: ffff8801a6884000
[ 57.120118] RIP: 0010:[<ffffffff81279455>] [<ffffffff81279455>] strnlen+0x5/0x40
[ 57.121949] RSP: 0018:ffff8801a68879b0 EFLAGS: 00010086
[ 57.123286] RAX: ffffffff816f5ff2 RBX: ffffffff81972204 RCX: 0000000000000000
[ 57.124996] RDX: 0000000700000005 RSI: ffffffffffffffff RDI: 0000000700000005
[ 57.126708] RBP: 0000000700000005 R08: 000000000000ffff R09: 000000000000ffff
[ 57.128418] R10: 0000000000000000 R11: ffff8801b494b000 R12: ffffffff819725e0
[ 57.130134] R13: 00000000ffffffff R14: 0000000000000000 R15: ffffffff8173eaf4
[ 57.131845] FS: 00007f65d06ce700(0000) GS:ffff8801bfc80000(0000) knlGS:0000000000000000
[ 57.133784] CS: 0010 DS: 0000 ES: 0000 CR0: 000000008005003b
[ 57.135181] CR2: 0000000700000005 CR3: 00000001a626c000 CR4: 00000000000007e0
[ 57.136924] DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000
[ 57.138659] DR3: 0000000000000000 DR6: 00000000ffff0ff0 DR7: 0000000000000400
[ 57.140377] Stack:
[ 57.140887] ffffffff8127b316 ffffffff81972204 ffffffff819725e0 ffff8801a6887b70
[ 57.142826] 00000000000003e0 ffffffff8173eaf4 ffffffff8127c47d ffffffff00000013
[ 57.144746] 0000000000000004 ffffffff81972200 0000000000000001 ffffffffff0a0004
[ 57.146667] Call Trace:
[ 57.147282] [<ffffffff8127b316>] ? string.isra.5+0x36/0xe0
[ 57.148627] [<ffffffff8127c47d>] ? vsnprintf+0x1dd/0x6a0
[ 57.150098] [<ffffffff8127c949>] ? vscnprintf+0x9/0x30
[ 57.151378] [<ffffffff81078613>] ? vprintk_emit+0xb3/0x4e0
[ 57.152730] [<ffffffff813044a0>] ? dev_vprintk_emit+0x40/0x50
[ 57.154159] [<ffffffff81023d18>] ? io_apic_setup_irq_pin+0x1a8/0x2d0
[ 57.155720] [<ffffffff813044e9>] ? dev_printk_emit+0x39/0x40
[ 57.157151] [<ffffffff81304a57>] ? dev_err+0x57/0x60
[ 57.158378] [<ffffffffa04e2c19>] ? e1000_probe+0x729/0xe40 [e1000e]
[ 57.159912] [<ffffffff8116c514>] ? sysfs_do_create_link_sd+0xc4/0x1f0
[ 57.161483] [<ffffffff8129d2f0>] ? pci_device_probe+0x60/0xa0
[ 57.162890] [<ffffffff81307f2a>] ? really_probe+0x5a/0x220
[ 57.164236] [<ffffffff813081b1>] ? __driver_attach+0x81/0x90
[ 57.165619] [<ffffffff81308130>] ? __device_attach+0x40/0x40
[ 57.167004] [<ffffffff81306223>] ? bus_for_each_dev+0x53/0x90
[ 57.168409] [<ffffffff813076c8>] ? bus_add_driver+0x158/0x250
[ 57.169849] [<ffffffffa0504000>] ? 0xffffffffa0503fff
[ 57.171094] [<ffffffff813087b8>] ? driver_register+0x68/0x150
[ 57.172503] [<ffffffffa0504000>] ? 0xffffffffa0503fff
[ 57.173747] [<ffffffff810003aa>] ? do_one_initcall+0xea/0x140
[ 57.175151] [<ffffffff8108be04>] ? load_module+0x1a04/0x2120
[ 57.176537] [<ffffffff81088fc0>] ? store_uevent+0x40/0x40
[ 57.177862] [<ffffffff810f9e53>] ? vfs_read+0xf3/0x160
[ 57.179153] [<ffffffff8108c64d>] ? SYSC_finit_module+0x6d/0x70
[ 57.180590] [<ffffffff814c4dc4>] ? system_call_fastpath+0x22/0x27
[ 57.182083] [<ffffffff814c4d11>] ? system_call_after_swapgs+0xae/0x13f
[ 57.183673] Code: 84 00 00 00 00 00 80 3f 00 74 18 48 89 f8 0f 1f 84 00 00 00 00 00 48 83 c0 01 80 38 00 75 f7 48 29 f8 c3 31 c0 c3 48 85 f6 74 32 <80> 3f 00 74 2d 48 8d 47 01 48 01 fe eb 0f 0f 1f 44 00 00 48 83
[ 57.190541] RIP [<ffffffff81279455>] strnlen+0x5/0x40
[ 57.191827] RSP <ffff8801a68879b0>
[ 57.192680] CR2: 0000000700000005
[ 57.193496] ---[ end trace 612c7642fa8a6c65 ]---
[ 57.204039] Intel(R) Gigabit Ethernet Linux Driver - version 5.3.5.39
[ 57.205657] Copyright(c) 2007 - 2019 Intel Corporation.
[ 57.214950] Intel(R) 10GbE PCI Express Linux Network Driver - version 5.6.3
[ 57.216690] Copyright(c) 1999 - 2019 Intel Corporation.
[ 57.222199] i40e: Intel(R) 40-10 Gigabit Ethernet Connection Network Driver - version 2.4.10
[ 57.224262] i40e: Copyright(c) 2013 - 2018 Intel Corporation.
[ 57.230325] tn40xx: Tehuti Network Driver, 0.3.6.17.2
[ 57.231583] tn40xx: Supported phys : MV88X3120 MV88X3310 MV88E2010 QT2025 TLK10232 AQR105 MUSTANG
[ 57.259568] qed_init called
[ 57.260354] QLogic FastLinQ 4xxxx Core Module qed 8.33.9.0
[ 57.261836] creating debugfs root node
[ 57.269471] qede_init: QLogic FastLinQ 4xxxx Ethernet Driver qede 8.33.9.0
[ 57.275970] Loading modules backported from Linux version v3.18.1-0-g39ca484
[ 57.277732] Backport generated by backports.git v3.18.1-1-0-g5e9ec4c
[ 57.310196] Compat-mlnx-ofed backport release: c22af88
[ 57.311504] Backport based on mlnx_ofed/mlnx-ofa_kernel-4.0.git c22af88
[ 57.313155] compat.git: mlnx_ofed/mlnx-ofa_kernel-4.0.git
[ 57.677368] bnx2x: Broadcom NetXtreme II 5771x/578xx 10/20-Gigabit Ethernet Driver bnx2x 1.78.17-0 (2013/04/11)
[ 57.726815] bio: create slab <bio-1> at 1
[ 57.728208] Btrfs loaded
[ 57.734652] exFAT: Version 1.2.9
[ 57.778162] jme: JMicron JMC2XX ethernet driver version 1.0.8
[ 57.787956] sky2: driver version 1.30
[ 57.797085] pch_gbe: EG20T PCH Gigabit Ethernet Driver - version 1.01
[ 57.806868] QLogic 1/10 GbE Converged/Intelligent Ethernet Driver v5.2.42
[ 57.815936] QLogic/NetXen Network Driver v4.0.80
[ 57.821052] Solarflare NET driver v3.2
[ 57.825916] e1000: Intel(R) PRO/1000 Network Driver - version 7.3.21-k8-NAPI
[ 57.827671] e1000: Copyright (c) 1999-2006 Intel Corporation.
[ 57.832748] pcnet32: pcnet32.c:v1.35 21.Apr.2008 tsbogend@alpha.franken.de
[ 57.838085] VMware vmxnet3 virtual NIC driver - version 1.1.30.0-k-NAPI

And then it hangs on that last line:

[ 57.838085] VMware vmxnet3 virtual NIC driver - version 1.1.30.0-k-NAPI

Nothing further happens. DS Assistant does not see the XPEnology. If I change the NIC to E1000e instead of VMXNET3, the XPEnology boots further and I can log in over serial, but there is no NIC, only "lo", when I run ifconfig.

What am I doing wrong? Thanks for your help.
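When reading a serial capture like the one above, it can help to pull out only the call-trace frames that belong to a loadable module, since the kernel marks those with the module name in square brackets (here `[e1000e]`). The snippet below is a rough sketch of that idea, not a full Oops parser; the regular expression and the function name are my own and assume the frame layout seen in this log.

```python
import re

# A frame looks like: "[<ffffffffa04e2c19>] ? e1000_probe+0x729/0xe40 [e1000e]"
# The trailing "[module]" part is only present for code that lives in a module.
FRAME = re.compile(
    r"\[<[0-9a-f]+>\]\s+\??\s*([\w.]+)\+0x[0-9a-f]+/0x[0-9a-f]+(?:\s+\[(\w+)\])?"
)


def module_frames(log_text):
    """Return (function, module) pairs for frames that name a loadable module."""
    return [(func, mod) for func, mod in FRAME.findall(log_text) if mod]


if __name__ == "__main__":
    # Three frames copied from the serial output in the post.
    excerpt = (
        "[ 57.157151] [<ffffffff81304a57>] ? dev_err+0x57/0x60\n"
        "[ 57.158378] [<ffffffffa04e2c19>] ? e1000_probe+0x729/0xe40 [e1000e]\n"
        "[ 57.159912] [<ffffffff8116c514>] ? sysfs_do_create_link_sd+0xc4/0x1f0\n"
    )
    for func, mod in module_frames(excerpt):
        print(f"{mod}: {func}")
```

Run against this capture, the only module-tagged frame is `e1000_probe` in `e1000e`, which suggests the Oops is raised while that driver probes a device rather than in vmxnet3, even though the vmxnet3 banner is the last line printed.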

-

You can also connect the serial ports of two VMs together. On the DSM VM, configure the serial port as a named pipe "DSMserial1", near end: server, far end: virtual machine. On another (management) VM, configure the serial port as a named pipe "DSMserial1", near end: client, far end: virtual machine. Now open PuTTY (or other terminal software) on the management VM with serial port COM1, and you will get the serial output of the DSM VM. It works like a virtual serial cable from one VM to the other.
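For anyone who prefers to check the result in the VM's .vmx file rather than the UI, here is a small sketch that prints the lines I would expect for each end of the pipe. The mapping from the UI options to these `serialN.*` keys is my assumption, so compare it with what ESXi actually writes before relying on it; the helper name is hypothetical.

```python
def serial_pipe_vmx(pipe_name, end_point, index=0):
    """Build .vmx lines for a named-pipe serial port.

    The key names are an assumption about how the UI settings are stored.
    `end_point` is "server" on the DSM VM and "client" on the management VM.
    """
    if end_point not in ("server", "client"):
        raise ValueError("end_point must be 'server' or 'client'")
    prefix = f"serial{index}"
    return [
        f'{prefix}.present = "TRUE"',
        f'{prefix}.fileType = "pipe"',
        f'{prefix}.fileName = "{pipe_name}"',
        f'{prefix}.pipe.endPoint = "{end_point}"',
    ]


if __name__ == "__main__":
    for role in ("server", "client"):
        print(f"# {role} end")
        print("\n".join(serial_pipe_vmx("DSMserial1", role)))
```

Both VMs use the same pipe name; only the end point differs.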

- 13 replies

-

- 1

-

-

- vm

- serial port

-

(and 1 more)

Tagged with:

-

I replaced the extra.lzma file with the one from the "extra.lzma for loader 1.03b ds3617 DSM 6.2.3 v0.11.2_test" download found here on the forums. I replaced rd.gz and zImage with the files from the DSM_DS3617xs_25426.pat file. Unfortunately the VM will not boot. Serial output shows:

...
linuxrc.syno executed successfully.
Post init
==================== start udevd ====================
[ 19.216538] BUG: unable to handle kernel paging request at 0000000700000005
[ 19.218259] IP: [<ffffffff81279455>] strnlen+0x5/0x40
[ 19.219485] PGD 1b291c067 PUD 0
[ 19.220325] Oops: 0000 [#1] SMP
[ 19.221162] Modules linked in: e1000e(OF+) dca(F) vxlan fuse vfat fat crc32c_intel aesni_intel glue_helper lrw gf128mul ablk_helper arc4 cryptd ecryptfs sha256_generic sha1_generic ecb aes_x86_64 authenc des_generic ansi_cprng cts md5 cbc cpufreq_conservative cpufreq_powersave cpufreq_performance cpufreq_ondemand mperf processor thermal_sys cpufreq_stats freq_table dm_snapshot crc_itu_t(F) crc_ccitt(F) quota_v2 quota_tree psnap p8022 llc sit tunnel4 ip_tunnel ipv6 zram(C) sg etxhci_hcd nvme(F) hpsa(F) isci(F) arcmsr(F) mvsas(F) mvumi(F) 3w_xxxx(F) 3w_sas(F) 3w_9xxx(F) aic94xx(F) aacraid(F) sx8(F) mpt3sas(OF) mpt2sas(OF) megaraid_sas(F) megaraid(F) megaraid_mbox(F) megaraid_mm(F) BusLogic(F) usb_storage xhci_hcd uhci_hcd ohci_hcd(F) ehci_pci(F) ehci_hcd(F) usbcore usb_common mv14xx(O) uuu(OF) [last unloaded: broadwell_synobios]
[ 19.240818] CPU: 3 PID: 7521 Comm: insmod Tainted: PF C O 3.10.105 #25426
[ 19.242538] Hardware name: VMware, Inc. VMware Virtual Platform/440BX Desktop Reference Platform, BIOS 6.00 12/12/2018
[ 19.245021] task: ffff8801b4a64040 ti: ffff8801b30e0000 task.ti: ffff8801b30e0000
[ 19.246772] RIP: 0010:[<ffffffff81279455>] [<ffffffff81279455>] strnlen+0x5/0x40
[ 19.248550] RSP: 0018:ffff8801b30e39b0 EFLAGS: 00010086
[ 19.249795] RAX: ffffffff816f5ff2 RBX: ffffffff81972204 RCX: 0000000000000000
[ 19.251455] RDX: 0000000700000005 RSI: ffffffffffffffff RDI: 0000000700000005
[ 19.253120] RBP: 0000000700000005 R08: 000000000000ffff R09: 000000000000ffff
[ 19.254789] R10: 0000000000000000 R11: 0000000000000a60 R12: ffffffff819725e0
[ 19.256453] R13: 00000000ffffffff R14: 0000000000000000 R15: ffffffff8173eaf4
[ 19.258124] FS: 00007f540bef8700(0000) GS:ffff8801bfd80000(0000) knlGS:0000000000000000
[ 19.260007] CS: 0010 DS: 0000 ES: 0000 CR0: 000000008005003b
[ 19.261354] CR2: 0000000700000005 CR3: 00000001b6b2c000 CR4: 00000000000007e0
[ 19.263031] DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000
[ 19.264727] DR3: 0000000000000000 DR6: 00000000ffff0ff0 DR7: 0000000000000400
[ 19.266384] Stack:
[ 19.266875] ffffffff8127b316 ffffffff81972204 ffffffff819725e0 ffff8801b30e3b70
[ 19.268736] 00000000000003e0 ffffffff8173eaf4 ffffffff8127c47d ffffffff00000013
[ 19.270593] 0000000000000004 ffffffff81972200 0000000000000001 ffffffffff0a0004
[ 19.272460] Call Trace:
[ 19.273055] [<ffffffff8127b316>] ? string.isra.5+0x36/0xe0
[ 19.274365] [<ffffffff8127c47d>] ? vsnprintf+0x1dd/0x6a0
[ 19.275642] [<ffffffff8127c949>] ? vscnprintf+0x9/0x30
[ 19.276874] [<ffffffff81078613>] ? vprintk_emit+0xb3/0x4e0
[ 19.278182] [<ffffffff813044a0>] ? dev_vprintk_emit+0x40/0x50
[ 19.279574] [<ffffffff81023d18>] ? io_apic_setup_irq_pin+0x1a8/0x2d0
[ 19.281081] [<ffffffff8102516d>] ? io_apic_setup_irq_pin_once+0x2d/0x40
[ 19.282657] [<ffffffff8101f7e2>] ? mp_register_gsi+0xa2/0x1a0
[ 19.284024] [<ffffffff813044e9>] ? dev_printk_emit+0x39/0x40
[ 19.285382] [<ffffffff81304a57>] ? dev_err+0x57/0x60
[ 19.286578] [<ffffffffa04cac19>] ? e1000_probe+0x729/0xe40 [e1000e]
[ 19.288069] [<ffffffff8116c514>] ? sysfs_do_create_link_sd+0xc4/0x1f0
[ 19.289594] [<ffffffff8129d2f0>] ? pci_device_probe+0x60/0xa0
[ 19.290963] [<ffffffff81307f2a>] ? really_probe+0x5a/0x220
[ 19.292272] [<ffffffff813081b1>] ? __driver_attach+0x81/0x90
[ 19.293621] [<ffffffff81308130>] ? __device_attach+0x40/0x40
[ 19.294971] [<ffffffff81306223>] ? bus_for_each_dev+0x53/0x90
[ 19.296340] [<ffffffff813076c8>] ? bus_add_driver+0x158/0x250
[ 19.297725] [<ffffffffa04ec000>] ? 0xffffffffa04ebfff
[ 19.298931] [<ffffffff813087b8>] ? driver_register+0x68/0x150
[ 19.300302] [<ffffffffa04ec000>] ? 0xffffffffa04ebfff
[ 19.301509] [<ffffffff810003aa>] ? do_one_initcall+0xea/0x140
[ 19.302879] [<ffffffff8108be04>] ? load_module+0x1a04/0x2120
[ 19.304229] [<ffffffff81088fc0>] ? store_uevent+0x40/0x40
[ 19.305517] [<ffffffff810f9e53>] ? vfs_read+0xf3/0x160
[ 19.306745] [<ffffffff8108c64d>] ? SYSC_finit_module+0x6d/0x70
[ 19.308135] [<ffffffff814c4dc4>] ? system_call_fastpath+0x22/0x27
[ 19.309587] [<ffffffff814c4d11>] ? system_call_after_swapgs+0xae/0x13f
[ 19.311132] Code: 84 00 00 00 00 00 80 3f 00 74 18 48 89 f8 0f 1f 84 00 00 00 00 00 48 83 c0 01 80 38 00 75 f7 48 29 f8 c3 31 c0 c3 48 85 f6 74 32 <80> 3f 00 74 2d 48 8d 47 01 48 01 fe eb 0f 0f 1f 44 00 00 48 83
[ 19.317836] RIP [<ffffffff81279455>] strnlen+0x5/0x40
[ 19.319084] RSP <ffff8801b30e39b0>
[ 19.319914] CR2: 0000000700000005
[ 19.320710] ---[ end trace 1d4f8fc3e63e817d ]---
[ 19.330554] Intel(R) Gigabit Ethernet Linux Driver - version 5.3.5.39
[ 19.332094] Copyright(c) 2007 - 2019 Intel Corporation.
[ 19.341463] Intel(R) 10GbE PCI Express Linux Network Driver - version 5.6.3
[ 19.343215] Copyright(c) 1999 - 2019 Intel Corporation.
[ 19.348697] i40e: Intel(R) 40-10 Gigabit Ethernet Connection Network Driver - version 2.4.10
[ 19.350674] i40e: Copyright(c) 2013 - 2018 Intel Corporation.
[ 19.356763] tn40xx: Tehuti Network Driver, 0.3.6.17.2
[ 19.357991] tn40xx: Supported phys : MV88X3120 MV88X3310 MV88E2010 QT2025 TLK10232 AQR105 MUSTANG
[ 19.384929] qed_init called
[ 19.385721] QLogic FastLinQ 4xxxx Core Module qed 8.33.9.0
[ 19.387060] creating debugfs root node
[ 19.394751] qede_init: QLogic FastLinQ 4xxxx Ethernet Driver qede 8.33.9.0
[ 19.400856] Loading modules backported from Linux version v3.18.1-0-g39ca484
[ 19.402574] Backport generated by backports.git v3.18.1-1-0-g5e9ec4c
[ 19.436087] Compat-mlnx-ofed backport release: c22af88
[ 19.437434] Backport based on mlnx_ofed/mlnx-ofa_kernel-4.0.git c22af88
[ 19.439123] compat.git: mlnx_ofed/mlnx-ofa_kernel-4.0.git
[ 19.809578] bnx2x: Broadcom NetXtreme II 5771x/578xx 10/20-Gigabit Ethernet Driver bnx2x 1.78.17-0 (2013/04/11)
[ 19.862782] bio: create slab <bio-1> at 1
[ 19.864133] Btrfs loaded
[ 19.870727] exFAT: Version 1.2.9
[ 19.912159] jme: JMicron JMC2XX ethernet driver version 1.0.8
[ 19.921916] sky2: driver version 1.30
[ 19.930360] pch_gbe: EG20T PCH Gigabit Ethernet Driver - version 1.01
[ 19.939296] QLogic 1/10 GbE Converged/Intelligent Ethernet Driver v5.2.42
[ 19.949413] QLogic/NetXen Network Driver v4.0.80
[ 19.955714] Solarflare NET driver v3.2
[ 19.961677] e1000: Intel(R) PRO/1000 Network Driver - version 7.3.21-k8-NAPI
[ 19.964106] e1000: Copyright (c) 1999-2006 Intel Corporation.
[ 19.970218] pcnet32: pcnet32.c:v1.35 21.Apr.2008 tsbogend@alpha.franken.de
[ 19.975360] VMware vmxnet3 virtual NIC driver - version 1.1.30.0-k-NAPI

I have a second VM where I tested the upgrade first, and it worked well after replacing the files, but it doesn't work on my live XPEnology. I even copied the synoboot.img file from the working 6.2.3 test VM and replaced the grub.cfg with the one for the live VM, and it still will not boot. I'm baffled. Any hints?

-

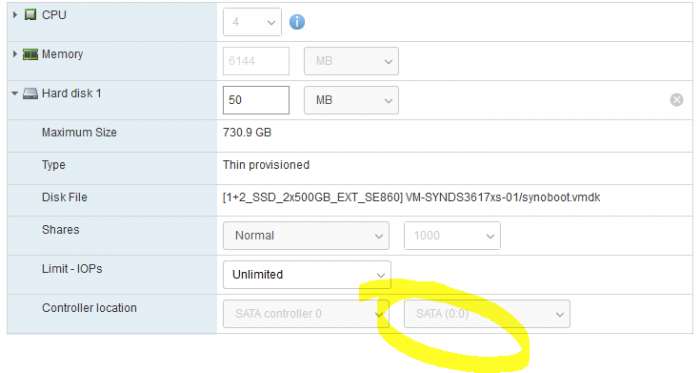

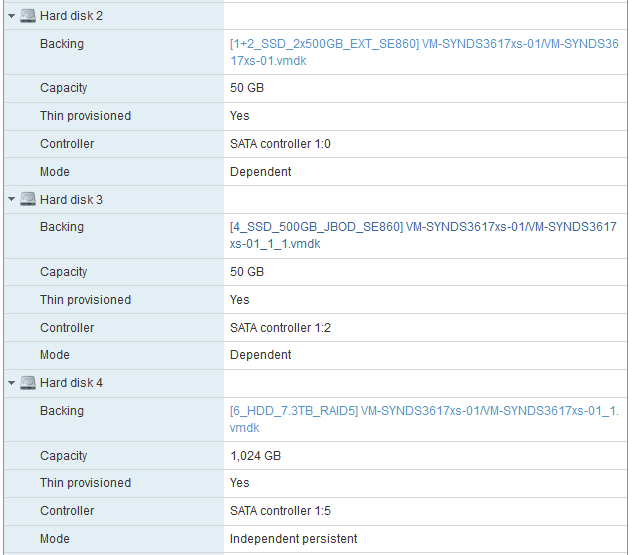

Off-topic: does the 50 MB bootloader disk need to be set to Independent - Nonpersistent?

-

WetJet's Easy All-In-One ds3617 tutorial for esxi 6.7

StanG replied to WetJet43's topic in Tutorials and Guides

Hmmm, I thought the 50 MB drive should be on SATA 0:0. It's like that on my install. The other drives, used for the volumes in DSM, are on SATA 1:x.

-

I tried updating to 6.2.3 the other day, and it would not come back after the reboot. I couldn't find it with find.synology.com or DS Assistant. I tried changing the NICs to E1000e instead of VMXNET3 and removing the USB adapter, but no luck, so I had to restore a backup. Do I need to edit synoboot.img and replace extra.lzma again? I read the post, but it suggests the drivers in extra.lzma will simply be ignored. I put the SynoBoot.sh fix in /usr/etc/rc.local before the update, but from what I've read it only stops the boot device from showing in DSM and isn't even mandatory. Any advice?

-

Hello IG-88, thank you for your message. I can confirm that VMXNET3 and the USB 3.0 controller work perfectly with your updated extra.lzma file. Thanks!

-

Just a friendly warning for other noobs like me who have spent hours troubleshooting. I'm using DS3617xs with loader 1.03b on ESXi 6.7. With DSM 6.2, VMXNET3 adapters work fine, and a USB 2.0 controller can be present on the VM without issues. If you upgrade to 6.2.1+, you need to switch the network card(s) to E1000e (this information is in the loader matrix). But you also need to remove the USB 2.0 controller from the VM, or DSM won't boot; that information is not in the loader matrix. I had to add a serial port that outputs to a file, and see DSM hanging on the EHCI controller, to realize what the issue was. It might work with a USB 3.0 controller; maybe others can confirm or deny, or we will find out later when we try.

-

StanG joined the community

-

Will it work on an Intel(R) Xeon(R) CPU E5645? I'm having no luck with the DS918+ loader. It doesn't show up in Assistant or on find.synology.com. The DS3615xs works fine.

- 4 replies

-

- esxi 6.7

- microserver gen8

-

(and 1 more)

Tagged with: