Silvas

Rookie-

Posts

4 -

Everything posted by Silvas

-

Well, I've given up on this. I had this working once upon a time, and everything was perfectly fine until I had to shut down my server to add a PCIe card for another VM. I shut down the VMs gracefully, shut down VMware gracefully, powered off the server and disconnected all the cables so I could pull it out and open it, put in the card, and plugged all the cables back into the same spots (yes, I made sure the SAS cables went back to the same ports they had been plugged into before, I didn't swap them). When I rebooted the VM, my array crashed, and that's when the saga of missing disks began.

After I got it up the day before yesterday with the duplicate disk and got an array set up (not using the duplicate disk), I once again had to reboot the server for other VM stuff. I had to turn on passthrough on another card, and that needed an ESXi reboot. I didn't even unplug cables this time. When I started my Xpenology VM up, it said my disks had been moved from another Synology unit and I had to reinstall. Failed to format the disk. So I shut the VM down, attached the SAS controller to a Linux VM and formatted the drives, went back to Xpenology... failed to format the disk.

I deleted the VM, started over from scratch, formatted and/or wiped the drives in Windows and in Linux, zero-filled the first 5GB of the drives, and made them into VMware datastores then wiped them (this has worked in the past to get me past the "failed to format the disk" error). Nothing. Every time I started up the VM and tried to install: failed to format the disk. I spent more than 8 hours trying to get this damn thing to format the disks again. The drives work fine everywhere else I attach them: Linux, Windows, VMware, and now Unraid. All report SMART status healthy, and none have given me any errors elsewhere. It's only in DSM in my VM that I seem to be running into so much trouble.
The reason I don't want to let VMware handle the drives and just attach them to the Xpenology VM as RDMs is that I would lose the ability to use my SSDs for cache. From what I've read, there is also a performance penalty to using drives as RDMs vs. passing the controller through and letting the VM go directly to the hardware, since you're adding another abstraction layer. From what I can see on here, the mpt2sas driver (which is what the LSI SAS card would be using) seems to work fine for people, so I don't think the problem is that I'm using a SAS card and SAS drives. Besides, I have had this working before. I suspect it has something to do with the SAS enclosure (maybe the particular enclosure I'm using does something that DSM doesn't like); if I had a 12-bay LFF server it might work better.

In any case, it seems like DSM with the Xpenology loader in a VM just isn't going to work for my situation. I wanted to make this work because I love my DS918+; DSM has so many features and is so user friendly. I'm an IT guy, so I can deal with less friendly systems, but it's nice to have one that just makes doing things quick and simple. But if I can't have it running stable, and I have to worry about whether it's going to suddenly freak out on me after a graceful VM and server reboot, then I'm going to have to abandon it.

-

Yes, I only have SATA controller 0, with 1 VHD on SATA0:0 for the vmdk that points to synoboot.img. I also have the LSI SAS HBA passed through to the VM. This is the process I am using to set up Xpenology (except that at the end, instead of adding VM disks for the data drives, I just add the SAS HBA).

-

The hardware:

- HP DL380p G8
- 2x E5-2640
- 256GB RAM
- LSI 9200-16e SAS HBA (2 SAS links to the enclosure, 1 to each controller)
- Xyratex HB-1235 12-bay enclosure with dual SAS 6Gb/s controllers
- 6x HGST 4TB 12Gb/s SAS HDDs
- 1x HP Seagate 2TB 6Gb/s SAS HDD
- 2x 250GB SATA 6Gb/s SSDs (with SAS-SATA interposers)

The virtual environment:

- ESXi 6.5u3, HP customized image for DL380p G8

The VM:

- 4 cores
- 32GB RAM
- 50MB VHD on VMware datastore
- E1000e NIC
- LSI SAS HBA passed through to the VM

Xpenology:

- Jun's loader 1.03b, DS3617 image
- DSM 6.2.2-24922 update 6
- Using the 6x 4TB HGST drives in a RAID5 volume
- Not currently using the 2TB
- 2x 250GB SSDs as cache (RAID1)

The problem:

- Either the drives all show up in the shell using fdisk -l but some are missing in DSM, or (the newest iteration) drives are duplicated in DSM.
- I've tried modifying the internal port and number of drives parameters, making them more, making them less, to no avail.
- I've tried varying combinations of driver extensions, with no effect or making the problem worse.

For weeks I struggled with this, with only 4 of the 6 drives and sometimes only 1 of the 2 SSDs showing up in DSM unless I put them in different slots in the enclosure (there were 3 slots where the drives would show up in the controller at server boot, in ESXi if I stopped passing the HBA through, in other VMs, and in the shell on my Xpenology VM, but not in DSM).

At that time my HBA was on P14 firmware. I swapped over to a 9200-8e running P20.00.70.00: same results. I swapped out the SAS cables between the HBA and the enclosure for brand new ones: same results.

I gave up for a month or so and was about to spin up an Unraid VM to see how it looked and worked, and maybe just swap to Unraid permanently, but I decided to take another crack at it beforehand. I swapped my 9200-16e back in, upgraded the firmware and BIOS to the latest P20.00.70.00, wiped all the drives in the enclosure clean, and set up my Xpenology VM again. Now all the drives show up.
They show up as 33-42, but I don't really care about the numbering. What bothers me is that DSM is showing 1 more drive than I should have. Drives 36 and 37 are duplicates of each other (they show the same SN). None of the other drives show up duplicated in DSM, and all drives appear as I would expect in the shell using fdisk -l: it shows 12x 4TB, 2x 2TB, and 4x 250GB, which is exactly what I expect given the dual links to the enclosure.

The enumeration is wonky AF: it starts at /dev/sdy and goes to /dev/sdap. I can't figure out why in the hell it's skipping all the way to /dev/sdy to start enumerating, but everywhere I look I read about LSI enumeration shenanigans with enclosures, and it seems that there's nothing that can be done about it. Regardless, the drives are enumerating, and always have been no matter what DSM was doing.

Right now at least all my drives are showing up, but since it's now duplicating one, it clearly still isn't quite right. That makes me concerned that if I have to shut down the VM or the server for some reason, one time I'm going to come back up to missing drives again. I'm hesitant to trust it with any data. Any ideas?
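As a quick sanity check on the numbers above, here is a minimal Python sketch of the arithmetic. It assumes the usual Linux sd naming order (sda ... sdz, then sdaa, sdab, ...) and that every physical drive is seen once per SAS link; the helper name `sd_index` is made up for illustration. It only confirms that the span /dev/sdy to /dev/sdap covers the number of devices fdisk -l reports; it says nothing about why DSM duplicates a drive.

```python
def sd_index(name: str) -> int:
    """Zero-based position of an sd device name (sda -> 0, sdz -> 25, sdaa -> 26)."""
    # Drop any "/dev/" prefix, then the leading "sd".
    suffix = name.split("/")[-1][2:]
    index = 0
    for ch in suffix:
        # Letters behave like bijective base-26 digits: a=1 ... z=26.
        index = index * 26 + (ord(ch) - ord("a") + 1)
    return index - 1


# Device names quoted in the post.
first, last = "/dev/sdy", "/dev/sdap"
device_count = sd_index(last) - sd_index(first) + 1

# Physical drives from the hardware list: 6x 4TB, 1x 2TB, 2x 250GB SSD.
physical_drives = 6 + 1 + 2
sas_links = 2  # one link to each enclosure controller (assumed: one path per link)
expected_paths = physical_drives * sas_links

print(device_count, expected_paths)
```

Both values come out the same, which matches the 12x 4TB, 2x 2TB, and 4x 250GB listing: the shell sees each drive once per path, so the kernel-side enumeration is consistent with the hardware even though it starts at sdy.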

-

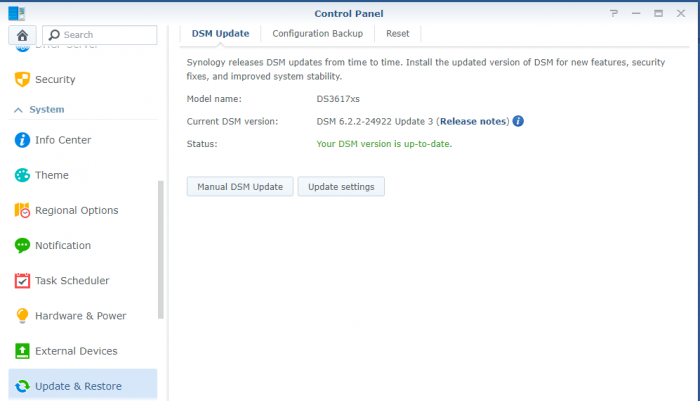

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.2.2-24922
- Loader version and model: JUN'S LOADER v1.03b - DS3617xs
- Using custom extra.lzma: NO
- Installation type: VM - ESXi 6.5u3 on HP DL380p G8, LSI 2116 w/SAS drives (mapped as raw drives to SATA1:x in the VM)
- Additional comments: Make sure the USB 2.0 controller is removed or changed to USB 3.0, and the NIC type is E1000e, BEFORE updating. The update only showed up on Synology's site after I went to the upgrade path tool and set it to upgrade from 6.2.2-23739 to 6.2.2-24922. It then listed 6.2.2-24922 as the first step and 6.2.2-24922-3 as the second step.