NooL

Member · Posts: 147 · Advanced Member (4/7) · Reputation: 21

New SATA/AHCI cards with more than 4 ports (and no SATA multiplexer)

NooL replied to IG-88's topic in Hardware Modding

@IG-88 Have you had any experience with these? https://www.aliexpress.com/item/1005004220424617.html

Hi Hammond, I believe the default SSH password is: Redp1IL-1s-4weSomE

Best regards

-

What does the option to show attached drives (in the loader) show? That will help.

-

Hi Jurgen,

No need to apologize for your language; I think most of us here are not native English speakers, so no problem there.

Regarding your problems: the PSU warnings are a known limitation of the RS4021xs model at the moment. As far as I know, there have been no attempts to find or modify the warnings that come with it, so for now it's just something you have to live with.

Regarding the HBA order: this is a known issue. DSM will put the HBA drives in a random order at boot. I believe efforts were made towards a workaround for this in the following thread, but I have no idea whether it works.

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

NooL replied to Peter Suh's topic in Software Modding

Well, reading a bit more, it seems there is also something wrong with the NIC driver, as this does not look right:

    [ 9.600202] ixgbe 0000:06:11.0: Multiqueue Enabled: Rx Queue count = 6, Tx Queue count = 6
    [ 9.686150] ixgbe 0000:06:11.0: 0.000 Gb/s available PCIe bandwidth (Unknown speed x255 link)
    [ 9.735199] ixgbe 0000:06:11.0 eth0: MAC: 4, PHY: 9, PBA No: H86377-005
    [ 9.735807] ixgbe 0000:06:11.0: 00:11:32:a9:b0:a6
    [ 9.736211] ixgbe 0000:06:11.0 eth0: Enabled Features: RxQ: 6 TxQ: 6 FdirHash vxlan_rx
    [ 9.742887] ixgbe 0000:06:11.0 eth0: Intel(R) 10 Gigabit Network Connection
    [ 10.126559] ixgbe 0000:06:11.1: Multiqueue Enabled: Rx Queue count = 6, Tx Queue count = 6
    [ 10.212914] ixgbe 0000:06:11.1: 0.000 Gb/s available PCIe bandwidth (Unknown speed x255 link)
    [ 10.266756] ixgbe 0000:06:11.1 eth1: MAC: 4, PHY: 9, PBA No: H86377-005
    [ 10.267428] ixgbe 0000:06:11.1: 00:11:32:a9:b0:a7
    [ 10.267902] ixgbe 0000:06:11.1 eth1: Enabled Features: RxQ: 6 TxQ: 6 FdirHash vxlan_rx
    [ 10.274831] ixgbe 0000:06:11.1 eth1: Intel(R) 10 Gigabit Network Connection
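To compare what the driver reports for each port of the X520 in the ixgbe log above, here is a minimal Python sketch that groups ixgbe dmesg lines by PCI address and pulls out the interface name and the reported PCIe bandwidth. It assumes the lines follow exactly the format in that log (timestamp, `ixgbe`, PCI address, optional `ethN`); the names `summarize_ixgbe`, `LINE_RE`, `BW_RE` and `SAMPLE` are illustrative, not part of any tool.

```python
import re
from collections import defaultdict

# One ixgbe dmesg line: "[ts] ixgbe <pci-address>[ <iface>]: <message>"
LINE_RE = re.compile(
    r"\[\s*\d+\.\d+\]\s+ixgbe\s+"
    r"(?P<pci>[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])"
    r"(?:\s+(?P<iface>eth\d+))?:\s+(?P<msg>.*)"
)
# The figure from "... Gb/s available PCIe bandwidth (...)"
BW_RE = re.compile(r"(?P<gbps>\d+\.\d+) Gb/s available PCIe bandwidth")


def summarize_ixgbe(log):
    """Group ixgbe messages by PCI address.

    Returns {pci_address: {"iface": str or None, "pcie_gbps": float or None}}.
    """
    ports = defaultdict(lambda: {"iface": None, "pcie_gbps": None})
    for line in log.splitlines():
        m = LINE_RE.search(line)
        if not m:
            continue  # not an ixgbe line in the expected format
        port = ports[m["pci"]]
        if m["iface"]:
            port["iface"] = m["iface"]
        bw = BW_RE.search(m["msg"])
        if bw:
            port["pcie_gbps"] = float(bw["gbps"])
    return dict(ports)


# A few lines copied from the log above.
SAMPLE = """\
[ 9.686150] ixgbe 0000:06:11.0: 0.000 Gb/s available PCIe bandwidth (Unknown speed x255 link)
[ 9.735199] ixgbe 0000:06:11.0 eth0: MAC: 4, PHY: 9, PBA No: H86377-005
[ 10.212914] ixgbe 0000:06:11.1: 0.000 Gb/s available PCIe bandwidth (Unknown speed x255 link)
[ 10.266756] ixgbe 0000:06:11.1 eth1: MAC: 4, PHY: 9, PBA No: H86377-005
"""

for pci, info in sorted(summarize_ixgbe(SAMPLE).items()):
    print(pci, info["iface"], info["pcie_gbps"])
```

For the sample this prints `0000:06:11.0 eth0 0.0` and `0000:06:11.1 eth1 0.0`, i.e. both ports report the same 0.000 Gb/s figure, so the message is not specific to one port.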

TinyCore RedPill Loader Build Support Tool ( M-Shell )

NooL replied to Peter Suh's topic in Software Modding

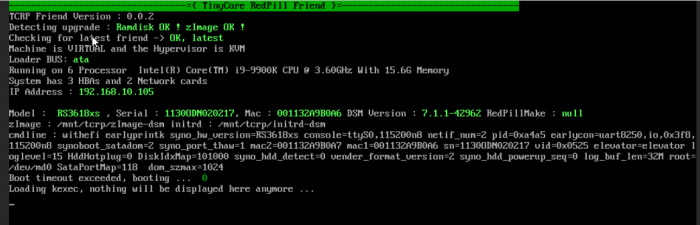

Hi Peter,

Strange indeed. I tried to capture what I could during startup, before it shuts down again (is there a way to save it all to disk? The buffer seems to cut off before I can copy it all). It seems to stay at "Fuse init" until I try to access it via HTTP, and then it begins shutting down. I notice there are a few "taints kernel" messages; is that normal?

I just tried installing DS3622xs with ARPL and that works fine (I have also previously used your own RS4021xs with the same setup, but stopped due to the power supply warnings), so I'm not sure this is a network card issue. Or maybe there are multiple issues?
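Whether a "taints kernel" message matters depends on which taint flag was set. A minimal Python sketch, assuming you have a shell on the booted system, that decodes the number the kernel exposes in `/proc/sys/kernel/tainted` using the bit table from the Linux kernel's tainted-kernels documentation (bits 0 to 17; newer kernels define more). The function names are illustrative, and the example value is made up rather than taken from this system.

```python
# Taint bits as documented in the Linux kernel source,
# Documentation/admin-guide/tainted-kernels.rst (bits 0-17).
TAINT_FLAGS = {
    0: ("P", "proprietary module was loaded"),
    1: ("F", "module was force loaded"),
    2: ("S", "kernel running on an out-of-specification system"),
    3: ("R", "module was force unloaded"),
    4: ("M", "processor reported a Machine Check Exception"),
    5: ("B", "bad page referenced or unexpected page flags"),
    6: ("U", "taint requested by userspace application"),
    7: ("D", "kernel died recently (OOPS or BUG)"),
    8: ("A", "ACPI table overridden by user"),
    9: ("W", "kernel issued warning"),
    10: ("C", "staging driver was loaded"),
    11: ("I", "workaround for bug in platform firmware applied"),
    12: ("O", "externally-built (out-of-tree) module was loaded"),
    13: ("E", "unsigned module was loaded"),
    14: ("L", "soft lockup occurred"),
    15: ("K", "kernel has been live patched"),
    16: ("X", "auxiliary taint, defined for and used by distros"),
    17: ("T", "kernel was built with the struct randomization plugin"),
}


def decode_taint(value):
    """Return (bit, letter, reason) for every bit set in a taint value."""
    if value < 0:
        raise ValueError("taint value must be non-negative")
    flags = []
    for bit in range(value.bit_length()):
        if (value >> bit) & 1:
            letter, reason = TAINT_FLAGS.get(bit, ("?", "bit not in this table"))
            flags.append((bit, letter, reason))
    return flags


def read_taint(path="/proc/sys/kernel/tainted"):
    """Read the current taint value (needs a shell on the booted system)."""
    with open(path) as f:
        return int(f.read().strip())


# Example with a made-up value: 4096 + 8192 sets bits 12 (O) and 13 (E).
for bit, letter, reason in decode_taint(12288):
    print(bit, letter, reason)
```

On the live system, `decode_taint(read_taint())` would list the flags actually set; an out-of-tree or unsigned module (O/E) is a different situation from a warning or an OOPS (W/D).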

TinyCore RedPill Loader Build Support Tool ( M-Shell )

NooL replied to Peter Suh's topic in Software Modding

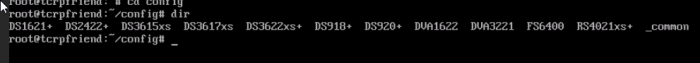

Hi Peter,

It's just a matter of clicking Add Device (PCI Device), selecting the device you want to pass through, and perhaps ticking "All Functions"; that's it. I'm not sure why it's failing on my end, as this is a fairly simple setup.

Any idea why the conf folders are missing for RS3618xs? They are there for all the other models, so it seems like an error somewhere?
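The GUI steps above can also be expressed as a `qm set` command. As I understand the Proxmox VE documentation, giving a `hostpciN` value of only `bus:dev` (no `.func`) passes all functions of the device, the same as ticking "All Functions". This minimal Python sketch builds that command string; the VM id `100`, the address `01:00.0` and the helper names are hypothetical, and non-zero PCI domains are deliberately rejected rather than guessed at.

```python
import re

# Host PCI address as printed by lspci, optionally with the "0000:" domain.
PCI_RE = re.compile(
    r"^(?:(?P<domain>[0-9a-fA-F]{4}):)?"
    r"(?P<bus>[0-9a-fA-F]{2}):(?P<dev>[0-9a-fA-F]{2})\.(?P<func>[0-7])$"
)


def hostpci_value(address, all_functions=False):
    """Build the value for a Proxmox hostpciN option.

    Dropping the ".func" part (bus:dev only) selects every function of the
    device, which corresponds to "All Functions" in the web interface.
    """
    m = PCI_RE.match(address)
    if not m:
        raise ValueError(f"not a PCI address: {address!r}")
    if m["domain"] not in (None, "0000"):
        raise ValueError("non-zero PCI domains are not handled by this sketch")
    value = f"{m['bus']}:{m['dev']}"
    if not all_functions:
        value += f".{m['func']}"
    return value


def qm_set_command(vmid, index, address, all_functions=False):
    """Command-line equivalent of Add > PCI Device for one device."""
    return f"qm set {vmid} -hostpci{index} {hostpci_value(address, all_functions)}"


# Hypothetical VM id 100 and host device 0000:01:00.0:
print(qm_set_command(100, 0, "0000:01:00.0", all_functions=True))
```

This prints `qm set 100 -hostpci0 01:00`; without `all_functions=True` it would be `-hostpci0 01:00.0`, passing only function 0 (for a dual-port NIC, typically just one of the ports).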

TinyCore RedPill Loader Build Support Tool ( M-Shell )

NooL replied to Peter Suh's topic in Software Modding

Thank you. If it's any help, this is the VM: the first PCI device is the SATA controller, the second is an Intel X520-T2 dual-port NIC.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

NooL replied to Peter Suh's topic in Software Modding

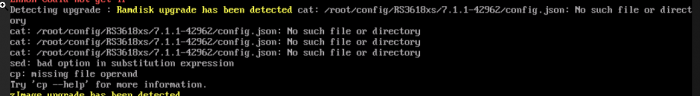

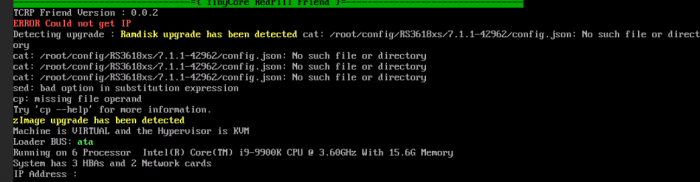

Hi @Peter Suh and @pocopico,

Thank you for your reply, much appreciated. I tried this just now with a fresh install, but unfortunately the result remains the same.

After installing the .pat file and the system doing its installation reboot, I get the screen shown above. After Ctrl-C and running `./boot.sh patchkernel`, I get:

Patching Kernel
Kernel patched, Sha256sum: (long signature)

Then I run `./boot.sh`. After a bit I can see the system start responding to pings again. I can hear the hard disks working, but after a while the VM shuts down (I can hear the disks spinning down and then the VM turns off). I can reproduce this every time.

If I look in the config folder, there is no RS3618xs folder, which I think is also what the system complains about in the first screenshot. Shouldn't this folder (and its subfolders) be there?
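To check which model folders are present in the loader's config folder, a minimal Python sketch. The path in `CONFIG_ROOT` is a hypothetical placeholder, not confirmed from the loader; point it at wherever the per-model config folders actually live. Since folder names may or may not carry a trailing `+` (e.g. `DS3622xs+`), the comparison ignores it; the model names are the ones mentioned in these posts.

```python
from pathlib import Path

# Hypothetical location; adjust to where your loader keeps its
# per-model config folders.
CONFIG_ROOT = "/home/tc/redpill-load/config"


def model_dirs(config_root):
    """Names of the sub-folders of config_root ([] if it does not exist)."""
    root = Path(config_root)
    if not root.is_dir():
        return []
    return sorted(p.name for p in root.iterdir() if p.is_dir())


def missing_models(dir_names, models):
    """Models with no matching folder; a trailing '+' is ignored on both sides."""
    present = {name.rstrip("+") for name in dir_names}
    return [m for m in models if m.rstrip("+") not in present]


print(missing_models(model_dirs(CONFIG_ROOT), ["DS3622xs", "RS4021xs", "RS3618xs"]))
```

With folders for `DS3622xs+` and `RS4021xs+` only, `missing_models` returns `["RS3618xs"]`, which matches what the screenshots above report.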

TinyCore RedPill Loader Build Support Tool ( M-Shell )

NooL replied to Peter Suh's topic in Software Modding

Unfortunately this does not seem to be a one-time thing: I just tried again and exactly the same thing happens. There is no RS3618xs folder in the config folder, so it seems to fail from there.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

NooL replied to Peter Suh's topic in Software Modding

Hi @Peter Suh,

I tried to install the RS3618xs with the Friend option, but was not successful. The system boots, I find it in Web Assistant and upload the .pat file. It installs, but after the first reboot TCRP Friend looks like this:

And then I either get an install loop (prompted to install it again) or my VM simply shuts down. Any idea what's wrong? This is a virtual install on Proxmox. Disregard the "Could not get IP"; the system gets an IP 10 seconds after this.

I believe you need this (which I also believe is selectable as an add-on in ARPL?):