chickenstealers

Posts posted by chickenstealers

-


3 minutes ago, flyride said:

Just for fun,

# mkdir /mnt/volume1

# mount -o recovery,ro /dev/md2 /mnt/volume1

and see if you can mount the filesystem somewhere else.

If nothing works, you may want to do a btrfs file restore

I mounted it under volume2 instead, because volume2 has 1 TB of space and is working well.

IPUserverOLD:~# mkdir /volume2/volume1
IPUserverOLD:~# mount -o recovery,ro /dev/md2 /volume2/volume1
mount: wrong fs type, bad option, bad superblock on /dev/md2, missing codepage or helper program, or other error
In some cases useful info is found in syslog - try dmesg | tail or so.

And this is the dmesg | tail response:

IPUserverOLD:~# dmesg | tail
[ 1516.107893] md/raid1:md2: syno_raid1_self_heal_set_and_submit_read_bio(1226): No suitable device for self healing retry read at round 2 at sector 330375376
[ 1516.108290] md/raid1:md2: syno_raid1_self_heal_set_and_submit_read_bio(1226): No suitable device for self healing retry read at round 2 at sector 330375368
[ 1516.108688] parent transid verify failed on 165385699328 wanted 587257 found 587265
[ 1516.108693] BTRFS error (device md2): BTRFS: md2 failed to repair parent transid verify failure on 165385699328, mirror = 2
[ 1516.131426] BTRFS: open_ctree failed
[ 1539.876552] CPU count larger than MAX_CPU 2: 2
[ 1539.876556] CPU count larger than MAX_CPU 2: 2
[ 1600.059867] CPU count larger than MAX_CPU 2: 2
[ 1600.059871] CPU count larger than MAX_CPU 2: 2

-

12 minutes ago, flyride said:

Stale file handle is an odd error message. Have you rebooted this NAS? Are you using NFS? If you are, disable it.

I have rebooted the NAS, but I still get the same error:

IPUserverOLD:~# sudo mount -o recovery,ro /dev/md2 /volume1
mount: mount /dev/md2 on /volume1 failed: Stale file handle

NFS is disabled.

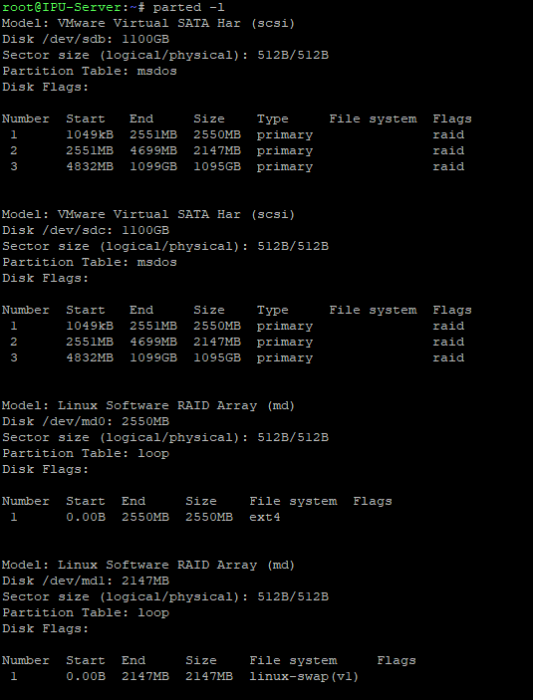

Also, I'm using VMware ESXi 6.7, and Xpenology is installed inside ESXi on a virtual drive.

-

Here are the results of the next commands, based on the thread you recommended.

btrfs check --init-extent-tree /dev/md2

root@IPUserverOLD:~# btrfs check --init-extent-tree /dev/md2
Syno caseless feature off.
Checking filesystem on /dev/md2
UUID: 9021108d-e771-4e90-8b1c-ec4f5da3de97
Creating a new extent tree
Failed to find [164311859200, 168, 16384]
btrfs unable to find ref byte nr 165385601024 parent 0 root 1 owner 1 offset 0
Failed to find [406977511424, 168, 16384]
btrfs unable to find ref byte nr 408051253248 parent 0 root 1 owner 0 offset 1
parent transid verify failed on 408051269632 wanted 587260 found 587262
Ignoring transid failure
Failed to find [165385633792, 168, 16384]
btrfs unable to find ref byte nr 165385682944 parent 0 root 1 owner 0 offset 1
checking extents
parent transid verify failed on 138367680512 wanted 587198 found 587196
parent transid verify failed on 138367680512 wanted 587198 found 587196
parent transid verify failed on 138367680512 wanted 587198 found 587196
parent transid verify failed on 138367680512 wanted 587198 found 587196
Ignoring transid failure
leaf parent key incorrect 138367680512
bad block 138367680512
Errors found in extent allocation tree or chunk allocation
Error: could not find extent items for root 257

btrfs check --init-csum-tree /dev/md2

root@IPUserverOLD:~# btrfs check --init-csum-tree /dev/md2
Creating a new CRC tree
Syno caseless feature off.
Checking filesystem on /dev/md2
UUID: 9021108d-e771-4e90-8b1c-ec4f5da3de97
Reinit crc root
checking extents
parent transid verify failed on 138367565824 wanted 587198 found 587196
parent transid verify failed on 138367565824 wanted 587198 found 587196
parent transid verify failed on 138367565824 wanted 587198 found 587196
parent transid verify failed on 138367565824 wanted 587198 found 587196
Ignoring transid failure
bad block 138367565824
Errors found in extent allocation tree or chunk allocation
Error: could not find extent items for root 257

I then tried to repair with btrfs check --repair /dev/md2 and to mount using mount -o recovery,ro /dev/md2 /volume1, still with no luck:

root@IPUserverOLD:~# btrfs check --repair /dev/md2
enabling repair mode
Syno caseless feature off.
Checking filesystem on /dev/md2
UUID: 9021108d-e771-4e90-8b1c-ec4f5da3de97
checking extents
parent transid verify failed on 138367565824 wanted 587198 found 587196
parent transid verify failed on 138367565824 wanted 587198 found 587196
parent transid verify failed on 138367565824 wanted 587198 found 587196
parent transid verify failed on 138367565824 wanted 587198 found 587196
Ignoring transid failure
bad block 138367565824
Errors found in extent allocation tree or chunk allocation
Error: could not find extent items for root 257
root@IPUserverOLD:~# mount -o recovery,ro /dev/md2 /volume1
mount: mount /dev/md2 on /volume1 failed: Stale file handle

-

14 minutes ago, flyride said:

In that case you only have filesystem repair options, or extract files in a recovery mode.

If you have no other plan, start here and follow the subsequent posts until resolution.

Hi, thanks for your reply

This is the result of the first suggestion.

sudo mount -o recovery,ro /dev/md2 /volume1

IPUserverOLD:~# sudo mount -o recovery,ro /dev/md2 /volume1
mount: mount /dev/md2 on /volume1 failed: Stale file handle

sudo btrfs rescue super /dev/md2

IPUserverOLD:~# sudo btrfs rescue super /dev/md2
All supers are valid, no need to recover

sudo btrfs-find-root /dev/md2

root@IPUserverOLD:~# sudo btrfs-find-root /dev/md2
Superblock thinks the generation is 587261
Superblock thinks the level is 1
Found tree root at 165385601024 gen 587261 level 1
Well block 30048256(gen: 587252 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 31604736(gen: 587251 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 30998528(gen: 587246 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 30982144(gen: 587246 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 83244351488(gen: 587245 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 30425088(gen: 587244 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 30408704(gen: 587243 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 30261248(gen: 587243 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138005200896(gen: 587242 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 31391744(gen: 587232 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 110624768000(gen: 587227 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 31457280(gen: 587221 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 31014912(gen: 587200 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138368532480(gen: 587199 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138367811584(gen: 587196 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138367041536(gen: 587195 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138366976000(gen: 587195 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138366943232(gen: 587195 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138359455744(gen: 587194 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138357948416(gen: 587193 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138356752384(gen: 587191 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138353852416(gen: 587190 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138353360896(gen: 587189 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138352197632(gen: 587188 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138351607808(gen: 587187 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138350362624(gen: 587186 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138348380160(gen: 587185 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138347741184(gen: 587184 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138347659264(gen: 587184 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138344759296(gen: 587183 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
------ I skipped many results and am posting only part of them ------
Well block 138371039232(gen: 579540 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138370449408(gen: 579539 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138369794048(gen: 579538 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138369449984(gen: 579537 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138369024000(gen: 579535 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138367467520(gen: 579533 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138191585280(gen: 579327 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 138147512320(gen: 579257 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 221593600(gen: 576729 level: 1) seems good, but generation/level doesn't match, want gen: 587261 level: 1
Well block 218923008(gen: 576721 level: 0) seems good, but generation/level doesn't match, want gen: 587261 level: 1

sudo btrfs insp dump-s -f /dev/md2

root@IPUserverOLD:~# sudo btrfs insp dump-s -f /dev/md2
superblock: bytenr=65536, device=/dev/md2
---------------------------------------------------------
csum 0x0f515cd5 [match]
bytenr 65536
flags 0x1 ( WRITTEN )
magic _BHRfS_M [match]
fsid 9021108d-e771-4e90-8b1c-ec4f5da3de97
label 2019.06.21-04:04:23 v23824
generation 587261
root 165385601024
sys_array_size 129
chunk_root_generation 582947
root_level 1
chunk_root 20971520
chunk_root_level 1
log_root 0
log_root_transid 0
log_root_level 0
total_bytes 1094573883392
bytes_used 452051353600
sectorsize 4096
nodesize 16384
leafsize 16384
stripesize 4096
root_dir 6
num_devices 1
compat_flags 0x0
compat_ro_flags 0x0
incompat_flags 0x16b ( MIXED_BACKREF | DEFAULT_SUBVOL | COMPRESS_LZO | BIG_METADATA | EXTENDED_IREF | SKINNY_METADATA )
csum_type 0
csum_size 4
cache_generation 18446744073709551615
uuid_tree_generation 587257
dev_item.uuid 1d711bdd-14d2-4ad2-89cd-3c343a27ab81
dev_item.fsid 9021108d-e771-4e90-8b1c-ec4f5da3de97 [match]
dev_item.type 0
dev_item.total_bytes 1094573883392
dev_item.bytes_used 470315696128
dev_item.io_align 4096
dev_item.io_width 4096
dev_item.sector_size 4096
dev_item.devid 1
dev_item.dev_group 0
dev_item.seek_speed 0
dev_item.bandwidth 0
dev_item.generation 0
sys_chunk_array[2048]:
item 0 key (FIRST_CHUNK_TREE CHUNK_ITEM 20971520)
chunk length 8388608 owner 2 stripe_len 65536
type SYSTEM|DUP num_stripes 2
stripe 0 devid 1 offset 20971520
dev uuid: 1d711bdd-14d2-4ad2-89cd-3c343a27ab81
stripe 1 devid 1 offset 29360128
dev uuid: 1d711bdd-14d2-4ad2-89cd-3c343a27ab81
backup_roots[4]:
backup 0:
backup_tree_root: 165385797632 gen: 587256 level: 1
backup_chunk_root: 20971520 gen: 582947 level: 1
backup_extent_root: 165385814016 gen: 587256 level: 2
backup_fs_root: 29638656 gen: 8 level: 0
backup_dev_root: 165385879552 gen: 587256 level: 1
backup_csum_root: 138367549440 gen: 587199 level: 2
backup_total_bytes: 1094573883392
backup_bytes_used: 452051238912
backup_num_devices: 1
backup 1:
backup_tree_root: 165385601024 gen: 587257 level: 1
backup_chunk_root: 20971520 gen: 582947 level: 1
backup_extent_root: 165385617408 gen: 587257 level: 2
backup_fs_root: 29638656 gen: 8 level: 0
backup_dev_root: 165385699328 gen: 587257 level: 1
backup_csum_root: 138367549440 gen: 587199 level: 2
backup_total_bytes: 1094573883392
backup_bytes_used: 452051238912
backup_num_devices: 1
backup 2:
backup_tree_root: 165387862016 gen: 587254 level: 1
backup_chunk_root: 20971520 gen: 582947 level: 1
backup_extent_root: 165387878400 gen: 587254 level: 2
backup_fs_root: 29638656 gen: 8 level: 0
backup_dev_root: 165387730944 gen: 587253 level: 1
backup_csum_root: 138367549440 gen: 587199 level: 2
backup_total_bytes: 1094573883392
backup_bytes_used: 452051238912
backup_num_devices: 1
backup 3:
backup_tree_root: 165385601024 gen: 587255 level: 1
backup_chunk_root: 20971520 gen: 582947 level: 1
backup_extent_root: 165385617408 gen: 587255 level: 2
backup_fs_root: 29638656 gen: 8 level: 0
backup_dev_root: 165385699328 gen: 587255 level: 1
backup_csum_root: 138367549440 gen: 587199 level: 2
backup_total_bytes: 1094573883392
backup_bytes_used: 452051238912
backup_num_devices: 1

I also tried this command:

btrfs rescue chunk-recover /dev/md2

root@IPUserverOLD:~# btrfs rescue chunk-recover /dev/md2
Scanning: DONE in dev0
Check chunks successfully with no orphans
Chunk tree recovered successfully

And this is the dmesg result:

IPUserverOLD:~# dmesg | tail -f
[103406.238414] BTRFS: error (device md2) in __btrfs_free_extent:6865: errno=-2 No such entry
[103406.238653] BTRFS: error (device md2) in btrfs_run_delayed_refs:3086: errno=-2 No such entry
[103406.238887] BTRFS warning (device md2): Skipping commit of aborted transaction.
[103406.238889] BTRFS: error (device md2) in cleanup_transaction:1884: errno=-2 No such entry
[103406.239166] BTRFS error (device md2): commit super ret -30
[103406.239379] BTRFS error (device md2): cleaner transaction attach returned -30
[103455.164837] CPU count larger than MAX_CPU 2: 2
[103455.164842] CPU count larger than MAX_CPU 2: 2
[103515.353971] CPU count larger than MAX_CPU 2: 2
[103515.353975] CPU count larger than MAX_CPU 2: 2

-

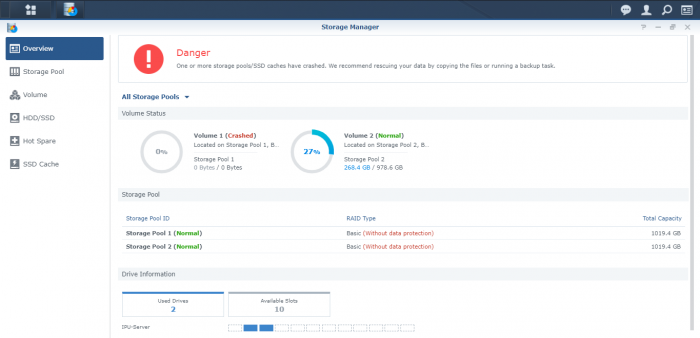

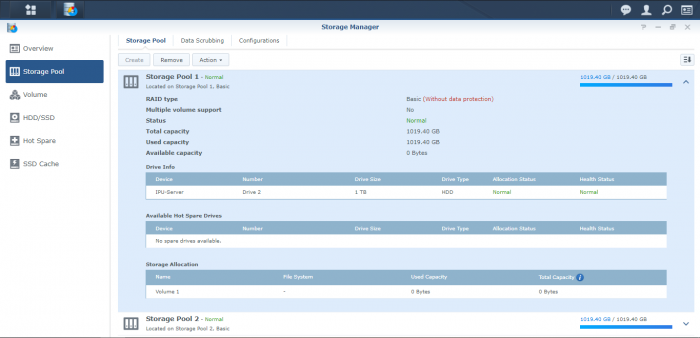

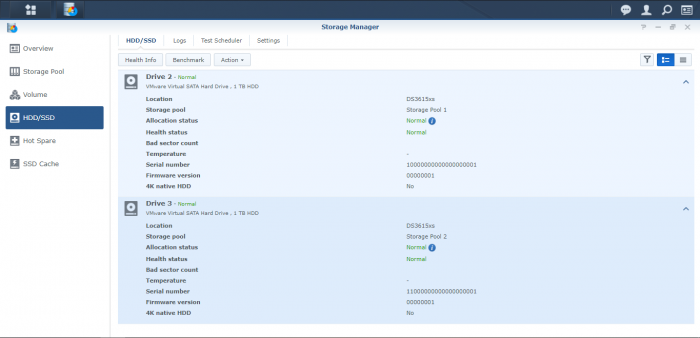

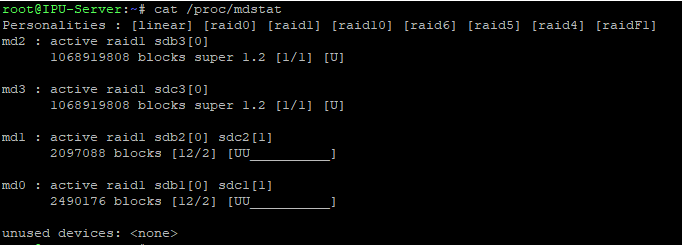

My volumes contain only 1 disk each.

IPUserverOLD:~# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md2 : active raid1 sdb3[0]
1068919808 blocks super 1.2 [1/1] [U]
md3 : active raid1 sdc3[0]
1068919808 blocks super 1.2 [1/1] [U]
md1 : active raid1 sdb2[0] sdc2[1]
2097088 blocks [12/2] [UU__________]
md0 : active raid1 sdb1[0] sdc1[1]
2490176 blocks [12/2] [UU__________]
unused devices: <none>
IPUserverOLD:~# mdadm --detail /dev/md2
/dev/md2:
Version : 1.2
Creation Time : Fri Jun 21 11:03:51 2019
Raid Level : raid1
Array Size : 1068919808 (1019.40 GiB 1094.57 GB)
Used Dev Size : 1068919808 (1019.40 GiB 1094.57 GB)
Raid Devices : 1
Total Devices : 1
Persistence : Superblock is persistent
Update Time : Thu Apr 16 15:55:40 2020
State : clean
Active Devices : 1
Working Devices : 1
Failed Devices : 0
Spare Devices : 0
Name : IPU-Server:2
UUID : 39208021:ee300a80:79740a60:f57758f5
Events : 77
Number Major Minor RaidDevice State
0 8 19 0 active sync /dev/sdb3
IPUserverOLD:~# mdadm --examine /dev/sd[abcd]3 | egrep 'Event|/dev/sd'
/dev/sdb3:
Events : 77
/dev/sdc3:
Events : 72
IPUserverOLD:~# mdadm -Asf && vgchange -ay
mdadm: No arrays found in config file or automatically
IPUserverOLD:~# mdadm --detail /dev/md2
/dev/md2:
Version : 1.2
Creation Time : Fri Jun 21 11:03:51 2019
Raid Level : raid1
Array Size : 1068919808 (1019.40 GiB 1094.57 GB)
Used Dev Size : 1068919808 (1019.40 GiB 1094.57 GB)
Raid Devices : 1
Total Devices : 1
Persistence : Superblock is persistent
Update Time : Thu Apr 16 15:55:40 2020
State : clean
Active Devices : 1
Working Devices : 1
Failed Devices : 0
Spare Devices : 0
Name : IPU-Server:2
UUID : 39208021:ee300a80:79740a60:f57758f5
Events : 77
Number Major Minor RaidDevice State
0 8 19 0 active sync /dev/sdb3
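
To see at a glance which partitions back each md array (and so confirm that each volume sits on a single disk), the /proc/mdstat listing can be condensed. This is only a minimal sketch: `md_members` is a hypothetical helper name, and it assumes the "mdN : active <level> <members...>" layout shown in the output above (an inactive array has no level field and would not be parsed correctly).

```shell
# Hypothetical helper: print one line per md array with its member partitions.
# Assumes the layout "mdN : active <level> <member>[n] ..." as seen in this thread.
md_members() {
  awk '$1 ~ /^md[0-9]+$/ && $2 == ":" {
    line = $1
    for (i = 5; i <= NF; i++) {
      dev = $i
      sub(/\[[0-9]+\].*$/, "", dev)   # strip the "[0]" slot index and any trailing flags
      line = line " " dev
    }
    print line
  }'
}

# Usage (reads mdstat text on stdin):
#   md_members < /proc/mdstat
```

With the mdstat content from this post, md2 maps to sdb3 only and md3 to sdc3 only, while md0 and md1 (the DSM system and swap partitions) span both disks.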

-

5 hours ago, supermounter said:

Well, I've been suffering a lot here.

After days of rsync to back up my data to another NAS,

and rsync again to copy it back onto the recreated volume 2,

I have now discovered other issues (it seems that rsyncing everything, including the @EADIR and @DS_STORE folders, is not a good procedure).

All my data is back on my volume 2, but DSM hangs a lot when I use File Station,

and some folders now have root as owner.

Once, when the system hung completely, I requested a reboot over SSH and DSM didn't come back.

I had to shut down completely, remove my 4 disks (the volume) from the NAS, and restart the NAS with only volume 1; then DSM came back.

I shut down again, put my 4 disks back and restarted; DSM came back with both volumes and all my data.

But it is now running a consistency check on my volume 2, and my disk 3 reports a file system error, with Storage Manager offering to fix it.

I'm now waiting for the consistency check to finish before doing anything else.

But I'm afraid I will need to delete my volume again and redo the rsync, WITHOUT the @EADIR and @DS_STORE folders this time.

What a painful a... situation, and what a waste of time for me.

In your case, what is the result of the command # vgchange -ay

I think you may not be working on the correct device here, as you have a volume with SHR where md2 is the disk device.

You need to work on the logical volume created by the RAID array; this is why I asked you to run # vgdisplay -v to see it.

Have you tried yet to mount the volume in read-only mode without its file system cache? # mount -o clear_cache /dev/vg1000/lv /volume1

Here is the result:

IPUserverOLD:~# vgchange -ay
IPUserverOLD:~# vgdisplay -v
Using volume group(s) on command line.
No volume groups found.
IPUserverOLD:~# mount -o clear_cache /dev/md2 /volume1
mount: mount /dev/md2 on /volume1 failed: Stale file handle
IPUserverOLD:~# mount -o clear_cache /dev/vg1000/lv /volume1
mount: special device /dev/vg1000/lv does not exist

I installed Xpenology using VMware ESXi 6.7, and my disks are virtual (vmdk). I use two virtual disks of 1 TB each:

1 for volume1, which is the one with the crashed volume

1 for volume2, which is working

-

IPUserverOLD:~# pvdisplay
IPUserverOLD:~# btrfs check /dev/md2
Syno caseless feature off.
Checking filesystem on /dev/md2
UUID: 9021108d-e771-4e90-8b1c-ec4f5da3de97
checking extents
parent transid verify failed on 138367680512 wanted 587198 found 587196
parent transid verify failed on 138367680512 wanted 587198 found 587196
parent transid verify failed on 138367680512 wanted 587198 found 587196
parent transid verify failed on 138367680512 wanted 587198 found 587196
Ignoring transid failure
leaf parent key incorrect 138367680512
bad block 138367680512
Errors found in extent allocation tree or chunk allocation
Error: could not find extent items for root 257
IPUserverOLD:~# btrfs rescue super-recover /dev/md2
All supers are valid, no need to recover
IPUserverOLD:~# btrfs restore -D -v /dev/md2 /volume1/MOUNTNFS/volume2
This is a dry-run, no files are going to be restored
Restoring /volume1/MOUNTNFS/volume2/@syno
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
Ignoring transid failure
Done searching /@syno
Reached the end of the tree searching the directory

Only the @syno folder is recovered.
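
Because every tool repeats each "parent transid verify failed" line several times, it can help to collapse them into one row per block. The following is a minimal sketch, not part of the original troubleshooting: `transid_summary` is a hypothetical helper name, and it assumes one message per line (as on a real terminal, not as flattened in this page) and a sed that supports -E.

```shell
# Hypothetical helper: collapse repeated "parent transid verify failed" messages
# into one row per block, showing the generation gap (found - wanted).
# Assumes one message per input line and a sed with -E support.
transid_summary() {
  sed -nE 's/.*parent transid verify failed on ([0-9]+) wanted ([0-9]+) found ([0-9]+).*/\1 \2 \3/p' |
    sort | uniq -c |
    awk '{ printf "block=%s wanted=%s found=%s delta=%d repeats=%d\n", $2, $3, $4, $4 - $3, $1 }'
}

# Usage:
#   btrfs check /dev/md2 2>&1 | transid_summary
#   dmesg | transid_summary
```

A negative delta means the block on disk carries an older generation than its parent expects; a positive delta means it carries a newer one.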

-

On 4/11/2020 at 1:12 AM, supermounter said:

Hello.

I'm facing the same problem as you, and have been looking for a solution for days now.

Apparently you have ext4 as your file system, so it will be easier to recover and mount your volume.

Mine is btrfs and is much more complicated, as Synology uses its own btrfs repository, and this causes some behavior that keeps the btrfs commands from working as expected.

Can you run these commands and send back the result?

pvdisplay

vgdisplay

No, it's a btrfs filesystem, not ext4.

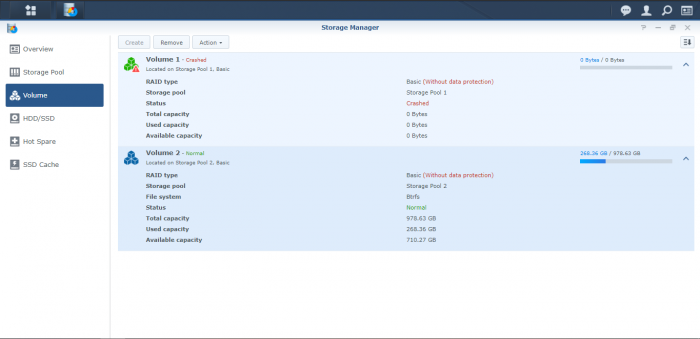

IPUserverOLD:~# btrfs restore /dev/md2 /volume1
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
Ignoring transid failure
IPUserverOLD:~# mdadm --assemble --scan -v
mdadm: looking for devices for further assembly
mdadm: no recogniseable superblock on /dev/md2
mdadm: no recogniseable superblock on /dev/md3
mdadm: no recogniseable superblock on /dev/zram3
mdadm: no recogniseable superblock on /dev/zram2
mdadm: no recogniseable superblock on /dev/zram1
mdadm: no recogniseable superblock on /dev/zram0
mdadm: no recogniseable superblock on /dev/md1
mdadm: no recogniseable superblock on /dev/md0
mdadm: /dev/sdc3 is busy - skipping
mdadm: /dev/sdc2 is busy - skipping
mdadm: /dev/sdc1 is busy - skipping
mdadm: Cannot assemble mbr metadata on /dev/sdc
mdadm: /dev/sdb3 is busy - skipping
mdadm: /dev/sdb2 is busy - skipping
mdadm: /dev/sdb1 is busy - skipping
mdadm: Cannot assemble mbr metadata on /dev/sdb
mdadm: no recogniseable superblock on /dev/sdm3
mdadm: Cannot assemble mbr metadata on /dev/sdm2
mdadm: Cannot assemble mbr metadata on /dev/sdm1
mdadm: Cannot assemble mbr metadata on /dev/sdm
mdadm: No arrays found in config file or automatically
IPUserverOLD:~# cat /etc/fstab
none /proc proc defaults 0 0
/dev/root / ext4 defaults 1 1
/dev/md2 /volume1 btrfs auto_reclaim_space,synoacl,relatime 0 0
/dev/md3 /volume2 btrfs auto_reclaim_space,synoacl,relatime 0 0
IPUserverOLD:~# sudo mount /dev/md2 /volume1
mount: mount /dev/md2 on /volume1 failed: Stale file handle
IPUserverOLD:~# sudo mount -o clear_cache /dev/md2 /volume1
mount: mount /dev/md2 on /volume1 failed: Stale file handle
IPUserverOLD:~# sudo mount -o recovery /dev/md2 /volume1
mount: mount /dev/md2 on /volume1 failed: Stale file handle

Volume 1 is crashed (md2).

Volume 2 is healthy (md3).

Have you managed to restore the files?

-

-

On 11/13/2017 at 11:11 AM, shayun said:

Dear all

I have a Lenovo X3500 M5 server.

Xpenology DSM 5.2 with xpenboot works, but when I try to use Jun's loader I always get error 38 when installing the DSM package.

Can you help me, please? What is the problem? Thanks in advance.

Hi, did you manage to install Xpenology on the Lenovo X3500 M5?

I tried to install DSM 6.2 but got the error "Hard disk not found".

-


[HELP] Xpenology Volume 1 Crashed - System using ESXi 6.7

in General Questions

Posted

Restore doesn't work either.

IPUserverOLD:~# btrfs restore /dev/md2 /volume2/volume1
parent transid verify failed on 165385699328 wanted 587257 found 587265
parent transid verify failed on 165385699328 wanted 587257 found 587265
parent transid verify failed on 165385699328 wanted 587257 found 587265
parent transid verify failed on 165385699328 wanted 587257 found 587265
Ignoring transid failure
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
parent transid verify failed on 138367598592 wanted 587198 found 587196
Ignoring transid failure

IPUserverOLD:~# btrfs-find-root /dev/md2
parent transid verify failed on 165385699328 wanted 587257 found 587265
parent transid verify failed on 165385699328 wanted 587257 found 587265
parent transid verify failed on 165385699328 wanted 587257 found 587265
parent transid verify failed on 165385699328 wanted 587257 found 587265
Ignoring transid failure
Superblock thinks the generation is 587267
Superblock thinks the level is 1
Found tree root at 165385601024 gen 587267 level 1
Well block 30048256(gen: 587252 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 31604736(gen: 587251 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 30998528(gen: 587246 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 30982144(gen: 587246 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 83244351488(gen: 587245 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 30425088(gen: 587244 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 30408704(gen: 587243 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 30261248(gen: 587243 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138005200896(gen: 587242 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 31391744(gen: 587232 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 110624768000(gen: 587227 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 31457280(gen: 587221 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 31014912(gen: 587200 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138368532480(gen: 587199 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138367811584(gen: 587196 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138367041536(gen: 587195 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138366976000(gen: 587195 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138366943232(gen: 587195 level: 0) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138359455744(gen: 587194 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138357948416(gen: 587193 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138356752384(gen: 587191 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138353852416(gen: 587190 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1
Well block 138353360896(gen: 587189 level: 1) seems good, but generation/level doesn't match, want gen: 587267 level: 1

IPUserverOLD:~# btrfs restore -t 221593600 /dev/md2 /volume2/volume1
parent transid verify failed on 221593600 wanted 587267 found 576729
parent transid verify failed on 221593600 wanted 587267 found 576729
parent transid verify failed on 221593600 wanted 587267 found 576729
parent transid verify failed on 221593600 wanted 587267 found 576729
Ignoring transid failure
parent transid verify failed on 221102080 wanted 576729 found 584508
parent transid verify failed on 221102080 wanted 576729 found 584508
parent transid verify failed on 221102080 wanted 576729 found 584508
parent transid verify failed on 221102080 wanted 576729 found 584508
Ignoring transid failure
parent transid verify failed on 212533248 wanted 576712 found 584505
parent transid verify failed on 212533248 wanted 576712 found 584505
parent transid verify failed on 212533248 wanted 576712 found 584505
parent transid verify failed on 212533248 wanted 576712 found 584505
Ignoring transid failure
parent transid verify failed on 221626368 wanted 576729 found 576731
parent transid verify failed on 221626368 wanted 576729 found 576731
parent transid verify failed on 221626368 wanted 576729 found 576731
parent transid verify failed on 221626368 wanted 576729 found 576731
Ignoring transid failure
parent transid verify failed on 212418560 wanted 576712 found 584505
parent transid verify failed on 212418560 wanted 576712 found 584505
parent transid verify failed on 212418560 wanted 576712 found 584505
parent transid verify failed on 212418560 wanted 576712 found 584505
Ignoring transid failure
IPUserverOLD:~# cd /volume2/volume1
IPUserverOLD:/volume2/volume1# ls
@syno
IPUserverOLD:/volume2/volume1# btrfs restore -t 138383376384 /dev/md2 /volume2/volume1
parent transid verify failed on 138383376384 wanted 587267 found 579558
parent transid verify failed on 138383376384 wanted 587267 found 579558
parent transid verify failed on 138383376384 wanted 587267 found 579558
parent transid verify failed on 138383376384 wanted 587267 found 579558
Ignoring transid failure
parent transid verify failed on 138293297152 wanted 579428 found 587147
parent transid verify failed on 138293297152 wanted 579428 found 587147
parent transid verify failed on 138293297152 wanted 579428 found 587147
parent transid verify failed on 138293297152 wanted 579428 found 587147
Ignoring transid failure
parent transid verify failed on 138293690368 wanted 579428 found 587147
parent transid verify failed on 138293690368 wanted 579428 found 587147
parent transid verify failed on 138293690368 wanted 579428 found 587147
parent transid verify failed on 138293690368 wanted 579428 found 587147
Ignoring transid failure
leaf parent key incorrect 138293690368
parent transid verify failed on 138279387136 wanted 579406 found 587146
parent transid verify failed on 138279387136 wanted 579406 found 587146
parent transid verify failed on 138279387136 wanted 579406 found 587146
parent transid verify failed on 138279387136 wanted 579406 found 587146
Ignoring transid failure
IPUserverOLD:/volume2/volume1# ls
@syno
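
Picking block numbers for btrfs restore -t by hand from the long btrfs-find-root listing is error-prone. As a minimal sketch (not something run in this thread), the "Well block" lines can be turned into a list ordered from the newest generation to the oldest, so the most recent candidate roots are tried first. `candidate_roots` is a hypothetical helper name; it assumes the "Well block N(gen: G level: L)" wording shown above and a grep that supports -o and -E.

```shell
# Hypothetical helper: list candidate tree roots from btrfs-find-root output
# as "generation bytenr level", newest generation first.
# Assumes the "Well block N(gen: G level: L)" wording seen in this thread.
candidate_roots() {
  grep -oE 'Well block [0-9]+\(gen: [0-9]+ level: [0-9]+\)' |
    sed -E 's/Well block ([0-9]+)\(gen: ([0-9]+) level: ([0-9]+)\)/\2 \1 \3/' |
    sort -k1,1nr -k2,2n
}

# Usage:
#   btrfs-find-root /dev/md2 2>&1 | candidate_roots | head
```

Each bytenr in the second column can then be checked with a dry run first, using the same flags that already appear in this thread: btrfs restore -D -v -t <bytenr> /dev/md2 /volume2/volume1. Whether any of those roots exposes more than @syno still depends on how much of the tree under it survived.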