Everything posted by mrwiggles

-

@flyride That is a fantastic explanation. Thank you. I hope this helps others in similar circumstances. I searched far and wide for a simple tutorial on 'all things disk mapping', given the various options and their impact. But as you said, the hardware environment plays a role here, and that will of course vary by user. I never would have realized that I could delete the 16GB vdisk/controller without your help. So, again, thank you.

-

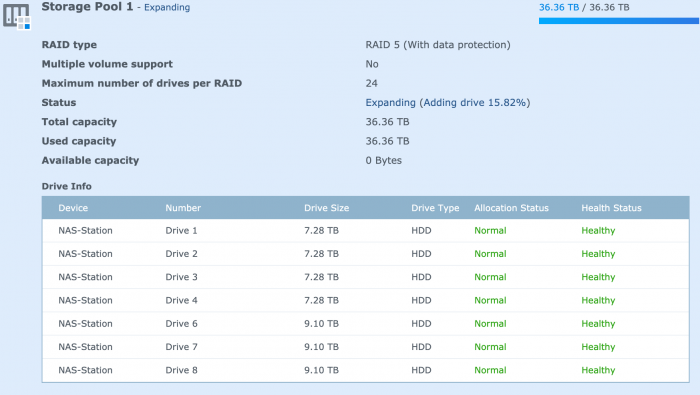

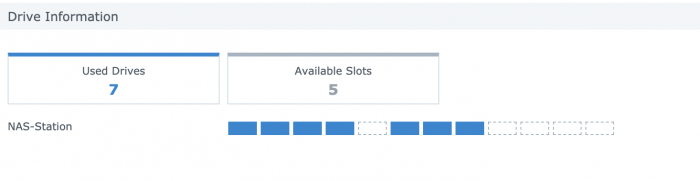

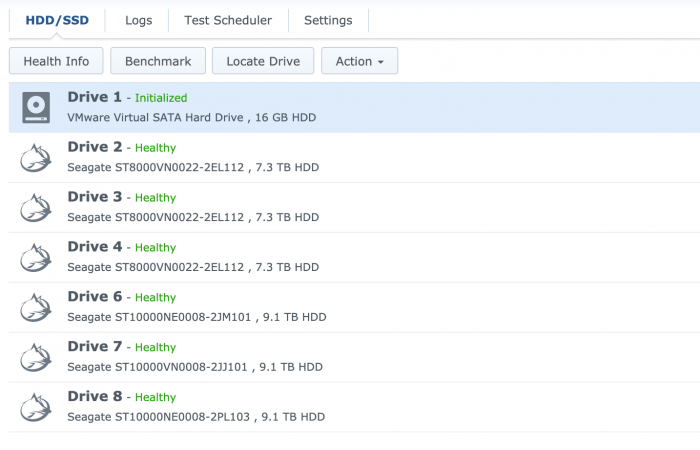

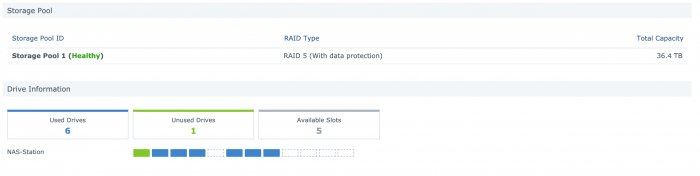

@flyride So I deleted the 16GB vdisk and virtual controller and powered the VM back up. This time all 7 disks were recognized, with the previously missing one available as 'unused', and I added it back into the array. At this point everything looks normal and the array is expanding onto the newly added disk. Thank you for all your help with this. What I would love to know is why this happened to begin with, and why (see attached image) slot 5 shows as blank in the Storage Manager Overview. The storage pool view also shows Drive 5 as missing. If you have any insights to share, that would be great.

-

Good to know. Thank you for the clarification. I will delete it immediately and post the outcome here.

-

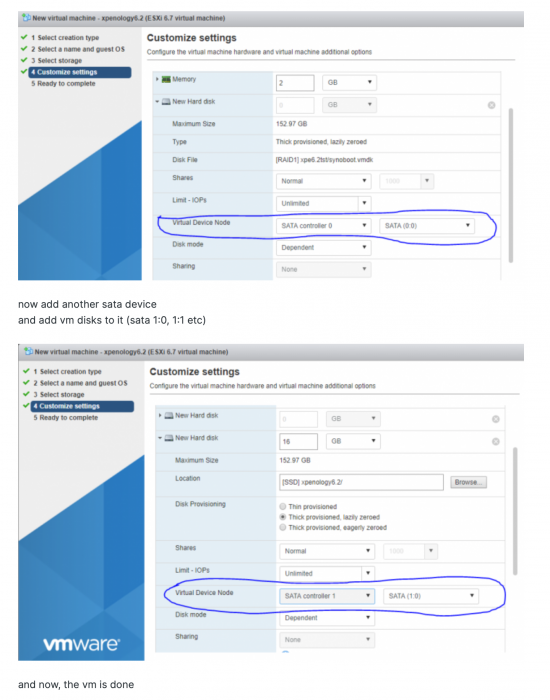

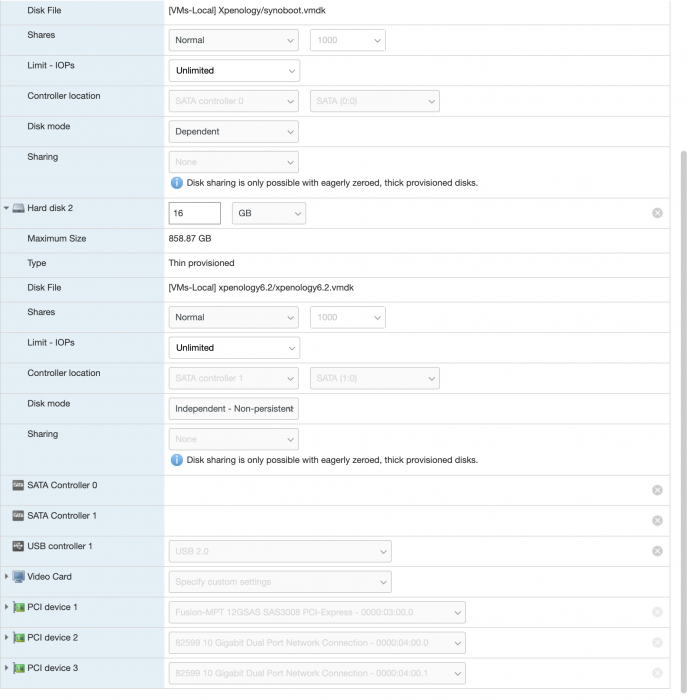

So, just to confirm, I can delete the entire 2nd SATA controller AND the 16GB virtual disk that has xpenology6.2? That would leave me with the single virtual controller and the 50MB synoboot disk. What is the point of creating the 16GB disk and 2nd controller in the first place, as per the tutorial? I had thought the 16GB disk was where DSM lives (along with any DSM apps I install), so I'm a little unclear on that point. I'm pretty sure DSM is not on the array itself. Just trying to understand. I will back up the VM, delete that controller as you suggest, and see what happens. Will report back. Thank you @flyride

-

Note that I bought brand new cables to go with the new LSI controller, since the connectors on the card itself were different. That doesn't rule out a cable issue, but tomorrow I'll move the cables/connectors on the disk side to see whether a currently recognized disk disappears. If not, I'm not sure what is going on, given that the BIOS confirms all 7 disks are available. Ugh. BTW: is there a way to hide the 16GB vdisk from showing up in DSM?
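On the question of hiding the 16GB vdisk: as commonly described in loader threads, the DiskIdxMap setting in grub.cfg gives each controller a starting slot, and a disk whose slot index lands at or beyond the model's MaxDisks value is not shown in DSM. The sketch below models only that arithmetic. The controller names, disk counts, the hypothetical starting indexes (0x0C, 0x0D, 0x00, roughly what a DiskIdxMap of 0C0D00 would describe), and MaxDisks = 12 for DS3615xs are assumptions for illustration, not values read from this VM, and it does not check how the loader actually enumerates an LSI HBA.

```python
from typing import NamedTuple


class Controller(NamedTuple):
    name: str
    start: int  # 0-based starting slot for this controller (one DiskIdxMap byte)
    disks: int  # number of disks attached to this controller


def slot_layout(controllers, max_disks=12):
    """Map each controller name to a list of (1-based slot, visible_in_dsm) pairs.

    A slot counts as visible only if its 0-based index is below max_disks;
    max_disks=12 is an assumed DS3615xs value.
    """
    layout = {}
    for ctrl in controllers:
        layout[ctrl.name] = [
            (ctrl.start + i + 1, ctrl.start + i < max_disks)
            for i in range(ctrl.disks)
        ]
    return layout


# Hypothetical layout: both virtual controllers pushed past slot 12,
# passthrough LSI disks starting at slot 1.
example = [
    Controller("SATA0 synoboot", 0x0C, 1),
    Controller("SATA1 16GB vdisk", 0x0D, 1),
    Controller("LSI 3008 passthrough", 0x00, 7),
]

for name, slots in slot_layout(example).items():
    print(name, slots)
```

Removing the 16GB disk and its controller altogether sidesteps the question, since there is then nothing left to hide.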

-

Repeated reboots show the LSI BIOS seeing all 7 disks. Every time. I've isolated the missing disk to the first disk in my case, i.e. physical slot 1. But if I remove that disk, even though DSM doesn't appear to 'see' it, the array shows an error and goes into a degraded state again. Weird. I will swap the cable with one of the other disks just to see if that makes a difference. I guess that will force the missing disk onto another controller port. Hoping that doesn't mess with array integrity.

-

I thought I had followed these settings from the original tutorial... I see that, per the original tutorial, that disk should be 'Dependent' and 'Thick provisioned'. Not sure whether that matters.

-

Actually, I don't know why. I thought it came with the original VM setup tutorial and I never questioned it. But no, I'm not using it in any storage pool. Is this a misconfiguration on my part? The 16GB virtual disk shows up in my list of HDDs and takes up a slot in the storage pool, so I was wondering how to get rid of it. Is that related to my problem with the missing 8TB disk?

-

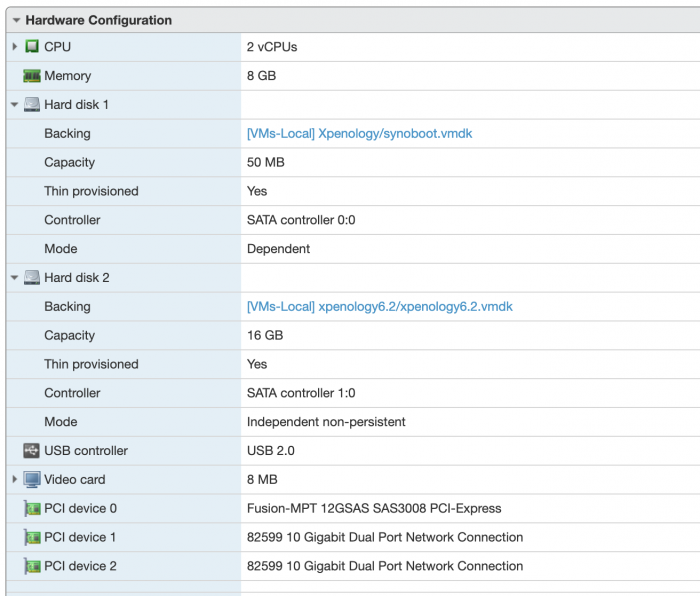

@flyride I really appreciate your response and the help with this. I usually persevere with these things after much searching, but this one has me stumped. In answer to your question, here is what my VM looks like. I think I have it configured correctly according to the original ESXi instructions. So I guess I have three controllers, then? The two virtual controllers and the SAS3008 LSI that I am passing through to DSM? Happy to share more config info from grub.cfg or synoinfo.conf if helpful. Thanks again for the guidance. I almost destroyed this array an hour ago by attempting to move the disks between physical slots. BTW: all the disks show up in the BIOS during the boot sequence, so I know the LSI sees them.

-

Running Jun loader 1.03b DS3615xs | ESXi 7.01 | LSI 3008 passthrough controller

Seeking help: I am struggling with an issue where one of the disks on my passthrough controller is missing from Storage Manager. I have 7 passthrough disks but only 6 show up.

The back story is that I had ESXi 6.7 running great with these disks on a motherboard LSI storage controller. For other reasons I needed to upgrade to ESXi 7, and my controller was no longer supported. So I installed a new LSI3008 controller and moved my disks to it. Booting my Xpenology VM after that meant pretty much starting over and creating a new storage volume. That all worked fine with the 6 original disks until one of the 8TB disks just dropped out the next day and my array was DEGRADED. I added a new 10TB disk to save the array and that worked, but the original 8TB disk is still missing.

I suspect this has something to do with synoinfo.conf or grub.cfg settings, but after reading a ton of forum posts I am lost and this is beyond my ability to fix. The missing disk just refuses to show up in Storage Manager. Things I have tried:

- I ran fixsynoboot.sh and confirmed it is installed properly.
- I edited grub.cfg to change the DiskIdxMap setting from 0C to 0C00, as per @flyride in another forum post. This remapped my disks and moved my 16GB virtual boot disk to slot 1, but my 8TB drive is still missing.

Not sure where to go from here. I added another 10TB drive last night, so I have a total of 7 physical disks installed but only 6 showing in DSM. See images below. I would really appreciate some advice and help on resolving this.
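For readers unfamiliar with the setting, DiskIdxMap in grub.cfg is commonly described as a string of two-digit hex values, one per controller in detection order, each giving that controller's 0-based starting slot. Under that assumption, the minimal sketch below decodes the two values from the post: 0C starts the first controller at slot 13 (past a 12-bay layout), and 0C00 additionally starts the second controller at slot 1, which matches the 16GB virtual disk moving to slot 1. The function name is hypothetical.

```python
from typing import List


def decode_disk_idx_map(value: str) -> List[int]:
    """Split a DiskIdxMap string into per-controller 0-based starting slots."""
    if len(value) % 2 != 0:
        raise ValueError("DiskIdxMap needs two hex digits per controller")
    return [int(value[i:i + 2], 16) for i in range(0, len(value), 2)]


for setting in ("0C", "0C00"):
    starts = decode_disk_idx_map(setting)
    # Convert 0-based indexes to the 1-based slot numbers DSM displays.
    labels = [f"controller {n}: slot {s + 1}" for n, s in enumerate(starts)]
    print(setting, "->", labels)
```

Controllers beyond the end of the string are not covered by the setting, which is one reason the third (passthrough) controller's placement is not explained by this value alone.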

-

I have the same question. I have never had to update Xpenology for compatibility reasons. Running on ESXi 6.7U3 and the same model: DS3615xs. I'd appreciate any input.

-

For my use case this approach works fine. I’m not trying to back up a lot of large VMs on a regular basis. As I said in my original post, I have just the Xpenology VM and want to copy it to a remote volume in case of hardware failure. I understand that a simple copy wouldn’t be a good option for many use cases or with many VMs. But in my case I just enabled NFS on my Synology NAS and mounted that volume from within ESXi so that I could copy the Xpenology VM to the other Synology. Combined with also taking a snapshot, this gives me what I need for failure protection.
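The copy approach described above can be sketched in Python, assuming the VM is powered off and the NFS share is already mounted as an ESXi datastore (datastores appear under /vmfs/volumes/). The function name, the example paths, and the set of skipped runtime files (.lck lock files, .vswp swap files, .log files) are assumptions for illustration, not a verified ESXi backup procedure. Note that this copies only what lives in the VM's folder (the .vmx, synoboot and any virtual disks); data on the passthrough disks is not part of it.

```python
import shutil
from pathlib import Path
from typing import List

# Assumed runtime files that ESXi recreates; skipped rather than copied.
SKIP_SUFFIXES = {".lck", ".vswp", ".log"}


def copy_vm_folder(src: Path, dst_root: Path) -> List[str]:
    """Copy a powered-off VM folder to dst_root/<folder name>.

    Returns the relative paths of the files that were copied.
    """
    dst = dst_root / src.name
    if dst.exists():
        # Don't overwrite an earlier backup by accident.
        raise FileExistsError(f"{dst} already exists")
    copied = []
    for item in sorted(src.rglob("*")):
        rel = item.relative_to(src)
        # Skip lock/swap/log entries, including anything inside a *.lck directory.
        if any(Path(part).suffix.lower() in SKIP_SUFFIXES for part in rel.parts):
            continue
        target = dst / rel
        if item.is_dir():
            target.mkdir(parents=True, exist_ok=True)
        else:
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(item, target)  # copy2 also preserves timestamps
            copied.append(rel.as_posix())
    return copied
```

For example, `copy_vm_folder(Path("/vmfs/volumes/datastore1/Xpenology"), Path("/vmfs/volumes/synology-nfs"))` would create a `synology-nfs/Xpenology` copy (both datastore names are hypothetical). Refusing to overwrite an existing copy is a deliberate choice so a known-good backup isn't replaced by a later one.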

-

For anyone else with the same 'VM backup' questions, I found this video, which summarizes a simple VM copy to another host.

-

Kind of answered my own question: it looks like I can just mount an NFS share on my Synology as an ESXi datastore. Would it then be as simple as copying the VM to that remote volume? I've copied VMware Workstation VMs around in the past and could easily boot them after telling VMware that it was a copy. I just wonder if ESXi VM copies work the same way.

-

I have the same setup: a small SSD that is my ESXi datastore, with all the main spinning disks passed through via an LSI controller. So would it be as simple as copying my VM files to a different system, as you suggest? Pardon my ignorance, but I'm not an ESXi expert: how do I expose the SSD datastore as a shared file system? Or is it the inverse, where I mount an external file system in ESXi and then copy the datastore files to the remote system from ESXi? I'd appreciate the specifics there. Thanks.

-

I have Xpenology running great on ESXi (thank you to the devs and tutorials), but I’d like to back up my VM to avoid downtime in the event I need to reinstall/recover from hardware failure. MOST of the 3rd-party VM backup options require APIs that the free version of ESXi doesn’t support, and they seem like overkill for backing up a single VM. Any suggestions for how to do this? I think I can create snapshots locally on the ESXi host (though I have to shut down the VM), but that doesn’t save me in the event of hardware failure on that server. So I’d prefer to back up the VM to another NAS, or even an external drive if required. This seems like an obvious need for any ESXi user running Xpenology, but I haven’t found anything in the forums on best practices for this. Thank you.

-

Thanks @flyride. So I’m back to my original question: I want to back up my Xpenology VM to avoid a complete reinstall in the event of hardware failure. Is there a simple way to do this given that I am using free ESXi 6.7? How does everyone else do this?

-

@foxbat I think the issue is that, since I am using the free version of ESXi, the backup API isn't available. I'm pretty sure Veeam requires that API to work. Could be wrong. Is this correct?

-

I did a bunch of searching but didn't come up with an answer, so I'm posting here, as the question is specific to an Xpenology VM on ESXi... How do people back up their VM? Given the risks of DSM updates, I want to create a backup/snapshot of my Xpenology VM before applying any updates, but I can't seem to find a simple way to do this. There are LOTS of heavy VM backup solutions for ESXi, but those are overkill or require a full API license for ESXi. So what are the simple best practices here? I see a 'Snapshot' feature in ESXi Host, but it doesn't appear to work on a running Xpen VM. I haven't tried that feature after shutting down the VM, but I'm hoping to find a solution that doesn't require taking my whole NAS offline just to back up the VM. /m