Maxhawk

Member-

Posts

23 -

Everything posted by Maxhawk

-

The numbers match the "Utilization" figure in Resource Monitor. I did look in Task Manager at the top memory users in the Services and Processes tabs and I didn't see any one thing that was using up gigabytes of memory. The highest users were VMware Tools and snmpd, and they still are after restart. For future debugging, I've saved screenshots of the Services and Processes tabs for comparison later when the memory usage creeps up. FWIW, here's the metric I'm using (it precisely matches the utilization per Resource Monitor): SELECT ((last("memTotalReal") - last("memAvailReal") - last("memBuffer") - last("memCached")) / last("memTotalReal"))
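For anyone who wants to sanity-check the query outside Grafana, here is a minimal Python sketch of the same arithmetic. The field names follow the SNMP memory objects used in the query; the sample values are hypothetical and only illustrate the calculation, they are not measurements from this system.

```python
def mem_utilization(total_kb, avail_kb, buffer_kb, cached_kb):
    """Fraction of RAM in use after excluding free memory, buffers, and cache.

    Mirrors the query in the post:
    (memTotalReal - memAvailReal - memBuffer - memCached) / memTotalReal
    """
    if total_kb <= 0:
        raise ValueError("total_kb must be positive")
    used_kb = total_kb - avail_kb - buffer_kb - cached_kb
    return used_kb / total_kb


# Hypothetical values in kB for a VM with 16 GB of RAM (illustration only).
sample = mem_utilization(
    total_kb=16_777_216,
    avail_kb=8_388_608,
    buffer_kb=524_288,
    cached_kb=4_194_304,
)
print(f"utilization: {sample:.1%}")
```

Because buffers and cache are subtracted, a rising value here points at memory that is neither free nor reclaimable page cache.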

-

I have DSM 6.1.7 15284 installed with Jun 1.02b in an ESXi 6.5 VM. It's been running for over 1.5 years with little issue.

One time in the past, I was not able to access the shared folders in Windows. I found the DSM GUI to be unresponsive and noticed that my memory utilization was >90%. (I use Grafana/Telegraf to gather stats on my network). After restarting DSM everything worked normally and memory utilization was back to "normal" (<10%). At the time I had allocated 8GB of RAM to the VM so I decided to double the allocation to 16GB.

Fast forward to this week and I noticed that the memory utilization was increasing over time. I only capture a 30 day window of data, but you can see in the following graph that it went from around 15% to 45% in 30 days. I decided to restart DSM and the utilization dropped to 5%.

https://i.imgur.com/SJMIagJh.png

Has anyone else experienced memory usage creep like this? I have the following packages running in DSM: DDSM in docker, Active Backup for Business, Drive, Hyper Backup, Hyper Backup Vault, Log Center, Moments, Replication Service, Snapshot Replication, Universal Search, VMware Tools, and VPN Server.

In the future as the memory usage continues to go up, is there a command I can run (CLI) that will tell me what's using the memory? I've tried htop and top but it wasn't obvious what was using the memory as the top users were only in the 100s of MB range. At the end of the day I can always manually reboot as memory usage rises, but it sure would be nice to find the root cause to prevent it from occurring in the first place.
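One possible way to look beyond per-process numbers is to rank the kernel-level fields in /proc/meminfo, since memory held in slab caches, shared memory, or page tables does not show up against any process in top or htop. The sketch below is only an illustration of that idea: the SAMPLE text is hypothetical, the choice of fields to rank is an assumption, and nothing here claims to identify the cause on this system.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of {field name: value in kB}."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def largest_consumers(fields, keys=("AnonPages", "Slab", "SUnreclaim",
                                    "Shmem", "PageTables")):
    """Return the selected fields sorted by size, largest first."""
    present = [(key, fields[key]) for key in keys if key in fields]
    return sorted(present, key=lambda item: item[1], reverse=True)


# Hypothetical snapshot (illustration only, not data from the post).
SAMPLE = """\
MemTotal:       16777216 kB
MemFree:         2097152 kB
Buffers:          262144 kB
Cached:          3145728 kB
AnonPages:       1048576 kB
Shmem:            524288 kB
Slab:            6291456 kB
SReclaimable:    1048576 kB
SUnreclaim:      5242880 kB
PageTables:        32768 kB
"""

for name, kb in largest_consumers(parse_meminfo(SAMPLE)):
    print(f"{name:12s} {kb / 1024:10.1f} MiB")
```

On a live system the same functions can be fed the contents of /proc/meminfo instead of SAMPLE, and snapshots taken a few days apart can be compared to see which field is growing.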

-

Thanks for the suggestion, but as I recall, 1.03b with 6.2.x doesn't support 10 GbE since there is no VMXNET3 driver. Or have there been advancements since I tried it a year ago?

-

I've got ESXi 6.7U3 running on a Dell R510. The NICs include: (1) the built-in dual Broadcom 1 GbE ports, which work as expected, and (2) an Aquantia AQN-107 NBASE-T PCIe card which is not playing nicely with XPE. XPE uses loader 1.02b with DS3615xs 6.1.7 Update 3. The network is set up as VMXNET3, which is required to support 10 GbE.

I have the Aquantia driver package installed in ESXi, and ESXi reports the link as a 10000 Mbps connection. I have an Ubuntu VM and an iperf test to it comes back at 10 Gbit wire speed (9.40 Gbps), so I know ESXi and the NIC are working properly.

With the AQN-107 I'm able to access the XPE GUI, and the XPE network control panel reports a 10000 Mbps connection. However, an iperf test fails and copying a large file from Windows (SMB) fails. Both tests work fine when I configure the VM to use the built-in Broadcom NIC.

My thought is that since ESXi is providing a "virtual" network connection to XPE, no additional drivers should be necessary in the XPE loader. Any suggestions on what to try next?

I have two other ESXi boxes (R720xd & R310) running XPE 6.1.7 U3 that both use a Mellanox ConnectX-2 card and they both run at 10 Gbit speeds with no issue. I want to use the Aquantia card in the R510 because I have extra copper ports available but no more SFP+ ports available.
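For reference, the TCP payload rate to expect from iperf at wire speed can be estimated from the framing overhead. The sketch below assumes IPv4, a 1500-byte MTU, no VLAN tag, and standard Ethernet framing; the function name and defaults are illustrative, not taken from any tool.

```python
# Per-frame bytes on the wire that are not part of the IP packet:
# preamble + start delimiter (8), MAC header (14), FCS (4), inter-frame gap (12).
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12


def tcp_goodput_gbps(link_gbps=10.0, mtu=1500, tcp_option_bytes=0):
    """Estimate the best-case TCP payload rate over Ethernet, in Gbit/s.

    Assumes IPv4 (20-byte header) and a 20-byte TCP header plus any options.
    """
    payload = mtu - 20 - 20 - tcp_option_bytes
    if payload <= 0:
        raise ValueError("MTU too small for the given headers")
    return link_gbps * payload / (mtu + ETHERNET_OVERHEAD)


no_options = tcp_goodput_gbps()
with_timestamps = tcp_goodput_gbps(tcp_option_bytes=12)  # TCP timestamps option
print(f"no TCP options:  {no_options:.2f} Gbit/s")
print(f"with timestamps: {with_timestamps:.2f} Gbit/s")
```

Under these assumptions a healthy 10 Gbit link tops out a little below 9.5 Gbit/s of payload, so a result in that range indicates the path is running at line rate.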

-

I finally got it working! It turned out that none of the commands in set sata_args were being executed. I had to move DiskIdxMap, SataPortMap, and SasIdxMap to common_args_3615. Once I did that, I was able to figure out by trial and error that DSM sees 3 controllers. The trick that worked was to set DiskIdxMap=0C0D. This also resulted in proper numbering of the data drives starting with Drive 1. I think setting DiskIdxMap=0C0D00 would be more proper and would have worked too. I left SataPortMap=1 and SasIdxMap=0.
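As a rough illustration of why DiskIdxMap=0C0D fixed the numbering, the sketch below decodes the value under the commonly described interpretation: two hex digits per controller, each giving that controller's zero-based starting disk index, with DSM's displayed drive number being the index plus one. That interpretation is an assumption on my part, and the helper names are hypothetical.

```python
def decode_disk_idx_map(value):
    """Split DiskIdxMap into one zero-based starting disk index per controller."""
    if len(value) % 2 != 0:
        raise ValueError("DiskIdxMap needs two hex digits per controller")
    return [int(value[i:i + 2], 16) for i in range(0, len(value), 2)]


def first_drive_numbers(value):
    """Assumed DSM drive number of each controller's first port (index + 1)."""
    return [index + 1 for index in decode_disk_idx_map(value)]


print(first_drive_numbers("0C0D"))    # [13, 14]
print(first_drive_numbers("0C0D00"))  # [13, 14, 1]
```

Read this way, 0C0D pushes the first two controllers out to drives 13 and 14, leaving the third controller (the passed-through HBA here) to start at drive 1 by default, and 0C0D00 would state that third entry explicitly.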

-

DSM version: 3615xs 6.1.7-15284 Update 3

Jun bootloader 1.02b

Hardware: Dell R720xd with H310 mini (for my VMs) and PCIe H310 flashed to IT mode

Software: VMware ESXi 6.5U1 with PCIe H310 passed through to Xpenology

----

I need some assistance with properly setting sata_args in synoboot.img in a virtual environment.

I've had Xpenology running smoothly for a year and a half as a VM on my Dell R720xd. The chassis holds 12 drives and for quite a while I've run with two volumes: (1) 8x8TB RAID10 and (2) 2x8TB RAID0. Other than setting the MAC address and S/N in grub.cfg I left everything "stock".

Very recently I decided I wanted to fill the last 2 empty slots with a couple more 8TB drives and make a third volume. When I did that, DSM reported that my RAID10 array had degraded and one of my original 8TB drives was missing, reporting only 11 drives. After a lot of trial and error, I determined that if there are 11 drives installed, DSM is OK. When a 12th drive is installed, I lose one of the originals. Am I running into an issue where my boot drive + 11 = 12 maximum drives?

In ESXi, I have one drive defined, which is the boot drive. This shows up as a SATA drive in DSM's dmesg. My pass-through drives all show up as SCSI drives. In dmesg I can see that the 12th disk is recognized (/dev/sdas), but the drive doesn't show up in DSM.

My sata_args is as follows: set sata_args='sata_uid=1 sata_pcislot=5 synoboot_satadom=1 DiskIdxMap=0C SataPortMap=1 SasIdxMap=0'

I played around with DiskIdxMap and SataPortMap and the changes made zero difference. I found that DSM sees 32 SATA devices, which means SataPortMap=1 wasn't doing anything. I found a thread where someone said that moving it to common_args_3615 would make the system recognize it. When I did that, the disk numbering changed drastically (my first data drive used to start at drive 34 and it moved to drive 2) but DSM could only see 6 drives and indicated my RAID10 volume had crashed.

Does anyone have any ideas on what I can try next? Perhaps move DiskIdxMap to common_args_3615? I also tried modifying synoinfo.conf (usbportconfig, esataportconfig, internalportcfg, and maxdisks) but this made no difference.

At this point, even with the original synoboot.img and without the missing drive, the volume is degraded and I'm going to have to do a repair to get it back to normal. The drive that was missing now says Initialized, Normal. I'm afraid to make any changes that may cause more drives to drop from the array, forcing me to restore everything from a backup.

Thanks for any help.
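To reason about where a device such as /dev/sdas lands relative to the slot limits, it can help to convert the Linux sd name into a zero-based disk index and test it against a port bitmask. The sketch below assumes that internalportcfg is a hex bitmask with one bit per disk slot; the mask value 0xfff is a hypothetical 12-slot example, not a value read from this system.

```python
def sd_index(device):
    """Zero-based disk index for a Linux sd name: sda -> 0, sdz -> 25, sdaa -> 26."""
    name = device.rsplit("/", 1)[-1]
    suffix = name[2:]
    if not name.startswith("sd") or not suffix or \
            not all("a" <= ch <= "z" for ch in suffix):
        raise ValueError(f"not an sd device name: {device!r}")
    number = 0
    for ch in suffix:
        # Letters behave like bijective base-26 digits (a=1 ... z=26).
        number = number * 26 + (ord(ch) - ord("a") + 1)
    return number - 1


def slot_in_mask(index, mask_hex):
    """True if bit `index` is set in a hex bitmask such as '0xfff' (assumed format)."""
    return bool((int(mask_hex, 16) >> index) & 1)


idx = sd_index("/dev/sdas")
print(idx)                         # 44
print(slot_in_mask(idx, "0xfff"))  # False: bit 44 is outside a 12-bit mask
```

If that reading of the bitmask is right, a disk enumerated as sdas sits far outside a 12-slot mask, which would be consistent with the kernel seeing it while DSM does not list it.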

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.2.1-23824 Update 4
- Loader version and model: JUN'S LOADER v1.03b - DS3615xs
- Using custom extra.lzma: NO
- Installation type: VM - ESXi 6.5U2 Dell R310
- Additional comments: none

-

Hardware and overall system/software topology questions

Maxhawk replied to Maxhawk's topic in The Noob Lounge

Sorry, I've not.

-

Hardware and overall system/software topology questions

Maxhawk replied to Maxhawk's topic in The Noob Lounge

I've had my R720xd since mid January and have had Xpenology running on ESXi since then. I've had zero issues and I'm very happy with the setup. I'm using the H310 mini to control two 2½" SSDs in the rear flex bays for VM storage and an IT-flashed H310 for the 12 front bays. The H310 is passed through in ESXi so Xpenology can control the drives directly, with access to the SMART data and temperature readings. I can't comment on whether this would be an upgrade to your Supermicro. I can only say that everything has been 100% stable with 9 total VMs and it seems I'm barely taxing my dual Xeon 2630L CPUs.

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.1.6-15266
- Loader version and model: Jun's Loader v1.02b - DS3615xs
- Using custom extra.lzma: NO
- Installation type: BAREMETAL - Gigabyte GA-P35-DS3R
- Additional comments: NO REBOOT REQUIRED

-

Anyone try Docker DDSM in an ESXi installation?

Maxhawk replied to Maxhawk's topic in Synology Packages

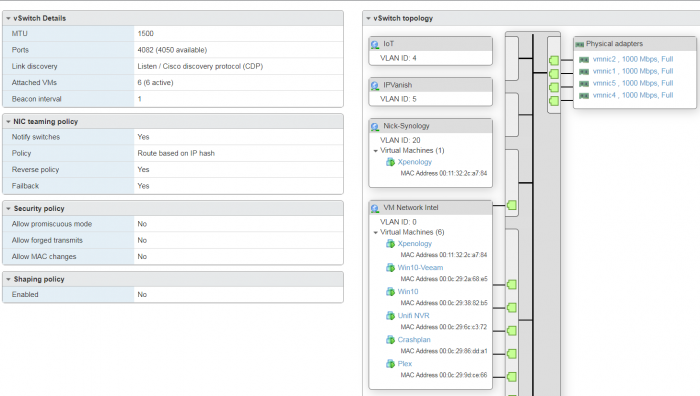

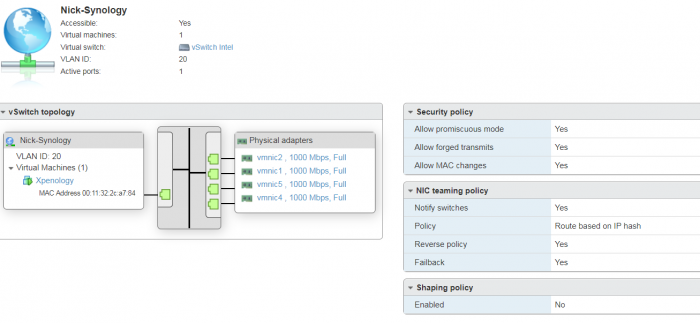

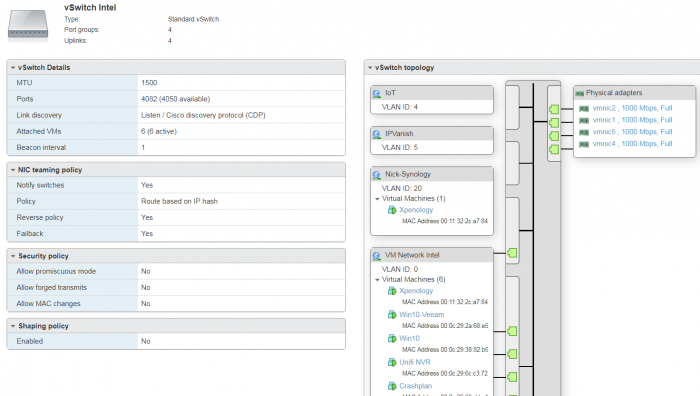

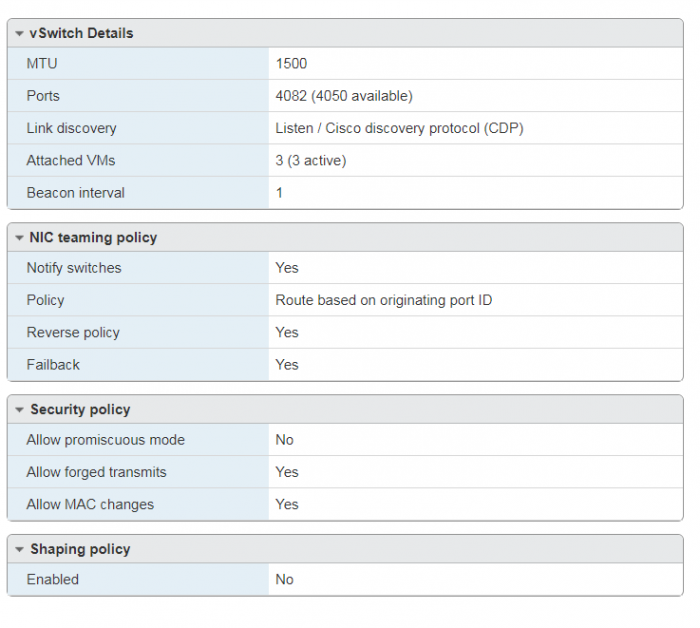

The DDSM network is called Nick-Synology. The only other change I made to the defaults was to change NIC teaming to Route based on IP hash, which should not affect this. I can't remember if I made changes to the security policy of the default vSwitch0 for the management network, but I see that it's not all set to No, FWIW. This switch is on a separate NIC port from vSwitch Intel.

-

Anyone try Docker DDSM in an ESXi installation?

Maxhawk replied to Maxhawk's topic in Synology Packages

Thanks, this worked! I know I tried setting one of those to Yes but not all three. Also, it turns out you don't have to turn these on for the entire vSwitch. I did it only on the port group where I specified the VLAN ID and DDSM got an IP address on the first try.

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.1.5-15254 Update 1
- Loader version and model: JUN'S LOADER v1.02b - DS3615xs
- Using custom extra.lzma: NO
- Installation type: VM - ESXi 6.5 U1
- Additional comments: REBOOT REQUIRED

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.1.5-15254 Update 1
- Loader version and model: JUN'S LOADER v1.02b - DS3615xs
- Using custom extra.lzma: NO
- Installation type: BAREMETAL - (Gigabyte GA-P35-DS3R)
- Additional comments: REBOOT REQUIRED, manual update with previously downloaded file

-

I'm trying to install a second instance of DSM by using DDSM in Docker in an ESXi environment. The reason I can't spin up another XPE VM instance is because all 12 of my drive bays on the backplane are being passed through by an H310 controller (flashed IT mode=HBA). The issue I'm having with the ESXi VM instance is I can't get network connectivity on the DDSM. I've tried DDSM on a separate bare metal installation and it worked fine set up the same way. The only significant difference is one is bare metal while the other one is ESXi. On ESXi I've presented XPE with 2 virtual network cards on different subnets. DSM gets an IP address from both subnets but DDSM can talk to neither of them. This is an ESXi issue, but I can't exactly go asking on a Synology forum about use with ESXi. I was just hoping that someone here may have done it so I know it's possible. Everything looks right as configured in ESXi. Thanks for reading.

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.1.5-15254
- Loader version and model: Jun's Loader v1.02b - DS3615xs
- Installation type: ESXi 6.5U1 on Dell R720xd
- Additional comments: No Reboot Required

-

The physical hard disks as reported by DSM DS3615xs (6.1.x) don't start at 1. Is there any way to change this? It doesn't hurt anything but my OCD doesn't like it. I'm running it in an ESXi 6.5 VM on a Dell R720xd with an H310 controller flashed to IT mode and passed through to XPE. There's a separate H310 mini mono in IR mode that ESXi uses. The drive numbers are actually counting backwards from 44, as I installed the 8TB disks first and later added the other drives. Thanks for any help or insight.

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.1.4 15217 Update 5
- Loader version and model: JUN'S LOADER v1.02b - DS3615xs
- Installation type: Bare Metal (Gigabyte GA-P35-DS3R V1.0)
- Additional comments: Requires reboot

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.1.4 15217 Update 5
- Loader version and model: JUN'S LOADER v1.02b - DS3615xs
- Installation type: VM ESXi 6.5U1 (Dell R720xd)
- Additional comments: Requires reboot

-

Thanks @Polanskiman, I hadn't paid attention to that tutorial since it was about migrating and not a fresh install, and as a noob I didn't realize the steps were relevant to a fresh install. Now it makes sense, but I didn't think to revisit the thread. Will DSM complain if I try to change the loader's serial number on an existing 6.1.4 installation?

-

When I used Jun's 1.02b loader on a spare PC it was a simple process with ESXi to create a VM, copy the synoboot.img and .vmdk files to the VM, and create a drive pointing to synoboot.vmdk. I did this multiple times while getting used to the installation process and trying ds3615xs vs ds3617xs.

I used the exact same process on the R720xd I just received this week and couldn't get it working. Xpenology was not being assigned an IP address (I was looking at my router's lease table). I eventually found some internet threads saying you can go into the command line during boot and type in set mac1=00x11x32x2cxa7x85. This allowed DHCP to get an address and Xpenology worked as expected. The MAC address that showed up in my router matched the address that ESXi was assigning the network card.

After a few iterations of this I decided to hard code the IP address in DSM (as opposed to the static IP assignment by MAC that I normally do). This however still would not work unless I used the 'set mac1=' command during boot.

After some research I found you could update the grub.cfg with a new MAC and serial number. I couldn't figure out how to extract grub.cfg from the synoboot.img but I was able to find the MAC address in synoboot.img with a hex editor. I changed this to my ESXi-assigned MAC address (00:0c:29:61:e4:a4) but I still couldn't get the loader to obtain an IP address, though it would if I used set mac1=00x11x32x2cxa7x85.

Is there a way to change the default MAC address in synoboot or am I stuck with manually assigning 00:11:32:2c:a7:85 to Xpenology?
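Since the MAC shows up here in several notations (colon-separated from ESXi, x-separated at the boot prompt), a small helper can normalize any of them into a single form before it goes into grub.cfg. The sketch assumes the loader's grub.cfg takes the MAC as 12 hex digits with no separators, which is how it is commonly shown for Jun's loader; that format and the helper name are assumptions, so check them against your own grub.cfg.

```python
import re


def normalize_mac(mac):
    """Strip separators from a MAC address and return 12 uppercase hex digits."""
    digits = re.sub(r"[^0-9A-Fa-f]", "", mac)
    if len(digits) != 12:
        raise ValueError(f"expected 12 hex digits, got {len(digits)} from {mac!r}")
    return digits.upper()


# The ESXi-assigned address and the loader default quoted in the post.
print(f"set mac1={normalize_mac('00:0c:29:61:e4:a4')}")  # set mac1=000C2961E4A4
print(f"set mac1={normalize_mac('00x11x32x2cxa7x85')}")  # set mac1=0011322CA785
```

Editing the value in grub.cfg rather than patching bytes in the image with a hex editor avoids touching other copies of the string that the loader may not read.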

-

Hardware and overall system/software topology questions

Maxhawk replied to Maxhawk's topic in The Noob Lounge

Thanks for the responses. I've installed ESXi and Xpenology 6.1 alpha on a machine I built with old spare parts just to get my feet wet. Due to the old CPU (Core 2 Duo E6750) I have to use ESXi 6.0 as this CPU is no longer supported in 6.5. I've got 5 WD 1TB green drives and a 60 GB SSD. The motherboard is a Gigabyte GA-P35-DS3R and has 8 built-in SATA ports. I've installed two HP NC360T cards (Intel 82571) for a total of 4 gigabit ports.

1. I notice I'm not able to create a datastore as RDM. Is this because I don't have a separate drive controller? Will RDM become an option if I have an H200/H310 in IT mode?

2. Since I can't use RDM I'm simply creating a virtual disk to present to Xpenology. I notice that DSM can't read the drive temperature but the S.M.A.R.T. status says OK. Will temperature readings work when RDM is used? Is the S.M.A.R.T. "OK" status a false positive given the way I've connected the drives?

3. What's the proper way to do Ethernet link aggregation with ESXi and Xpenology? I found that within ESXi I can create a switch that does load balancing between two NICs, and Xpenology sees only LAN 1. Alternatively I can present Xpenology with two NICs and let DSM create an 802.3ad bond between LAN 1 and LAN 2. Is there any difference?

4. There are folks who consider Xpenology to be a hack and don't think it's reliable and stable. However, I'm using a DS3617xs .PAT file that I downloaded directly from Synology, so the DSM software certainly is "authentic". Is it the boot image that's considered the "hack"?

5. I'm now considering a Dell R720xd because it seems every generation of hardware comes with a significant improvement in power efficiency. Two to three years of power bill savings will pay for the difference in hardware cost from the R510 I was eyeing before. I don't expect there to be any issues, but are there any known issues with using Xpenology with the R720xd?

6. I've seen some ESXi/Xpenology tutorials that say the boot drive should be set up as IDE (0:0). However, when I use the 1.02b boot image and 1.01 .VMDK file, ESXi only lets me choose SCSI. Is that because the .VMDK file is set up to use SCSI instead of IDE?

7. My boot drive (SSD) shows up as one of the drives in DSM. Is there any harm in leaving it there? Is there a way to prevent DSM from seeing it?

Thanks again for bearing with my noob questions.

-

I currently have a 1511+ with 5x 4TB WD RED drives in SHR. With the demise of Crashplan's Home backup service and the current business version not playing nicely in headless installations, I've started looking into backing up to another Synology at a friend's house (we both have AT&T gigabit fiber and older 151x+ boxes). At first we thought about each buying a DX513 to use as a volume for remote backup, but I found that the CPU in my 1511+ gets into the 90+% load range while the other is backing up, leaving little headroom if I need to do something myself with the Synology. Plus it's been announced that future DSM versions won't be supported on my aging hardware.

With the new 1517+/1817+ being quite expensive, my new thought was to use Xpenology with a used Dell server such as an R510, R520, or R710 and build my own RS3617xs. There are some nice DIY solutions using mini-ITX boards but I want the external hot-swap bays that I'm used to having with my 1511+. Since the CPUs in these servers are overkill for just Xpenology, I figured I could use ESXi to run Xpenology along with a handful of other Linux VMs to run my Ubiquiti controller, Ubiquiti NVR, Pi-hole, OpenVPN server, etc.

I understand that I need a drive controller that supports HBA mode, so for these Dell models I would need either the H200 or H310. Some of these questions get into how ESXi works, but I figure there are folks here who may be familiar:

1. Is this a feasible system topology or is it better to have just Xpenology running on the hardware, and if so, is bare metal the preferred option? I think I read that bare metal has consequences for driver support, such as for the standard Broadcom NICs, RAID controller, etc.

2. With a 12-bay server, could I dedicate 6 drives to Xpenology, 1 to NVR, 1 to various Linux VMs, with the ability to add 4 more drives to my Synology share in the future for expansion (do I need SHR or can RAID6 do this too)?

3. Among these 3 Dell models, is one easier to configure/maintain? The R710 is cheapest but least preferred since it only has six 3.5" bays.

4. How much memory does Xpenology need? Do you think 32GB total RAM would be enough to do what I have in mind?

5. If doing multiple VMs, do I need to dedicate a drive for ESXi or can all this be done from a USB stick/drive?

6. Is there something I'm forgetting? Is this a stupid idea?

Thanks in advance for your attention and responses.