ilovepancakes

Member · Posts: 175 · Days Won: 2

Everything posted by ilovepancakes

-

Now that Redpill loaders for DSM 7 can be reliably created (thanks to many recent contributions from @haydibe, @pocopico, and others, and of course to @ThorGroup's original code, which was very well organized for others to understand), I am trying to narrow down some stability bugs for typical use cases, namely running Redpill DSM 7 on ESXi virtual machines. I'm experiencing an issue where SCSI virtual disks (on virtual LSI SAS or VMware PVSCSI controllers) don't work properly within DSM, and first I am curious whether anyone else sees the same symptoms or if it's just me.

If I use the mptsas or vmw_pvscsi extensions with Redpill, these virtual SCSI disks show up in DSM and can even be used as the install target instead of SATA data disks. But even though the DSM install goes fine on them, and DSM runs fine, they are highly unstable when it comes to creating a storage pool on them. This seems to be related to SMART data not being reliably shimmed for these SCSI virtual disks: the fake SMART data (from TTG's SMART shim in Redpill-LKM) sometimes shows up and sometimes doesn't. When it does show up, it often causes disks to swap positions in Storage Manager, flips the disk name back and forth between "VMware Virtual Disk" and "null Virtual Disk", etc. Most of the time these issues cause the disk to report no health status at all, or an unhealthy one, which of course prevents the disk from being used to create a storage pool in DSM 7.

For comparison, if I add virtual SATA disks to the VM, they show up reliably in Storage Manager and remain stable and fully usable. Also, the SCSI virtual disks don't seem to disconnect or misbehave at the system level, only in Storage Manager and in the health status inside DSM. That is why DSM can be installed and run off these SCSI disks while any further use of them in DSM is not possible.
That being said, I know others have successfully used the mptsas extension (and mpt2sas/mpt3sas, or even DSM's built-in SCSI driver) with physical pass-through disks. I am guessing this works because real SMART data is passed through as well, so DSM sees it and handles those disks correctly. That leads me to believe (again, assuming others can reproduce the above) that the issue lies in the SMART shim in Redpill-LKM and/or the SAS-to-SATA shim TTG included in their latest commit to the LKM repo. From what I can tell, these shims work by interacting with "sd.c", Synology's modified SCSI disk driver. I wonder if the problem is that in this scenario other drivers are in play, and the shim isn't touching them properly.

Again, I am first curious whether this issue is widespread or whether others get virtual SCSI disks on ESXi to work reliably in their configuration. I don't think comparing or testing with Proxmox helps here, because I remember TTG saying Proxmox emulates some SMART data to begin with, and on Proxmox you can use VirtIO anyway, which Redpill is designed to work with out of the box. That said, if others DO have fully reliable SCSI virtual disks in DSM on Proxmox, that would further support the idea that the shims don't interact properly with mptsas and vmw_pvscsi.
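One hypothesis worth checking for the "VMware Virtual Disk" vs "null Virtual Disk" flip: SCSI disks report vendor and product strings in the standard INQUIRY response, where bytes 8-15 hold the vendor ID, bytes 16-31 the product ID, and bytes 32-35 the revision, all space-padded ASCII. The sketch below assumes (unconfirmed) that DSM builds its display name from vendor plus product, so an empty vendor field would produce a name like "null Virtual disk". `display_name` and `fake_inquiry` are hypothetical helpers, and the sample buffers are hand-built, not captured from ESXi.

```python
from typing import NamedTuple, Optional


class InquiryIds(NamedTuple):
    vendor: Optional[str]   # T10 vendor identification (bytes 8-15)
    product: str            # product identification (bytes 16-31)
    revision: str           # product revision level (bytes 32-35)


def parse_standard_inquiry(data: bytes) -> InquiryIds:
    """Extract the ASCII ID fields from a standard SCSI INQUIRY response."""
    if len(data) < 36:
        raise ValueError("standard INQUIRY data is at least 36 bytes")

    def field(start: int, end: int) -> str:
        # Fields are space-padded ASCII; strip padding and any NUL bytes.
        return data[start:end].decode("ascii", errors="replace").strip(" \x00")

    vendor = field(8, 16)
    return InquiryIds(vendor or None, field(16, 32), field(32, 36))


def display_name(ids: InquiryIds) -> str:
    """Hypothetical label builder: vendor + product, 'null' if vendor is missing."""
    vendor = ids.vendor if ids.vendor is not None else "null"
    return f"{vendor} {ids.product}"


def fake_inquiry(vendor: str, product: str, revision: str) -> bytes:
    """Build an illustrative 36-byte INQUIRY buffer (first 8 header bytes zeroed)."""
    return (bytes(8) + vendor.ljust(8).encode() + product.ljust(16).encode()
            + revision.ljust(4).encode())


print(display_name(parse_standard_inquiry(fake_inquiry("VMware", "Virtual disk", "2.0"))))
print(display_name(parse_standard_inquiry(fake_inquiry("", "Virtual disk", "2.0"))))
```

If this assumption holds, comparing the raw INQUIRY vendor field on a "good" and a "null" disk would show whether the vendor string is being lost somewhere between the driver and the shim.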

-

In the latest version, Poco preset the password to: P@ssw0rd

-

Redpill - extension driver/modules request

ilovepancakes replied to pocopico's topic in Developer Discussion Room

Tested; it doesn't work on ESXi. Without VMware Tools or open-vm-tools installed, ESXi greys out the option to shut down or restart the guest VM. I'm pretty sure those two options in ESXi work differently from typical ACPI events handled by acpid anyway. Was worth a shot.

Yep... and if TTG really is gone (still holding out hope, though), it would be great to better organize efforts among the community devs who are capable of continuing the project in various ways.

-

Redpill - extension driver/modules request

ilovepancakes replied to pocopico's topic in Developer Discussion Room

Ohh, I was under the impression that it only works with Proxmox and not ESXi; I'll have to try it out later. Agreed: if this does shut down and restart on ESXi, then the IP address display isn't as important. Do you have the package/code anywhere on GitHub for others to test with? Weird that restart doesn't work if shutdown does... and I'm assuming it doesn't show the IP address in ESXi at all?

Redpill - extension driver/modules request

ilovepancakes replied to pocopico's topic in Developer Discussion Room

Could open-vm-tools somehow be implemented as a Redpill extension? It seems it can't be installed the conventional way used in DSM 6.2 (as a DSM package), because DSM 7 doesn't allow packages to run as root, which open-vm-tools seems to need to work 100%. This would allow data (IP address, etc.) to be passed from DSM to ESXi and would make the ESXi guest shutdown/restart features work.

I appreciate the compile option being there nonetheless, as sometimes it's nice to be able to compile something completely from scratch ourselves, for security. Although I suppose we could still compile rp-lkm manually and just use the resulting .ko as the static module instead of yours. I'm impressed with how easy it now is to generate a loader. I did it via SSH, because on ESXi the mouse doesn't work for some reason when trying to use the GUI. I only tried with a USB 3.1 controller added to the VM; maybe it only works with USB 2.0 or something. Now to try and fix that pesky SCSI virtual disk issue...

-

Copy that. After changing that entry and downloading the modules file so the extensions match correctly, running the script seems to have worked flawlessly with the static rp-lkm option. I didn't try the compile option yet. Well done!

-

The ID "bromolow-7.0.1-42218" in the custom_config.json included with the repo seems to reference jumkey's master branch, but isn't 7.0.1 only included on jumkey's develop branch?

-

Nice job on this project! When running on ESXi with a PV SCSI controller, I get the following from the module auto-detection:

`Found SAS Controller : pciid 15add000007c0`

`Required Extension : No matching extension`

What is your preferred approach for associating that pciid with your vmw_pvscsi extension? Do you want to add/control them yourself, or should we open pull requests on GitHub?
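As a rough illustration of how such a lookup could work: the reported pciid `15add000007c0` looks like a trimmed modalias-style string, vendor `15ad` (VMware), then `d`, then a zero-padded device ID `000007c0` (`07c0`, the PVSCSI controller). That reading of the format is an assumption, and `EXTENSIONS`, `split_pciid`, and `required_extension` are hypothetical stand-ins, not the helper's real code or extension list. The IDs in the table are the well-known ones for VMware PVSCSI (15ad:07c0), the LSI SAS1068 that ESXi presents as "LSI Logic SAS" (1000:0054), and VMXNET3 (15ad:07b0).

```python
import re
from typing import Optional, Tuple

# Hypothetical (vendor, device) -> extension table for illustration only.
EXTENSIONS = {
    ("15ad", "07c0"): "vmw_pvscsi",  # VMware PVSCSI controller
    ("1000", "0054"): "mptsas",      # LSI SAS1068 (ESXi "LSI Logic SAS")
    ("15ad", "07b0"): "vmxnet3",     # VMware VMXNET3 NIC
}

# Assumed format: 4 hex vendor digits, 'd', 8 hex device digits (zero-padded).
PCIID_RE = re.compile(r"^([0-9a-f]{4})d([0-9a-f]{8})$")


def split_pciid(pciid: str) -> Tuple[str, str]:
    """Split the assumed pciid string into 4-digit vendor and device IDs."""
    m = PCIID_RE.match(pciid.lower())
    if m is None:
        raise ValueError(f"unrecognized pciid format: {pciid!r}")
    vendor, device = m.group(1), m.group(2)
    if not device.startswith("0000"):
        raise ValueError(f"device ID wider than 16 bits: {pciid!r}")
    return vendor, device[4:]


def required_extension(pciid: str) -> Optional[str]:
    """Return the matching extension name, or None if nothing matches."""
    return EXTENSIONS.get(split_pciid(pciid))


print(split_pciid("15add000007c0"))
print(required_extension("15add000007c0"))
```

Keying the table on the (vendor, device) pair rather than the raw string keeps it independent of how the detection script pads the IDs.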

-

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

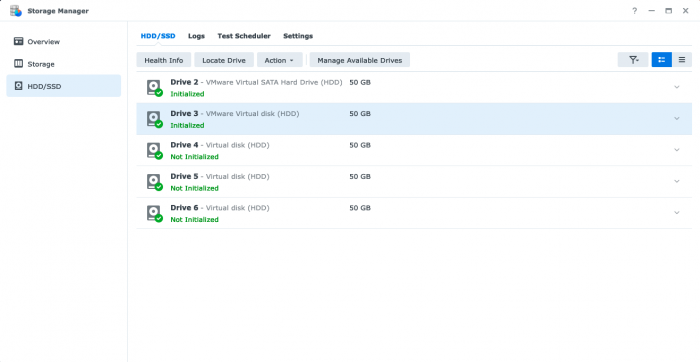

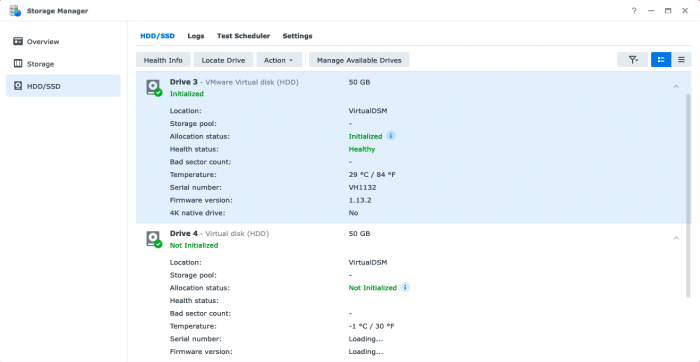

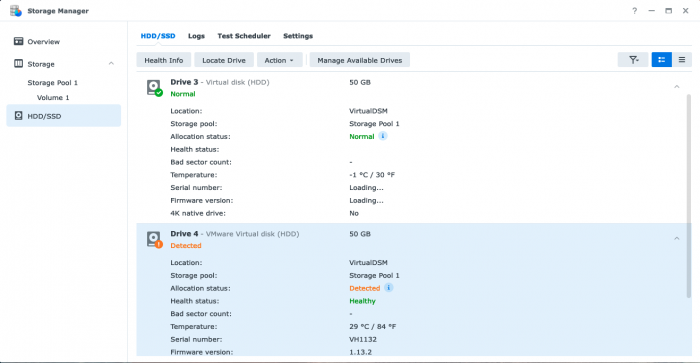

Thanks for the article; that helped me sort out the disk order and get all disks to show up. But it didn't solve the issue of virtual SCSI disks being wonky in DSM. I'll start by saying that SATA virtual disks with Redpill continue to work fine and reliably. Using your extension for vmw_pvscsi (or mptsas), I am able to get virtual SCSI disks to show up in DSM. I'm attaching a few screenshots to walk through the issue.

Here we can see all disks attached. The loader VMDK is Drive 1 (hidden when the Redpill SATA boot option is used instead of USB), and a test SATA virtual disk is Drive 2, on the same SATA controller as the loader. Drives 3-6 are PV SCSI disks attached to a SCSI controller. SataPortMap=24 and DiskIdxMap=0002, so everything shows up correctly. If I create a storage pool and volume with the SATA disk, it works. But notice that only the first SCSI disk shows up as "VMware Virtual disk (HDD)"; the others just show "Virtual disk". And if I expand Drive 3 vs Drive 4, you can see that Drive 3 has fake SMART values, so the SMART shim gets DSM to consider it "Healthy", but Drive 4 (and 5 and 6 too) don't get fake SMART data and don't show a healthy status. As expected, if I create a storage pool and volume with Drive 3, it works, but Drives 4-6 don't even show up as selectable, since they are not marked "Healthy".

Here's where it got weird. After I created the storage pool and volume with Drive 3 (the first SCSI disk), it suddenly swapped positions with Drive 4. One of the screenshots shows Drive 4 as holding the storage pool (which I created with only Drive 3), and Drive 3 as one of the generic "Virtual disk" ones without SMART data. Very weird... but after a minute it flipped back and all was normal again, except that Drives 4-6 still had no fake SMART data and no Healthy status allowing their use in storage pools.

So, building off my previous post, I am wondering if something is going on here between SCSI disks and the SMART shim from @ThorGroup. The disks clearly show up once the proper driver extension is used, but they show up unreliably and have trouble getting fake SMART data, which makes them unstable and unusable for storage pools. That being said, I could easily be wrong, and I'm curious what others are seeing, especially those trying virtual SCSI disks. I am guessing (I have no hardware on my end to try this myself) that those using pass-through SCSI controllers don't see this issue, since I assume SMART data passes through as well, which means the Redpill SMART shim isn't used to fake data.
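For readers following the SataPortMap/DiskIdxMap values: as these parameters are commonly described for Jun- and Redpill-style loaders, each SataPortMap digit is the number of ports on one SATA controller, and each two-hex-digit DiskIdxMap pair is the zero-based slot where that controller's first port lands. The sketch below applies that reading to SATA-style controllers only; `slot_layout` is a hypothetical helper, and it does not model where SCSI/SAS disks end up, which the posts here show can behave differently. With SataPortMap=24 and DiskIdxMap=0002 it puts controller 0 on Drives 1-2 and controller 1 on Drives 3-6, which lines up with the layout described above.

```python
from typing import List


def slot_layout(sata_port_map: str, disk_idx_map: str) -> List[List[int]]:
    """Map each controller's ports to 1-based DSM drive numbers.

    Assumes one decimal digit per controller in SataPortMap and one
    two-digit hex start index per controller in DiskIdxMap.
    """
    if len(disk_idx_map) % 2:
        raise ValueError("DiskIdxMap must contain whole hex byte pairs")
    ports = [int(ch) for ch in sata_port_map]
    starts = [int(disk_idx_map[i:i + 2], 16)
              for i in range(0, len(disk_idx_map), 2)]
    if len(starts) < len(ports):
        raise ValueError("DiskIdxMap needs a start index for every controller")
    # DSM numbers drives from 1, so slot index 0 is "Drive 1".
    # Extra DiskIdxMap entries beyond the listed controllers are ignored.
    return [list(range(start + 1, start + count + 1))
            for start, count in zip(starts, ports)]


print(slot_layout("24", "0002"))   # [[1, 2], [3, 4, 5, 6]]
print(slot_layout("58", "0A00"))   # [[11, 12, 13, 14, 15], [1, 2, 3, 4, 5, 6, 7, 8]]
```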

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

So that helped, sort of. With vmw_pvscsi virtual disks, I can get them to show up in DSM. BUT if I use DiskIdxMap=0A00 and SataPortMap=58 (with the loader on SATA 0:0 and the first data disk on SCSI 0:0), the loader disk doesn't show up at all, which is fine and normal with the SATA boot entry on Redpill, and the single SCSI data disk shows up as "Drive 6" in DSM. If I change SataPortMap to just "1", the SCSI virtual disk moves to "Drive 2" in DSM. All fine so far.

But here is where it gets weird. If I add more virtual disks to the same SCSI controller, they show up in Storage Manager but aren't usable, mainly because the health status either doesn't show up or shows "Not Supported", so I guess something is failing with the SMART shim in Redpill? For example, if I leave DiskIdxMap=0A00 and SataPortMap=1, I see Drives 2-7 listed (after adding a total of 6 virtual SCSI disks in ESXi). But Drives 3-7 can't be used for a storage pool because the health status fails. Drive 2, the original drive DSM is installed on, shows Healthy and can be used. If I change SataPortMap to 58, like you use, only 3 disks in total show up in Storage Manager rather than all 6. They show up as Drives 6-8, with only Drive 7 showing as "Healthy" and usable. If I reboot, Drives 7 and 8 show healthy; if I reboot again, only Drive 8 shows healthy. So clearly something is going on with the SMART shim.

I do notice that for the drives that show healthy, DSM shows a serial number and a firmware version for the virtual disk. I wonder if these are reported by ESXi, since these are paravirtual SCSI disks? If so, could the SMART shim be conflicting with some partial SMART data that ESXi actually presents to DSM? To test that theory, I changed the virtual SCSI controller from VMware Paravirtual to LSI SAS.

With SataPortMap=58 (to test the original scenario), only 3 of the 6 data disks show up, with the listing starting at Drive 6, but they all show "Healthy". With SataPortMap=1, all 6 disks show up, starting at Drive 2 in Storage Manager, and all 6 are "Healthy", BUT creating a storage pool fails. DSM just says it failed to create the storage pool using 1 or more disks. This happens whether I try RAID 1, RAID 5, or even a basic storage pool with 1 disk. It just doesn't like making a storage pool on a SCSI virtual disk... Could it be the SataPortMap or DiskIdxMap setting? Is there another setting I could be missing? My config.json just has those two values defined, plus a serial and MAC of course. The extensions installed are the default TTG ones (boot-wait and virtio) plus vmxnet3, mptsas, and vmw_pvscsi.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

@pocopico Ahh, okay, I was using mpt2sas, so I switched to the mptsas extension, BUT I still get no drives detected when trying to install DSM. If I move the virtual HDD to a SATA controller, it shows up as available to install DSM on. So, since I am now using the correct driver (mptsas) for LSI SAS virtual SCSI in ESXi, is there something else I need to get virtual SCSI HDDs to show up for the DSM install? I also added the vmw_pvscsi extension to the same loader, and if I attach the same virtual HDD to a paravirtual SCSI controller, I get the same result: no drives detected for the DSM install. Hoping I am just missing something simple here...

EDIT: If I telnet into the VM while the DSM loader boots and run lsmod, it does show the mptsas and vmw_pvscsi modules in the list (lsmod output below). But I can't run lspci in the telnet console to check that way; the command isn't found. Here is the full output: https://pastebin.com/NyfN3N2R

It seems there is a point where it does detect the SCSI disk, because it decides to ignore it for a shim (which is correct, I assume?), and then later, when it runs the mptsas check script from the extension, it says the module is not loaded... So maybe the mptsas and vmw_pvscsi modules really aren't getting loaded fully for some reason?
lsmod output (Tainted: PF):

| Module | Size | Used by |
|---|---|---|
| usbhid | 26503 | 0 |
| hid | 79391 | 1 usbhid |
| bromolow_synobios | 70388 | 0 |
| adt7475 | 30106 | 0 |
| i2c_i801 | 11053 | 0 |
| nfsv3 | 23713 | 0 |
| nfs | 130128 | 1 nfsv3 |
| lockd | 72839 | 2 nfsv3,nfs |
| sunrpc | 202956 | 3 nfsv3,nfs,lockd |
| i40e | 350790 | 0 |
| ixgbe | 274157 | 0 |
| igb | 180080 | 0 |
| i2c_algo_bit | 5168 | 0 |
| e1000e | 212512 | 0 |
| dca | 4576 | 2 ixgbe,igb |
| vxlan | 17560 | 0 |
| ip_tunnel | 11368 | 1 vxlan |
| vfat | 10287 | 0 |
| fat | 51522 | 1 vfat |
| sg | 25890 | 0 |
| etxhci_hcd | 135146 | 0 |
| vmxnet3 | 38645 | 0 |
| vmw_pvscsi | 16080 | 0 |
| mptsas | 38167 | 0 |
| mptscsih | 17934 | 1 mptsas |
| mptbase | 62090 | 2 mptsas,mptscsih |
| usb_storage | 48966 | 0 |
| xhci_hcd | 85621 | 0 |
| uhci_hcd | 23950 | 0 |
| ehci_pci | 3904 | 0 |
| ehci_hcd | 47493 | 1 ehci_pci |
| usbcore | 208589 | 7 usbhid,etxhci_hcd,usb_storage,xhci_hcd,uhci_hcd,ehci_pci,ehci_hcd |
| usb_common | 1560 | 1 usbcore |
| redpill | 146375 | 0 |
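A loaded module (as listed by lsmod) does not necessarily mean the driver has claimed a controller. On Linux, each registered PCI driver has a directory under /sys/bus/pci/drivers/, usually named after the module, and every device it is bound to appears there as an entry named by its PCI address (for example 0000:03:00.0). So in the telnet shell, `ls /sys/bus/pci/drivers/vmw_pvscsi/` may answer the question without lspci. The Python sketch below does the same check; `bound_pci_devices` is a hypothetical helper, its `sysfs_root` parameter exists only so it can be run against a fake tree, and the loader's ramdisk may not ship Python at all.

```python
import os
import re
from typing import List

# PCI address: domain:bus:device.function, e.g. 0000:03:00.0
PCI_ADDR_RE = re.compile(r"^[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]$")


def bound_pci_devices(driver: str, sysfs_root: str = "/sys") -> List[str]:
    """Return PCI addresses currently bound to `driver` (empty if none)."""
    path = os.path.join(sysfs_root, "bus", "pci", "drivers", driver)
    if not os.path.isdir(path):
        return []  # driver not registered: module absent or failed to load
    # Skip control files such as bind, unbind, new_id and the module link.
    return sorted(name for name in os.listdir(path) if PCI_ADDR_RE.match(name))


if __name__ == "__main__":
    for drv in ("mptsas", "vmw_pvscsi"):
        devices = bound_pci_devices(drv)
        print(f"{drv}: {', '.join(devices) if devices else 'no device bound'}")
```

An empty result for a driver whose module does appear in lsmod would point at the driver not matching the virtual controller, rather than at the module failing to load.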

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

I tried loading the mpt2sas extension into a 7.0.1 loader and used LSI SAS, LSI Parallel, and VMware Paravirtual controllers with a virtual SCSI disk on ESXi, but the virtual disk doesn't show up in DSM at all. Only SATA virtual disks show up in DSM.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

@pocopico Does one of your driver extensions support SCSI virtual disks in ESXi? I built an image using redpill-helper and 3615xs v7.0.1 from jumkey's branch, but SCSI disks added to the VM don't show up when installing DSM; only virtual disks on the SATA controller do. I thought I once read somewhere that 3615xs has SCSI support natively. Also, does anyone know why on TTG redpill-load builds I can put the redpill loader image on SATA controller 0 and add SATA controller 1 for data disks, but on jumkey's 7.0.1 all virtual disks have to be on SATA controller 0 to be seen for the DSM install? How do you use 2 separate SATA controllers, as is typically recommended? I have no problem getting it to work like that on builds based on the TTG repo.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

I agree, and if this is the reason TTG stepped back, it is a real damn shame. RedPill was finally something in this community that anyone could build on, rather than the code being locked down to one person (Jun). That is what's needed to make this community bigger and even better. It seems the only people sharing full images are people who don't know how to build the image in the first place..., which suggests they also likely don't understand the nuances of open-source versus copyrighted software. Further proof they shouldn't be messing with RedPill... First of all, I pray TTG is okay, and that they end up coming back if the above is the reason. Please forgive our sins @ThorGroup.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Nice! An SPK of this would be great, but I see what you mean about DSM 7 and running SPKs as root. I'm assuming this is why there is no open-vm-tools package for DSM 7 either? Hopefully there will be a workaround for that some day too. What setup are you using to get a 10G NIC on ESXi with Redpill? Did you pass through a physical NIC, or is it virtual? I can only get 1G max with an e1000e vNIC.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

+1 for this! Would be great to run Surveillance Station with all those deep learning features.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

It seems the latest release of rp-lkm/rp-load is causing install issues again with DSM 7 on ESXi (at least on the 3615 platform). I have not tried 918, but with the latest Redpill the loader boots and then the install fails, as many others have posted. /var/log/messages reports an error mounting synoboot2 even though the SATA boot option is used. I'm guessing something broke with the changes to SATA shimming in the latest releases. Curious what @ThorGroup sees, since I believe they posted elsewhere that they tested on ESXi this time and everything worked fine. Also curious whether those of you testing the same setup get synoboot2 mounting errors in /var/log/messages. By the way, I also get the GPIO pin message spam on the console output; I know a few others posted that they were getting that in one of the GitHub issues.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Agreed, I wonder if this is the issue. VMDKs created by StarWind V2V Converter do work in ESXi, but you have to choose "ESXi Pre-Allocated" as the option in StarWind V2V Converter, which produces the flat file and the descriptor file. When I upload both of them to the ESXi datastore, ESXi seems to merge the two into one VMDK file, which can then be used with a VM.
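For anyone checking what the "ESXi Pre-Allocated" output contains: the small .vmdk descriptor is plain text, and its extent line names the -flat.vmdk data file along with its size in 512-byte sectors (for example `RW 262144 VMFS "loader-flat.vmdk"`). The sketch below pulls the extent lines out so you can confirm the descriptor points at the flat file you actually uploaded. `parse_extents` is a hypothetical helper, and the sample descriptor and file name are illustrative, not output captured from StarWind.

```python
import re
from typing import List, NamedTuple


class Extent(NamedTuple):
    access: str     # RW, RDONLY or NOACCESS
    sectors: int    # size in 512-byte sectors
    kind: str       # extent type, e.g. VMFS for ESXi flat disks
    filename: str   # data file the descriptor points at

    @property
    def size_bytes(self) -> int:
        return self.sectors * 512


EXTENT_RE = re.compile(r'^(RW|RDONLY|NOACCESS)\s+(\d+)\s+(\w+)\s+"([^"]+)"')


def parse_extents(descriptor: str) -> List[Extent]:
    """Return every extent line that names a data file."""
    extents = []
    for line in descriptor.splitlines():
        m = EXTENT_RE.match(line.strip())
        if m:
            access, sectors, kind, filename = m.groups()
            extents.append(Extent(access, int(sectors), kind, filename))
    return extents


SAMPLE = '''# Disk DescriptorFile
version=1
CID=fffffffe
parentCID=ffffffff
createType="vmfs"

# Extent description
RW 262144 VMFS "loader-flat.vmdk"
'''

for ext in parse_extents(SAMPLE):
    print(ext.filename, ext.size_bytes // (1024 * 1024), "MiB")  # loader-flat.vmdk 128 MiB
```

If the filename in the descriptor doesn't match the uploaded flat file exactly, ESXi won't be able to pair the two.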

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Does /var/log/messages show anything related to face detection or Photos?

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

OK cool. Did that log you posted above showing the face detection plugin failing come from /var/log/messages or another log?

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Usually, DSM features that need to authenticate use a combination of a real serial number and the MAC address matching that specific serial, and both have to match the model name too. If you are using a real serial/MAC combo from a DiskStation matching the loader model you are running, and it still doesn't work, then face detection sounds like it uses a different method of "authentication" than Synology used in the past for things like transcoding. It's also possible that face detection not working isn't an authentication problem at all, but something entirely different that just hasn't been solved yet with Redpill loaders.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Right, I'm saying some sort of tool LIKE the one above probably needs to be developed to activate face detection on Redpill installs of DSM. And if it is developed, combining it with the code that activates video transcoding would be great.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

I'm guessing something like this will need to be developed: https://github.com/likeadoc/synocodectool-patch It would be awesome if a fix could even be built into the loader somehow, so that any Redpill image comes with codecs for Video Station and Photos pre-activated, along with the face detection fix.