ilovepancakes

Member, 175 posts, 2 days won

Everything posted by ilovepancakes

-

I was able to get all my instances of 7.1.1 updated to 7.2.1 pretty smoothly using that method. In fact, I changed loaders and re-built to 7.2.1 all in one step to save time, and that worked okay. The only hiccup I noticed when using your loader is that the SataPortMap and DiskIdxMap values set in your loader, or even by manually editing the user_config.json file, are NOT saved when building the loader. After the build, both of those values in the user_config.json file are reset to nothing and just show "". And when rebooting to boot into DSM, the loader's SATA boot options line also shows no values set for these. I have to hit "e" and add them manually to the SATA boot line in that screen, and then it seems to save them and use them in the future. Why would setting them in your loader build settings or in user_config.json before the build not be honored, and the values not actually be saved for use when booting DSM?
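One way to confirm the behavior described above is to inspect user_config.json right after a build. The following is only a minimal sketch: it assumes the boot parameters sit under an `extra_cmdline` object (as is common in TCRP-style configs), and both the file location and the helper name `missing_boot_values` are hypothetical.

```python
import json
from pathlib import Path

# Assumption: boot parameters live under "extra_cmdline" in user_config.json.
# Adjust the key names if your loader lays the file out differently.
KEYS = ("SataPortMap", "DiskIdxMap")


def missing_boot_values(config_text, keys=KEYS):
    """Return the keys that are absent or set to an empty string."""
    config = json.loads(config_text)
    cmdline = config.get("extra_cmdline", {})
    return [k for k in keys if not str(cmdline.get(k, "")).strip()]


if __name__ == "__main__":
    path = Path("user_config.json")  # hypothetical location; adjust as needed
    if path.exists():
        missing = missing_boot_values(path.read_text())
        print("Empty after build:", missing or "none")
```

Running it once before and once after the build would show whether the build step is what blanks the two values.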

-

Thanks Peter. I remember a while ago you mentioning you were going to make an mshell version of TCRP, nice to see it now!! So, if I understand correctly, I should first switch from my current loader to your loader, without even updating DSM, just to get the loader itself switched? And I assume this just involves replacing the current loader VMDK (I am on ESXi) with your loader VMDK, then building in your loader using same settings as current loader/DSM? Then once that is all complete and DSM is working on 7.1.1 using your loader, then follow the steps you outlined to rebuild again for 7.2.1, then reboot, and then it will prompt to install 7.2.1? Do I have that all correct?

-

Been a while since I have updated DSM or had time to visit here. Wondering if someone can outline for me the proper steps to get on DSM 7.2. I am currently running TinyCore Redpill Friend 0.9.3.0 loader image and am on DSM 7.1.1 Update 5. What is the order and steps I need to do to get on latest DSM 7.2 along with getting to latest TCRP loader?

-

Mailboxes are per user. If you are using the same user account added to both email domains like in your example, there is one login for that user and one mailbox, it just adds both domains to that one user so they can use both. I believe you'll need to create separate user accounts to use each email address as its own. I don't know if gmail still works this way but I believe you used to be able to send emails to a gmail user via "username@gmail.com" or "username@googlemail.com" so kinda the same concept.... both are same user mailbox just two different addresses going to it.

-

Just boot TinyCore RP on the same VM you already have running and use the built-in generator to generate a SN, then run the commands again to build Redpill using that SN. It will update your existing install to use the new SN. That being said, I don't know if using a generated serial number will work or not. I wonder if AME needs an actual SN from a real Synology unit.

-

Awesome, thank you! Activation works and initial testing seems to play HEVC files just fine now.

-

Advanced Media Extension will not activate dsm7.1-42661

ilovepancakes replied to phone guy's topic in Synology Packages

I am running TC Redpill with the 3622xs loader on 7.1 Update 4. AME doesn't activate, so I changed the grub commands on boot to set netif_num=2 and added a 2nd MAC address. Even with 2 MAC addresses and the correct netif_num, AME still does NOT activate. Guess netif_num isn't the solution?
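For anyone wanting to rule out a simple mismatch before digging further, here is a rough sketch that compares `netif_num` with the number of `macN=` entries on a boot line. The helper name `netif_mismatch` and the sample MAC values are made up for illustration, and a consistent boot line does not by itself mean AME will activate.

```python
import re


def netif_mismatch(cmdline):
    """Compare netif_num against the number of macN= entries on a boot line.

    Returns (declared, mac_count, mismatch).
    """
    # Split the boot line into key=value pairs, ignoring bare flags.
    params = dict(p.split("=", 1) for p in cmdline.split() if "=" in p)
    mac_count = sum(1 for k in params if re.fullmatch(r"mac\d+", k))
    declared = int(params.get("netif_num", 0))
    return declared, mac_count, declared != mac_count


if __name__ == "__main__":
    # Hypothetical boot line fragment; the MAC values are placeholders.
    line = "netif_num=2 mac1=001132AAAAAA mac2=001132BBBBBB"
    print(netif_mismatch(line))
```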

Redpill Loader information thread

ilovepancakes replied to ed_co's topic in Developer Discussion Room

Building off this, I have noticed in the past that many users experienced issues with docker, specifically databases that cause high CPU usage. This would cause Redpill to crash, and was something @ThorGroup was going to look into before they disappeared. Just this week, I noticed crashing behavior in Redpill when Synology Drive attempts to index a lot of files, which sucks because it makes using Synology Drive pretty unstable on Redpill, which is a major feature to be missing out on. Indexing involves database operations in DSM of course, so obviously there are still stability issues in Redpill with things that involve databases unless they are small databases. My concern is.... it seems that fixes to problems like that are handled in Redpill-LKM or even the base Redpill-Load. As far as I know, there have been zero developments to RP-LKM since TTG left. All of the work people have been doing lately to make installing Redpill easier, like RPTC, etc., is amazing... but it jumps the gun in the sense that it just allows easier install of a buggy implementation of LKM. This is just going to cause headaches for everyone, especially as more people install with these easy tools and then have problems like the above. I'm really hoping someone is capable of actually updating RP-LKM to make the underlying system more stable.

Depends on the update. Some updates like 7.0.1 U3 and 7.1 require a newer version of loader generated by different branch versions of redpill-load some users have made. If the update you are going to is compatible with the loader you already built, you don't need to rebuild the loader simply for the update. I don't believe Tinycore-Redpill has been updated to work with 7.0.1 U3 or 7.1 yet. I know it's coming though. In the meantime: https://github.com/dogodefi/redpill-loader-action

-

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Ahh interesting. It seems there is no practical downside to FMA3 missing from DS3622 then, other than maybe slightly worse performance on code that has to multiply numbers and then add to them?

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

I could be completely off on this but doesn't 3622 use the newer kernel version (v4)? And if so, isn't that the reason 918 doesn't work on older CPUs? How would 3622 work then when 918 doesn't?

You have to create a custom PAT file for now if you want to upgrade to U1/U2/U3 on 3617 as the format Synology used on their PATs changed so RP TinyCore can't patch them yet. See here:

-

Successfully migrated an install from Jun's 1.04b 6.2.3 to RP-TC 7.0.1 Update 2. Does the FixSynoboot.sh script need to be left "installed", or can it be removed because RP doesn't need it to operate correctly?

-

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Awesome, as usual, you all rock. The ncurses interface sounds great…. Just when we all think the project is already in a state above and beyond it gets better!

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

OK that makes sense, so basically building the loader using data from the generated PAT for 7.1 rather than the original 7.1 PAT that can't be unpacked/read anymore by TC. I wonder if there is an eventual way to automate that building of the generated PAT. Unless we find a way to unpack the original without booting it and generating live from DSM telnet, I would imagine it would be pretty dang hard to automate your procedure you came up with since it seems to involve a running instance of DSM to be able to telnet into and build the PAT manually.

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

So is proper procedure to create the custom PAT file using an existing install..... then use that PAT file in a new TinyCore install as the PAT that TC patches? Then use that patched custom PAT file as the install PAT file when installing DSM for first time?

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Normally with Redpill so far I have been installing DSM via the PAT file provided directly from Synology's site after creating the loader and it installs and works just fine. But do you mean with U2, generating the PAT via those provided steps and then installing the U2 via that PAT is the only way to make it work? I tried the steps listed and rebuilt the loader by providing the custom U2 PAT file but upon rebooting it is in a boot loop from the GRUB menu, it starts to load the kernel then reboots immediately. Are you saying I should restore from a snapshot back to working 7.0.1 THEN use the PAT written by Redpill (which itself is based on custom PAT) to update to U2?

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

I ran into the same issue of not being able to update 3622xs to 7.0.1 U2. I don't get what this newly generated PAT file does.... Is it just a gz version of the U2 PAT file from synology? And then why does that need to be re-run through tinycore? Tinycore has to modify redpill code using info from the U2 PAT file?

- Outcome of the update: UNSUCCESSFUL
- DSM version prior update: DSM 7.0.1-42218 UPDATE 2
- Loader version and model: Tinycore Redpill DS3615xs
- Using custom extra.lzma: NO
- Installation type: ESXi v7U3c
- Additional comments: Update completes and reboots successfully but DSM installation wizard comes up after reboot and prompts for a recover/migration of disks. After choosing to "Recover" via button provided, DSM takes a few seconds to "Recover" then reboots again. Same recover prompt/wizard comes up again, so DSM won't load fully.

- 5 replies

-

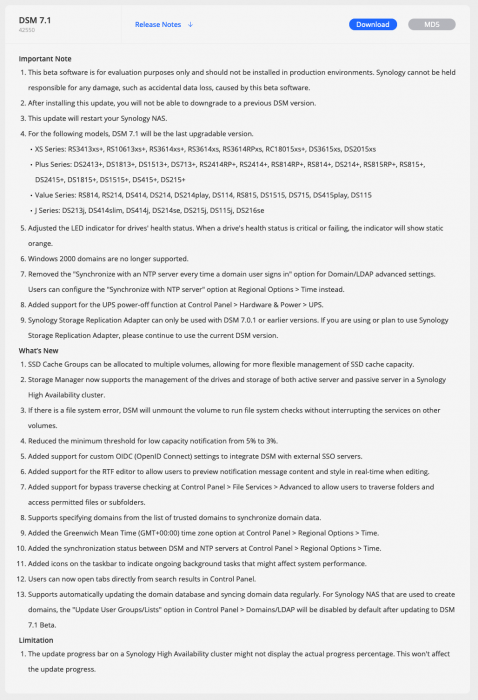

- dsm 7.1-42550

- major update

-

(and 1 more)

Tagged with:

-

Wonder if this is because it is beta/preview? Have they done this in the past with major non-stable releases?

-


This page seems to have gone live recently but the download links don't work for me. https://prerelease.synology.com/en-global/download/dsm71_beta Anyone happen to have them work and try out if v7.1 is an easy update for Redpill/Tinycore to handle?

-

That's a good point and I believe correct. If I take a virtual disk used under a vSATA controller and move it to a vSCSI controller, it will still be accessible. The only thing I thought made SCSI more performant when virtualized is a more performant driver, like VMware Paravirtual SCSI. But, if performance really is the same or so similar, it doesn't matter to me with DSM. It isn't like I'm running a giant database or 1,000 users trying to access and write/read at the same time. There definitely are performance gains in other operating systems when using PVSCSI, for example, over vSATA controllers with the VM. Plenty of people have tested.

-

Thanks for the insight. I agree it isn't a "problem" with Redpill itself, other than simply not being supported/coded (to make the SMART data shim work on virtual SCSI/SAS drives like Redpill does with SATA). That being said, on 1.04b 6.2.x I have used virtual SCSI disks in ESXi as the main DSM drives and have had 0 issues with them. The only reason I did this is because, from my beginning days with Xpenology, I thought everyone always said virtual SCSI disks would be more performant than virtual SATA, especially the VMware Paravirtual SCSI ones since that is made specifically for virtualization. But, if vSATA vs. vSCSI really doesn't make a difference and both are equally performant, then I'll just use SATA and call it a day with the SCSI stuff. Very rudimentary "benchmarks" lead me to believe they are in fact basically the same, but I didn't run anything scientific or in depth at all. Just compressed a few files and duplicated a few large files, etc... and the timing was basically the same between a vSATA volume on DSM and a vSCSI volume on the same DSM VM.

-

RedPill - the new loader for 6.2.4 - Discussion

ilovepancakes replied to ThorGroup's topic in Developer Discussion Room

Sounds like a driver issue. What NIC do you have?