scoobdriver

Member | Posts: 193 | Days Won: 3

Everything posted by scoobdriver

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

Yep, confirmed. I use the standard NIC on 6.2.3 with loader 1.03b and no modified extra.lzma, also with an HBA card, on bare metal (Gen8 MicroServer).

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

I believe this changed: 6.2.3 supports the onboard NIC. I use the two standard NICs on my Gen8 with no modifications.

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

Do you notice any difference on the Gen8 MicroServer (E3-1275 V2)?

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

No, I have an H220 card in the G8. It's flashed to IT mode and is basically an LSI 9207-8i (which supports 8 drives). I connect it to the internal 4-drive bay with the existing SAS cable (i.e. I moved the existing SAS-to-backplane cable from the onboard connector to the LSI card), and I mounted 3 SSDs inside with a SAS-to-4-SATA cable on the other port of the LSI. This still lets me add 4 drives on the existing onboard SAS port and one drive on the odd SATA port (i.e. potential for 13 drives). Conscious I'm going off topic on this thread, so feel free to PM me if you need any ideas.
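The 13-drive figure can be tallied per controller. A minimal Python sketch; the controller labels are hypothetical, and the port counts are the ones given in the post (8 on the LSI card, 4 on the freed-up onboard SAS port, 1 on the odd SATA port).

```python
# Hypothetical labels; port counts are taken from the post.
# The H220 (LSI 9207-8i) has 8 ports: the 4-bay backplane plus a
# SAS-to-4-SATA breakout (3 SSDs in use, 1 spare).
controllers = {
    "H220 / LSI 9207-8i (backplane + breakout)": 8,
    "onboard SAS port (freed up)": 4,
    "odd onboard SATA port": 1,
}


def total_drive_slots(ports_by_controller: dict[str, int]) -> int:
    """Sum the usable drive ports across all controllers."""
    return sum(ports_by_controller.values())


if __name__ == "__main__":
    for name, ports in controllers.items():
        print(f"{name}: {ports}")
    print("potential drives:", total_drive_slots(controllers))  # potential drives: 13
```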

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

Cool! I've put the backplane / 4 HDDs on the LSI card (I think the standard onboard SATA used for the 4 HDDs only supports 3 Gb/s on 2 ports and 6 Gb/s on the other 2), and also put 3 SSDs on the LSI, which I'm using for applications, Docker and VMM storage within XPE. If I go ESXi, I can then pass through the LSI card with the 7 drives (on 6.7 U3, as 7.0 is not supported) for my main XPEnology storage, and add additional SSDs on the onboard SATA/SAS AHCI controller to use in ESXi for additional VMs and the RedPill boot image. (If that makes sense.)

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

This is my intention also. I was ready to do this with Jun's bootloader, as I have run it as a test with RDM on another machine and it works well, but with the new development from the great team putting RedPill together, I figured I would wait and watch the project, as it looks like it is going to be active and support 6.2.4, 7.0 and upwards. It would also free up some of my compute to run other ESXi guests/VMs (rather than Synology VMM, as I do at the moment, which doesn't have the flexibility of ESXi). (You can use a newer DSM version and still migrate, btw.) One thing to note: I'm not sure if you are using the onboard SATA/RAID B120i controller. If you are and you pass it through in ESXi, you would not be able to add further VMs without an additional card. I was going to pass my PCIe HBA card through and then use my onboard SATA for other ESXi VMs. Otherwise you could RDM your XPEnology disks (but you don't get SMART data).

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

@WiteWulf I've not tried on bare metal yet; I'm not quite ready to take the jump. My G8 bare-metal XPEnology runs most of my home Docker containers and a couple of VMs, and I also use an H220 HBA card in my G8 which I need to check works, so I need to schedule some downtime, lol. I've only tested on my ESXi box so far, with 6.7 and 7.0, which boot with legacy BIOS, not UEFI.

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

@WiteWulf I don't see your signature, perhaps because I'm on an iPad. What is the CPU? I'm on a G8 with an E3-1265L V2. I'm also reluctant to go virtual; I run ESXi on another box.

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

UEFI doesn't need to be enabled. Are you using DS3615xs? The CPU in the Gen8 does not support DS918+.

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

The DS3615xs DSM 7.0.1 Release Candidate is now out.

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

Wish I could try it, but I'm using ESXi and there is no SATA DOM support for DS918+, so it won't load (so I'm using DS3615xs with the DSM 7.0 beta). Does DS918+ 7.0 have i915.ko?

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

Do you know if there are any models which support SATA DOM and HW transcoding, please?

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

I don't think you need GPU passthrough for face detection. I'm trying DS3615xs in ESXi (because DS918+ doesn't support SATA DOM) and face detection works; DS3615xs doesn't support HW transcoding, and I don't pass through the iGPU.

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

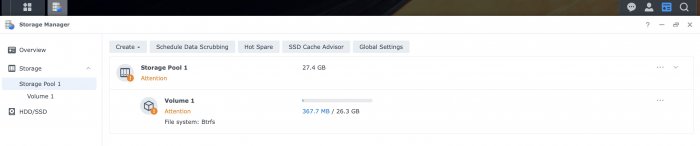

I was also able to use the VMware/ESXi SATA disk by first booting with a 6.2.4 loader, initialising/creating the pool/volume, and then swapping the loader for 7.0 and migrating. The disk is now usable (but does show "Attention").

How to tell if iGPU is been used for HW transcoding DS918+ (Esxi)

scoobdriver replied to scoobdriver's topic in DSM 6.x

Oh, I also swapped the extra.lzma and extra2.lzma from Jun's loader for the extra.lzma/extra2.lzma for loader 1.04b DS918+ DSM 6.2.3 v0.13.3 in this post. I believe this replaces the i915.ko for the stock versions.

How to tell if iGPU is been used for HW transcoding DS918+ (Esxi)

scoobdriver replied to scoobdriver's topic in DSM 6.x

I added the following under devices in the Docker JSON file (I've now also done this with Plex, and that is also HW transcoding with Plex Pass): "devices" : [ { "CgroupPermissions" : "rwm", "PathInContainer" : "/dev/dri", "PathOnHost" : "/dev/dri" } ]. I also ran chmod 666 /dev/dri/ (not sure if this was necessary, as I also added my Docker user to the video group).
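The `devices` entry quoted in the post can also be added to a container's JSON settings programmatically. A minimal Python sketch, assuming the settings are a JSON object with a top-level `devices` list in the shape shown in the post; the function name `add_dri_device` and the example settings are hypothetical, and importing the result back into DSM's Docker package is not shown.

```python
import json

# The mapping quoted in the post: expose the host's /dev/dri
# (the Intel iGPU's DRM nodes) inside the container.
DRI_DEVICE = {
    "CgroupPermissions": "rwm",
    "PathInContainer": "/dev/dri",
    "PathOnHost": "/dev/dri",
}


def add_dri_device(config: dict) -> dict:
    """Return a copy of config with the /dev/dri mapping in "devices".

    Assumes a top-level "devices" list (created if absent) and does not
    add a second entry if /dev/dri is already mapped.
    """
    updated = dict(config)
    devices = list(updated.get("devices") or [])
    if not any(d.get("PathOnHost") == "/dev/dri" for d in devices):
        devices.append(dict(DRI_DEVICE))
    updated["devices"] = devices
    return updated


if __name__ == "__main__":
    # Hypothetical, heavily trimmed container settings.
    example = {"name": "jellyfin", "devices": []}
    print(json.dumps(add_dri_device(example), indent=2))
```

Returning a copy rather than editing in place keeps the original settings untouched, so the change can be diffed before it is applied.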

How to tell if iGPU is been used for HW transcoding DS918+ (Esxi)

scoobdriver replied to scoobdriver's topic in DSM 6.x

I have managed to validate this. To update: I installed Jellyfin in Docker and was able to observe that HW transcoding is being used, via the iGPU passed through from ESXi (Intel UHD Graphics 630 on an i5-8500T). Good news: a separate graphics card is not needed for HW transcoding! In Jellyfin I can select HW transcoding, which does not spike the CPU (with HW transcoding disabled I see 90% CPU).
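Before testing in Jellyfin, a quick check is whether the i915 driver has created DRM device nodes for the passed-through iGPU under `/dev/dri`. A minimal Python sketch; it only shows that the nodes exist and whether the current user can open them read/write, not that Jellyfin or Plex is actually transcoding on them (the CPU comparison above is the better test of that). The function name is hypothetical; `card*` and `renderD*` are the usual Linux DRM node names.

```python
import os
from pathlib import Path


def list_dri_nodes(dri_dir: str = "/dev/dri") -> dict[str, bool]:
    """Map each DRM node under dri_dir to whether it is read/write accessible.

    An empty result means no DRM device was found (e.g. i915 not loaded,
    or the iGPU not passed through to the VM).
    """
    base = Path(dri_dir)
    if not base.is_dir():
        return {}
    nodes = {}
    for node in sorted(base.iterdir()):
        # card* are primary nodes; renderD* are the render nodes that
        # VA-API/QSV transcoding typically opens. Other entries
        # (e.g. a by-path directory) are skipped.
        if node.name.startswith(("card", "renderD")):
            nodes[node.name] = os.access(node, os.R_OK | os.W_OK)
    return nodes


if __name__ == "__main__":
    found = list_dri_nodes()
    if not found:
        print("no /dev/dri nodes found")
    for name, rw in found.items():
        print(f"{name}: {'rw ok' if rw else 'no rw access'}")
```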

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

It sounds like you are overwriting your boot disk. Are you using VMware/ESXi? You need to select the SATA boot method from the GRUB menu. With those values you should assign the boot .img to SATA 0:0 and your data disk to 1:0. When you upload the .pat file / are on the installer screen, it should only see 1 disk (otherwise you are formatting/overwriting your boot .img, and on the next reboot you won't see the GRUB menu).

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

OK, we're at the same point then. The bonus with those values is that the data disk shows as Disk 1, not Disk 2. Edit: I think Jun's loader for ESXi also uses DiskIdxMap=1000 SataPortMap=4.

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

OK, I will try with 6.2.4. With 7.0 I get a message that the drive does not meet the requirements to create a pool/volume (it has been reported). My user_config.json is similar. Update: I can create a storage pool/volume on 6.2.4 DS3615xs, but not on 7.0 (7.0 says the drive does not meet requirements, so something changed there between 6.2.4 and 7.0).

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

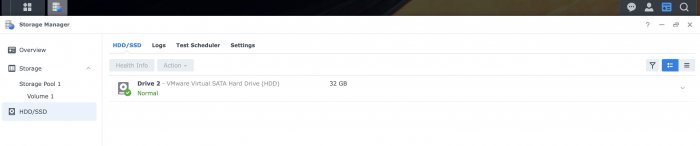

Is this with passthrough? I can't get DSM 7.0 Bromolow (DS3615xs) to create a storage pool/volume with a virtual SATA disk.

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

@haydibe Thanks for your work. Could you advise how I "clean up" the Docker tool? I'm running it on a small VM; it works great the first time, but if I try to generate a new image I run out of disk space (usage seems to grow every time I generate an image for the same architecture). Without removing everything and starting again, are there any locations I can remove files from?
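One generic way to see which locations grow between builds is to total file sizes per subdirectory and compare runs. A minimal Python sketch; it knows nothing about the layout of haydibe's tool, so the directory you point it at is an assumption, and it only reports sizes, it never deletes anything.

```python
import os
from pathlib import Path


def dir_size(path: Path) -> int:
    """Total size in bytes of regular files under path (symlinks not followed)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            fp = os.path.join(root, name)
            if not os.path.islink(fp):
                try:
                    total += os.path.getsize(fp)
                except OSError:
                    pass  # file vanished or is unreadable; skip it
    return total


def largest_subdirs(path: str, top: int = 10) -> list[tuple[str, int]]:
    """Return the `top` largest immediate subdirectories of path, biggest first."""
    base = Path(path)
    sizes = [
        (p.name, dir_size(p))
        for p in base.iterdir()
        if p.is_dir() and not p.is_symlink()
    ]
    sizes.sort(key=lambda item: item[1], reverse=True)
    return sizes[:top]


if __name__ == "__main__":
    import sys

    target = sys.argv[1] if len(sys.argv) > 1 else "."
    for name, size in largest_subdirs(target):
        print(f"{size / 2**20:10.1f} MiB  {name}")
```

Running it before and after generating an image shows which subdirectory grew. Images and build cache kept by Docker itself live in Docker's data root rather than in the tool's folder; `docker system df` summarizes that usage.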

RedPill - the new loader for 6.2.4 - Discussion

scoobdriver replied to ThorGroup's topic in Developer Discussion Room

Just add the args DiskIdxMap=1000 SataPortMap=4 to your boot parameters if you want to use a different SATA controller for the data disk in ESXi. I've done this on ESXi 6.7 with RedPill DSM 7 Bromolow: the data disk then shows up as Disk 1, and you don't see the synoboot disk. I added redpill.vmdk to SATA 0:0 and the data disk to 1:0. It images and boots (spoiler: I'm not able to create a volume with it, as it says incompatible).
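The two arguments can be read as per-controller lists. As they are commonly described in the XPEnology community (an assumption here, not stated in the post), SataPortMap has one decimal digit per SATA controller giving its port count, and DiskIdxMap has one two-character hex byte per controller giving the zero-based disk index of its first port. A minimal Python sketch under that reading; the function names are hypothetical. It shows why the data disk on SATA 1:0 lands on Disk 1, while the boot disk on SATA 0:0 is pushed to index 16, presumably beyond the slots the model displays, which would explain why synoboot is hidden.

```python
def parse_sata_port_map(value: str) -> list[int]:
    """One decimal digit per controller: number of ports to use (assumed format)."""
    return [int(ch) for ch in value]


def parse_disk_idx_map(value: str) -> list[int]:
    """One hex byte (two characters) per controller: first zero-based disk index."""
    if len(value) % 2:
        raise ValueError("DiskIdxMap must contain whole hex bytes")
    return [int(value[i:i + 2], 16) for i in range(0, len(value), 2)]


def dsm_disk_number(controller: int, port: int, idx_map: list[int]) -> int:
    """1-based disk number for a controller/port pair under the assumed mapping."""
    return idx_map[controller] + port + 1


if __name__ == "__main__":
    idx = parse_disk_idx_map("1000")    # [16, 0]
    ports = parse_sata_port_map("4")    # [4]
    print("ports per controller:", ports)
    # SATA 0:0 (redpill.vmdk): index 16, i.e. disk number 17
    print("boot disk:", dsm_disk_number(0, 0, idx))   # boot disk: 17
    # SATA 1:0 (data disk): Disk 1
    print("data disk:", dsm_disk_number(1, 0, idx))   # data disk: 1
```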