lokiki

Member

Posts: 31


lokiki's Achievements: Junior Member (2/7)

Reputation: 1

-

Thanks for your feedback. For a Proxmox migration, I can confirm that the simplest approach is to:
- keep the same VM
- replace the loader on the first disk (the one you boot from) with the new Redpill (tinycore-redpill.v0.9.2.7 at the time of writing)
- restart the VM and follow the instructions in the tutorial above (build the loader, then reboot to migrate)

Everything works perfectly; there is no need to create a new VM and migrate to it.

-

You're completely right. I've been tearing my hair out migrating my 2 HDDs to my new VM when all I needed to do was change the loader of the original VM. I'm running new tests along those lines.

-

Thank you, I can confirm it works perfectly. Now I need to figure out how to "migrate" the disks from my first VM, which is still on 7.0, to the new VM on 7.1.

-

Thanks again for all your advice. I tried the command line and it seems to work (the volume is rebuilding the RAID). I know it is super risky, but I could not back up all this data; it is really too big (and it is not critical data, so an HDD crash will not ruin my life). I will defrag all the volumes afterwards. Thanks again, @flyride, you have done a super job!

-

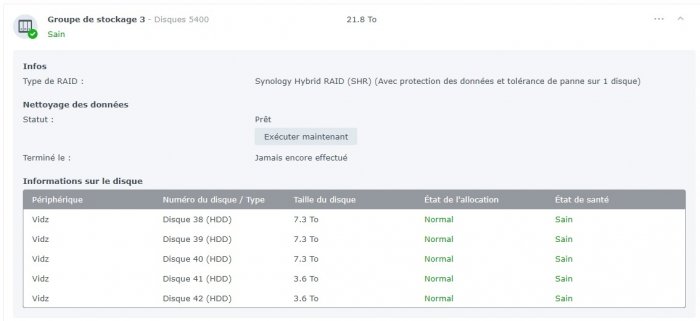

Additional info if needed: VM on ESXi (918+). The first volume is built on the VM's virtual disks (500 MB for the loader, plus 32 GB, 100 GB and 100 GB for DSM). The HDDs on the SAS controller hold volumes 2 and 3.

-

I used Automated Redpill Loader v0.4-alpha9 to build my loader. For the SataPortMap value: SataPortMap=1. And here is the output of the command line:

/dev/sdaf1 256 622815 622560 2.4G Linux RAID
/dev/sdaf2 622816 1147103 524288 2G Linux RAID
/dev/sdaf5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdag1 256 622815 622560 2.4G Linux RAID
/dev/sdag2 622816 1147103 524288 2G Linux RAID
/dev/sdag5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdah1 256 622815 622560 2.4G Linux RAID
/dev/sdah2 622816 1147103 524288 2G Linux RAID
/dev/sdah5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdai1 256 622815 622560 2.4G Linux RAID
/dev/sdai2 622816 1147103 524288 2G Linux RAID
/dev/sdai5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdaj1 256 622815 622560 2.4G Linux RAID
/dev/sdaj2 622816 1147103 524288 2G Linux RAID
/dev/sdaj5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdak1 256 622815 622560 2.4G Linux RAID
/dev/sdak2 622816 1147103 524288 2G Linux RAID
/dev/sdak5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdal1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdal2 4982528 9176831 4194304 2G Linux RAID
/dev/sdal5 9453280 7813830239 7804376960 3.6T Linux RAID
/dev/sdal6 7813846336 15627846239 7813999904 3.7T Linux RAID
/dev/sdam1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdam2 4982528 9176831 4194304 2G Linux RAID
/dev/sdam5 9453280 7813830239 7804376960 3.6T Linux RAID
/dev/sdam6 7813846336 15627846239 7813999904 3.7T Linux RAID
/dev/sdan1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdan2 4982528 9176831 4194304 2G Linux RAID
/dev/sdan5 9453280 7813830239 7804376960 3.6T Linux RAID
/dev/sdan6 7813846336 15627846239 7813999904 3.7T Linux RAID
/dev/sdao1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdao2 4982528 9176831 4194304 2G Linux RAID
/dev/sdao5 9453280 7813830239 7804376960 3.6T Linux RAID
/dev/sdap1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdap2 4982528 9176831 4194304 2G Linux RAID
/dev/sdap5 9453280 7813830239 7804376960 3.6T Linux RAID
/dev/sdb1 8192 16785407 16777216 8G fd Linux raid autodetect
/dev/sdb2 16785408 20979711 4194304 2G fd Linux raid autodetect
/dev/sdb3 21241856 67107423 45865568 21.9G f W95 Ext'd (LBA)
/dev/sdb5 21257952 66914655 45656704 21.8G fd Linux raid autodetect
/dev/sdb3p1 16096 45672799 45656704 21.8G fd Linux raid autodetect
/dev/sdc1 8192 16785407 16777216 8G fd Linux raid autodetect
/dev/sdc2 16785408 20979711 4194304 2G fd Linux raid autodetect
/dev/sdc3 21241856 209700351 188458496 89.9G f W95 Ext'd (LBA)
/dev/sdc5 21257952 66914655 45656704 21.8G fd Linux raid autodetect
/dev/sdc6 66930752 209523679 142592928 68G fd Linux raid autodetect
Failed to read extended partition table (offset=45684934): Invalid argument
/dev/sdc3p1 16096 45672799 45656704 21.8G fd Linux raid autodetect
/dev/sdc3p2 45684934 188281823 142596890 68G 5 Extended
/dev/sdd1 8192 16785407 16777216 8G fd Linux raid autodetect
/dev/sdd2 16785408 20979711 4194304 2G fd Linux raid autodetect
/dev/sdd3 21241856 209700351 188458496 89.9G f W95 Ext'd (LBA)
/dev/sdd5 21257952 66914655 45656704 21.8G fd Linux raid autodetect
/dev/sdd6 66930752 209523679 142592928 68G fd Linux raid autodetect
Failed to read extended partition table (offset=45684934): Invalid argument
/dev/sdd3p1 16096 45672799 45656704 21.8G fd Linux raid autodetect
/dev/sdd3p2 45684934 188281823 142596890 68G 5 Extended

Again, many thanks for your help. I understand what you are doing, but I'm afraid of typing a wrong command with disastrous consequences.
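Before running any `mdadm --add`, a listing like the one above can be cross-checked against /proc/mdstat to see which RAID partitions are not currently members of any array. Below is a minimal, read-only Python sketch of that cross-check, assuming the command output has been pasted in as text; the function names are hypothetical and only excerpts of each listing are reproduced. In this excerpt only sdaf's partitions come out unassigned, and sdaf5 has the same start, end and size as the active md3 members sdag5 to sdak5. That makes it a plausible candidate for md3's empty slot, not a confirmed one: `sudo mdadm --examine /dev/sdaf5` prints that partition's RAID superblock (including the array UUID) and is a read-only way to check it first.

```python
import re

# Excerpt of the `fdisk -l` output above (disks sdaf..sdak only).
FDISK = """\
/dev/sdaf1 256 622815 622560 2.4G Linux RAID
/dev/sdaf2 622816 1147103 524288 2G Linux RAID
/dev/sdaf5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdag1 256 622815 622560 2.4G Linux RAID
/dev/sdag2 622816 1147103 524288 2G Linux RAID
/dev/sdag5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdah1 256 622815 622560 2.4G Linux RAID
/dev/sdah2 622816 1147103 524288 2G Linux RAID
/dev/sdah5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdai1 256 622815 622560 2.4G Linux RAID
/dev/sdai2 622816 1147103 524288 2G Linux RAID
/dev/sdai5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdaj1 256 622815 622560 2.4G Linux RAID
/dev/sdaj2 622816 1147103 524288 2G Linux RAID
/dev/sdaj5 1181660 1953480779 1952299120 7.3T Linux RAID
/dev/sdak1 256 622815 622560 2.4G Linux RAID
/dev/sdak2 622816 1147103 524288 2G Linux RAID
/dev/sdak5 1181660 1953480779 1952299120 7.3T Linux RAID
"""

# Array header lines copied from the `cat /proc/mdstat` output posted in this thread.
MDSTAT = """\
md3 : active raid5 sdag5[1] sdak5[5] sdaj5[4] sdai5[3] sdah5[2]
md1 : active raid1 sdb2[0] sdc2[12] sdd2[11] sdap2[10] sdao2[9] sdan2[8] sdam2[7] sdal2[6] sdak2[5] sdaj2[4] sdai2[3] sdah2[2] sdag2[1]
md0 : active raid1 sdb1[0] sdc1[12] sdag1[11] sdah1[10] sdai1[9] sdaj1[8] sdak1[7] sdal1[6] sdam1[5] sdan1[4] sdao1[3] sdap1[2] sdd1[1]
"""


def raid_partitions(fdisk_text):
    """Names (e.g. 'sdaf5') of partitions whose type mentions RAID."""
    names = []
    for line in fdisk_text.splitlines():
        fields = line.split()
        if fields and fields[0].startswith("/dev/") and "raid" in line.lower():
            names.append(fields[0][len("/dev/"):])
    return names


def md_members(mdstat_text):
    """Map each mdN array to the member names listed on its header line."""
    arrays = {}
    for line in mdstat_text.splitlines():
        header = re.match(r"^(md\d+)\s*:(.*)$", line)
        if header:
            # Members look like 'sdag5[1]' (followed by '(F)' if marked faulty).
            arrays[header.group(1)] = re.findall(r"(\w+)\[\d+\]", header.group(2))
    return arrays


def unassigned_raid_partitions(fdisk_text, mdstat_text):
    """RAID-typed partitions that are not a member of any running array."""
    in_use = {m for members in md_members(mdstat_text).values() for m in members}
    return [p for p in raid_partitions(fdisk_text) if p not in in_use]


print(unassigned_raid_partitions(FDISK, MDSTAT))  # ['sdaf1', 'sdaf2', 'sdaf5']
```

The script only reads text, so it cannot change anything on the NAS; deciding what to add to md3 still depends on the superblock check.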

-

Thanks for your prompt reply! I know I should have a backup; unfortunately, I don't have enough space for one (24 TB to back up...).

Regarding "Does the system not offer the ability to repair the array in the GUI? If it does, that is preferred over manual intervention.": no, it shows the same message as above: it needs one more disk to repair (7.4 TB), and the main issue is that I don't have such a disk. Everything was fine before the migration; all HDDs were OK. Here is the output of your command:

admin@Vidz:~$ sudo fdisk -l /dev/sd? | grep "^/dev/"
/dev/sdb1 8192 16785407 16777216 8G fd Linux raid autodetect
/dev/sdb2 16785408 20979711 4194304 2G fd Linux raid autodetect
/dev/sdb3 21241856 67107423 45865568 21.9G f W95 Ext'd (LBA)
/dev/sdb5 21257952 66914655 45656704 21.8G fd Linux raid autodetect
/dev/sdc1 8192 16785407 16777216 8G fd Linux raid autodetect
/dev/sdc2 16785408 20979711 4194304 2G fd Linux raid autodetect
/dev/sdc3 21241856 209700351 188458496 89.9G f W95 Ext'd (LBA)
/dev/sdc5 21257952 66914655 45656704 21.8G fd Linux raid autodetect
/dev/sdc6 66930752 209523679 142592928 68G fd Linux raid autodetect
/dev/sdd1 8192 16785407 16777216 8G fd Linux raid autodetect
/dev/sdd2 16785408 20979711 4194304 2G fd Linux raid autodetect
/dev/sdd3 21241856 209700351 188458496 89.9G f W95 Ext'd (LBA)
/dev/sdd5 21257952 66914655 45656704 21.8G fd Linux raid autodetect
/dev/sdd6 66930752 209523679 142592928 68G fd Linux raid autodetect
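The listing above contains only sdb, sdc and sdd, none of the SAS disks (sdaf to sdap). That follows from the glob: in `/dev/sd?`, `?` matches exactly one character, so four-letter names such as `sdaf` are never passed to fdisk. Python's `fnmatch` module applies the same `?` rule, so it can illustrate this without touching any disk. The device list below is an excerpt and `matching` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# Device names taken from the listings in this thread (excerpt), plus one
# partition name to show what a wider pattern also picks up.
DEVICES = ["sdb", "sdb1", "sdc", "sdd", "sdaf", "sdag", "sdap"]


def matching(pattern):
    """Names a shell-style glob would match ('?' = exactly one character)."""
    return [d for d in DEVICES if fnmatchcase(d, pattern)]


print(matching("sd?"))   # ['sdb', 'sdc', 'sdd']
print(matching("sd??"))  # ['sdb1', 'sdaf', 'sdag', 'sdap']
```

A wider pattern such as `sd??` reaches the two-letter disks but also matches partition names like sdb1, so it is not a drop-in replacement for the original command.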

-

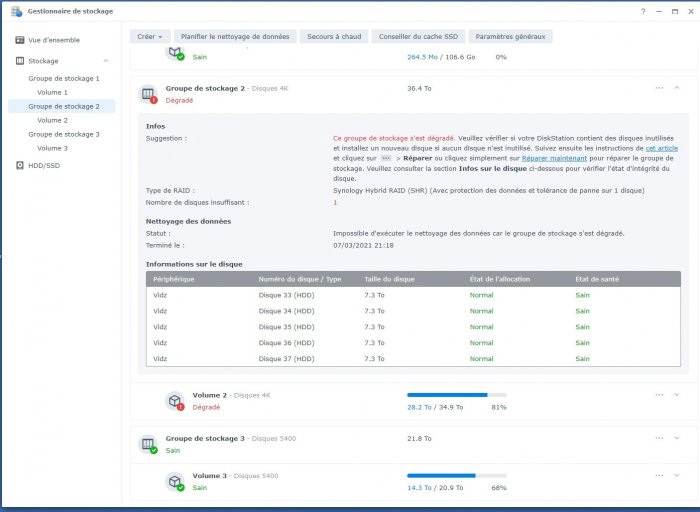

Hello, sorry to hijack this topic, but I have EXACTLY the same issue. I migrated my NAS from 6.2 to 7.1. I have a VM (ESXi) and a SAS controller with plenty of HDDs. The migration went fine, except for the error you describe, @Crunch. I typed the various commands listed here, except:

sudo mdadm --manage /dev/md3 -a /dev/sde6

because I don't think I have the same HDD in error. Please find below the output of the previous commands:

admin@Vidz:~$ cat /proc/mdstat
Personalities : [raid1] [raid6] [raid5] [raid4] [raidF1]
md3 : active raid5 sdag5[1] sdak5[5] sdaj5[4] sdai5[3] sdah5[2]
39045977280 blocks super 1.2 level 5, 64k chunk, algorithm 2 [6/5] [_UUUUU]
md4 : active raid5 sdal6[0] sdan6[2] sdam6[1]
7813997824 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]
md2 : active raid5 sdal5[0] sdap5[4] sdao5[3] sdan5[2] sdam5[1]
15608749824 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
md5 : active raid5 sdb5[0] sdd5[2] sdc5[1]
45654656 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]
md6 : active raid1 sdc6[0] sdd6[1]
71295424 blocks super 1.2 [2/2] [UU]
md1 : active raid1 sdb2[0] sdc2[12] sdd2[11] sdap2[10] sdao2[9] sdan2[8] sdam2[7] sdal2[6] sdak2[5] sdaj2[4] sdai2[3] sdah2[2] sdag2[1]
2097088 blocks [16/13] [UUUUUUUUUUUUU___]
md0 : active raid1 sdb1[0] sdc1[12] sdag1[11] sdah1[10] sdai1[9] sdaj1[8] sdak1[7] sdal1[6] sdam1[5] sdan1[4] sdao1[3] sdap1[2] sdd1[1]
2490176 blocks [16/13] [UUUUUUUUUUUUU___]

So I can see that md3 is in error: 5 HDDs OK and 1 HDD in error...
admin@Vidz:~$ sudo mdadm -D /dev/md3
/dev/md3:
Version : 1.2
Creation Time : Sun Nov 15 18:27:08 2020
Raid Level : raid5
Array Size : 39045977280 (37237.15 GiB 39983.08 GB)
Used Dev Size : 7809195456 (7447.43 GiB 7996.62 GB)
Raid Devices : 6
Total Devices : 5
Persistence : Superblock is persistent
Update Time : Sun Oct 2 14:23:45 2022
State : active, degraded
Active Devices : 5
Working Devices : 5
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 64K
Name : Vidz2:3
UUID : fddca54d:8b2c0bba:cde1b911:8a91e14d
Events : 6928
Number Major Minor RaidDevice State
- 0 0 0 removed
1 66 5 1 active sync /dev/sdag5
2 66 21 2 active sync /dev/sdah5
3 66 37 3 active sync /dev/sdai5
4 66 53 4 active sync /dev/sdaj5
5 66 69 5 active sync /dev/sdak5

But here I cannot tell which disk (sdxxx) I should put in the command below in place of sde6:

sudo mdadm --manage /dev/md3 -a /dev/sde6

My guess would be:

sudo mdadm --manage /dev/md3 -a /dev/sdaf5

but as I'm not sure, I don't want to break anything. I still have access to my files; the only thing displayed is the "Volume Degraded" message. Huge thanks in advance for your help, @flyride!
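In the mdstat output, `[6/5] [_UUUUU]` on md3 means six member slots are expected and five are active, and the underscore in first position marks slot 0 as empty. That agrees with `mdadm -D`, which lists RaidDevice 0 as "removed"; neither output says which disk used to fill that slot. Below is a minimal Python sketch (the function name is hypothetical) that reads these status lines from pasted mdstat text and reports arrays with empty slots.

```python
import re

# Part of the `cat /proc/mdstat` output posted above (md5, md1 and md0 omitted).
MDSTAT = """\
Personalities : [raid1] [raid6] [raid5] [raid4] [raidF1]
md3 : active raid5 sdag5[1] sdak5[5] sdaj5[4] sdai5[3] sdah5[2]
      39045977280 blocks super 1.2 level 5, 64k chunk, algorithm 2 [6/5] [_UUUUU]
md4 : active raid5 sdal6[0] sdan6[2] sdam6[1]
      7813997824 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]
md2 : active raid5 sdal5[0] sdap5[4] sdao5[3] sdan5[2] sdam5[1]
      15608749824 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
md6 : active raid1 sdc6[0] sdd6[1]
      71295424 blocks super 1.2 [2/2] [UU]
"""


def array_health(mdstat_text):
    """Return {array: (expected_devices, active_devices, empty_slots)}."""
    health = {}
    current = None
    for line in mdstat_text.splitlines():
        header = re.match(r"^(md\d+)\s*:", line)
        if header:
            current = header.group(1)
            continue
        # Status such as '[6/5] [_UUUUU]': 'U' is an active slot, '_' an empty one.
        status = re.search(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]", line)
        if current and status:
            slots = status.group(3)
            empty = [i for i, s in enumerate(slots) if s == "_"]
            health[current] = (int(status.group(1)), int(status.group(2)), empty)
            current = None
    return health


for name, (expected, active, empty) in array_health(MDSTAT).items():
    if active < expected:
        print(f"{name}: {active}/{expected} active, empty slot(s): {empty}")
# prints: md3: 5/6 active, empty slot(s): [0]
```

This only locates the empty slot; matching it to a physical partition still needs the partition listing and a superblock check.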

-

DSM 7.0 bugs under VMware Workstation

lokiki replied to rodrigue7973's topic in Installation Virtuelle

Hello, sorry, but on my end I didn't understand anything. You're talking about a bug? But what is the result of this bug? Does your DSM reboot on its own? Can you confirm that you don't have the bug on DSM 6.2.4-25556? In short, it's not clear at all (to me). Please share a bit more information.

-

[Tuto] DSM 7 for Proxmox in 8 minutes (Update DSM-7.1.1)

lokiki replied to Sabrina's topic in Installation Virtuelle

Hello, quick feedback to confirm that it works perfectly with the DS3615 image! Thanks again, @Sabrina.

-

[Tuto] DSM 7 for Proxmox in 8 minutes (Update DSM-7.1.1)

lokiki replied to Sabrina's topic in Installation Virtuelle

Great, many thanks for your help (and your quick response!). Actually, I hadn't seen your first tutorial, which was also very clear in showing how to build a loader via Proxmox. I'll test all of this! Thanks again for your help.

-

[Tuto] DSM 7 for Proxmox in 8 minutes (Update DSM-7.1.1)

lokiki replied to Sabrina's topic in Installation Virtuelle

Hello, first of all, thanks for this tutorial; it is quite clear. The only problem I have is that it is made for a 918+ base. I'm on a GEN8, which is therefore not compatible with this 918+ version. I racked my brains trying to figure out why it wasn't working, and I had overlooked this important detail. Would it be possible to get this image, redpill-DS918+_7.0.1-42218, but in a DS3615 or DS3617 version? Thanks in advance!

-

Many thanks for your great help!

-

Hello, does it work with 6.2.3 25426-3? I can't see this version in the .sh, nor when I try to activate the .sh. Thanks.