gizmomelb

Member-

Posts

22 -



-

Is yours the Intel or the ARM version of the F5-221, please?

-

Cannot expand volume on XPEnology DSM 5.2-5967

gizmomelb replied to gizmomelb's question in General Questions

Thank you for the detailed info. Yes, that is the process I followed when updating my drives. I replaced the failed 4TB with a 10TB because two of my 4TB drives failed (WD Purples, each with 22K hours of use) and I had only one 4TB replacement drive (a Seagate... yeah, desperate times). I didn't want to run the NAS with a degraded volume, so I moved some files around, shucked a lightly used 10TB external drive I had, and installed that in the NAS. The only HDD I could purchase was a 6TB WD, which I then found out was SMR and not CMR, so I'll be returning it: from what I've read, it would cause too many issues and most likely die early in its lifespan.

I know a backup would be best, but I don't have the storage space for one (the data is non-essential, but re-ripping all my DVDs, CDs, Blu-rays etc. would be a pain and at least another few months of work). If it's possible to expand the rebuilt 4TB partition to the full 10TB capacity of the replacement drive, it'd be a nice win. But also many, many thanks for sharing your time and knowledge to help me out, and for teaching me a little more about how LVM handles LVs and VGs on top of mdadm.

-

Good news!! Yes, I rebooted and the volume mounts and my data is there (whether it is intact is another thing, but it should be!). I don't know if this is too early, but thank you so much for your help recovering the volume.

-

    GIZNAS01> vgcfgrestore vg1000
      Restored volume group vg1000
    GIZNAS01> lvm vgscan
      Reading all physical volumes. This may take a while...
      Found volume group "vg1000" using metadata type lvm2
    GIZNAS01> lvm lvscan
      inactive '/dev/vg1000/lv' [25.45 TB] inherit
    GIZNAS01> pvs
      PV        VG      Fmt   Attr  PSize   PFree
      /dev/md2  vg1000  lvm2  a-    13.62T  0
      /dev/md3  vg1000  lvm2  a-     4.55T  0
      /dev/md4  vg1000  lvm2  a-     7.28T  0
    GIZNAS01> vgs
      VG      #PV  #LV  #SN  Attr    VSize   VFree
      vg1000    3    1    0  wz--n-  25.45T  0
    GIZNAS01> lvs
      LV  VG      Attr    LSize   Origin  Snap%  Move  Log  Copy%  Convert
      lv  vg1000  -wi---  25.45T
    GIZNAS01> vgchange -ay vg1000
      1 logical volume(s) in volume group "vg1000" now active
    GIZNAS01> pvdisplay
      --- Physical volume ---
      PV Name               /dev/md2
      VG Name               vg1000
      PV Size               13.62 TB / not usable 320.00 KB
      Allocatable           yes (but full)
      PE Size (KByte)       4096
      Total PE              3571088
      Free PE               0
      Allocated PE          3571088
      PV UUID               HO2fWh-RA9j-oqGv-kiCI-z9pY-41R1-9IJbgt

      --- Physical volume ---
      PV Name               /dev/md3
      VG Name               vg1000
      PV Size               4.55 TB / not usable 3.94 MB
      Allocatable           yes (but full)
      PE Size (KByte)       4096
      Total PE              1192312
      Free PE               0
      Allocated PE          1192312
      PV UUID               nodvRa-r0cq-NjRs-eW9K-xEuO-YPUT-t7NHbP

      --- Physical volume ---
      PV Name               /dev/md4
      VG Name               vg1000
      PV Size               7.28 TB / not usable 3.88 MB
      Allocatable           yes (but full)
      PE Size (KByte)       4096
      Total PE              1907190
      Free PE               0
      Allocated PE          1907190
      PV UUID               8qIF1n-Wf00-CD79-M3M5-Dx5l-Lsx2-HZ9qle
    GIZNAS01> vgdisplay
      --- Volume group ---
      VG Name               vg1000
      System ID
      Format                lvm2
      Metadata Areas        3
      Metadata Sequence No  23
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                1
      Open LV               0
      Max PV                0
      Cur PV                3
      Act PV                3
      VG Size               25.45 TB
      PE Size               4.00 MB
      Total PE              6670590
      Alloc PE / Size       6670590 / 25.45 TB
      Free PE / Size        0 / 0
      VG UUID               BTXwFZ-flEc-6vgu-qTpk-82eW-k6wQ-NNha6o
    GIZNAS01> lvdisplay
      --- Logical volume ---
      LV Name                /dev/vg1000/lv
      VG Name                vg1000
      LV UUID                6IB6tO-tatM-LsAF-1ll1-yOnx-V2D1-KkFuIG
      LV Write Access        read/write
      LV Status              available
      # open                 0
      LV Size                25.45 TB
      Current LE             6670590
      Segments               3
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     384
      Block device           253:0
    GIZNAS01> cat /etc/fstab
    none /proc proc defaults 0 0
    /dev/root / ext4 defaults 1 1
    /dev/vg1000/lv /volume1 ext4 0 0
    GIZNAS01>

Seems to be getting closer.
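A quick sanity check on the restored metadata, as a minimal sketch: the three per-PV "Total PE" counts from the pvdisplay output above should add up to the VG's "Total PE", and that count times the 4 MB extent size should give the reported 25.45 TB. The helper names `sum_pe` and `pe_to_tib` are made up for illustration, and the conversion assumes LVM's "TB" here means TiB.

```shell
#!/bin/sh
# Hypothetical helpers (not part of LVM or DSM) to cross-check extent counts.

# Add up any number of physical-extent counts.
sum_pe() {
    total=0
    for pe in "$@"; do
        total=$((total + pe))
    done
    echo "$total"
}

# Convert a count of 4 MiB extents to TiB, rounded to two decimals.
pe_to_tib() {
    awk -v pe="$1" 'BEGIN { printf "%.2f\n", pe * 4 / 1024 / 1024 }'
}

# Values copied from the pvdisplay output for md2, md3 and md4.
sum_pe 3571088 1192312 1907190   # compare with vgdisplay "Total PE"
pe_to_tib 6670590                # compare with vgdisplay "VG Size"
```

If the sum and the size both agree with vgdisplay, the restored VG at least accounts for every extent on all three arrays; it says nothing about the state of the filesystem on top.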

-

Where is the automatic backup, and how do I restore it, please? Thank you.

-

    GIZNAS01> lvm vgscan
      Reading all physical volumes. This may take a while...
    GIZNAS01> lvm lvscan
    GIZNAS01>

Nothing.

-

Ahh, I just found a screenshot I made last night. It doesn't appear to be destructive though, just testing the filesystem for errors. This is what I had typed:

    syno_poweroff_task -d
    vgchange -ay
    fsck.ext4 -pvf -C 0 /dev/vg1000/lv

Then I executed these commands:

    vgchange -an vg1000
    sync
    init 6

That looks to be it. I deactivated the VG, which explains why there isn't a VG when I execute vgs or vgdisplay etc. To re-activate the VG I need to execute 'vgchange -ay vg1000', but I'll wait until that is confirmed. Thank you.

-

Hi Flyride, thank you for continuing to assist me. It's been a busy day, but I will make the time to read up more on how mdadm works (it makes a little sense to me already). I think the damage I caused was in step 10 or 11 as detailed here:

9. Inform lvm that the physical device got bigger.

    $ sudo pvresize /dev/md2
      Physical volume "/dev/md2" changed
      1 physical volume(s) resized / 0 physical volume(s) not resized

If you re-run vgdisplay now, you should see some free space.

10. Extend the lv

    $ sudo lvextend -l +100%FREE /dev/vg1/volume_1
      Size of logical volume vg1/volume_1 changed from 15.39 GiB (3939 extents) to 20.48 GiB (5244 extents).
      Logical volume volume_1 successfully resized.

11. Finally, extend the filesystem (this is for ext4, there is a different command for btrfs)

    $ sudo resize2fs -f /dev/vg1/volume_1

I was trying to extend the partition for sdc (the replacement 10TB drive for the original 4TB drive), as DSM didn't automatically resize the new HDD's partition after the rebuild finished.

As requested:

    lvm pvscan
      PV /dev/md2   lvm2 [13.62 TB]
      PV /dev/md3   lvm2 [4.55 TB]
      PV /dev/md4   lvm2 [7.28 TB]
      Total: 3 [25.45 TB] / in use: 0 [0 ] / in no VG: 3 [25.45 TB]

Thank you.
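The pvscan summary above is worth reading closely: "in use: 0" and "in no VG: 3" indicate that LVM still sees the three physical volumes but no longer associates them with any volume group, which is a different situation from a VG that is merely deactivated. As a rough sketch, the count can be pulled out of that summary line like this. The function name `orphan_pv_count` is invented for illustration, and the pattern assumes the summary wording shown in this thread.

```shell
#!/bin/sh
# Hypothetical helper: read a pvscan summary line on stdin and print the
# number of PVs that LVM reports as belonging to no volume group.
orphan_pv_count() {
    sed -n 's/.*in no VG: \([0-9][0-9]*\).*/\1/p'
}

# Summary line copied from the pvscan output in the post.
summary='Total: 3 [25.45 TB] / in use: 0 [0 ] / in no VG: 3 [25.45 TB]'
printf '%s\n' "$summary" | orphan_pv_count
```

On a live system the same function could be fed from `lvm pvscan 2>/dev/null | orphan_pv_count`; a non-zero result would suggest looking at the VG metadata rather than just running `vgchange -ay`.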

-

Hi, yes, that was my post about expanding the volume before the volume crashed. I tried expanding the volume following your instructions here: My apologies for not saying earlier that this was DSM 5.2. I don't know what I need to post, and that is why I was asking for assistance. I was looking at similar issues across many forums, but most solutions involved earlier versions of DSM with resize being available.

-

Hi, yes, I am not a *nix expert. I ran the vgchange -ay command and it literally does nothing: it displays nothing and goes to a new command line. I tried vgscan but I do not have that command.

OK, maybe I'm going in the wrong direction (please tell me if I am), but looking at this thread - https://community.synology.com/enu/forum/17/post/84956 - the array seems to still be intact but has a size of '0'.

    fdisk -l
    fdisk: device has more than 2^32 sectors, can't use all of them

    Disk /dev/sda: 2199.0 GB, 2199023255040 bytes
    255 heads, 63 sectors/track, 267349 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes

       Device Boot      Start         End      Blocks  Id System
    /dev/sda1               1      267350  2147483647+ ee EFI GPT
    fdisk: device has more than 2^32 sectors, can't use all of them

    Disk /dev/sdb: 2199.0 GB, 2199023255040 bytes
    255 heads, 63 sectors/track, 267349 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes

       Device Boot      Start         End      Blocks  Id System
    /dev/sdb1               1      267350  2147483647+ ee EFI GPT
    fdisk: device has more than 2^32 sectors, can't use all of them

    Disk /dev/sdc: 2199.0 GB, 2199023255040 bytes
    255 heads, 63 sectors/track, 267349 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes

       Device Boot      Start         End      Blocks  Id System
    /dev/sdc1               1      267350  2147483647+ ee EFI GPT
    fdisk: device has more than 2^32 sectors, can't use all of them

    Disk /dev/sdd: 2199.0 GB, 2199023255040 bytes
    255 heads, 63 sectors/track, 267349 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes

       Device Boot      Start         End      Blocks  Id System
    /dev/sdd1               1      267350  2147483647+ ee EFI GPT
    fdisk: device has more than 2^32 sectors, can't use all of them

    Disk /dev/sde: 2199.0 GB, 2199023255040 bytes
    255 heads, 63 sectors/track, 267349 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes

       Device Boot      Start         End      Blocks  Id System
    /dev/sde1               1      267350  2147483647+ ee EFI GPT
    fdisk: device has more than 2^32 sectors, can't use all of them

    Disk /dev/sdf: 2199.0 GB, 2199023255040 bytes
    255 heads, 63 sectors/track, 267349 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes

       Device Boot      Start         End      Blocks  Id System
    /dev/sdf1               1      267350  2147483647+ ee EFI GPT

    Disk /dev/synoboot: 8382 MB, 8382316544 bytes
    4 heads, 32 sectors/track, 127904 cylinders
    Units = cylinders of 128 * 512 = 65536 bytes

            Device Boot      Start         End      Blocks  Id System
    /dev/synoboot1   *           1         384       24544+  e Win95 FAT16 (LBA)

    cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md2 : active raid5 sda5[11] sde5[6] sdf5[7] sdd5[8] sdc5[9] sdb5[10]
          14627177280 blocks super 1.2 level 5, 64k chunk, algorithm 2 [6/6] [UUUUUU]
    md3 : active raid5 sdf6[9] sda6[6] sdb6[7] sdc6[10] sdd6[2] sde6[8]
          4883714560 blocks super 1.2 level 5, 64k chunk, algorithm 2 [6/6] [UUUUUU]
    md4 : active raid1 sda7[0] sdb7[1]
          7811854208 blocks super 1.2 [2/2] [UU]
    md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3] sde2[4] sdf2[5]
          2097088 blocks [12/6] [UUUUUU______]
    md0 : active raid1 sda1[0] sdb1[1] sdc1[5] sdd1[3] sde1[2] sdf1[4]
          2490176 blocks [12/6] [UUUUUU______]
    unused devices: <none>

    mdadm --detail /dev/md2
    /dev/md2:
            Version : 1.2
      Creation Time : Sat Aug 6 21:35:22 2016
         Raid Level : raid5
         Array Size : 14627177280 (13949.56 GiB 14978.23 GB)
      Used Dev Size : 2925435456 (2789.91 GiB 2995.65 GB)
       Raid Devices : 6
      Total Devices : 6
        Persistence : Superblock is persistent

        Update Time : Fri Jun 4 12:39:36 2021
              State : clean
     Active Devices : 6
    Working Devices : 6
     Failed Devices : 0
      Spare Devices : 0

             Layout : left-symmetric
         Chunk Size : 64K

               Name : DiskStation:2
               UUID : dc74ec9b:f2e2c601:4cf78ff1:38dc0feb
             Events : 1211707

        Number   Major   Minor   RaidDevice State
          11       8        5        0      active sync   /dev/sda5
          10       8       21        1      active sync   /dev/sdb5
           9       8       37        2      active sync   /dev/sdc5
           8       8       53        3      active sync   /dev/sdd5
           7       8       85        4      active sync   /dev/sdf5
           6       8       69        5      active sync   /dev/sde5

    mdadm --detail /dev/md3
    /dev/md3:
            Version : 1.2
      Creation Time : Mon Jul 23 12:51:46 2018
         Raid Level : raid5
         Array Size : 4883714560 (4657.47 GiB 5000.92 GB)
      Used Dev Size : 976742912 (931.49 GiB 1000.18 GB)
       Raid Devices : 6
      Total Devices : 6
        Persistence : Superblock is persistent

        Update Time : Fri Jun 4 12:40:26 2021
              State : clean
     Active Devices : 6
    Working Devices : 6
     Failed Devices : 0
      Spare Devices : 0

             Layout : left-symmetric
         Chunk Size : 64K

               Name : GIZNAS01:3 (local to host GIZNAS01)
               UUID : 9717fe13:d84d4533:3a7153e2:17f9a9d0
             Events : 434333

        Number   Major   Minor   RaidDevice State
           9       8       86        0      active sync   /dev/sdf6
           8       8       70        1      active sync   /dev/sde6
           2       8       54        2      active sync   /dev/sdd6
          10       8       38        3      active sync   /dev/sdc6
           7       8       22        4      active sync   /dev/sdb6
           6       8        6        5      active sync   /dev/sda6

    mdadm --detail /dev/md4
    /dev/md4:
            Version : 1.2
      Creation Time : Tue Dec 15 10:41:07 2020
         Raid Level : raid1
         Array Size : 7811854208 (7449.96 GiB 7999.34 GB)
      Used Dev Size : 7811854208 (7449.96 GiB 7999.34 GB)
       Raid Devices : 2
      Total Devices : 2
        Persistence : Superblock is persistent

        Update Time : Fri Jun 4 12:40:31 2021
              State : clean
     Active Devices : 2
    Working Devices : 2
     Failed Devices : 0
      Spare Devices : 0

               Name : GIZNAS01:4 (local to host GIZNAS01)
               UUID : 65bfef18:22c472bd:99421137:9958e284
             Events : 4

        Number   Major   Minor   RaidDevice State
           0       8        7        0      active sync   /dev/sda7
           1       8       23        1      active sync   /dev/sdb7

If I am missing the obvious, please let me know, as it is not obvious to me, sorry. Thank you for your help and time.
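One way to convince yourself the md arrays really are intact is to check that the reported sizes are self-consistent. For RAID 5, usable capacity is (number of devices - 1) times the per-device size, so "Array Size" in the mdadm --detail output above should equal 5 times "Used Dev Size" for md2 and md3. A minimal sketch, with `raid5_kib` as an invented helper name, and assuming the shell does 64-bit arithmetic (bash and dash do):

```shell
#!/bin/sh
# Hypothetical helper: usable RAID 5 capacity in KiB.
#   $1 = number of RAID devices, $2 = "Used Dev Size" in KiB
raid5_kib() {
    echo $(( ($1 - 1) * $2 ))
}

# md2: 6 devices, Used Dev Size 2925435456 -> compare with Array Size 14627177280
raid5_kib 6 2925435456

# md3: 6 devices, Used Dev Size 976742912 -> compare with Array Size 4883714560
raid5_kib 6 976742912
```

md4 is RAID 1, so its Array Size and Used Dev Size are simply equal, as the output shows. Matching numbers here point to the problem being in the LVM layer above the arrays, not in the arrays themselves.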

-

Hi Flyride, vgchange -ay displays nothing, it just goes to the next command line. I followed all the steps in the thread you mentioned and posted the results for the questions asked in that thread as well.

    mount /dev/vg1000/lv /volume1
    mount: open failed, msg:No such file or directory
    mount: mounting /dev/vg1000/lv on /volume1 failed: No such device

    mount -o clear_cache /dev/vg1000/lv /volume1
    mount: open failed, msg:No such file or directory
    mount: mounting /dev/vg1000/lv on /volume1 failed: No such device

    mount -o recovery /dev/vg1000/lv /volume1
    mount: open failed, msg:No such file or directory
    mount: mounting /dev/vg1000/lv on /volume1 failed: No such device

    fsck.ext4 -v /dev/vg1000/lv
    e2fsck 1.42.6 (21-Sep-2012)
    fsck.ext4: No such file or directory while trying to open /dev/vg1000/lv
    Possibly non-existent device?

Looks like I need to rebuild the volume group?

-

Hi Flyride, I know I definitely have an ext4 filesystem, and if I SSH in I have no volumes listed. I do not have the 'dump' command.

vi /etc/fstab:

    none /proc proc defaults 0 0
    /dev/root / ext4 defaults 1 1
    /dev/vg1000/lv /volume1 ext4 0 0

    cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md3 : active raid5 sdf6[9] sda6[6] sdb6[7] sdc6[10] sdd6[2] sde6[8]
          4883714560 blocks super 1.2 level 5, 64k chunk, algorithm 2 [6/6] [UUUUUU]
    md4 : active raid1 sda7[0] sdb7[1]
          7811854208 blocks super 1.2 [2/2] [UU]
    md2 : active raid5 sda5[11] sde5[6] sdf5[7] sdd5[8] sdc5[9] sdb5[10]
          14627177280 blocks super 1.2 level 5, 64k chunk, algorithm 2 [6/6] [UUUUUU]
    md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3] sde2[4] sdf2[5]
          2097088 blocks [12/6] [UUUUUU______]
    md0 : active raid1 sda1[0] sdb1[1] sdc1[5] sdd1[3] sde1[2] sdf1[4]
          2490176 blocks [12/6] [UUUUUU______]
    unused devices: <none>

vgdisplay shows absolutely nothing. I hope this helps? Thank you.

-

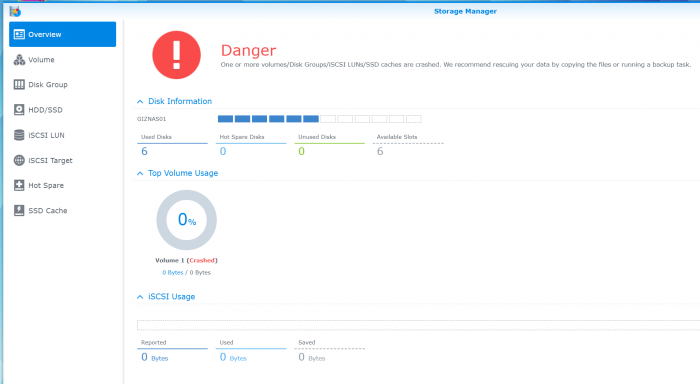

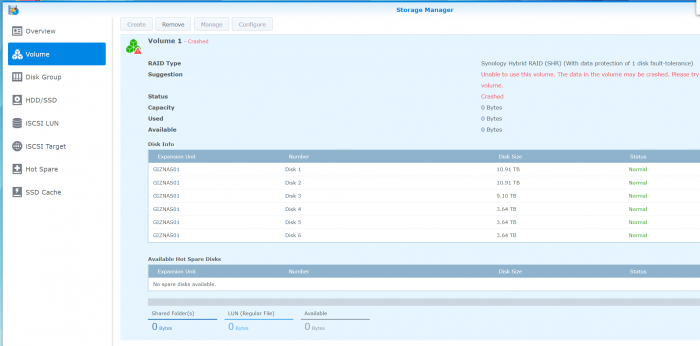

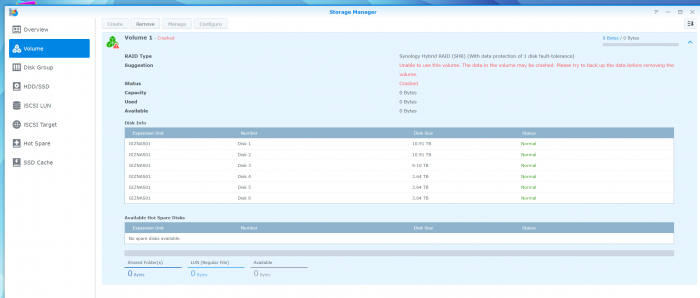

Sigh... my NAS is now reporting a crashed volume. If I run 'cat /proc/mdstat' it reports:

    cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md3 : active raid5 sdf6[9] sda6[6] sdb6[7] sdc6[10] sdd6[2] sde6[8]
          4883714560 blocks super 1.2 level 5, 64k chunk, algorithm 2 [6/6] [UUUUUU]
    md4 : active raid1 sda7[0] sdb7[1]
          7811854208 blocks super 1.2 [2/2] [UU]
    md2 : active raid5 sda5[11] sde5[6] sdf5[7] sdd5[8] sdc5[9] sdb5[10]
          14627177280 blocks super 1.2 level 5, 64k chunk, algorithm 2 [6/6] [UUUUUU]
    md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3] sde2[4] sdf2[5]
          2097088 blocks [12/6] [UUUUUU______]
    md0 : active raid1 sda1[0] sdb1[1] sdc1[5] sdd1[3] sde1[2] sdf1[4]
          2490176 blocks [12/6] [UUUUUU______]
    unused devices: <none>

Any assistance, or suggestions of articles to read, would be most appreciated. Thank you.
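For anyone reading the mdstat output above: the bracketed string of U and _ characters at the end of each array's second line is the per-slot health map, where U is an active member and _ is a missing one. A small sketch that pulls out just the array name and that map. `md_status` is a made-up name, and the parsing assumes the usual two-line-per-array layout of /proc/mdstat.

```shell
#!/bin/sh
# Hypothetical helper: read mdstat-format text on stdin and print
# "<array> <health map>" for every array that has one.
md_status() {
    awk '
        /^md[0-9]+ :/ { name = $1 }                 # remember the current array
        match($0, /\[[U_]+\]/) {                    # e.g. [UUUUUU] or [UU____]
            print name, substr($0, RSTART, RLENGTH)
        }
    '
}

# Usage on a live system:
#   md_status < /proc/mdstat
```

Applied to the output in this post, the data arrays md2, md3 and md4 show only U characters. md0 and md1 show `[12/6] [UUUUUU______]`, meaning 12 configured slots with 6 active; on this six-bay setup that looks like it reflects how DSM sizes its system arrays and not six failed disks, though that reading is an assumption on my part.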

-

Hi all, an old issue, but so far my google-fu has not been able to resolve it (some of the web pages and links mentioned no longer exist, which does not help).

I recently replaced a dead 4TB drive with a 10TB drive, and after the volume was repaired it would not let me expand the volume. The array is a multi-disk SHR affair.

If I SSH to the NAS and execute 'print devices', the results are:

    print devices
    /dev/hda (12.0TB)
    /dev/sda (12.0TB)
    /dev/sdb (12.0TB)
    /dev/sdc (10.0TB)   <- the new HDD
    /dev/sdd (4001GB)
    /dev/sde (4001GB)
    /dev/sdf (4001GB)
    /dev/md0 (2550MB)
    /dev/md1 (2147MB)
    /dev/md2 (15.0TB)
    /dev/md3 (5001GB)
    /dev/md4 (7999GB)
    /dev/zram0 (591MB)
    /dev/zram1 (591MB)
    /dev/synoboot (8382MB)

Total storage is 25.1TB, but should expand to 31.something TB, I guess.

My parted doesn't support 'resizedisk' as it is version 3.1.

The LVM volume group is vg1000.

    mdadm --detail /dev/md2 | fgrep /dev/
    /dev/md2:
          11       8        5        0      active sync   /dev/sda5
          10       8       21        1      active sync   /dev/sdb5
           9       8       37        2      active sync   /dev/sdc5
           8       8       53        3      active sync   /dev/sdd5
           7       8       85        4      active sync   /dev/sdf5
           6       8       69        5      active sync   /dev/sde5

If I try the following, I cannot expand the partition because of the 'has no superblock' message. I've also tried it with /md2 and /md4.

    01> syno_poweroff_task -d
    01> mdadm -S /dev/md3
    mdadm: stopped /dev/md3
    01> mdadm -A /dev/md3 -U devicesize /dev/sdc
    mdadm: cannot open device /dev/sdc: Device or resource busy
    mdadm: /dev/sdc has no superblock - assembly aborted

Can anyone please assist me with how I can expand the partition on sdc from 4TB to 10TB? Or would booting Ubuntu from a USB stick and doing it with gparted be the easiest / quickest way, instead of trying to do it locally over SSH?

Thank you.
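A note on the 'has no superblock' message, offered as a likely explanation and not a confirmed diagnosis: the md superblocks live on the member partitions (for example /dev/sdc5 for md2), not on the whole disk /dev/sdc, so mdadm would not find one when pointed at the bare disk. The sketch below lists the member partitions of one array from mdstat-format text, which is a quick way to see which device names an array is actually built from. `md_members` is an invented helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the member devices of one md array.
#   $1 = array name (e.g. md3); mdstat-format text is read from stdin.
md_members() {
    awk -v md="$1" '
        $1 == md && $2 == ":" {
            for (i = 3; i <= NF; i++) {
                if ($i ~ /\[[0-9]+\]/) {        # fields like sdc6[10]
                    sub(/\[.*/, "", $i)         # strip the [slot] suffix
                    print "/dev/" $i
                }
            }
        }
    '
}

# Usage on a live system:
#   md_members md3 < /proc/mdstat
```

With the /proc/mdstat contents posted elsewhere in this thread, md3 is made of the sdX6 partitions, so the member on the new drive would be /dev/sdc6 and not /dev/sdc.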