Leaderboard

Popular Content

Showing content with the highest reputation on 09/24/2021 in all areas

-

Hello,

With @haydibe's help and validation, here is redpill_tool_chain v0.11: redpill-tool-chain_x86_64_v0.11.zip

New feature:
- Supports binding a local redpill-lkm folder into the container (set `"docker.local_rp_lkm_use": "true"` and set `"docker.local_rp_lkm_path": "path/to/rp-lkm"`)

Thanks @haydibe.

Tested with 6.2.4 and 7.0, both on bromolow. I can't test DS918+ as I don't have a suitable CPU, but it should be OK.

3 points
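A minimal sketch of flipping those two settings from a script. It assumes the dotted names map onto nested objects in the toolchain's JSON config; that layout, the helper name `set_dotted`, and the starting dictionary are assumptions for illustration, not taken from the toolchain itself.

```python
import json


def set_dotted(config: dict, dotted_key: str, value) -> dict:
    """Set config["a"]["b"] = value for a dotted key "a.b", creating levels as needed."""
    node = config
    *parents, leaf = dotted_key.split(".")
    for part in parents:
        node = node.setdefault(part, {})
    node[leaf] = value
    return config


# Hypothetical starting config; the real file contains many more keys.
config = {"docker": {"local_rp_lkm_use": "false"}}
set_dotted(config, "docker.local_rp_lkm_use", "true")
set_dotted(config, "docker.local_rp_lkm_path", "path/to/rp-lkm")
print(json.dumps(config, indent=4))
```

The values stay strings (`"true"`, not `true`) because that is how the post quotes them.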

-

NVMe = PCIe. They are different form factors for the same interface type, so they follow the same rules for ESXi and DSM as an onboard NVMe slot. I use two of them on my NAS to drive enterprise NVMe disks, then RDM them into my VM for an extremely high-performance volume. I also use an NVMe slot on the motherboard to run the ESXi datastore and scratch.

2 points

-

Of course, thanks for pointing both of those out! I've not had enough coffee today and am still not getting my head around virtualising DSM 😁

2 points

-

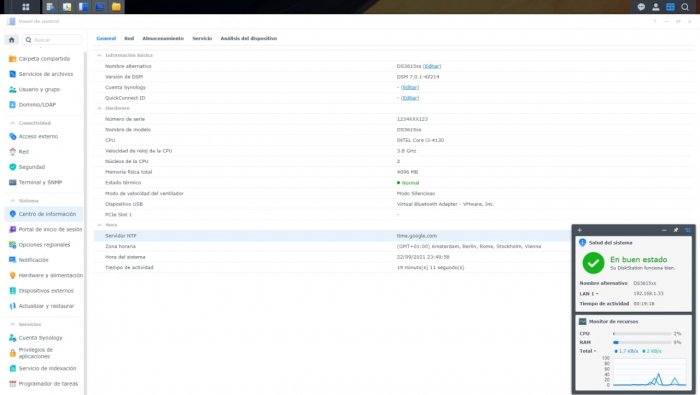

Update time! 💊

This week the updates are going to be less exciting but nonetheless very important, and the first point explains that in more detail.

THE BUILD HAS CHANGED - READ ME

We're putting this first and in red so people see it. The LKM build now requires a version to be specified. We know this will break the @haydibe toolchain, but we're sure he will fix it quickly as this is a small change. For the reasons why, read further. Also, if you used a hack to "fix" the Info Center on 3615xs, make sure to read the "Hardware monitoring emulation" section carefully.

A roadmap

Let's first address why this week is less exciting: we spent most of the time testing various scenarios and tracking hidden-yet-missed bugs. This is most likely the second-to-last round of updates before we release a beta version. The beta version can be used for day-to-day testing and should be free of dangerous crashes (ok, maybe except that strange kernel lockup - we're looking into it). In the beta some features may still be missing, but overall we're already testing a few instances and it's looking good.

We don't have an official roadmap, as someone here asked. However, this is the rough list of things we want to address, in that order:

- Custom drivers support*: there's a hack discussed here to add drivers to the ramdisk. However, this isn't a sustainable solution for many reasons, mainly the very limited size. The current ramdisk, especially on systems without compression, is almost at the limit and on slower systems can crash with even a few megabytes more added to it. This seems to be especially unstable on v7 with the Linux v4 kernel. The solution we're working on will simply include modules on the partition, which are loaded in pre-boot and copied to the main OS partition on boot. Naturally, from the perspective of the user it will involve simply adding a line to user_conf and dropping a file in "custom". For people digging deeper it will also be much easier as this involves no repacking: simply mount the partition and drop more files in, and they will be copied 1:1 and loaded as needed.
- Full SAS support*: while cheap HBA cards are great and something we will actually recommend for home builds (as they're cheap, stable, and offer 4-6 ports), LSI is king for bigger arrays. We want to make sure these are working as intended, making both DSM and Linux happy (which isn't easy in v7 ;)).
- UEFI support*: there are boards which cannot boot in legacy mode. We want to fully test this and offer official UEFI support in RedPill.
- Add support for the newest revisions of the OS to 918+ and 3615xs*
- Step-by-step instruction*/**: essentially we want you guys to help us test not only the code but also a guide. It will be published alongside the beta version. We will prepare it in the form of a GH page so that we can easily incorporate feedback (as we cannot edit posts after ~1h). After we resolve problems with it we will go ahead and publish an RC version on the main forum, so that it becomes an official version released for usage by less experienced folks who still want to poke around the OS.
- Integrate build process into the main repository**: @haydibe is doing an AMAZING job here and we cannot thank him enough. As we discussed with him before, we want to incorporate the docker directly into the redpill-load repo and enable LKM builds on GH, so that everything is automated and synchronized as soon as we publish an update.
- Small fixes: add native support for the CPU governor, fix CPU detection to match the model (as some things on v7 react strangely if the CPU doesn't match the model), fix various internal code smells in the kernel code
- Add support for GPU acceleration: in short, we want to explore adding a platform like DVA3221
- AMD support: let's just say we aren't fans of Intel recently. We want to add a model which natively supports AMD CPUs
- Work on making mfgBIOS LEDs/buzzers/hwmon stuff more real, or at least publish an interface which can be used by others to add an Arduino or similar.

Things marked with * are aimed to be done by the beta release. Things marked with ** we are HOPING to get done before the beta, but we cannot promise.

Fixed I/O scheduler not found errors

Many people reported these, and we finally found some time to look at them. Fixing them should fix the disk scheduling and improve performance in some scenarios. All in all, you shouldn't ever see them from now on.

Commit: https://github.com/RedPill-TTG/redpill-lkm/commit/aaa5847c589b84783471f9a600eeb12fbf2dc31c

Hardware monitoring emulation

One of the big features boosted by syno in v7 is central monitoring. However, with that it looks like in v7 the hardware monitoring and things around it have changed a lot from v6. In short, v6 allowed for things being out of whack here and there with the hardware sensors, while v7 assumes all sensors are in order... and when they're not, things break unexpectedly. For example, that bug with the General tab not loading in Info Center was just the tip of the iceberg. It was just a symptom of a MUCH MUCH bigger issue. If you do "cat /run/hwmon/*" on a DSM loaded with Jun's loader you will get:

    # cat /run/hwmon/*
    { "CPU_Temperature": { } }

This isn't going to fly on v7. The Info Center is just the beginning of the problems. High scemd usage at times was also caused by the lack of hwmon emulation (which again, wasn't strictly needed on v6). After painstakingly analyzing the synobios.h from the kernel and toolchains, while also scratching our heads at how one of the drivers for the ADT chip (fan controller on a real DS) was modified, we think we've nailed it:

    # cat /run/hwmon/*
    { "CPU_Temperature": { "CPU_0": "61", "CPU_1": "61" } }
    { "System_Fan_Speed_RPM": { "fan1_rpm": "997", "fan2_rpm": "986" } }
    { "System_Thermal_Sensor": { "Remote1": "34", "Local": "32", "Remote2": "34" } }
    { "System_Voltage_Sensor": { "VCC": "11787", "VPP": "1014", "V33": "3418", "V5": "5161", "V12": "11721" } }

The output above comes from a 3615xs instance. On 918+ you will see only CPU_Temperature and the HDD backplane status, as physically the 918+ does not monitor anything else. You can read more about that in the new document in the research repo: https://github.com/RedPill-TTG/dsm-research/blob/master/quirks/hwmon.md

All values above are fully emulated: on a VM we have no way of reading them, and on real hardware the only standardized one which we can pass along is the CPU temp (as voltages and thermal sensors vary widely between motherboards). We have a "todo" to make the CPU temperature real on bare metal.

The ugly part, which forced us to change the LKM build to require the major version of the OS to be specified, is that syno changed so many things between v6 and v7 that they forgot to make proper headers. As you can see in the https://github.com/RedPill-TTG/dsm-research/blob/master/quirks/mfgbios.md document, they just like that moved two fields in the middle of the struct. You never ever do that. Not without at least some ifdef (which they did for other things). The issue is that the change we've found is not present in all toolchains, it's not documented anywhere, and we discovered it by accident by comparing files. There's really no sane way to detect that (we've tried really hard). This is why we were forced to add the (ugly) requirement for v6/v7 to be specified while building the kernel module. If we find a saner way we will go back and make it automatic again.

With the hwmon being emulated there are two VERY important things:

- you CANNOT disable "supportsystemperature" and "supportsystempwarning"! The hack someone posted here with sed - https://gist.github.com/Izumiko/26b8f221af16b99ddad0bdffa90d4329 - should NOT be used. We cannot stress this enough. Anyone who used it and keeps the installation MUST put "supportsystemperature: yes" and "supportsystempwarning: yes" into user_config at least for a single boot. This is because these values (with =no) are now baked into your OS partition and you MUST fix them to the default "yes". As the loader script doesn't have them specified anywhere, it will not touch them anymore.
- you CANNOT disable ADT support. This goes way back to Jun's loader and deleting "supportadt7490" on 3615xs. If you're not installing from scratch on a new disk but playing with the same one, you MUST put "supportadt7490: yes" into your user_config at least for one boot.

Commits:
- https://github.com/RedPill-TTG/redpill-lkm/commit/72cb5c3620711bcabcfe6e1c67ebfbeca6f7e6bf (mfgBIOS structures v6/v7 changes)
- https://github.com/RedPill-TTG/redpill-lkm/commit/16adab519d6c4cc604e3fb680fb041e8ddd2167e (HWMON definitions)
- https://github.com/RedPill-TTG/redpill-lkm/commit/7d95ce2ec7d35493227196be58bbd2c7ca2704f8 (HW capabilities emulation)
- https://github.com/RedPill-TTG/redpill-lkm/commit/014f70e7127abdf28b47fe58f35086296b5d78ca (HWMON emulation)
- https://github.com/RedPill-TTG/redpill-load/commit/78c7bf9f903a73d51013a859f590c09ee835f8e7 (remove legacy ADT hack)

Fix RTC power-on bug

On 918+ the scemd complained about the auto power-on setting failing. This was happening when the OS was sending an empty schedule. Now this is fixed and scemd no longer triggers an internal error state.

Commit: https://github.com/RedPill-TTG/redpill-lkm/commit/80a2879aae23d611228c8791b35e237bfa0ec7a8

Add support for emulated SMART log directory

This is an internal change in the SMART emulation layer. Syno uses a slightly out-of-spec approach (again, hello v7 mess) and assumes all disks implement one of the ATAPI functionalities which is actually optional. This may actually cause some real disks to fail as well on a real v7, so they will probably eventually fix it themselves. For the time being we did it in RP.

Commit: https://github.com/RedPill-TTG/redpill-lkm/commit/278ee99a4dcb8fb8f025915118e7df93824e9bfc

------------------------------------------------------------------------

Today's update is rather short as the HWMON layer took us a ton of time to properly research and figure out how to actually do it. There were literally 5 different implementations until we got it right. That being said, the forum deleted our responses to comments which we were typing here, so we will write them again... but probably tomorrow morning. We will try to sit together tomorrow morning (ok, developer's morning ;)) and write the responses again.

@WiteWulf Can you try deleting the line with "register_pmu_shim" from redpill_main.c (in init_(void) function) and rebuilding the kernel module? You can then use the inject_rp_ko.sh script (lkm repo) to inject it into an existing loader image, or rebuild the image. With that you shouldn't have PMU emulation anymore (so the instance can be killed in ~24-48h) but we can see if it's kernel panicking.

2 points
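To check which situation an instance is in, the `cat /run/hwmon/*` output quoted in the post can be parsed and scanned for empty sensor groups. This is only a sketch: the function names are made up for illustration, and it assumes the files hold plain JSON objects printed one after another, as the quoted output suggests.

```python
import json


def parse_hwmon(raw: str) -> dict:
    """Parse several JSON objects printed back to back and merge them into one dict."""
    decoder = json.JSONDecoder()
    merged, pos = {}, 0
    while pos < len(raw):
        # raw_decode does not skip leading whitespace, so step over it manually.
        if raw[pos].isspace():
            pos += 1
            continue
        obj, pos = decoder.raw_decode(raw, pos)
        merged.update(obj)
    return merged


def empty_sensor_groups(hwmon: dict) -> list:
    """Return the names of sensor groups that report no readings at all."""
    return sorted(name for name, readings in hwmon.items() if not readings)


# Sample strings copied from the post (without the shell prompt line).
jun_output = '{ "CPU_Temperature": { } }'
emulated_output = (
    '{ "CPU_Temperature": { "CPU_0": "61", "CPU_1": "61" } } '
    '{ "System_Fan_Speed_RPM": { "fan1_rpm": "997", "fan2_rpm": "986" } }'
)

print(empty_sensor_groups(parse_hwmon(jun_output)))
print(empty_sensor_groups(parse_hwmon(emulated_output)))
```

A non-empty result for the first sample corresponds to the "this isn't going to fly on v7" case described in the post.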

-

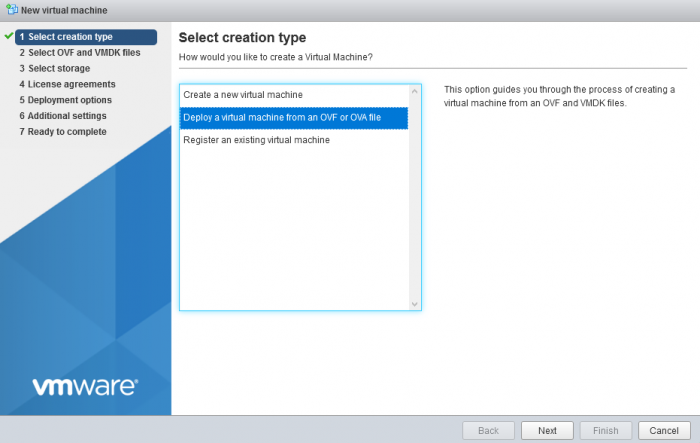

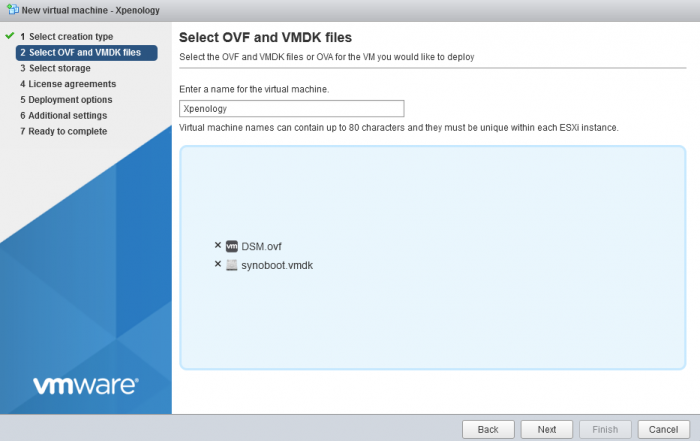

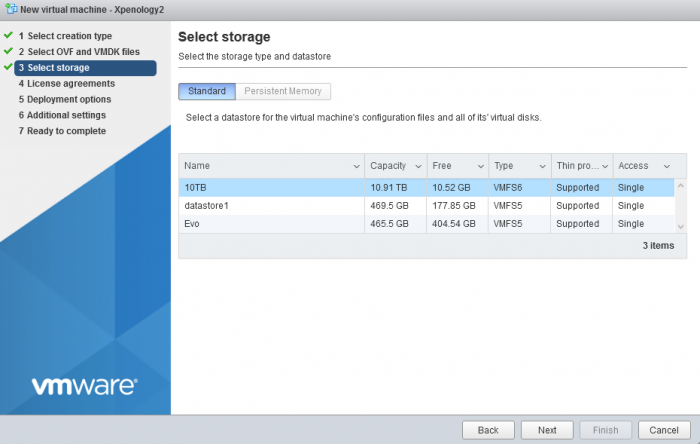

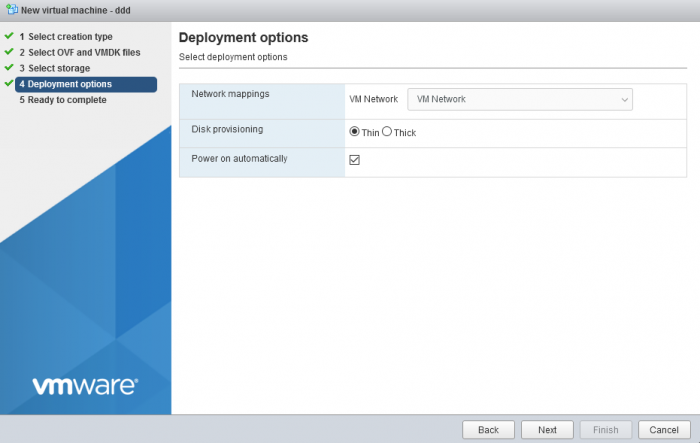

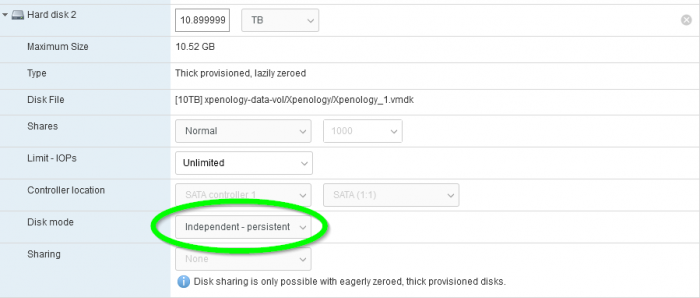

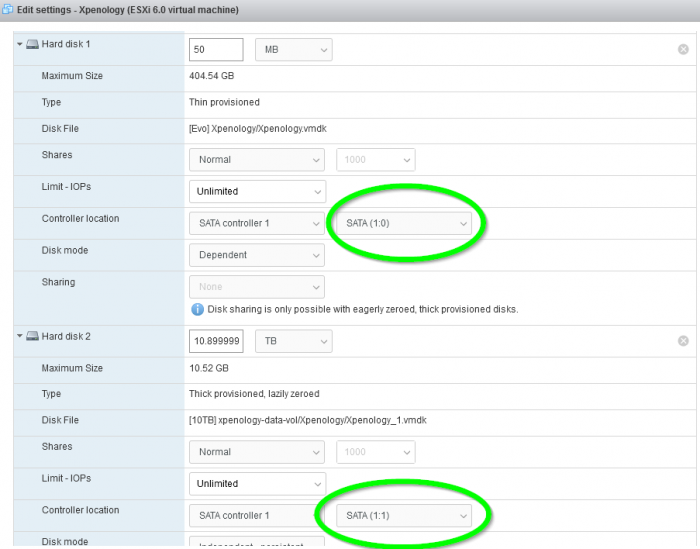

I got tired of searching tutorials, just to find 20+ pages of people having problems with no real bullseye solution, so I decided to make a tutorial with a single download that provides everything you need in a deployable OVF format for ESXi 6.7.

Credit to https://xpenology.com/forum/topic/13019-tuto-configs-toute-pr%C3%AAte-pour-vmware/ Even though the page is in French, and I don't speak French, I still found the instructions easier to follow than any other tutorial.

This is a very easy-to-follow tutorial. Make sure you follow it step by step, and you'll have yourself a fully functional xpenology server. This isn't an upgrade tutorial, and I don't cover how to customize MAC addresses, etc. It's a simple tutorial to install the DS3617xs image on ESXi 6.7 using the ESXi web client to deploy an OVF. There are minimal settings adjustments: adjust your CPU and memory, add a 2nd volume for your data, adjust a few SATA settings, install the .pat file, and you're done.

1. Download the file and extract it. It contains the DSM.ova and synoboot.vmdk file you'll need to deploy the ovf into ESXi, as well as the DSM_DS3617xs_23739.pat file. xpenology-ds3617xs-all-in-one.zip
2. Open your ESXi web client.
3. Create a new virtual machine, and choose "Deploy a virtual machine from an OVF or OVA file".
4. Add both the DSM.ovf and synoboot.vmdk files, then click Next.
5. Choose a storage location to install the new virtual machine to. This is my setup, yours will be different. Anywhere will do as long as you have enough free space. I imported the .ovf file to my Evo drive, and added the 2nd drive for all of my data to my 10TB drive. You can put it all in one place, or in two different locations. It's up to you. The end result will be the same: a working xpenology install.
6. Choose the VM Network you'd like to use. I left mine as default, as I only have one network running on my ESXi server. Your setup may be different. Choose accordingly. Leave all other settings at their defaults.
7. Click Finish, and let the .ovf file deploy. It may take a few seconds or minutes, depending on your system.
8. BEFORE TURNING THE SERVER ON, YOU MUST CHANGE A FEW THINGS!
   - I chose 4 CPU cores and 4 GB of RAM. That's enough for my needs. You can choose 8 CPU cores and 8 GB of RAM if you'd like.
   - Add your 2nd hard disk. (I assume you know how to add a hard drive in ESXi.) --CRITICAL-- You MUST choose Disk Mode: Independent - persistent.
   - The second critical thing you must do is change the controller location of Hard disk 1 and Hard disk 2 to SATA (1:0) and SATA (1:1). If you don't do this, you'll get an error that the system can't find any drives to install to when trying to install the .pat file.
   - You can remove the USB controller and the CD-ROM. You won't need them.

Fire up the virtual machine, then using a web browser, visit http://find.synology.com. Upload your .pat file, set an IP and an admin password. YOU'RE DONE!

1 point

-

1 point

-

With ESXi, it will always work with the internal NIC, as long as you configure the VM with an E1000e NIC (handled by DSM), or add the vmxnet3 driver and set the NIC to VMXNET 3.

1 point

-

It should. Actually, you would use it not for "Xpenology" but for ESXi, as a datastore. As long as ESXi has the driver for this PCIe device (take care of the PCIe slot compatibility of the Gen8) you should be able to use it as a datastore, and then pass through the internal SATA AHCI controller to the Xpenology VM.

1 point

-

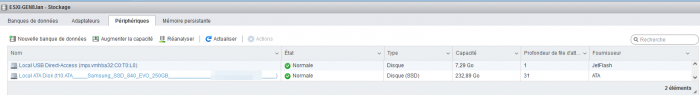

If you have only one controller (internal SATA AHCI), your only choice will be to attach your four disks with the RDM feature (there is a tutorial for it). I just meant you won't be able to pass through the controller, because if you do so, the SSD on the ODD port will be passed through as well and won't be visible to the ESXi host. PCI controller passthrough is all or nothing. Maybe I'm wrong, and in that case I misunderstood how PCI passthrough works.

Using a datastore on USB is a "hack" not supported by VMware, and I have never tried it, so I can't help on this subject.

Edit: Here for me, with the LSI card passed through: my 4 data disks are not visible on ESXi.

Edit 2:

1 point

-

1 point

-

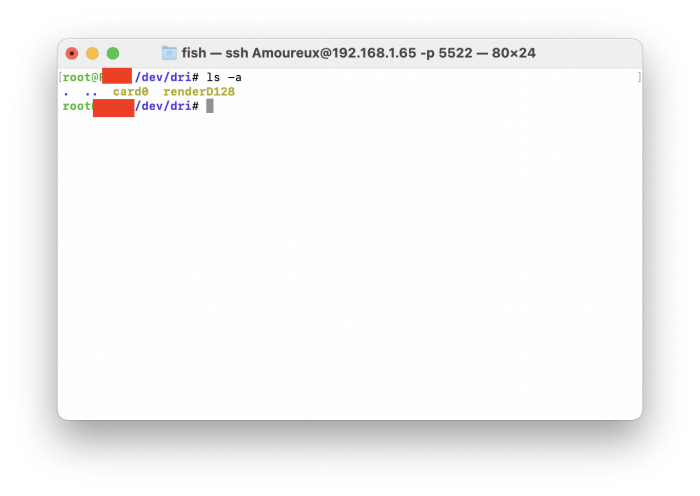

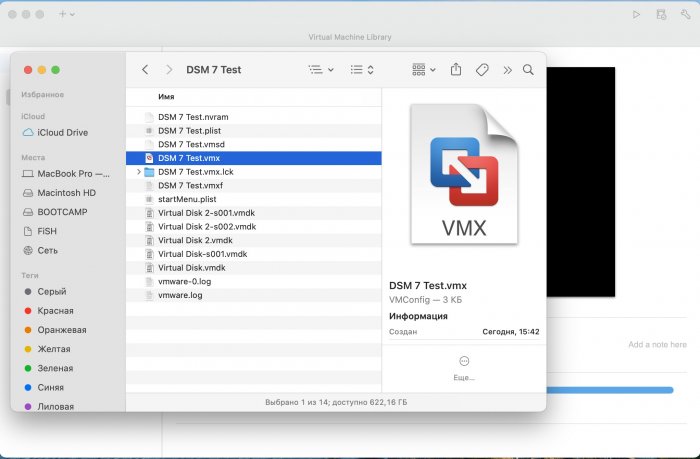

    docker run -d \
      --name=PlexMediaServer \
      --net=host \
      --device=/dev/dri:/dev/dri \
      -e PLEX_UID=USER_ID \
      -e PLEX_GID=100 \
      -e TZ=Europe/Moscow \
      -v /volume1/docker/plex/database:/config \
      -v /volume1/docker/plex/transcode:/transcode \
      -v /volume1/Video:/Video \
      plexinc/pms-docker

where USER_ID is your user ID. Also add a Task Scheduler task that runs as root on every boot:

    chmod 777 /dev/dri/*

1 point

-

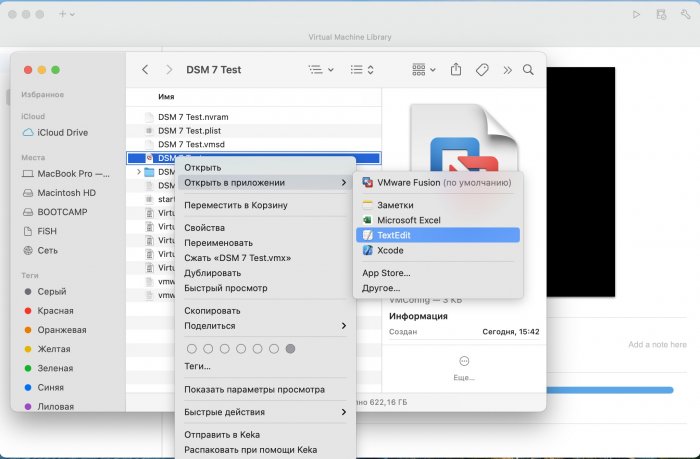

1 point

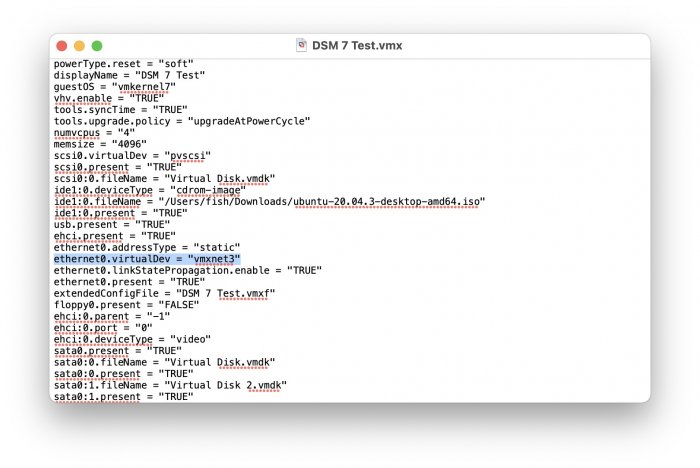

-

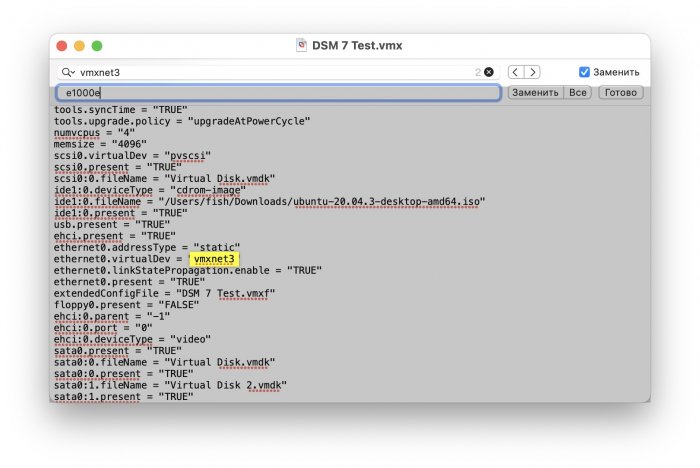

1 point

-

Yes, every time there is a zImage / rd.gz in the new package. I must admit I don't know how we can handle small patches like 6.2.4 update 2, as it must be patched but can't simply be used to build a new loader, if I'm not wrong.

1 point

-

Don't push your luck. I have already asked you to tone it down. There is no need to be condescending to anyone.

1 point

-

To test DS3615xs with VMware, I added these lines to global_config.json:

    {
        "id": "bromolow-7.0.1-42214",
        "platform_version": "bromolow-7.0.1-42214",
        "user_config_json": "bromolow_user_config.json",
        "docker_base_image": "debian:8-slim",
        "compile_with": "toolkit_dev",
        "redpill_lkm_make_target": "prod-v7",
        "downloads": {
            "kernel": {
                "url": "https://sourceforge.net/projects/dsgpl/files/Synology%20NAS%20GPL%20Source/25426branch/bromolow-source/linux-4.4.x.txz/download",
                "sha256": "af815ee065775d2e569fd7176e25c8ba7ee17a03361557975c8e5a4b64230c5b"
            },
            "toolkit_dev": {
                "url": "https://sourceforge.net/projects/dsgpl/files/toolkit/DSM7.0/ds.bromolow-7.0.dev.txz/download",
                "sha256": "a5fbc3019ae8787988c2e64191549bfc665a5a9a4cdddb5ee44c10a48ff96cdd"
            }
        },
        "redpill_lkm": {
            "source_url": "https://github.com/RedPill-TTG/redpill-lkm.git",
            "branch": "master"
        },
        "redpill_load": {
            "source_url": "https://github.com/jumkey/redpill-load.git",
            "branch": "develop"
        }
    },

For Apollolake, these lines need to be added:

It's very fast and easy to build an .img for testing. You only need Linux (in my case I use Terminal on Ubuntu):

1. Install Docker:

       sudo apt-get update
       sudo apt install docker.io

2. Install jq and curl:

       sudo apt install jq
       sudo apt install curl

3. Download redpill-tool-chain_x86_64_v0.10: https://xpenology.com/forum/applications/core/interface/file/attachment.php?id=13072

4. Go to the folder and make the .sh script executable:

       cd redpill-tool-chain_x86_64_v0.10
       chmod +x redpill_tool_chain.sh

5. If you want to edit vid, pid, sn, mac:

       #edit apollolake
       vi apollolake_user_config.json
       #edit bromolow
       vi bromolow_user_config.json

6. Build the img:

       #for apollolake
       ./redpill_tool_chain.sh build apollolake-7.0.1-42214 && ./redpill_tool_chain.sh auto apollolake-7.0.1-42214
       #for bromolow
       ./redpill_tool_chain.sh build bromolow-7.0.1-42214 && ./redpill_tool_chain.sh auto bromolow-7.0.1-42214

   The resulting file ends up in redpill-tool-chain_x86_64_v0.10/images

7. For VMware, I convert the .img to .vmdk with StarWind V2V Converter, and then add it to the virtual machine as a SATA disk. Also, change ethernet0.virtualDev = "e1000" to "e1000e" in the .vmx file.

Thanks ThorGroup for the great work.

global_config.json

1 point
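Each download in the config entry above carries a `sha256` value. How the toolchain itself checks it is not shown in the post; the following is only a sketch of how such a check can be done by hand. The function names and the temporary stand-in file are hypothetical.

```python
import hashlib
import os
import tempfile


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large archives need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def download_is_intact(path: str, expected_sha256: str) -> bool:
    """Compare the file's digest with the value stored in the config entry."""
    return sha256_of(path) == expected_sha256.strip().lower()


# Demo with a small stand-in file instead of a real kernel or toolkit archive.
payload = b"stand-in for a downloaded archive"
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(payload)
    demo_path = tmp.name

demo_ok = download_is_intact(demo_path, hashlib.sha256(payload).hexdigest())
demo_bad = download_is_intact(demo_path, "0" * 64)
os.remove(demo_path)
print(demo_ok, demo_bad)
```

For a real check, pass the path of the downloaded `.txz` and the `sha256` string from the matching `downloads` entry.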

-

@ThorGroup thank you for the update! And indeed, I spotted the new make targets and incorporated them into the new toolchain builder version.

Taken from the README.md:

Supports the make target to specify the redpill.ko build configuration. Set <platform version>.redpill_lkm_make_target to `dev-v6`, `dev-v7`, `test-v6`, `test-v7`, `prod-v6` or `prod-v7`. Make sure to use the -v6 ones on DSM6 builds and the -v7 ones on DSM7 builds. By default the targets `dev-v6` and `dev-v7` are used.

I snatched the following details from the redpill-lkm Makefile:
- dev: all symbols included, debug messages included
- test: fully stripped with only warning & above (no debugs or info)
- prod: fully stripped with no debug messages

See README.md for usage.

redpill-tool-chain_x86_64_v0.10.zip

1 point
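The rule "-v6 on DSM6, -v7 on DSM7" is easy to get wrong when copying config blocks, so a small pre-flight check may help. This sketch is not part of the toolchain: the function names are made up, and it assumes ids follow the `<platform>-<DSM version>-<build>` pattern seen in ids such as `bromolow-7.0.1-42214`.

```python
VALID_LEVELS = ("dev", "test", "prod")


def expected_suffix(platform_version: str) -> str:
    """Derive "v6" or "v7" from an id shaped like "<platform>-<DSM version>-<build>"."""
    dsm_version = platform_version.split("-")[1]
    major = dsm_version.split(".")[0]
    if major not in ("6", "7"):
        raise ValueError(f"unexpected DSM major version in {platform_version!r}")
    return f"v{major}"


def make_target_matches(platform_version: str, make_target: str) -> bool:
    """True when the target is one of the six documented ones and fits the DSM major version."""
    level, _, suffix = make_target.partition("-")
    return level in VALID_LEVELS and suffix == expected_suffix(platform_version)


print(make_target_matches("bromolow-7.0.1-42214", "prod-v7"))
print(make_target_matches("bromolow-7.0.1-42214", "dev-v6"))
```

Running it over every entry before a build would catch a `-v6` target left behind in a copied DSM7 block.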

-

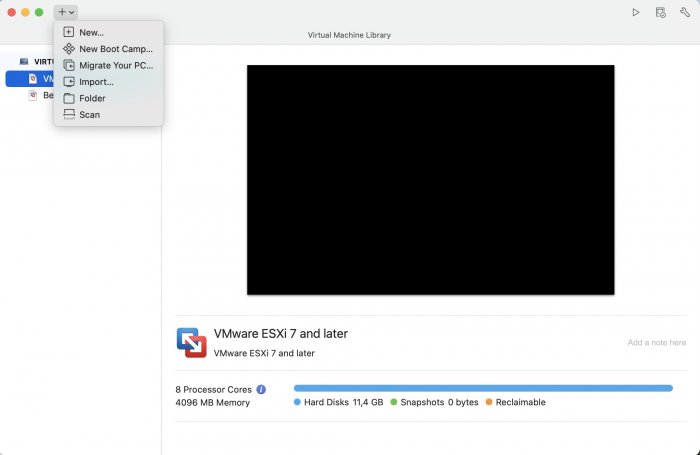

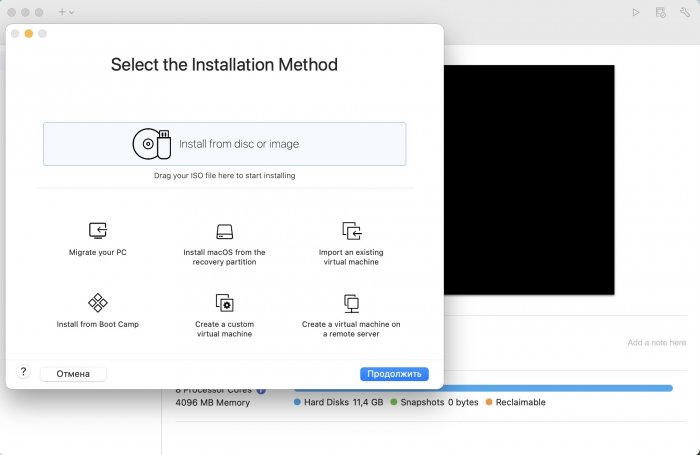

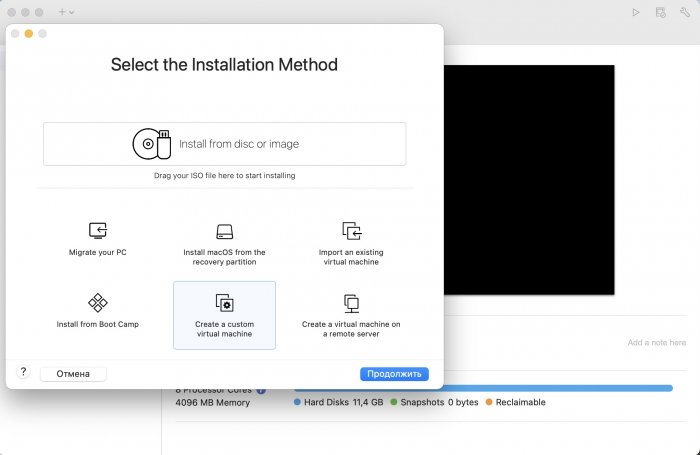

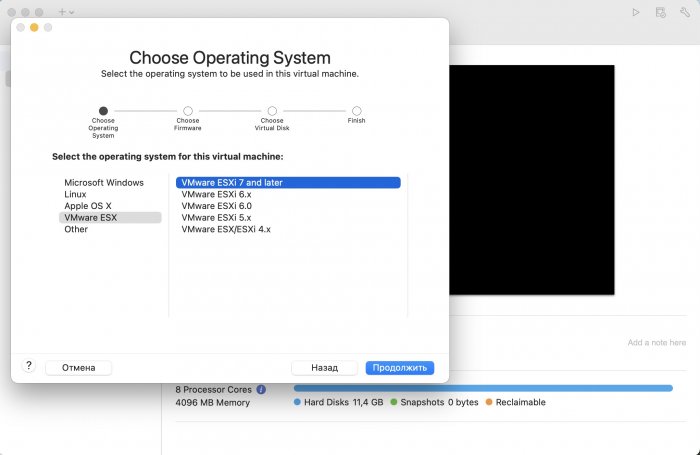

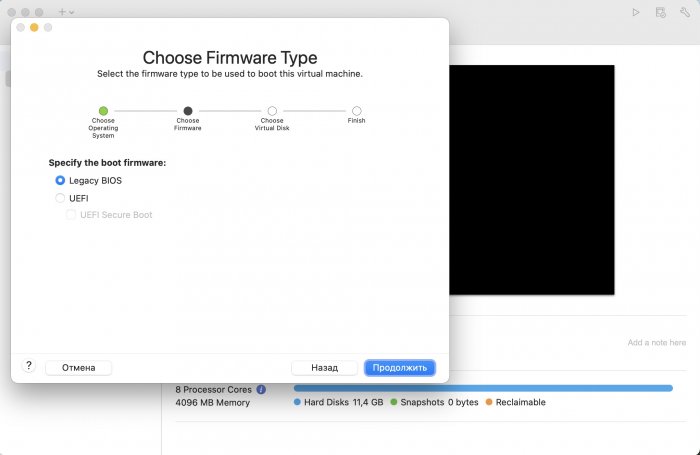

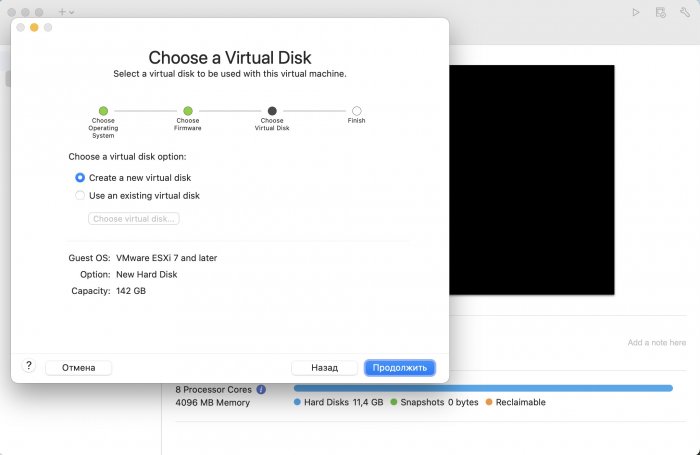

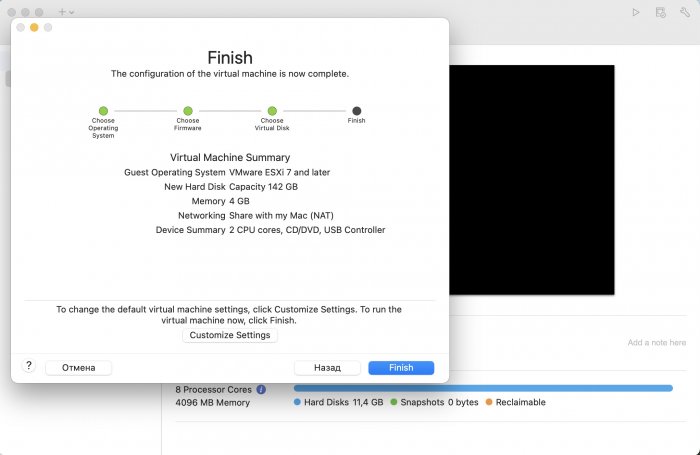

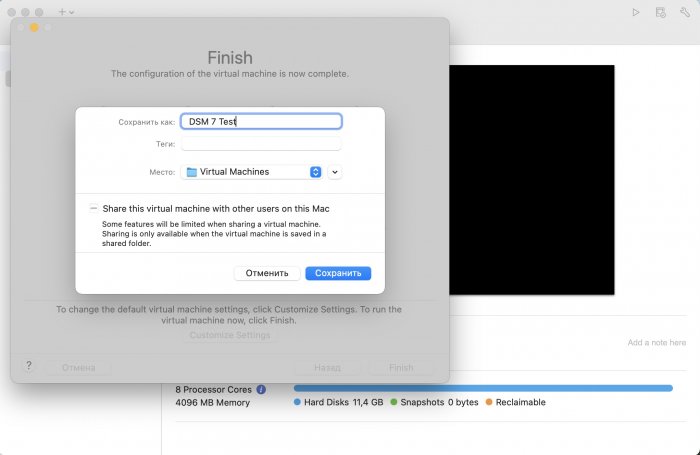

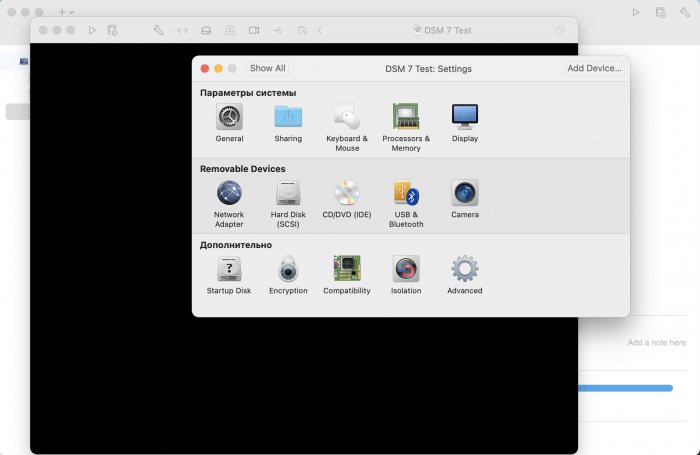

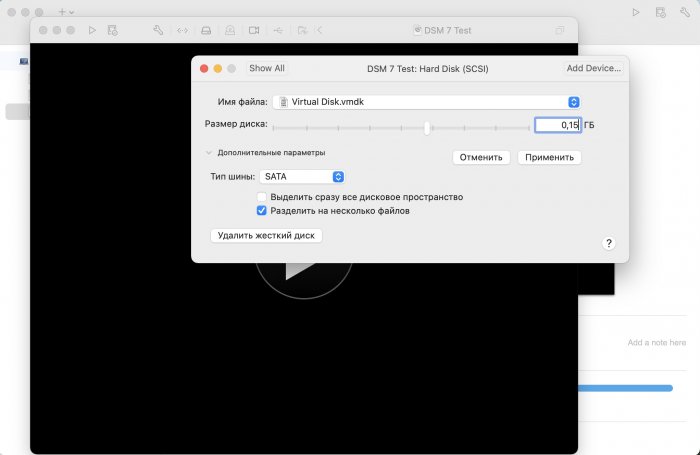

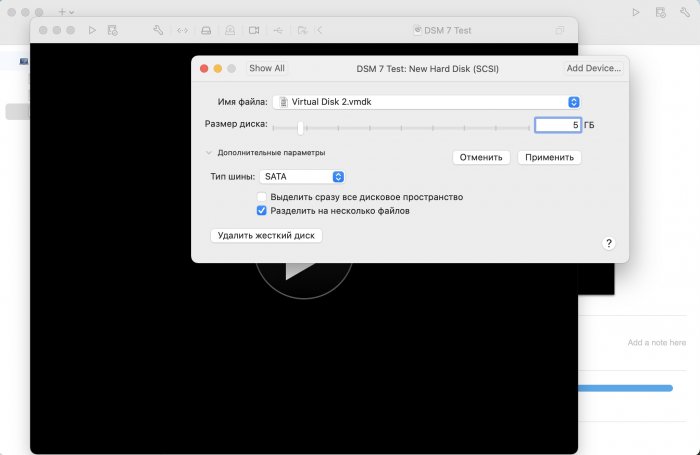

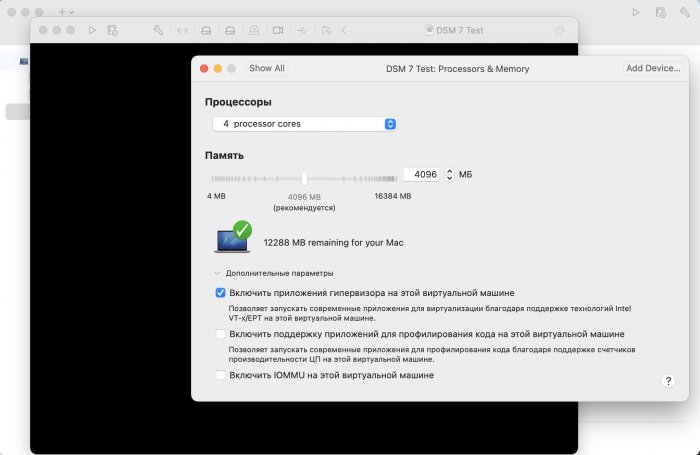

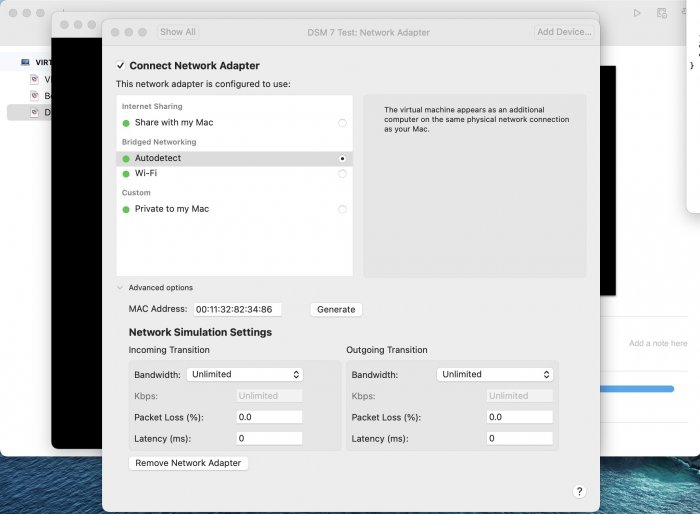

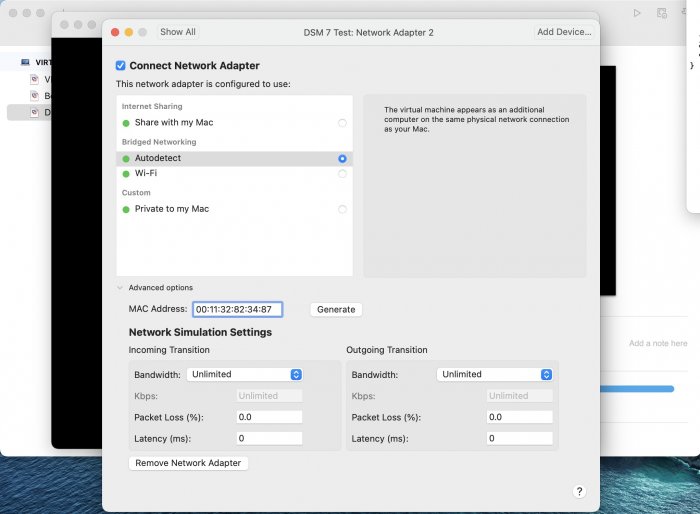

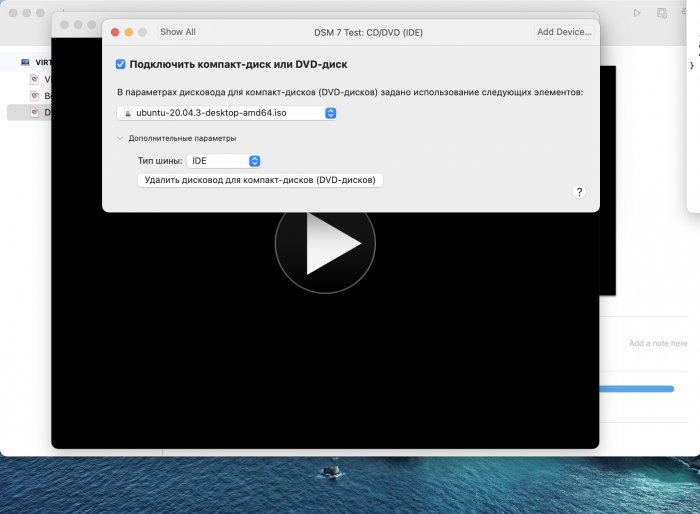

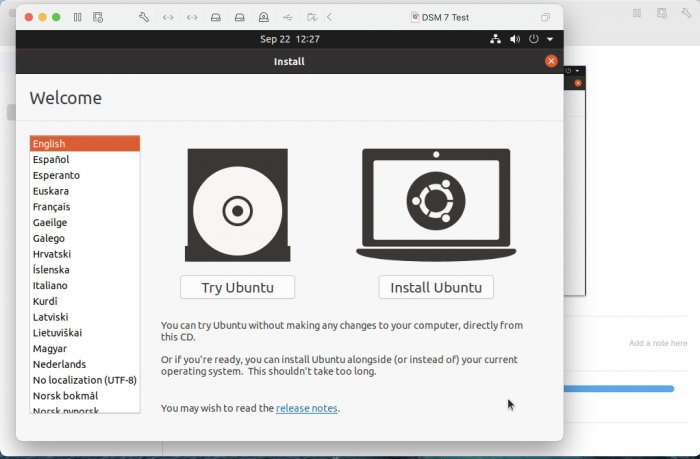

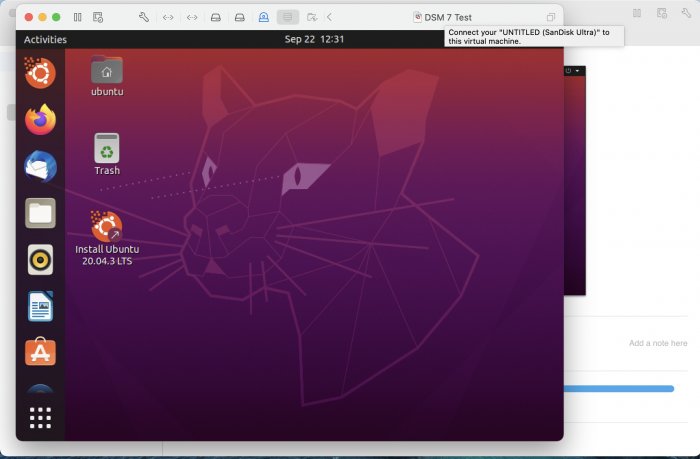

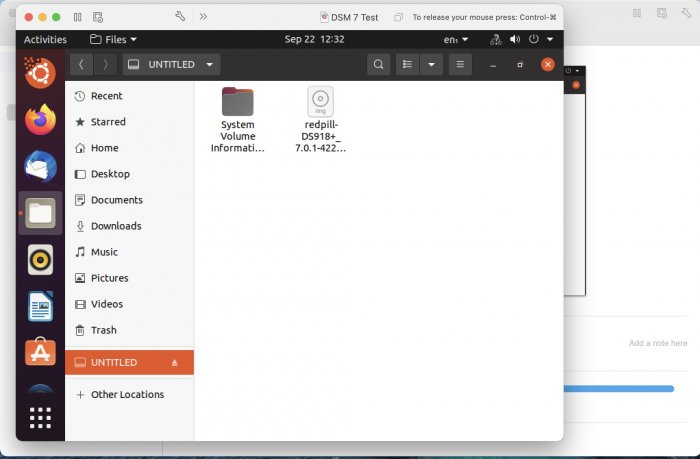

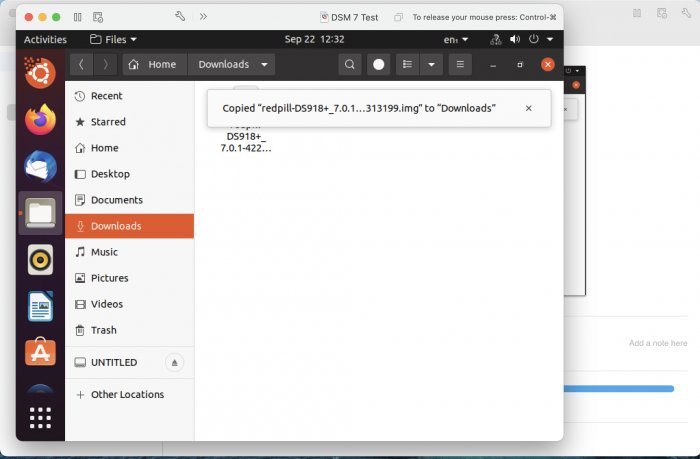

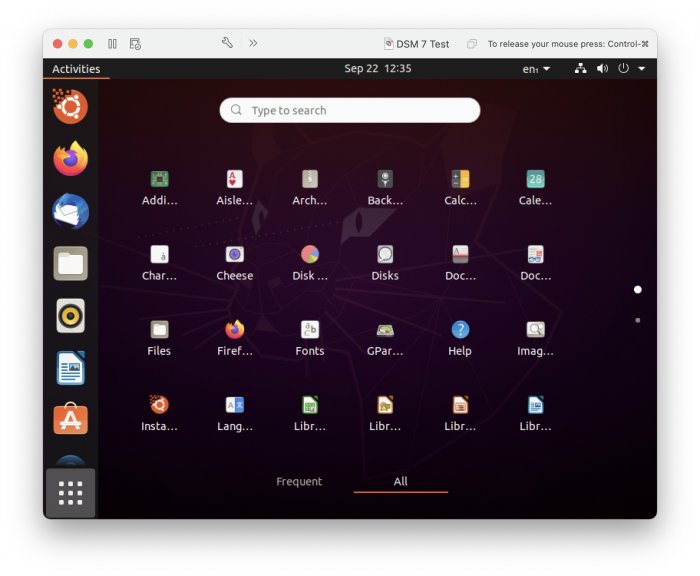

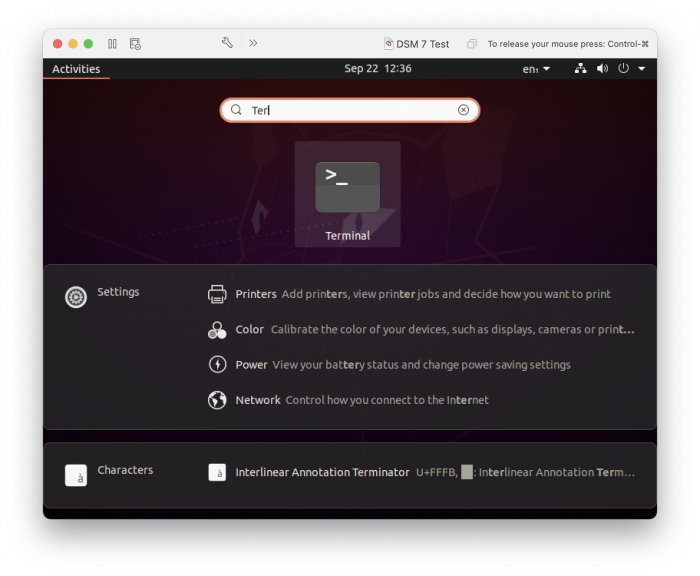

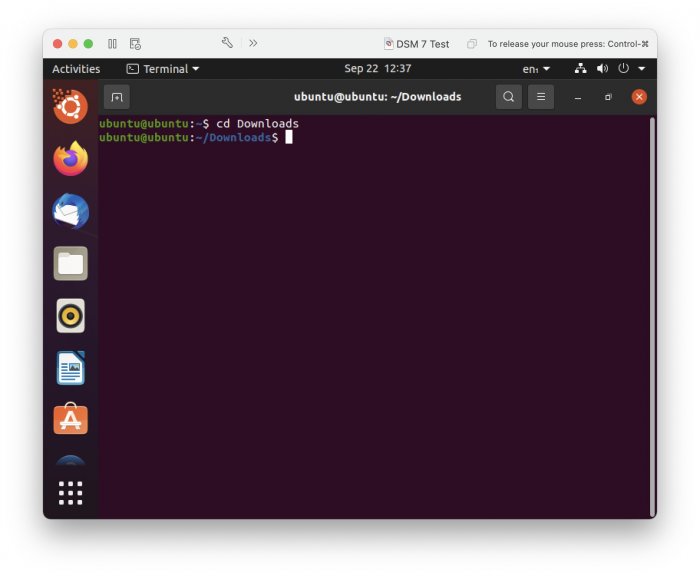

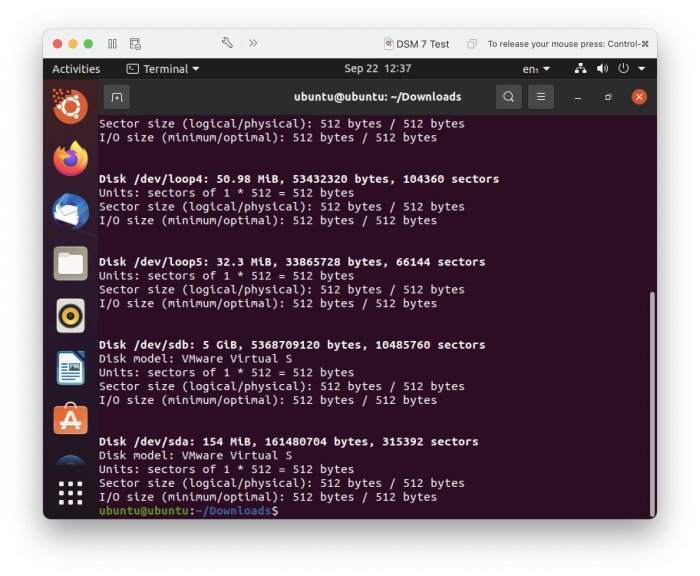

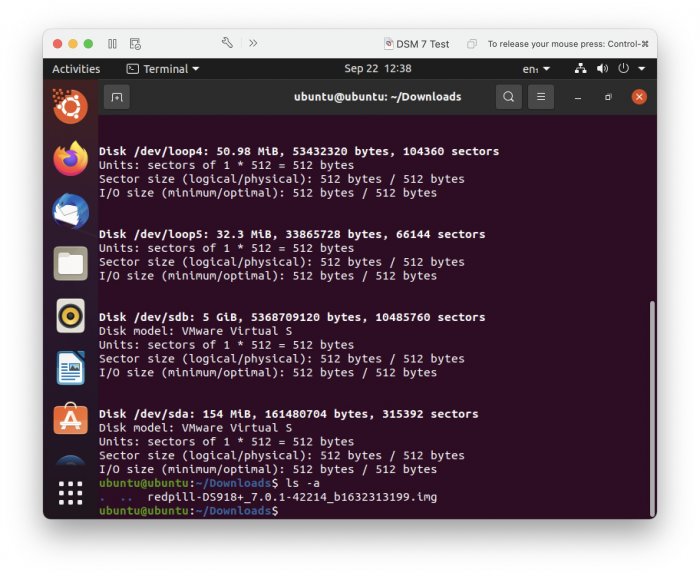

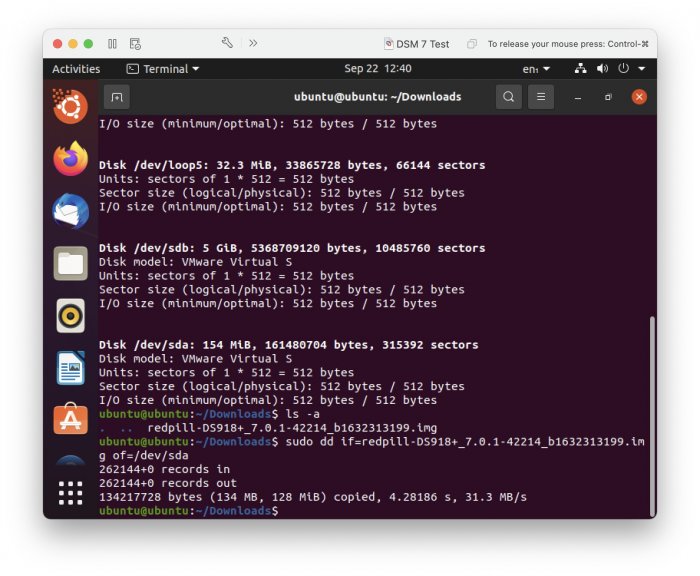

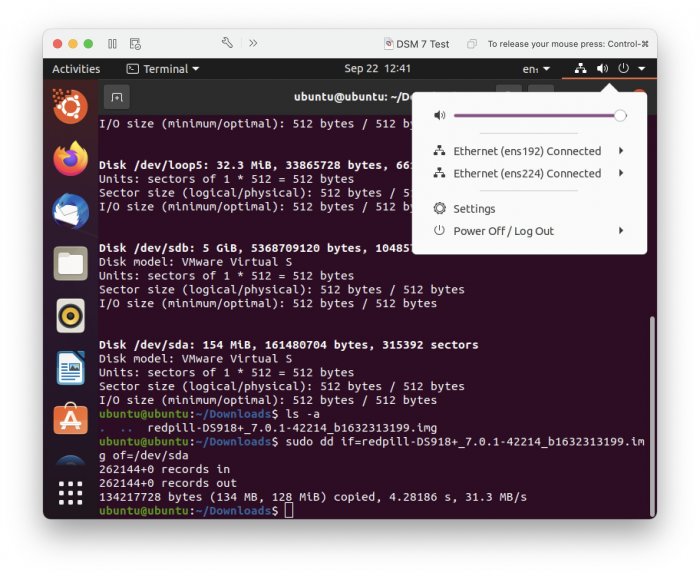

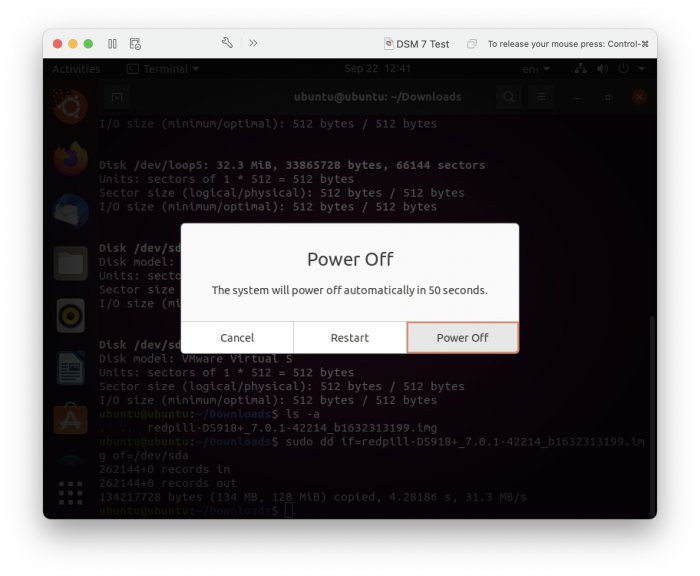

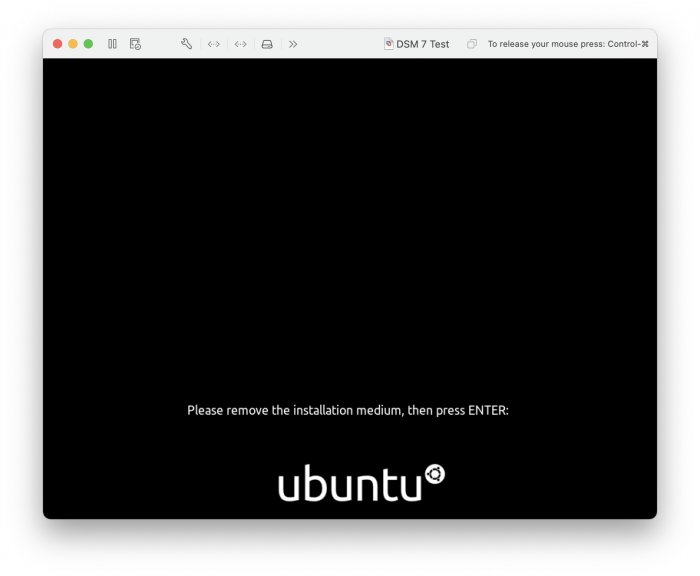

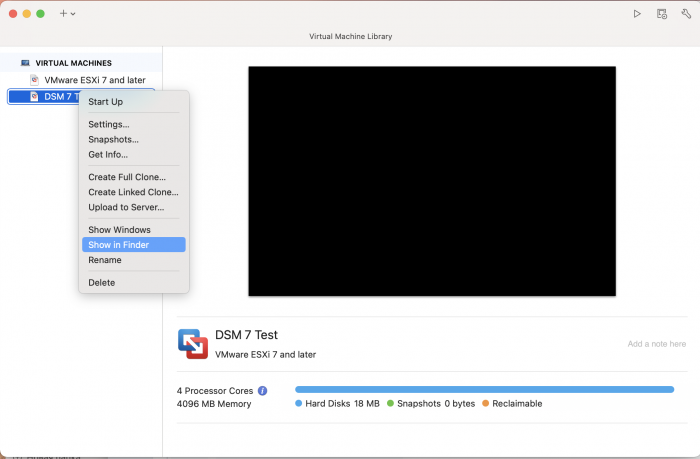

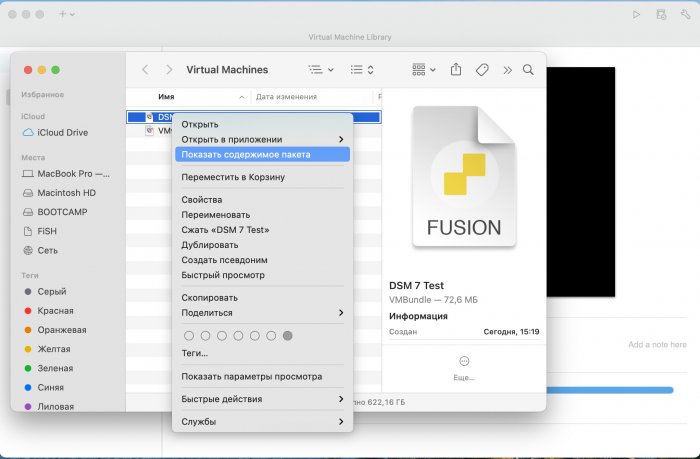

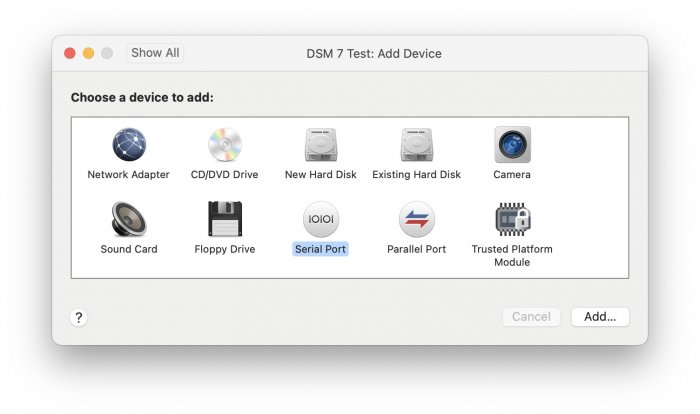

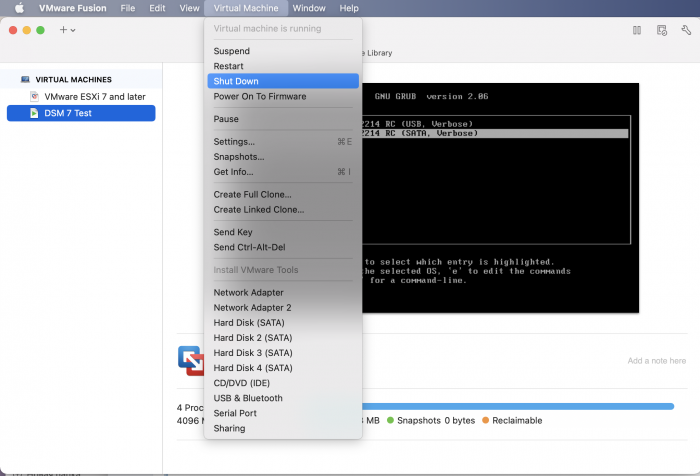

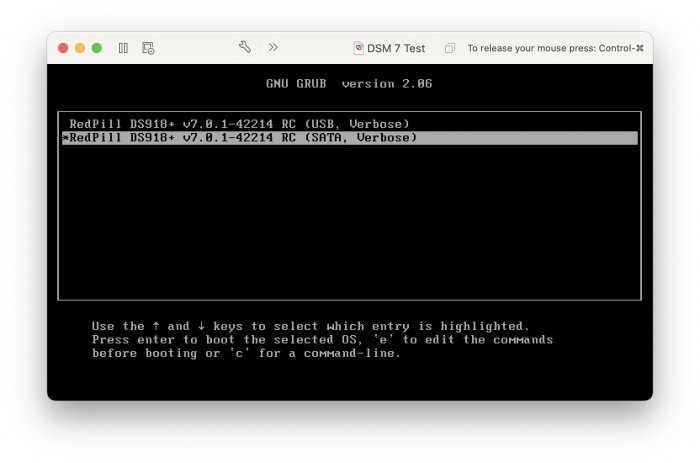

Because requests about this have become more frequent, I decided to put together a short guide.

We will need:

- macOS 11.5.2
- VMware 12.1.2
- The RedPill loader image as a separate file on a USB stick. In my case, for this guide, it is the file redpill-DS918+_7.0.1-42214_b1632313199.img (build instructions for macOS). I built it specifically for this topic with the data shown below, which do not correspond to real devices. The network card MAC addresses and the serial number in it are made up.

      {
          "extra_cmdline": {
              "pid": "0x0001",
              "vid": "0x46f4",
              "sn": "14A0PDN849204",
              "netif_num": "2",
              "mac1": "001132823486",
              "mac2": "001132823487",
              "DiskIdxMap": "1000",
              "SataPortMap": "4"
          },
          "synoinfo": {},
          "ramdisk_copy": {}
      }

- Ubuntu 20.04.03 Live CD
- Remember the control+command shortcut, used to get the mouse back after the virtual machine has started
- Write down the MAC addresses so they are at hand when configuring the virtual machine

Let's go! There will be about 40 pictures and a little description.

1. Create a new virtual machine.
2. Choose Create a custom virtual machine.
3. VMware ESX -> VMware ESXi 7 and later.
4. BIOS type: Legacy.
5. Create a new virtual disk.
6. Choose Customize Settings.
7. Come up with a name.
8. This is the main settings window of our virtual machine. Here we will add devices (the Add Device button in the upper right corner) and remove them.
9. Select the SCSI disk, change it to SATA in its settings, and set the size to 150 MB for the loader. Later we will write the image redpill-DS918+_7.0.1-42214_b1632313199.img to it using the Ubuntu Live CD. All our disks will be SATA! After finishing with this disk, click Add Device and choose to add a new disk.
10. Create a second disk, for DSM. The size can be anything, as long as the capacity is sufficient. Later we will add a couple more, but to avoid confusion we start with one. At this stage I recommend choosing a larger size for the DSM disks, because with 5 GB no space is left after installation and the system will not let you create a data volume. This saves you from resizing them later, as I had to while writing this guide. If someone does run into this, just install the virtual machine and change the disk size in the settings from 5 GB to whatever you need. I changed it to 20 GB, and everything works after boot.
11. Choose the amount of memory and the number of cores to allocate to the virtual machine.
12. Configure the network interface that already exists. Two settings matter here: select Autodetect and enter the first of the MAC addresses that were set in apollolake_user_config.json when building the loader. In this case: 001132823486
13. Create a new network interface with the same settings as the previous one, but enter the next MAC address: 001132823487
14. Open the CD/DVD settings. Tick the "connect" checkbox and select the Ubuntu image downloaded earlier. Then start the virtual machine.
15. Choose Try Ubuntu.
16. When booting finishes, we land on the desktop.
17. Insert the USB stick with the loader image. The system will ask what to do with it; choose to connect it to the virtual machine. Open the file manager, find the USB device, and copy the image from it to the Downloads folder.
18. Open the applications overview, type Terminal in the search bar, and launch it.
19. Enter the command to change to the folder that holds our loader image:

        cd Downloads

20. Enter the command to list the available disks; we are interested in the 150 MB one (/dev/sda):

        sudo fdisk -l

21. Enter the command to list the files in the current directory and make sure the copied image is there. This also makes it easy to copy its full name.

        ls -a

22. The next command writes the image redpill-DS918+_7.0.1-42214_b1632313199.img to /dev/sda:

        sudo dd if=redpill-DS918+_7.0.1-42214_b1632313199.img of=/dev/sda

23. Shut down.
24. After the virtual machine has powered off, right-click it and choose Show in Finder.
25. Select our virtual machine, open the context menu, and ask to show the package contents.
26. Find the indicated file and open it with a text editor.
27. The first thing to do is set the correct network driver for the virtual machine, otherwise it will not be discoverable on the network.
28. Search for "vmxnet3" and change it to "e1000e". We have two network interfaces, so there should be two replacements. In the end it should look something like this:

        ethernet0.virtualDev = "e1000e"
        ethernet1.virtualDev = "e1000e"

29. After all the previous steps, the picture should look roughly like this. Looking at it, I realized I had forgotten to add a serial port for the log.
30. So choose Add Device and add one, and also specify where to save the log.
31. Start the machine (choose the SATA mode, because PID/VID values are ignored in it) and let it run for a minute or two, because as it turned out, some settings are written to the *.vmx file a little later. At this stage it should already be discoverable on the network, but it will not see the disks. We will fix that next.
32. Stop the virtual machine (Shut down).
33. Add a couple more disks as in step 10 (the sizes may be identical, the disks must be SATA). This step is optional, but I added it to make it clearer what else we are going to edit in the *.vmx file and why.
34. Go back to steps 24 - 27, where we edited the network interface driver type, but this time we deal with the settings of our virtual HDDs. The point is that the virtual disk holding the loader and the disks on which DSM will live must be placed on different virtual controllers. Controller and device numbering starts from zero! Following this logic, the loader must be on controller number "0" and the remaining disks on controller number "1". We get: sata0 - the controller hosting the disk (device sata0:0) with the loader, sata1 - the controller hosting the disks for DSM (devices sata1:0, sata1:1, sata1:2).

    Each disk controller is also defined in the settings and has its own properties, which look like this:

        sata0.present = "TRUE"
        sata0.pciSlotNumber = "35"
        sata1.present = "TRUE"
        sata1.pciSlotNumber = "36"

    As does each device (a disk in our case):

        sata0:0.fileName = "Virtual Disk.vmdk"
        sata0:0.present = "TRUE"
        sata0:0.redo = ""
        sata1:0.fileName = "Virtual Disk 2.vmdk"
        sata1:0.present = "TRUE"
        sata1:0.redo = ""
        sata1:1.fileName = "Virtual Disk 3.vmdk"
        sata1:1.present = "TRUE"
        sata1:1.redo = ""
        sata1:2.fileName = "Virtual Disk 4.vmdk"
        sata1:2.present = "TRUE"
        sata1:2.redo = ""

35. My final file looks like this:

        .encoding = "UTF-8"
        config.version = "8"
        virtualHW.version = "18"
        pciBridge0.present = "TRUE"
        pciBridge4.present = "TRUE"
        pciBridge4.virtualDev = "pcieRootPort"
        pciBridge4.functions = "8"
        pciBridge5.present = "TRUE"
        pciBridge5.virtualDev = "pcieRootPort"
        pciBridge5.functions = "8"
        pciBridge6.present = "TRUE"
        pciBridge6.virtualDev = "pcieRootPort"
        pciBridge6.functions = "8"
        pciBridge7.present = "TRUE"
        pciBridge7.virtualDev = "pcieRootPort"
        pciBridge7.functions = "8"
        vmci0.present = "TRUE"
        hpet0.present = "TRUE"
        nvram = "DSM 7 Test.nvram"
        virtualHW.productCompatibility = "hosted"
        powerType.powerOff = "soft"
        powerType.powerOn = "soft"
        powerType.suspend = "soft"
        powerType.reset = "soft"
        displayName = "DSM 7 Test"
        guestOS = "vmkernel7"
        vhv.enable = "TRUE"
        tools.syncTime = "TRUE"
        tools.upgrade.policy = "upgradeAtPowerCycle"
        numvcpus = "4"
        memsize = "4096"
        scsi0.virtualDev = "pvscsi"
        scsi0.present = "TRUE"
        scsi0:0.fileName = "Virtual Disk.vmdk"
        ide1:0.deviceType = "cdrom-image"
        ide1:0.fileName = "/Users/fish/Downloads/ubuntu-20.04.3-desktop-amd64.iso"
        ide1:0.present = "TRUE"
        usb.present = "TRUE"
        ehci.present = "TRUE"
        ethernet0.addressType = "static"
        ethernet0.virtualDev = "e1000e"
        ethernet0.linkStatePropagation.enable = "TRUE"
        ethernet0.present = "TRUE"
        extendedConfigFile = "DSM 7 Test.vmxf"
        ehci:0.parent = "-1"
        ehci:0.port = "0"
        ehci:0.deviceType = "video"
        sata0.present = "TRUE"
        sata1.present = "TRUE"
        sata0:0.fileName = "Virtual Disk.vmdk"
        sata0:0.present = "TRUE"
        ethernet0.address = "00:11:32:82:34:86"
        ethernet1.addressType = "static"
        ethernet1.virtualDev = "e1000e"
        ethernet1.present = "TRUE"
        ethernet1.linkStatePropagation.enable = "TRUE"
        ethernet1.address = "00:11:32:82:34:87"
        ehci:0.deviceId = "0x8020000005ac8514"
        numa.autosize.cookie = "40012"
        numa.autosize.vcpu.maxPerVirtualNode = "4"
        uuid.bios = "56 4d 9f 5f d2 3b 6a f5-ae a1 7d 94 e0 ca 92 6a"
        uuid.location = "56 4d 9f 5f d2 3b 6a f5-ae a1 7d 94 e0 ca 92 6a"
        sata0:0.redo = ""
        pciBridge0.pciSlotNumber = "17"
        pciBridge4.pciSlotNumber = "21"
        pciBridge5.pciSlotNumber = "22"
        pciBridge6.pciSlotNumber = "23"
        pciBridge7.pciSlotNumber = "24"
        scsi0.pciSlotNumber = "160"
        usb.pciSlotNumber = "32"
        ethernet0.pciSlotNumber = "192"
        ethernet1.pciSlotNumber = "224"
        ehci.pciSlotNumber = "33"
        vmci0.pciSlotNumber = "34"
        sata0.pciSlotNumber = "35"
        scsi0.sasWWID = "50 05 05 6f d2 3b 6a f0"
        svga.vramSize = "268435456"
        vmotion.checkpointFBSize = "4194304"
        vmotion.checkpointSVGAPrimarySize = "268435456"
        vmotion.svga.mobMaxSize = "268435456"
        vmotion.svga.graphicsMemoryKB = "262144"
        vmci0.id = "-523595158"
        monitor.phys_bits_used = "45"
        cleanShutdown = "FALSE"
        softPowerOff = "FALSE"
        usb:1.speed = "2"
        usb:1.present = "TRUE"
        usb:1.deviceType = "hub"
        usb:1.port = "1"
        usb:1.parent = "-1"
        svga.guestBackedPrimaryAware = "TRUE"
        sata1:0.present = "TRUE"
        sata1:0.fileName = "Virtual Disk 2.vmdk"
        floppy0.present = "FALSE"
        serial0.fileType = "file"
        serial0.fileName = "/Users/fish/Desktop/log"
        serial0.present = "TRUE"
        sata1:0.redo = ""
        sata1.pciSlotNumber = "36"
        sata1:1.fileName = "Virtual Disk 3.vmdk"
        sata1:1.present = "TRUE"
        sata1:2.fileName = "Virtual Disk 4.vmdk"
        sata1:2.present = "TRUE"
        sata1:2.redo = ""
        sata1:1.redo = ""
        usb:0.present = "TRUE"
        usb:0.deviceType = "hid"
        usb:0.port = "0"
        usb:0.parent = "-1"

P.S. I tried to describe and show in as much detail as possible everything that has to be done to run DSM 7 in a virtual machine on macOS, and to explain it so that the logic is clear in the places where there is any. Some things only surfaced while I was writing up the post and rechecking everything (the disk resizing story), so there may be other mistakes too, but they do not affect the essence.

1 point
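Two of the manual edits in the guide above are mechanical: the MAC from the user config (`001132823486`) has to appear in the .vmx in colon-separated form (`00:11:32:82:34:86`), and every `vmxnet3` network adapter has to become `e1000e`. The following sketch shows both transformations on text. The function names are hypothetical, and it works on a string rather than touching a real .vmx file.

```python
import re


def format_mac(raw: str) -> str:
    """Turn "001132823486" into "00:11:32:82:34:86" (the form used in the .vmx file)."""
    raw = raw.strip()
    if not re.fullmatch(r"[0-9A-Fa-f]{12}", raw):
        raise ValueError(f"expected 12 hex digits, got {raw!r}")
    return ":".join(raw[i:i + 2] for i in range(0, 12, 2))


def use_e1000e(vmx_text: str) -> str:
    """Replace the vmxnet3 driver with e1000e on every ethernetN.virtualDev line."""
    return re.sub(
        r'^(ethernet\d+\.virtualDev\s*=\s*)"vmxnet3"',
        r'\1"e1000e"',
        vmx_text,
        flags=re.MULTILINE,
    )


# Stand-in for the two adapter lines of a freshly created VM.
sample_vmx = 'ethernet0.virtualDev = "vmxnet3"\nethernet1.virtualDev = "vmxnet3"\n'
patched_vmx = use_e1000e(sample_vmx)

print(format_mac("001132823486"))
print(patched_vmx)
```

Anchoring the pattern to `ethernetN.virtualDev` keeps the replacement from touching any other line that might mention `vmxnet3`.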

-

I incorporated the latest changes in the redpill universe into the toolchain builder:

- For 6.2.4 builds: the kernel sources are configured to be used by default.
- For 7.0 builds: the Toolkit Dev sources are configured to be used by default.

# Unofficial redpill tool chain image builder

- Creates an OCI container (~= Docker) image based toolchain
- Takes care of downloading (and caching) the required sources to compile redpill.ko and the required OS packages that the build process depends on.
- Caches .pat downloads inside the container on the host.

## Changes

- Migrated from Make to Bash (requires `jq` instead of `make` now)
- Removed the Synology toolchain; the tool chain now consists of Debian packages
- Configuration is now done in the JSON file `global_config.json`
- The configuration allows you to specify your own configurations -> just copy a block underneath the `building_configs` block and make sure it has a unique value for the id attribute. The id is what is actually used to determine the <platform_version>.

## Usage

1. Create `user_config.json` according to https://github.com/RedPill-TTG/redpill-load
2. Build the image for the platform and version you want: `redpill_tool_chain.sh build <platform_version>`
3. Run the image for the platform and version you want: `redpill_tool_chain.sh run <platform_version>`
4. Inside the container, run `make build_all` to build the loader for the platform_version

Note: run `redpill_tool_chain.sh build` to get the list of supported <platform_version>

After step 4, the redpill load image should be built and can be found in the host folder "images".

Feel free to customize the heck out of every part of the toolchain builder and post it here. If things can be done better, feel free to make them better and share the result with us.

redpill-tool-chain_x86_64_v0.5.1.zip

1 point
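Since a copied configuration block must end up with a unique id, a quick duplicate check can catch a forgotten rename. This sketch works on a plain list of entries; how that list is pulled out of `global_config.json` (the exact key name and nesting) is left out on purpose, and the function name and the sample entries are hypothetical.

```python
from collections import Counter


def duplicate_ids(entries: list) -> list:
    """Return every id that appears more than once in a list of config blocks."""
    counts = Counter(entry["id"] for entry in entries)
    return sorted(config_id for config_id, seen in counts.items() if seen > 1)


# Hypothetical entries: the second block was copied and its id was not changed.
entries = [
    {"id": "bromolow-7.0.1-42214", "redpill_lkm_make_target": "prod-v7"},
    {"id": "bromolow-7.0.1-42214", "redpill_lkm_make_target": "dev-v7"},
    {"id": "apollolake-7.0.1-42214", "redpill_lkm_make_target": "dev-v7"},
]

print(duplicate_ids(entries))
```

An empty list means every block can be addressed unambiguously as a <platform_version>.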