All Activity

- Past hour

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 7.2.1 69057-Update 4
- DSM version AFTER update: DSM 7.2.1 69057-Update 5
- Loader version and model: ARC 24.4.25 / DS3622xs+
- Installation type: i5-8400 | ASUS TUF Z370-PLUS GAMING
- Additional comments: Downloaded and Manual Install. No issues.

- Today

-

Confirmed. The update went through normally, without any problems.

-

Surveillance Station 8.2.7-6222 + TP-Link C200 camera

use-nas replied to TRT_miha's topic in Software

Slap its hands )) What kind of router is this: it closed everything it was asked to open and opened what nobody asked for )) Maybe it's time to scrap it ... or maybe a full reset will let it live a little longer )

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 7.2.1 69057-Update 4
- DSM version AFTER update: DSM 7.2.1 69057-Update 5
- Loader version and model: RR v24.4.2 / DS3622xs+
- Installation type: BAREMETAL – Pentium G4560 - Gigabyte G1 Sniper B7 - 16GB DDR4
- Additional comments: Downloaded and Manual Install. No issues.

-

cyraxan started following DSM recovery after a btrfs crash

-

Here is my latest experience recovering DSM. My home Xpenology DSM, both 6.x and 7.x, runs as a VMware Workstation VM. Whenever the host lost power unexpectedly, it kept corrupting the MFT and the partitions holding the BTRFS volume on the virtual disk with the applications, which is stored on an NTFS-formatted SSD. Meanwhile, the HDDs passed to the VM as RDM for data never suffered. After yet another power loss the system volume on DSM got corrupted and could be neither repaired nor mounted. All the usual methods with btrfs check consistently gave no result. Another search turned up these utilities for recovering data from btrfs:

- https://github.com/cblichmann/btrfscue - did not try it
- https://www.easeus.com/data-recovery/btrfs-recovery-software.html - did not recognize the data
- https://dmde.ru/ - a great tool and apparently free, but it did not recover the file names or the directory structure
- https://www.reclaime.com/library/btrfs-recovery.aspx - recognized the data, but I could not find a crack
- https://www.ufsexplorer.com/articles/how-to/recover-data-btrfs-raid/ - recognized the data, I found a cracked copy and recovered the data; a decent tool

In the end I dropped the SSD volume altogether and combined the DSM recovery with the long-planned rollout of a read-only cache on NVMe. The sequence:

- updated the arc loader to the current 25.4.18
- enabled emulation of a compatible NVMe in the loader
- deleted the dead volume2 in DSM
- configured the whole NVMe as a read cache for volume1
- uploaded the recovered application data: /volume1/@appconf/ /volume1/@appstore/ /volume1/@MailPlus-Server/ /volume1/@maillog/

Unfortunately, the permissions and owners of the folders and files were lost; I had to set them by guesswork and by peeking at test DSM installs. Most applications had to be reinstalled with their data preserved. After the applications and permissions were restored, mail still would not start: the connection to the postgres database for the transaction_log DB did not work. I solved this by backing up the installed mail application to a backup DSM, completely removing the application and its database, and restoring the application from the backup.

Somewhat paradoxically, I backed up a service that was already broken and restored a working one). I did not restore the transaction log database, although I did verify via pgadmin that it could be restored.
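The permission repair mentioned in this post (copying owners and modes by looking at a test DSM) could be partly automated. Below is a minimal Python sketch, not the author's method: it assumes a reference directory tree with correct metadata is reachable on the same machine, and that numeric UIDs/GIDs mean the same thing on both systems, which may not hold for package users on different DSM installs. The function names `capture_metadata` and `apply_metadata` are hypothetical.

```python
import os
import stat


def capture_metadata(reference_root):
    """Map each relative path under reference_root to (mode, uid, gid)."""
    manifest = {}
    for dirpath, dirnames, filenames in os.walk(reference_root):
        for name in dirnames + filenames:
            full = os.path.join(dirpath, name)
            st = os.lstat(full)
            if stat.S_ISLNK(st.st_mode):
                continue  # symlinks are skipped in this sketch
            rel = os.path.relpath(full, reference_root)
            manifest[rel] = (stat.S_IMODE(st.st_mode), st.st_uid, st.st_gid)
    return manifest


def apply_metadata(target_root, manifest, set_owner=True):
    """Apply recorded owner/mode to matching paths under target_root.

    Returns the sorted list of relative paths that exist in the manifest
    but not in the target tree, so they can be reviewed by hand.
    """
    missing = []
    for rel, (mode, uid, gid) in sorted(manifest.items()):
        full = os.path.join(target_root, rel)
        if not os.path.lexists(full):
            missing.append(rel)
            continue
        if os.path.islink(full):
            continue
        if set_owner:
            # Changing the owner normally requires root.
            os.chown(full, uid, gid)
        # chmod after chown, because chown may clear setuid/setgid bits.
        os.chmod(full, mode)
    return missing


# Example (hypothetical paths; run as root and review before trusting it):
# manifest = capture_metadata("/mnt/reference/@appstore")
# print(apply_metadata("/volume1/@appstore", manifest))
```

Paths present only in the restored tree are left untouched, so anything the reference system does not have still needs to be checked manually.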

-

Surveillance Station 8.2.7-6222 + TP-Link C200 camera

TRT_miha replied to TRT_miha's topic in Software

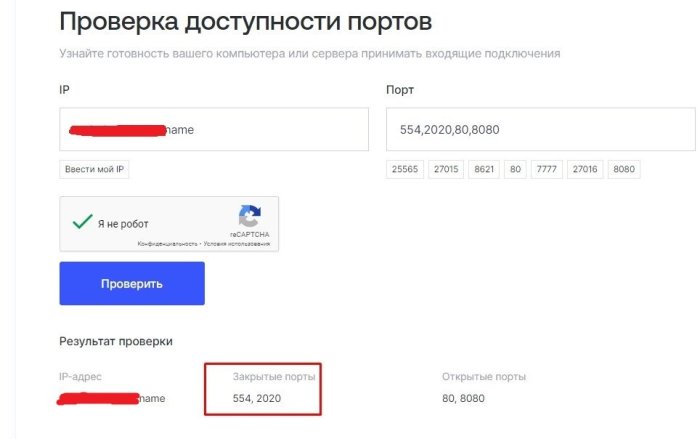

8080...? I think that is KeenDNS's doing. On my router it is closed.

Surveillance Station 8.2.7-6222 + TP-Link C200 camera

use-nas replied to TRT_miha's topic in Software

The router is to blame. And why is 8080 open?

Surveillance Station 8.2.7-6222 + TP-Link C200 camera

TRT_miha replied to TRT_miha's topic in Software

-

Surveillance Station 8.2.7-6222 + TP-Link C200 camera

TRT_miha replied to TRT_miha's topic in Software

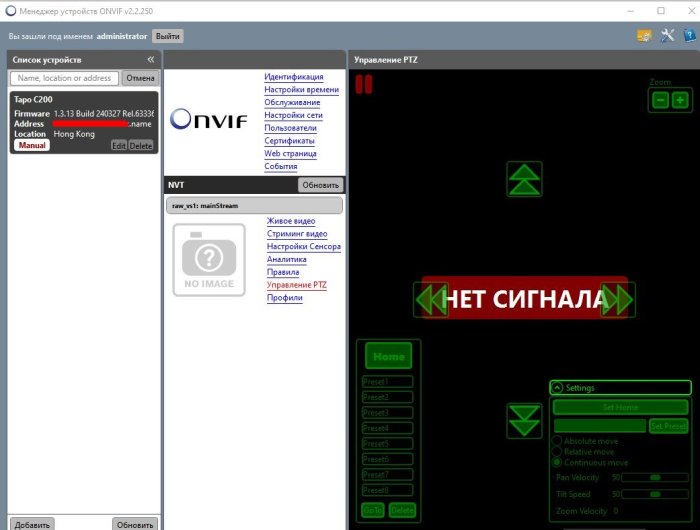

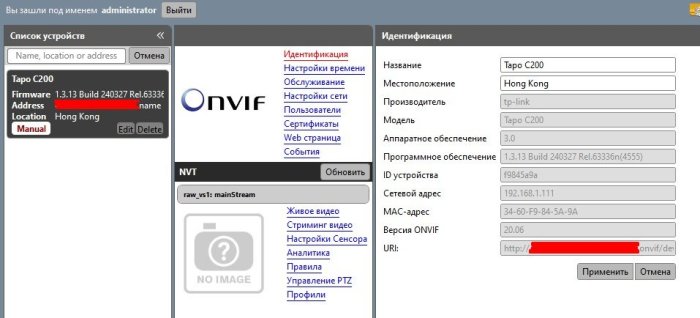

And the camera can even be controlled (mechanically) through the ONVIF manager interface... but there is no image. Ports 554 and 2020 are closed as well.
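To tell whether ports such as 554, 2020 and 8080 are reachable from a particular machine (for example from the DSM host rather than from outside), a plain TCP connect test is usually enough. The following is a small Python sketch; the function name `check_tcp_ports` and the camera address in the usage comment are hypothetical, and a failed connection only means "closed or filtered somewhere along the path", not which device is blocking it.

```python
import socket


def check_tcp_ports(host, ports, timeout=2.0):
    """Return {port: bool}; True means a TCP connection was established."""
    results = {}
    for port in ports:
        try:
            # create_connection raises OSError on refusal, timeout, or no route.
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            results[port] = False
    return results


# Example (hypothetical camera address on the local network):
# for port, is_open in check_tcp_ports("192.168.1.50", [554, 2020, 8080]).items():
#     print(port, "open" if is_open else "closed or filtered")
```

Running the same check from inside the LAN and from outside helps separate a problem on the camera itself from one on the router or at the ISP.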

josito joined the community

-

Earth34 joined the community

-

Surveillance Station 8.2.7-6222 + TP-Link C200 camera

TRT_miha replied to TRT_miha's topic in Software

The connection goes through KeenDNS... that's right. I could not ping the camera from DSM. The ISP replied to my request to open the ports; it seems they opened them. No results so far. I keep poking at it half-heartedly.

The voting is on the left side of the post.

-

It works; I think I wrote above how to activate it. Has anyone tried to patch version 9.2.0? Does it work?

-

Surveillance Station 8.2.7-6222 + TP-Link C200 camera

radial replied to TRT_miha's topic in Software

What is the connection layout? The cameras serve the stream over the internet via DDNS, right? Can the cameras be pinged from DSM?

Surveillance Station 8.2.7-6222 + TP-Link C200 camera

TRT_miha replied to TRT_miha's topic in Software

I changed the access settings for the router and the camera... After a lot of poking around I finally got the camera detected in ONVIF manager, but there is still no image. I contacted the ISP, thinking they might be blocking 554 and 2020 on their equipment. Waiting for a reply.

The issue there is not the CPU but the graphics card.

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 7.2.1 69057-Update 4
- DSM version AFTER update: DSM 7.2.1 69057-Update 5
- Loader version and model: ARC v24.4.19 / DS3622xs+
- Installation type: BAREMETAL – MSI H310M PRO - I5 9100F - 32GB DDR4
- Additional comments: Downloaded and Manual Install. No issues.

-

anneblls01 joined the community

-

Hmm too much hassle for my current setup and the little goal I wanted to reach, but thanks.

-

Polanskiman started following where to download DSM 6.1 PAT files

-

You probably have to make a direct request to Synology now. I would not trust any download links you find on third-party websites.

-

Migrating to 7.X.X with the Automated Redpill loader

use-nas replied to Olegin's topic in Software

An addon for fans and CPU temperature has appeared 👍 It does not work for everyone ...

That's very interesting. So changing the model is doable, but DSM has to be converted; you mean like from 6.2.3 to 7.X.X? This is because every DSM is a different OS depending on the hardware rather than one common OS for all, right? If so, it is a pity there is no project trying to unify all the DSM OSes into a single one compatible with all the hardware at the same time, so that it is just a matter of installing, with no loader needed anymore... Could that be somewhat doable?

- Yesterday

-

Hi, I just tried @Peter Suh's tinycore-redpill.v1.0.2.7.m-shell.img. It worked and was easy to install. Current firmware: 7.2.1-69057 Update 5. I am not looking for a fight, just stating my experience. I am a rookie with Xpenology and DSM, but I can say it worked, and worked very well for me. Also of note: on the DS1823xs+ install, Power Schedule is working for me, unlike on the DS2422+ with firmware 7.1.1-42962 Update 6. I don't know if that is down to his build, the firmware, or the model. His Redpill loader did all the work for me. All I had to do was hit any key. The only issue I had was that "any key to continue" does not include the space bar, so it should read "hit Enter to continue".

-

ajrkaffir joined the community

-

Migrating to 7.X.X with the Automated Redpill loader

The Chief replied to Olegin's topic in Software

He has set it up again and is continuing development.

It is much better to set up DS3622xs. Yes, you have to select a new model (in the loader setup), build a loader, boot into the freshly built loader, open the web interface and migrate to the new model's DSM, choosing to keep your settings.

-

It's about DSM being able to "convert" its settings from an older DS model or version to a more recent one without additional steps (as recommended by Synology). The loader is not much involved here, as long as it can make DSM run (the redpill kernel module is in all loaders we use for 6.2.4 and any 7.x) and as long as it has enough drivers to support the hardware in question ... (AHCI SATA is part of Synology's kernel, so any AHCI-compatible controller will work; in most cases it comes down to network driver support).

As far as I have seen, yes, but I use DVA1622 for now (as SA6400 is pretty new). SA6400 might have better Intel QSV support, as it is kernel 5.x based and its i915 driver supports newer hardware ootb than what comes with kernel 4.x. Try arc or arc-c for SA6400: https://github.com/AuxXxilium/AuxXxilium/wiki/Arc:-Choose-a-Model-|-Platform#epyc7002---dt

The only downside might be not being able to use VMM with an Intel CPU (I guess so; I have not tested it myself and might never do so, because if I really needed VMs to run I would choose a "real hypervisor" system like Proxmox; ESXi by now seems to be out of the race because of Broadcom ditching the free version).

Why are you choosing this model?

More free surveillance cams by default, native Intel QSV built in by Synology, as the original system uses Gemini Lake (with the right CPU that is supported ootb it is also easy to use Jellyfin or Plex), and "AI" stuff ootb in Surveillance Station. In the end I do not use any of that; still, these are options I "could" use (lucky me, I did not have to spend all the money Synology asks for a real 1622). But with the loaders you can switch models any time and DSM will be able to convert everything (usually), as it is all x64 based (with some specifics around SAS HBAs or CPU-dependent features like Intel QSV and AMD VMM support).

arpl has been on hold for at least a year (and might never come back). arc is well supported, has lots of features and is easy to use as it is menu based (and the wiki is helpful too - people should use it more often). It might get confusing with all the options, but there is the wiki, YouTube, this forum and Discord ...

Nothing different in the result: as long as the loader is configured the right way (model), you will not see much difference, as DSM is doing your jobs. Things like the handling of special problems might not differ between maintained loaders, as it is open source and new knowledge about how to fix quirks is shared and might find its way into your specific loader over time (but in most cases you find the loader that works for you now and look for new stuff 2 years later or even later).

-

I think it started with Haswell, but I no longer remember which generation is the earlier one )