trublu

Member

Posts: 89


Use nvme/m.2 hard drives as storage pools in Synology

trublu replied to yanjun's topic in Developer Discussion Room

fdisk -l /dev/nvme0n1 shows the following:
/dev/nvme0n1p1 256 4980735 4980480 2.4G fd Linux raid autodetect
/dev/nvme0n1p2 4980736 9175039 4194304 2G fd Linux raid autodetect
/dev/nvme0n1p3 9437184 2000397734 1990960551 949.4G fd Linux raid autodetect
I have just one 2TB drive, though. Starting to think I was shipped the wrong size.
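For anyone checking the same thing, here is a rough sketch that redoes the arithmetic on those fdisk numbers. It assumes 512-byte logical sectors (fdisk's usual unit; the "Units:" line of the full fdisk -l output confirms it). By this math the last partition ends a little past 1.02 TB, which would line up with only ~1TB being usable. That alone doesn't prove the drive is 1TB, since partitions don't have to fill the disk; the "Disk /dev/nvme0n1:" line of fdisk -l, or `blockdev --getsize64 /dev/nvme0n1`, shows the raw capacity.

```shell
#!/usr/bin/env bash
# Re-derive partition sizes and the end of the partition table from fdisk -l.
# Assumption: 512-byte logical sectors (check the "Units:" line of fdisk -l).
sector_bytes=512

# name start end sectors, copied from the fdisk -l /dev/nvme0n1 output above
partitions=(
  "nvme0n1p1 256 4980735 4980480"
  "nvme0n1p2 4980736 9175039 4194304"
  "nvme0n1p3 9437184 2000397734 1990960551"
)

last_end=0
for p in "${partitions[@]}"; do
  read -r name start end count <<< "$p"
  # fdisk's Sectors column is inclusive: end - start + 1
  if (( end - start + 1 != count )); then
    echo "sector count mismatch for $name" >&2
  fi
  # fdisk's "G" suffix means GiB (2^30 bytes)
  gib=$(awk -v c="$count" -v b="$sector_bytes" 'BEGIN { printf "%.1f", c * b / 2^30 }')
  echo "$name: ${gib} GiB"
  if (( end > last_end )); then
    last_end=$end
  fi
done

# Bytes from the start of the disk to the end of the last partition
covered_bytes=$(( (last_end + 1) * sector_bytes ))
echo "partition table ends at byte ${covered_bytes} (~$(( covered_bytes / 1000000000 )) GB)"
```

A 2TB drive would have roughly twice as many sectors, so if the "Disk" line also reports about 1 TB, the drive itself is the issue, not the partitioning.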

Use nvme/m.2 hard drives as storage pools in Synology

trublu replied to yanjun's topic in Developer Discussion Room

I tried the script, but the single 2TB drive I have in my build didn't show up. However, your "classic method" worked, but I only have 1TB available. Is that expected? Also, should the last line be "mkfs.btrfs -f /dev/md3"?


I had the same issue with the machine not showing up on the network. I had to use ARPL 1.03b.

-

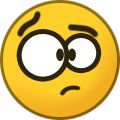

I'm having a similar issue: a bunch of my HDDs started showing only as "Detected". Try the steps in this link. I was able to get my drives back online (Healthy/Normal), but it looks like the storage pool has to be rebuilt with all drives before restarting, because after a reboot the drives go back to showing only as "Detected". Maybe you can try this, replace the problem drives with clones, and attempt another rebuild/repair. I just got a standalone duplicator to try the same thing. What loader are you using?

-

Hey, were you able to fix this issue?

-

trublu started following Missing storage pool and Drive warning

-

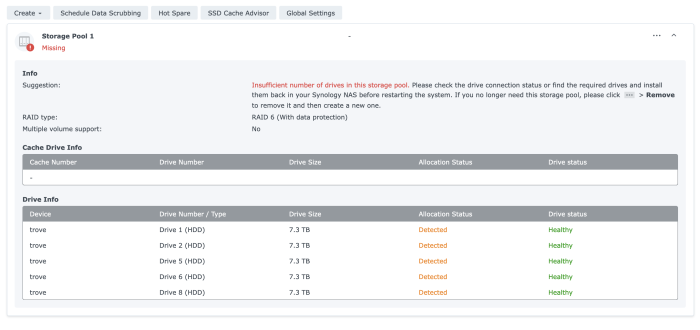

Has anyone had an issue with losing their storage pool or volume after Update 4? I posted about my issue here, but the questions subforum doesn't seem to get much traffic. As a last resort for rebuilding the volume, I'm considering moving my HDDs to my Synology DS1817+, but I'd love to get any pointers before trying that.

-

Had issues after upgrading to DSM 7.1.1-42962 Update 4. After switching to ARPL 1.03b, I was able to recover the system after a migration and log into a fresh DSM; however, the storage pool is missing. Looking for tips on how to rebuild the original storage pool. Thanks.

Hardware:
Motherboard (UEFI disabled): SUPERMICRO X10SDV-TLN4F D-1541 (2 x 10 GbE LAN & 2 x Intel i350-AM2 GbE LAN)
LSI HBA with 8 HDDs (onboard SATA controllers disabled)

root@trove:~# cat /proc/mdstat
Personalities : [raid1]
md1 : active raid1 sdd2[1]
2097088 blocks [12/1] [_U__________]
md0 : active raid1 sdd1[1]
2490176 blocks [12/1] [_U__________]
unused devices: <none>

root@trove:~# mdadm --detail /dev/md1
/dev/md1:
Version : 0.90
Creation Time : Tue Mar 7 18:17:00 2023
Raid Level : raid1
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 1
Preferred Minor : 1
Persistence : Superblock is persistent
Update Time : Wed Mar 8 11:46:28 2023
State : clean, degraded
Active Devices : 1
Working Devices : 1
Failed Devices : 0
Spare Devices : 0
UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7
Events : 0.38
Number Major Minor RaidDevice State
- 0 0 0 removed
1 8 50 1 active sync /dev/sdd2
- 0 0 2 removed
- 0 0 3 removed
- 0 0 4 removed
- 0 0 5 removed
- 0 0 6 removed
- 0 0 7 removed
- 0 0 8 removed
- 0 0 9 removed
- 0 0 10 removed
- 0 0 11 removed

root@trove:~# mdadm --detail /dev/md0
/dev/md0:
Version : 0.90
Creation Time : Tue Mar 7 18:16:56 2023
Raid Level : raid1
Array Size : 2490176 (2.37 GiB 2.55 GB)
Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
Raid Devices : 12
Total Devices : 1
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Wed Mar 8 12:38:17 2023
State : clean, degraded
Active Devices : 1
Working Devices : 1
Failed Devices : 0
Spare Devices : 0
UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7
Events : 0.2850
Number Major Minor RaidDevice State
- 0 0 0 removed
1 8 49 1 active sync /dev/sdd1
- 0 0 2 removed
- 0 0 3 removed
- 0 0 4 removed
- 0 0 5 removed
- 0 0 6 removed
- 0 0 7 removed
- 0 0 8 removed
- 0 0 9 removed
- 0 0 10 removed
- 0 0 11 removed

root@trove:~# mdadm --examine /dev/sd*

/dev/sda:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sda1:
Magic : a92b4efc
Version : 0.90.00
UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:16:56 2023
Raid Level : raid1
Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
Array Size : 2490176 (2.37 GiB 2.55 GB)
Raid Devices : 12
Total Devices : 1
Preferred Minor : 0
Update Time : Wed Mar 8 10:31:50 2023
State : clean
Active Devices : 1
Working Devices : 1
Failed Devices : 11
Spare Devices : 0
Checksum : b4fd4b61 - correct
Events : 1764
Number Major Minor RaidDevice State
this 0 8 1 0 active sync /dev/sda1
0 0 8 1 0 active sync /dev/sda1
1 1 0 0 1 faulty removed
2 2 0 0 2 faulty removed
3 3 0 0 3 faulty removed
4 4 0 0 4 faulty removed
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sda2:
Magic : a92b4efc
Version : 0.90.00
UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:17:00 2023
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 1
Preferred Minor : 1
Update Time : Wed Mar 8 10:31:34 2023
State : clean
Active Devices : 1
Working Devices : 1
Failed Devices : 11
Spare Devices : 0
Checksum : dfcee89f - correct
Events : 31
Number Major Minor RaidDevice State
this 0 8 2 0 active sync /dev/sda2
0 0 8 2 0 active sync /dev/sda2
1 1 0 0 1 faulty removed
2 2 0 0 2 faulty removed
3 3 0 0 3 faulty removed
4 4 0 0 4 faulty removed
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sda3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : clean
Device UUID : 3875a9e5:a7260006:808f7c11:9cedf8a0
Update Time : Tue Mar 7 18:23:17 2023
Checksum : 82ba8080 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 0
Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdb:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)
mdadm: No md superblock detected on /dev/sdb1.

/dev/sdb2:
Magic : a92b4efc
Version : 0.90.00
UUID : d552bb6c:670b1dca:05d949f7:b0bbaec7
Creation Time : Sat Jul 2 16:17:54 2022
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 3
Preferred Minor : 1
Update Time : Tue Mar 7 18:16:53 2023
State : clean
Active Devices : 3
Working Devices : 3
Failed Devices : 9
Spare Devices : 0
Checksum : 63069156 - correct
Events : 125
Number Major Minor RaidDevice State
this 1 8 18 1 active sync /dev/sdb2
0 0 8 2 0 active sync /dev/sda2
1 1 8 18 1 active sync /dev/sdb2
2 2 8 34 2 active sync
3 3 0 0 3 faulty removed
4 4 0 0 4 faulty removed
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sdb3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : clean
Device UUID : 178434ea:f014dcc9:f52e6b43:460d8647
Update Time : Tue Mar 7 18:23:17 2023
Checksum : 239d3cd0 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 3
Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdc:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)
mdadm: No md superblock detected on /dev/sdc1.

/dev/sdc3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : active
Device UUID : 86058974:c155190f:4805d800:856081a4
Update Time : Mon Mar 6 20:03:55 2023
Checksum : d9eeeb2 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 2
Array State : AAAAAAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdd:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdd1:
Magic : a92b4efc
Version : 0.90.00
UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:16:56 2023
Raid Level : raid1
Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
Array Size : 2490176 (2.37 GiB 2.55 GB)
Raid Devices : 12
Total Devices : 1
Preferred Minor : 0
Update Time : Wed Mar 8 12:22:28 2023
State : clean
Active Devices : 1
Working Devices : 1
Failed Devices : 10
Spare Devices : 0
Checksum : b4fd6ba7 - correct
Events : 2592
Number Major Minor RaidDevice State
this 1 8 49 1 active sync /dev/sdd1
0 0 0 0 0 removed
1 1 8 49 1 active sync /dev/sdd1
2 2 0 0 2 faulty removed
3 3 0 0 3 faulty removed
4 4 0 0 4 faulty removed
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sdd2:
Magic : a92b4efc
Version : 0.90.00
UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:17:00 2023
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 1
Preferred Minor : 1
Update Time : Wed Mar 8 11:46:28 2023
State : clean
Active Devices : 1
Working Devices : 1
Failed Devices : 10
Spare Devices : 0
Checksum : dfcefa9b - correct
Events : 38
Number Major Minor RaidDevice State
this 1 8 50 1 active sync /dev/sdd2
0 0 0 0 0 removed
1 1 8 50 1 active sync /dev/sdd2
2 2 0 0 2 faulty removed
3 3 0 0 3 faulty removed
4 4 0 0 4 faulty removed
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sde:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sde1:
Magic : a92b4efc
Version : 0.90.00
UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:16:56 2023
Raid Level : raid1
Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
Array Size : 2490176 (2.37 GiB 2.55 GB)
Raid Devices : 12
Total Devices : 4
Preferred Minor : 0
Update Time : Tue Mar 7 19:36:00 2023
State : clean
Active Devices : 4
Working Devices : 4
Failed Devices : 8
Spare Devices : 0
Checksum : b4fc79cf - correct
Events : 1720
Number Major Minor RaidDevice State
this 2 8 65 2 active sync /dev/sde1
0 0 8 1 0 active sync /dev/sda1
1 1 8 49 1 active sync /dev/sdd1
2 2 8 65 2 active sync /dev/sde1
3 3 0 0 3 faulty removed
4 4 8 113 4 active sync /dev/sdh1
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sde2:
Magic : a92b4efc
Version : 0.90.00
UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:17:00 2023
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 4
Preferred Minor : 1
Update Time : Tue Mar 7 19:31:47 2023
State : clean
Active Devices : 4
Working Devices : 4
Failed Devices : 8
Spare Devices : 0
Checksum : dfce16e9 - correct
Events : 22
Number Major Minor RaidDevice State
this 2 8 66 2 active sync /dev/sde2
0 0 8 2 0 active sync /dev/sda2
1 1 8 50 1 active sync /dev/sdd2
2 2 8 66 2 active sync /dev/sde2
3 3 0 0 3 faulty removed
4 4 8 114 4 active sync /dev/sdh2
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sde3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : clean
Device UUID : 1f5ac73f:a2d2d169:44fbeeaa:f0293f1d
Update Time : Tue Mar 7 18:23:17 2023
Checksum : 5662b981 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 7
Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdf:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdf1:
Magic : a92b4efc
Version : 0.90.00
UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:16:56 2023
Raid Level : raid1
Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
Array Size : 2490176 (2.37 GiB 2.55 GB)
Raid Devices : 12
Total Devices : 5
Preferred Minor : 0
Update Time : Tue Mar 7 19:31:40 2023
State : clean
Active Devices : 5
Working Devices : 5
Failed Devices : 7
Spare Devices : 0
Checksum : b4fc787f - correct
Events : 1629
Number Major Minor RaidDevice State
this 3 8 81 3 active sync /dev/sdf1
0 0 8 1 0 active sync /dev/sda1
1 1 8 49 1 active sync /dev/sdd1
2 2 8 65 2 active sync /dev/sde1
3 3 8 81 3 active sync /dev/sdf1
4 4 8 113 4 active sync /dev/sdh1
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sdf2:
Magic : a92b4efc
Version : 0.90.00
UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:17:00 2023
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 5
Preferred Minor : 1
Update Time : Tue Mar 7 19:05:08 2023
State : clean
Active Devices : 5
Working Devices : 5
Failed Devices : 7
Spare Devices : 0
Checksum : dfce110f - correct
Events : 19
Number Major Minor RaidDevice State
this 3 8 82 3 active sync /dev/sdf2
0 0 8 2 0 active sync /dev/sda2
1 1 8 50 1 active sync /dev/sdd2
2 2 8 66 2 active sync /dev/sde2
3 3 8 82 3 active sync /dev/sdf2
4 4 8 114 4 active sync /dev/sdh2
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sdf3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : clean
Device UUID : 5e941a18:8b4def78:efcc7281:413e6a0f
Update Time : Tue Mar 7 18:23:17 2023
Checksum : 68254a4 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 6
Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdg:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)
mdadm: No md superblock detected on /dev/sdg1.

/dev/sdg3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : active
Device UUID : e95d48ae:87297dfa:8571bbc2:89098f37
Update Time : Mon Mar 6 20:03:55 2023
Checksum : 87b33020 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 5
Array State : AAAAAAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdh:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdh1:
Magic : a92b4efc
Version : 0.90.00
UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:16:56 2023
Raid Level : raid1
Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
Array Size : 2490176 (2.37 GiB 2.55 GB)
Raid Devices : 12
Total Devices : 4
Preferred Minor : 0
Update Time : Tue Mar 7 19:36:05 2023
State : active
Active Devices : 4
Working Devices : 4
Failed Devices : 8
Spare Devices : 0
Checksum : b4fc7350 - correct
Events : 1721
Number Major Minor RaidDevice State
this 4 8 113 4 active sync /dev/sdh1
0 0 8 1 0 active sync /dev/sda1
1 1 8 49 1 active sync /dev/sdd1
2 2 8 65 2 active sync /dev/sde1
3 3 0 0 3 faulty removed
4 4 8 113 4 active sync /dev/sdh1
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sdh2:
Magic : a92b4efc
Version : 0.90.00
UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:17:00 2023
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 4
Preferred Minor : 1
Update Time : Tue Mar 7 19:31:47 2023
State : clean
Active Devices : 4
Working Devices : 4
Failed Devices : 8
Spare Devices : 0
Checksum : dfce171d - correct
Events : 22
Number Major Minor RaidDevice State
this 4 8 114 4 active sync /dev/sdh2
0 0 8 2 0 active sync /dev/sda2
1 1 8 50 1 active sync /dev/sdd2
2 2 8 66 2 active sync /dev/sde2
3 3 0 0 3 faulty removed
4 4 8 114 4 active sync /dev/sdh2
5 5 0 0 5 faulty removed
6 6 0 0 6 faulty removed
7 7 0 0 7 faulty removed
8 8 0 0 8 faulty removed
9 9 0 0 9 faulty removed
10 10 0 0 10 faulty removed
11 11 0 0 11 faulty removed

/dev/sdh3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : clean
Device UUID : 7a2f8c59:2331f484:50fbb33a:bfe5fa58
Update Time : Tue Mar 7 18:23:17 2023
Checksum : 56caa935 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 4
Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)
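One way to read that dump: the third partitions hold the original data array (Array UUID 469081f8:c1645cc8:e6a51b6e:988a538f, name "trove:2", RAID 6 across 8 devices). Seven of them (sda3, sdb3, sdc3, sde3, sdf3, sdg3, sdh3) still report the same Events count, 13093409, and no sdd3 superblock appears, so slot 1 has no candidate. sdc3 and sdg3 were last updated on Mar 6 and still show AAAAAAAA, while the other five (updated Mar 7) show A..AA.AA, meaning they recorded slots 1, 2 and 5 as missing. The sketch below just tallies those hand-transcribed values against RAID 6's two-missing-member tolerance; it reads nothing from the disks, the values should be double-checked against your own output, and it is not a recovery procedure.

```shell
#!/usr/bin/env bash
# Tally the RAID 6 (md2, "trove:2") members found in the mdadm --examine dump.
# Values transcribed by hand: device, "Device Role : Active device N", "Events".
members="sda3 0 13093409
sdb3 3 13093409
sdc3 2 13093409
sde3 7 13093409
sdf3 6 13093409
sdg3 5 13093409
sdh3 4 13093409"

raid_devices=8   # "Raid Devices : 8"
max_missing=2    # RAID 6 keeps working with up to two members missing

present=$(printf '%s\n' "$members" | wc -l)
present=$(( present ))                       # strip any padding from wc
distinct_events=$(printf '%s\n' "$members" | awk '{ print $3 }' | sort -u | wc -l)
distinct_events=$(( distinct_events ))

# Which slots (0..7) have no partition claiming them?
missing_roles=""
for (( role = 0; role < raid_devices; role++ )); do
  if ! printf '%s\n' "$members" | awk -v r="$role" '$2 == r { found = 1 } END { exit !found }'; then
    missing_roles="${missing_roles:+$missing_roles }$role"
  fi
done

echo "members present: ${present}/${raid_devices}; missing role(s): ${missing_roles:-none}"
echo "distinct event counts among members: ${distinct_events}"
if (( raid_devices - present <= max_missing && distinct_events == 1 )); then
  echo "superblocks agree: enough members for a degraded RAID 6"
else
  echo "members disagree or too many are missing"
fi
```

Matching event counts across seven of eight members is generally a good sign for the data array itself; how to bring it back is a separate question from this tally.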

-

Hoping to get some help with update issues. I was previously running DSM 7.1.1-42962 (DS3622xs+) using arpl-0.1-alpha2 and recently updated to DSM 7.1.1-42962 Update 4; however, I made the mistake of not swapping in the new USB stick I'd made with v1.1-beta2a. Initially, all went well, but after a recent reboot I couldn't boot back into DSM. I've tried multiple versions of ARPL, but each time I get the migration screen and reinstall DSM, I still end up without an Ethernet connection that holds. Has anyone else had a similar issue, or have tips to fix this?

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 7.1-42661 Update 3
- Loader version and model: Automated Redpill Loader
- Using custom extra.lzma: NO
- Installation type: BAREMETAL - Supermicro X10SDV-TLN4F, LSI SAS2008
- Additional comments: Upgraded via GUI

-

No drives detected

trublu replied to trublu's topic in General Installation Questions/Discussions (non-hardware specific)

Have you tried the Automated Redpill Loader (ARPL)? That was the only loader I could get working.

-

Use ifconfig, then look at eth0.

-

Boot back into the loader config and add your NICs (netif_num and mac1 to macX), either from the command-line menu or by editing the user config file manually. A rebuild shouldn't be required. For example:
cmdline:
  mac1: "AC1D5B19E0E3"
  mac2: "AC1D5B19E0E4"
  netif_num: "2"
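If you'd rather not copy MAC addresses by hand, a small helper can print candidate entries. This is a hypothetical sketch (the `to_cmdline_mac` name is made up). It assumes the loader wants each MAC as 12 uppercase hex digits without colons, as in the example above; it numbers NICs in alphabetical interface-name order, which may not match the order DSM assigns; and it counts only interfaces that have a `device` link under /sys/class/net, which is how the kernel marks physical NICs. Check the output against ifconfig before pasting it into the user config.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print macN / netif_num lines for the loader's cmdline
# from the NICs the running kernel sees. Needs bash 4+ for ${var^^}.

# "ac:1d:5b:19:e0:e3" -> "AC1D5B19E0E3"
to_cmdline_mac() {
  local mac=${1//:/}
  printf '%s\n' "${mac^^}"
}

n=0
for dir in /sys/class/net/*; do
  iface=${dir##*/}
  # Physical NICs have a "device" link; lo, bridges and veth pairs do not
  [ -e "$dir/device" ] || continue
  [ -r "$dir/address" ] || continue
  n=$((n + 1))
  # Trailing YAML comment records which interface each entry came from
  echo "mac${n}: \"$(to_cmdline_mac "$(cat "$dir/address")")\"  # ${iface}"
done
echo "netif_num: \"${n}\""
```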

-

Resolved by using the Automated Redpill Loader.